Compare commits

170 Commits

| SHA1 |
|---|
| 500e3157b9 |
| eba86b1d23 |
| b69a563fc2 |
| a900c36395 |
| 1d9b324d3e |
| 539e7b8efe |
| 50a477ee47 |
| 7000123a8b |
| d48a7d2398 |
| 389a00ce59 |
| 7a460de3c2 |
| 8ea1f4a751 |
| 1c69ccc6cd |
| 84b5bbd3b6 |
| 9ccd327298 |
| 11df36f3cf |
| f62dd0e3cc |
| ad18b6e15e |
| c00b80ca29 |
| 92ed4ba3f8 |
| 7de9775dd9 |
| 5ce9060e5c |
| f727d5cb5a |
| 4735fb1ebb |
| c7d05cc13d |
| 51c152ff4a |
| eeed2a840c |
| 4aaa111925 |
| e31248f018 |
| 8b4cf022f2 |
| 4e7455268a |
| 680f8ae814 |
| 90555a4cea |
| 56a62db591 |
| cf51997680 |
| f05cc18d61 |
| 5384c2e0f5 |
| 9bfbf80a0e |
| f874d7754f |
| a669f79480 |
| 1c3894743a |
| 75cdf17df4 |
| de7dd1e60a |
| 0ee574a718 |
| faac894706 |
| dac2fad48e |
| 77f624b01e |
| e24ffebfc8 |
| 70d07d1609 |
| bfb3303d87 |
| 660705a436 |
| 74a3f97671 |
| b3e35bb494 |
| 76adac7c72 |
| 5dc75ebb67 |
| d686ce12b6 |
| d3c40a423e |
| 2fb1e6dab8 |
| 10430b347f |
| e0e3f6ac3e |
| c694cbffdc |
| bdd0e5d771 |
| aa98e427f0 |
| daa6f4c94c |
| 4a76663fb2 |
| cebda5028a |
| 3fa377a580 |
| a11c1005a8 |
| 4a6aea9328 |
| 4ca041e93e |
| 52a866a405 |
| 8b6bd0e6ac |
| 780fc4639a |
| 3692fc9d83 |
| c2a0b1b4c6 |
| 21bbdb5419 |
| aa1c08962c |
| 8a5d0399dd |
| f2cd0b0c4a |
| c2b66bbe73 |
| 48b957f1d5 |
| 3683984c8d |
| a3431512d8 |
| d832b787e7 |
| 6f75b02723 |
| b8241710bd |
| d638404b6a |
| 9362ca3ed9 |
| d1a03c6d17 |
| c6c31702c2 |
| bd2d88c96e |
| 76b1857e4e |
| 095bd17d10 |
| 204bfac3fa |
| ac49b0ca93 |
| c5b04f6fef |
| 5c58fda46d |
| 062730c70c |
| cade1990ce |
| 59b6e61816 |
| daff7ff158 |
| 0862860961 |
| 1cb24045a0 |
| 622358b172 |
| 7998884a9d |
| 51ddecd101 |
| 7a35ab1d1e |
| 48564ba52a |
| 49efffd740 |
| d6ac224c8f |
| a772b8c3f2 |
| b580953dcd |
| d86653c763 |
| dded4fca76 |
| 36365ffa6b |
| 0f9aeeaa27 |
| d8ebcd0ef7 |
| 6e445487b1 |
| 6605e461c7 |
| 40ce4e2275 |
| 8fef9e363e |
| 4792c2770d |
| 87bb49da36 |
| 1c0071d9ce |
| efded35c2e |
| 1d74240b9a |
| 098184ff7b |
| 4083533916 |
| feb1acd43a |
| a9591db734 |
| 9ebf148cbe |
| a473e5e19a |
| 5d3034c231 |
| c3a895af64 |
| cea5aecbf2 |
| 0e61e70670 |
| 1e333c0939 |
| 917b6ec03c |
| fe67c52ead |
| 909c7bee3e |
| 27ca54d138 |
| 2147c3a646 |
| a99120116f |
| 802efeaff2 |
| 9ad3af1ef6 |
| 715727b811 |
| c6eaa7b836 |
| c2fceea2a5 |
| 190e11f7ea |
| ad7413a5ff |
| 903b9e627a |
| c5c1e96cf8 |
| 62fbb04c9d |
| 728dc62d0b |
| 2dfe1b1c6b |
| 35d4a1a6af |
| eb3fa5aa6b |
| 438384425a |
| 0b6f102436 |
| c9b7ec72d8 |
| 256c7f1789 |
| 4e5a323c62 |
| f4a3bbd237 |
| fe73f2d579 |
| f79fcc7073 |
| 4c4b3790c7 |
| bd60b464bb |
| 6bce852765 |
| 3b19a5a59d |
| f024583011 |

`.gitignore` (vendored), 13 lines changed

`@@ -5,13 +5,16 @@ __pycache__/`

| old | new |
|---|---|
| `MANIFEST.in` | `MANIFEST.in` |
| `MANIFEST` | `MANIFEST` |
| `copyparty.egg-info/` | `copyparty.egg-info/` |
| `buildenv/` | |
| `build/` | |
| `dist/` | |
| `sfx/` | |
| `py2/` | |
| `.venv/` | `.venv/` |
| | |
| | `/buildenv/` |
| | `/build/` |
| | `/dist/` |
| | `/py2/` |
| | `/sfx*` |
| | `/unt/` |
| | `/log/` |
| | |
| `# ide` | `# ide` |
| `*.sublime-workspace` | `*.sublime-workspace` |
| | |
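
This hunk replaces unanchored directory patterns such as `build/` with root-anchored ones such as `/build/`, and `sfx/` with `/sfx*`. A minimal sketch of what that changes, assuming a heavily simplified model of gitignore matching (one `.gitignore` at the repo root, no `!` negation, no `**`, and `*` allowed to cross `/`, unlike in git); `is_ignored` is a hypothetical helper, not how git implements matching:

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath


def is_ignored(pattern: str, path: str) -> bool:
    """Simplified check of one gitignore pattern against a repo-relative path.

    Assumptions: single root .gitignore, no '!' negation, no '**', '*' may
    cross '/' (git's does not), and a trailing '/' on `path` marks a directory.
    """
    dir_only = pattern.endswith("/")
    pat = pattern.rstrip("/")
    # a slash at the start or in the middle anchors the pattern to the repo root
    anchored = "/" in pat
    pat = pat.lstrip("/")

    parts = PurePosixPath(path).parts
    for i, name in enumerate(parts):
        # every component except the last is a directory by construction
        is_dir = i < len(parts) - 1 or path.endswith("/")
        if dir_only and not is_dir:
            continue
        # anchored patterns match the path from the root; others match any single component
        candidate = "/".join(parts[: i + 1]) if anchored else name
        if fnmatchcase(candidate, pat):
            return True
    return False


print(is_ignored("build/", "copyparty/build/x.py"))   # True: any build/ dir, at any depth
print(is_ignored("/build/", "copyparty/build/x.py"))  # False: only the top-level build/
print(is_ignored("/build/", "build/x.py"))            # True
print(is_ignored("/sfx*", "sfx.py"))                  # True: files starting with sfx too
```

Under this model, the anchored patterns stop matching same-named folders deeper in the tree, and `/sfx*` also covers top-level files, which the old `sfx/` did not.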

`.vscode/settings.json` (vendored), 25 lines changed

`@@ -23,7 +23,6 @@`

| old | new |
|---|---|
| `"terminal.ansiBrightWhite": "#ffffff",` | `"terminal.ansiBrightWhite": "#ffffff",` |
| `},` | `},` |
| `"python.testing.pytestEnabled": false,` | `"python.testing.pytestEnabled": false,` |
| `"python.testing.nosetestsEnabled": false,` | |
| `"python.testing.unittestEnabled": true,` | `"python.testing.unittestEnabled": true,` |
| `"python.testing.unittestArgs": [` | `"python.testing.unittestArgs": [` |
| `"-v",` | `"-v",` |

`@@ -35,18 +34,40 @@`

| old | new |
|---|---|
| `"python.linting.pylintEnabled": true,` | `"python.linting.pylintEnabled": true,` |
| `"python.linting.flake8Enabled": true,` | `"python.linting.flake8Enabled": true,` |
| `"python.linting.banditEnabled": true,` | `"python.linting.banditEnabled": true,` |
| | `"python.linting.mypyEnabled": true,` |
| | `"python.linting.mypyArgs": [` |
| | `"--ignore-missing-imports",` |
| | `"--follow-imports=silent",` |
| | `"--show-column-numbers",` |
| | `"--strict"` |
| | `],` |
| `"python.linting.flake8Args": [` | `"python.linting.flake8Args": [` |
| `"--max-line-length=120",` | `"--max-line-length=120",` |
| `"--ignore=E722,F405,E203,W503,W293,E402",` | `"--ignore=E722,F405,E203,W503,W293,E402,E501,E128",` |
| `],` | `],` |
| `"python.linting.banditArgs": [` | `"python.linting.banditArgs": [` |
| `"--ignore=B104"` | `"--ignore=B104"` |
| `],` | `],` |
| | `"python.linting.pylintArgs": [` |
| | `"--disable=missing-module-docstring",` |
| | `"--disable=missing-class-docstring",` |
| | `"--disable=missing-function-docstring",` |
| | `"--disable=wrong-import-position",` |
| | `"--disable=raise-missing-from",` |
| | `"--disable=bare-except",` |
| | `"--disable=invalid-name",` |
| | `"--disable=line-too-long",` |
| | `"--disable=consider-using-f-string"` |
| | `],` |
| | `// python3 -m isort --py=27 --profile=black copyparty/` |
| `"python.formatting.provider": "black",` | `"python.formatting.provider": "black",` |
| `"editor.formatOnSave": true,` | `"editor.formatOnSave": true,` |
| `"[html]": {` | `"[html]": {` |
| `"editor.formatOnSave": false,` | `"editor.formatOnSave": false,` |
| `},` | `},` |
| | `"[css]": {` |
| | `"editor.formatOnSave": false,` |
| | `},` |
| `"files.associations": {` | `"files.associations": {` |
| `"*.makefile": "makefile"` | `"*.makefile": "makefile"` |
| `},` | `},` |

`README.md`, 204 lines changed

`@@ -9,11 +9,12 @@`

| old | new |
|---|---|
| turn your phone or raspi into a portable file server with resumable uploads/downloads using *any* web browser | turn your phone or raspi into a portable file server with resumable uploads/downloads using *any* web browser |
| | |
| * server only needs `py2.7` or `py3.3+`, all dependencies optional | * server only needs `py2.7` or `py3.3+`, all dependencies optional |
| * browse/upload with IE4 / netscape4.0 on win3.11 (heh) | * browse/upload with [IE4](#browser-support) / netscape4.0 on win3.11 (heh) |
| * *resumable* uploads need `firefox 34+` / `chrome 41+` / `safari 7+` for full speed | * *resumable* uploads need `firefox 34+` / `chrome 41+` / `safari 7+` |
| * code standard: `black` | |
| | |
| 📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [unpost](#unpost) // [thumbnails](#thumbnails) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [md-viewer](#markdown-viewer) // [ie4](#browser-support) | try the **[read-only demo server](https://a.ocv.me/pub/demo/)** 👀 running from a basement in finland |
| | |
| | 📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [unpost](#unpost) // [thumbnails](#thumbnails) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [md-viewer](#markdown-viewer) |
| | |
| | |
| ## get the app | ## get the app |

@@ -43,7 +44,7 @@ turn your phone or raspi into a portable file server with resumable uploads/down

|

|||||||

* [tabs](#tabs) - the main tabs in the ui

|

* [tabs](#tabs) - the main tabs in the ui

|

||||||

* [hotkeys](#hotkeys) - the browser has the following hotkeys

|

* [hotkeys](#hotkeys) - the browser has the following hotkeys

|

||||||

* [navpane](#navpane) - switching between breadcrumbs or navpane

|

* [navpane](#navpane) - switching between breadcrumbs or navpane

|

||||||

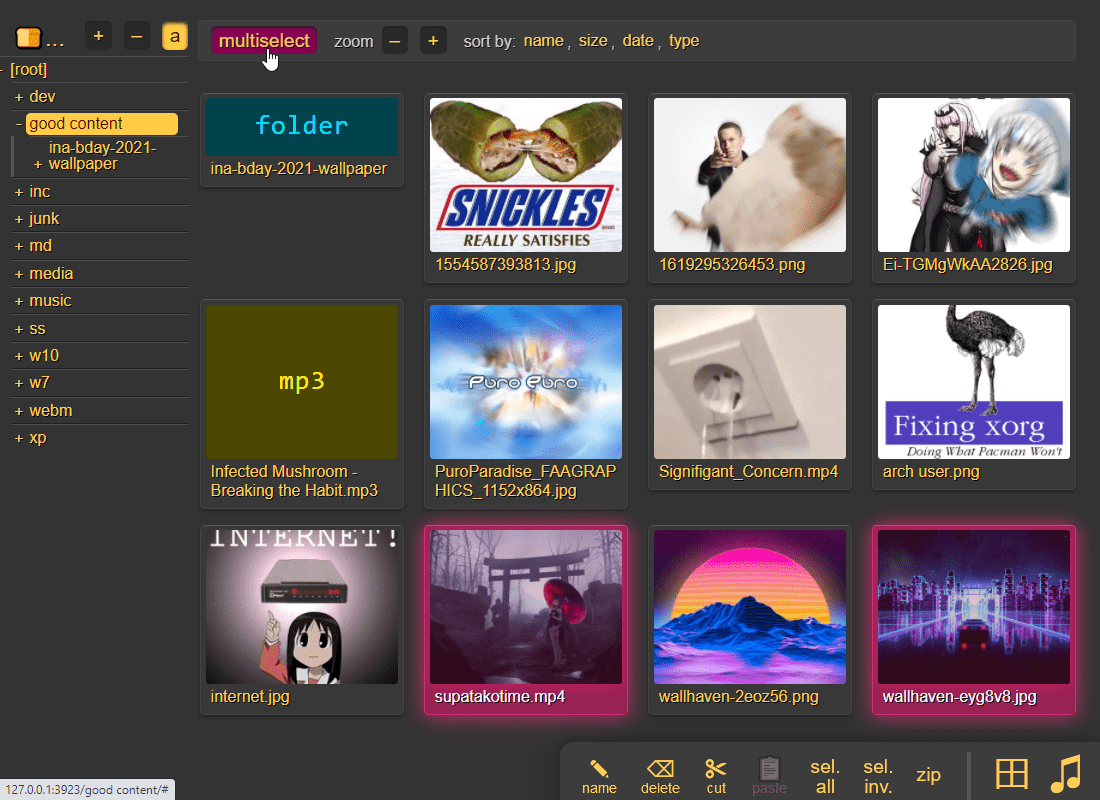

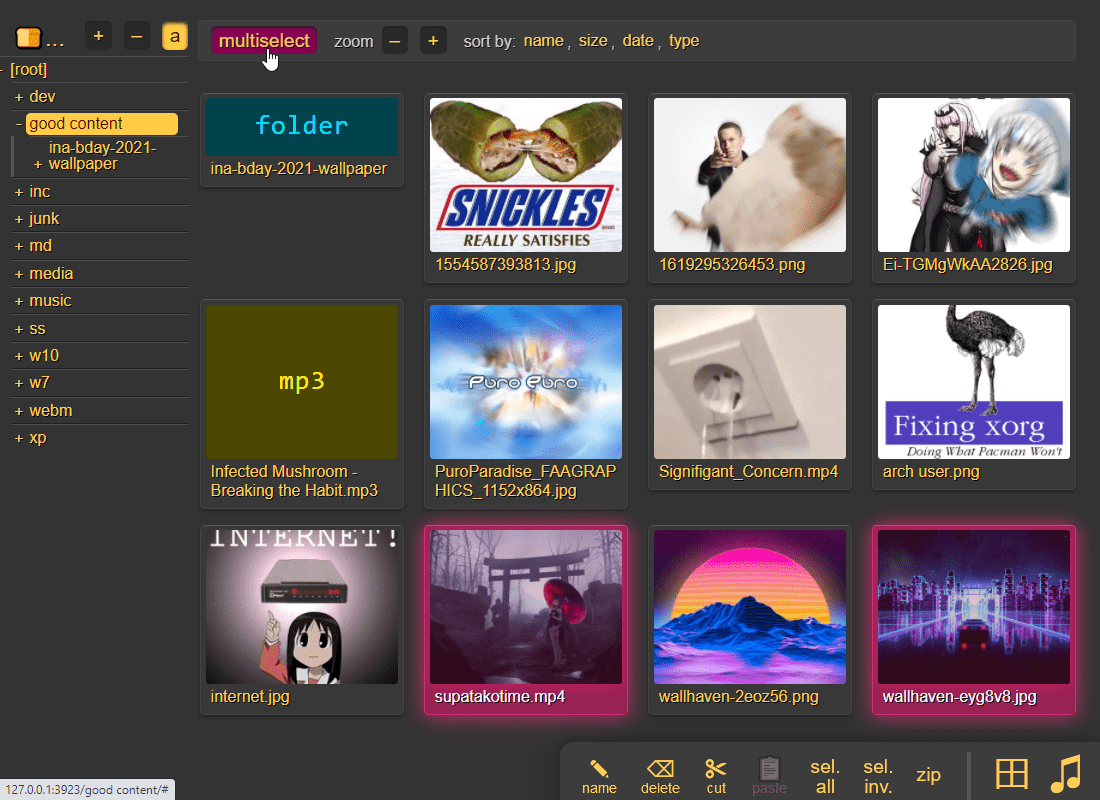

* [thumbnails](#thumbnails) - press `g` to toggle grid-view instead of the file listing

|

* [thumbnails](#thumbnails) - press `g` or `田` to toggle grid-view instead of the file listing

|

||||||

* [zip downloads](#zip-downloads) - download folders (or file selections) as `zip` or `tar` files

|

* [zip downloads](#zip-downloads) - download folders (or file selections) as `zip` or `tar` files

|

||||||

* [uploading](#uploading) - drag files/folders into the web-browser to upload

|

* [uploading](#uploading) - drag files/folders into the web-browser to upload

|

||||||

* [file-search](#file-search) - dropping files into the browser also lets you see if they exist on the server

|

* [file-search](#file-search) - dropping files into the browser also lets you see if they exist on the server

|

||||||

`@@ -55,8 +56,11 @@ turn your phone or raspi into a portable file server with resumable uploads/down`

| old | new |
|---|---|
| * [searching](#searching) - search by size, date, path/name, mp3-tags, ... | * [searching](#searching) - search by size, date, path/name, mp3-tags, ... |
| * [server config](#server-config) - using arguments or config files, or a mix of both | * [server config](#server-config) - using arguments or config files, or a mix of both |
| * [ftp-server](#ftp-server) - an FTP server can be started using `--ftp 3921` | * [ftp-server](#ftp-server) - an FTP server can be started using `--ftp 3921` |
| * [file indexing](#file-indexing) | * [file indexing](#file-indexing) - enables dedup and music search ++ |
| * [upload rules](#upload-rules) - set upload rules using volume flags | * [exclude-patterns](#exclude-patterns) - to save some time |
| | * [filesystem guards](#filesystem-guards) - avoid traversing into other filesystems |
| | * [periodic rescan](#periodic-rescan) - filesystem monitoring |
| | * [upload rules](#upload-rules) - set upload rules using volflags |
| * [compress uploads](#compress-uploads) - files can be autocompressed on upload | * [compress uploads](#compress-uploads) - files can be autocompressed on upload |
| * [database location](#database-location) - in-volume (`.hist/up2k.db`, default) or somewhere else | * [database location](#database-location) - in-volume (`.hist/up2k.db`, default) or somewhere else |
| * [metadata from audio files](#metadata-from-audio-files) - set `-e2t` to index tags on upload | * [metadata from audio files](#metadata-from-audio-files) - set `-e2t` to index tags on upload |

`@@ -101,7 +105,7 @@ turn your phone or raspi into a portable file server with resumable uploads/down`

| old | new |
|---|---|
| | |
| download **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** and you're all set! | download **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** and you're all set! |
| | |
| running the sfx without arguments (for example doubleclicking it on Windows) will give everyone read/write access to the current folder; see `-h` for help if you want [accounts and volumes](#accounts-and-volumes) etc | running the sfx without arguments (for example doubleclicking it on Windows) will give everyone read/write access to the current folder; you may want [accounts and volumes](#accounts-and-volumes) |
| | |
| some recommended options: | some recommended options: |
| * `-e2dsa` enables general [file indexing](#file-indexing) | * `-e2dsa` enables general [file indexing](#file-indexing) |

`@@ -109,7 +113,7 @@ some recommended options:`

| old | new |
|---|---|
| * `-v /mnt/music:/music:r:rw,foo -a foo:bar` shares `/mnt/music` as `/music`, `r`eadable by anyone, and read-write for user `foo`, password `bar` | * `-v /mnt/music:/music:r:rw,foo -a foo:bar` shares `/mnt/music` as `/music`, `r`eadable by anyone, and read-write for user `foo`, password `bar` |
| * replace `:r:rw,foo` with `:r,foo` to only make the folder readable by `foo` and nobody else | * replace `:r:rw,foo` with `:r,foo` to only make the folder readable by `foo` and nobody else |
| * see [accounts and volumes](#accounts-and-volumes) for the syntax and other permissions (`r`ead, `w`rite, `m`ove, `d`elete, `g`et) | * see [accounts and volumes](#accounts-and-volumes) for the syntax and other permissions (`r`ead, `w`rite, `m`ove, `d`elete, `g`et) |
| * `--ls '**,*,ln,p,r'` to crash on startup if any of the volumes contain a symlink which point outside the volume, as that could give users unintended access | * `--ls '**,*,ln,p,r'` to crash on startup if any of the volumes contain a symlink which point outside the volume, as that could give users unintended access (see `--help-ls`) |
| | |
| | |
| ### on servers | ### on servers |

`@@ -167,7 +171,7 @@ feature summary`

| old | new |
|---|---|
| * download | * download |
| * ☑ single files in browser | * ☑ single files in browser |
| * ☑ [folders as zip / tar files](#zip-downloads) | * ☑ [folders as zip / tar files](#zip-downloads) |
| * ☑ FUSE client (read-only) | * ☑ [FUSE client](https://github.com/9001/copyparty/tree/hovudstraum/bin#copyparty-fusepy) (read-only) |
| * browser | * browser |
| * ☑ [navpane](#navpane) (directory tree sidebar) | * ☑ [navpane](#navpane) (directory tree sidebar) |
| * ☑ file manager (cut/paste, delete, [batch-rename](#batch-rename)) | * ☑ file manager (cut/paste, delete, [batch-rename](#batch-rename)) |

`@@ -203,6 +207,7 @@ project goals / philosophy`

| old | new |
|---|---|
| * inverse linux philosophy -- do all the things, and do an *okay* job | * inverse linux philosophy -- do all the things, and do an *okay* job |
| * quick drop-in service to get a lot of features in a pinch | * quick drop-in service to get a lot of features in a pinch |
| * there are probably [better alternatives](https://github.com/awesome-selfhosted/awesome-selfhosted) if you have specific/long-term needs | * there are probably [better alternatives](https://github.com/awesome-selfhosted/awesome-selfhosted) if you have specific/long-term needs |
| | * but the resumable multithreaded uploads are p slick ngl |
| * run anywhere, support everything | * run anywhere, support everything |
| * as many web-browsers and python versions as possible | * as many web-browsers and python versions as possible |
| * every browser should at least be able to browse, download, upload files | * every browser should at least be able to browse, download, upload files |

`@@ -241,15 +246,21 @@ some improvement ideas`

| old | new |
|---|---|
| | |
| ## general bugs | ## general bugs |
| | |
| * Windows: if the up2k db is on a samba-share or network disk, you'll get unpredictable behavior if the share is disconnected for a bit | * Windows: if the `up2k.db` (filesystem index) is on a samba-share or network disk, you'll get unpredictable behavior if the share is disconnected for a bit |
| * use `--hist` or the `hist` volflag (`-v [...]:c,hist=/tmp/foo`) to place the db on a local disk instead | * use `--hist` or the `hist` volflag (`-v [...]:c,hist=/tmp/foo`) to place the db on a local disk instead |
| * all volumes must exist / be available on startup; up2k (mtp especially) gets funky otherwise | * all volumes must exist / be available on startup; up2k (mtp especially) gets funky otherwise |
| | * [the database can get stuck](https://github.com/9001/copyparty/issues/10) |
| | * has only happened once but that is once too many |
| | * luckily not dangerous for file integrity and doesn't really stop uploads or anything like that |
| | * but would really appreciate some logs if anyone ever runs into it again |
| * probably more, pls let me know | * probably more, pls let me know |
| | |
| ## not my bugs | ## not my bugs |
| | |
| * [Chrome issue 1317069](https://bugs.chromium.org/p/chromium/issues/detail?id=1317069) -- if you try to upload a folder which contains symlinks by dragging it into the browser, the symlinked files will not get uploaded | * [Chrome issue 1317069](https://bugs.chromium.org/p/chromium/issues/detail?id=1317069) -- if you try to upload a folder which contains symlinks by dragging it into the browser, the symlinked files will not get uploaded |
| | |
| | * [Chrome issue 1352210](https://bugs.chromium.org/p/chromium/issues/detail?id=1352210) -- plaintext http may be faster at filehashing than https (but also extremely CPU-intensive) |
| | |
| * iPhones: the volume control doesn't work because [apple doesn't want it to](https://developer.apple.com/library/archive/documentation/AudioVideo/Conceptual/Using_HTML5_Audio_Video/Device-SpecificConsiderations/Device-SpecificConsiderations.html#//apple_ref/doc/uid/TP40009523-CH5-SW11) | * iPhones: the volume control doesn't work because [apple doesn't want it to](https://developer.apple.com/library/archive/documentation/AudioVideo/Conceptual/Using_HTML5_Audio_Video/Device-SpecificConsiderations/Device-SpecificConsiderations.html#//apple_ref/doc/uid/TP40009523-CH5-SW11) |
| * *future workaround:* enable the equalizer, make it all-zero, and set a negative boost to reduce the volume | * *future workaround:* enable the equalizer, make it all-zero, and set a negative boost to reduce the volume |
| * "future" because `AudioContext` is broken in the current iOS version (15.1), maybe one day... | * "future" because `AudioContext` is broken in the current iOS version (15.1), maybe one day... |

`@@ -273,7 +284,7 @@ some improvement ideas`

| old | new |
|---|---|
| * you can also do this with linux filesystem permissions; `chmod 111 music` will make it possible to access files and folders inside the `music` folder but not list the immediate contents -- also works with other software, not just copyparty | * you can also do this with linux filesystem permissions; `chmod 111 music` will make it possible to access files and folders inside the `music` folder but not list the immediate contents -- also works with other software, not just copyparty |
| | |
| * can I make copyparty download a file to my server if I give it a URL? | * can I make copyparty download a file to my server if I give it a URL? |
| * not officially, but there is a [terrible hack](https://github.com/9001/copyparty/blob/hovudstraum/bin/mtag/wget.py) which makes it possible | * not really, but there is a [terrible hack](https://github.com/9001/copyparty/blob/hovudstraum/bin/mtag/wget.py) which makes it possible |
| | |
| | |
| # accounts and volumes | # accounts and volumes |

`@@ -281,6 +292,8 @@ some improvement ideas`

| old | new |
|---|---|
| per-folder, per-user permissions - if your setup is getting complex, consider making a [config file](./docs/example.conf) instead of using arguments | per-folder, per-user permissions - if your setup is getting complex, consider making a [config file](./docs/example.conf) instead of using arguments |
| * much easier to manage, and you can modify the config at runtime with `systemctl reload copyparty` or more conveniently using the `[reload cfg]` button in the control-panel (if logged in as admin) | * much easier to manage, and you can modify the config at runtime with `systemctl reload copyparty` or more conveniently using the `[reload cfg]` button in the control-panel (if logged in as admin) |
| | |
| | a quick summary can be seen using `--help-accounts` |
| | |
| configuring accounts/volumes with arguments: | configuring accounts/volumes with arguments: |
| * `-a usr:pwd` adds account `usr` with password `pwd` | * `-a usr:pwd` adds account `usr` with password `pwd` |
| * `-v .::r` adds current-folder `.` as the webroot, `r`eadable by anyone | * `-v .::r` adds current-folder `.` as the webroot, `r`eadable by anyone |

`@@ -305,7 +318,7 @@ examples:`

| old | new |
|---|---|
| * `u1` can open the `inc` folder, but cannot see the contents, only upload new files to it | * `u1` can open the `inc` folder, but cannot see the contents, only upload new files to it |
| * `u2` can browse it and move files *from* `/inc` into any folder where `u2` has write-access | * `u2` can browse it and move files *from* `/inc` into any folder where `u2` has write-access |
| * make folder `/mnt/ss` available at `/i`, read-write for u1, get-only for everyone else, and enable accesskeys: `-v /mnt/ss:i:rw,u1:g:c,fk=4` | * make folder `/mnt/ss` available at `/i`, read-write for u1, get-only for everyone else, and enable accesskeys: `-v /mnt/ss:i:rw,u1:g:c,fk=4` |
| * `c,fk=4` sets the `fk` volume-flag to 4, meaning each file gets a 4-character accesskey | * `c,fk=4` sets the `fk` volflag to 4, meaning each file gets a 4-character accesskey |
| * `u1` can upload files, browse the folder, and see the generated accesskeys | * `u1` can upload files, browse the folder, and see the generated accesskeys |
| * other users cannot browse the folder, but can access the files if they have the full file URL with the accesskey | * other users cannot browse the folder, but can access the files if they have the full file URL with the accesskey |
| | |
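
The `-v` examples in this diff (`/mnt/music:/music:r:rw,foo`, `.::r`, `/mnt/ss:i:rw,u1:g:c,fk=4`) read as colon-separated fields: source path, URL path, then permission groups, where a group with no usernames applies to everyone and a group starting with `c` sets volflags. A hypothetical parser that splits a spec the way these examples read; `parse_volume` and `Volume` are illustrative names, not copyparty's own code, and the sketch assumes POSIX paths without `:` in them (Windows drive letters would break it):

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Volume:
    src: str  # filesystem path to share
    dst: str  # URL path ("" means the webroot)
    perms: List[Tuple[str, List[str]]] = field(default_factory=list)
    flags: Dict[str, str] = field(default_factory=dict)


def parse_volume(spec: str) -> Volume:
    """Hypothetical reading of `src:dst:perm[,user...]:c,flag=value` (illustration only)."""
    src, dst, *groups = spec.split(":")
    vol = Volume(src=src, dst=dst.strip("/"))
    for group in groups:
        head, *rest = group.split(",")
        if head == "c":
            # "c,fk=4" sets volflag fk to "4"
            for flag in rest:
                key, _, value = flag.partition("=")
                vol.flags[key] = value
        else:
            # "rw,u1" grants rw to u1; a bare "r" grants r to everyone ("*")
            vol.perms.append((head, rest or ["*"]))
    return vol


print(parse_volume("/mnt/ss:i:rw,u1:g:c,fk=4"))
# Volume(src='/mnt/ss', dst='i', perms=[('rw', ['u1']), ('g', ['*'])], flags={'fk': '4'})
```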

`@@ -337,7 +350,7 @@ the browser has the following hotkeys (always qwerty)`

| old | new |
|---|---|
| * `I/K` prev/next folder | * `I/K` prev/next folder |
| * `M` parent folder (or unexpand current) | * `M` parent folder (or unexpand current) |
| * `V` toggle folders / textfiles in the navpane | * `V` toggle folders / textfiles in the navpane |
| * `G` toggle list / [grid view](#thumbnails) | * `G` toggle list / [grid view](#thumbnails) -- same as `田` bottom-right |
| * `T` toggle thumbnails / icons | * `T` toggle thumbnails / icons |
| * `ESC` close various things | * `ESC` close various things |
| * `ctrl-X` cut selected files/folders | * `ctrl-X` cut selected files/folders |

`@@ -358,19 +371,24 @@ the browser has the following hotkeys (always qwerty)`

| old | new |
|---|---|
| * `U/O` skip 10sec back/forward | * `U/O` skip 10sec back/forward |
| * `0..9` jump to 0%..90% | * `0..9` jump to 0%..90% |
| * `P` play/pause (also starts playing the folder) | * `P` play/pause (also starts playing the folder) |
| | * `Y` download file |
| * when viewing images / playing videos: | * when viewing images / playing videos: |
| * `J/L, Left/Right` prev/next file | * `J/L, Left/Right` prev/next file |
| * `Home/End` first/last file | * `Home/End` first/last file |
| | * `F` toggle fullscreen |
| * `S` toggle selection | * `S` toggle selection |
| * `R` rotate clockwise (shift=ccw) | * `R` rotate clockwise (shift=ccw) |
| | * `Y` download file |
| * `Esc` close viewer | * `Esc` close viewer |
| * videos: | * videos: |
| * `U/O` skip 10sec back/forward | * `U/O` skip 10sec back/forward |
| | * `0..9` jump to 0%..90% |
| * `P/K/Space` play/pause | * `P/K/Space` play/pause |
| * `F` fullscreen | |
| * `C` continue playing next video | |
| * `V` loop | |
| * `M` mute | * `M` mute |
| | * `C` continue playing next video |
| | * `V` loop entire file |
| | * `[` loop range (start) |
| | * `]` loop range (end) |
| * when the navpane is open: | * when the navpane is open: |
| * `A/D` adjust tree width | * `A/D` adjust tree width |
| * in the [grid view](#thumbnails): | * in the [grid view](#thumbnails): |

`` @@ -402,7 +420,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path ( ``

| old | new |
|---|---|
| | |
| ## thumbnails | ## thumbnails |
| | |
| press `g` to toggle grid-view instead of the file listing, and `t` toggles icons / thumbnails | press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails |
| | |
| | |
| | |

`@@ -444,13 +462,13 @@ you can also zip a selection of files or folders by clicking them in the browser`

| old | new |
|---|---|
| | |
| ## uploading | ## uploading |
| | |
| drag files/folders into the web-browser to upload | drag files/folders into the web-browser to upload (or use the [command-line uploader](https://github.com/9001/copyparty/tree/hovudstraum/bin#up2kpy)) |
| | |
| this initiates an upload using `up2k`; there are two uploaders available: | this initiates an upload using `up2k`; there are two uploaders available: |
| * `[🎈] bup`, the basic uploader, supports almost every browser since netscape 4.0 | * `[🎈] bup`, the basic uploader, supports almost every browser since netscape 4.0 |
| * `[🚀] up2k`, the fancy one | * `[🚀] up2k`, the good / fancy one |
| | |
| you can also undo/delete uploads by using `[🧯]` [unpost](#unpost) | NB: you can undo/delete your own uploads with `[🧯]` [unpost](#unpost) |
| | |
| up2k has several advantages: | up2k has several advantages: |
| * you can drop folders into the browser (files are added recursively) | * you can drop folders into the browser (files are added recursively) |

`@@ -462,7 +480,7 @@ up2k has several advantages:`

| old | new |
|---|---|
| * much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections | * much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections |
| * the last-modified timestamp of the file is preserved | * the last-modified timestamp of the file is preserved |
| | |
| see [up2k](#up2k) for details on how it works | see [up2k](#up2k) for details on how it works, or watch a [demo video](https://a.ocv.me/pub/demo/pics-vids/#gf-0f6f5c0d) |
| | |
| | |
| | |

`@@ -473,8 +491,8 @@ see [up2k](#up2k) for details on how it works`

| old | new |
|---|---|
| the up2k UI is the epitome of polished inutitive experiences: | the up2k UI is the epitome of polished inutitive experiences: |
| * "parallel uploads" specifies how many chunks to upload at the same time | * "parallel uploads" specifies how many chunks to upload at the same time |
| * `[🏃]` analysis of other files should continue while one is uploading | * `[🏃]` analysis of other files should continue while one is uploading |
| | * `[🥔]` shows a simpler UI for faster uploads from slow devices |
| * `[💭]` ask for confirmation before files are added to the queue | * `[💭]` ask for confirmation before files are added to the queue |
| * `[💤]` sync uploading between other copyparty browser-tabs so only one is active | |
| * `[🔎]` switch between upload and [file-search](#file-search) mode | * `[🔎]` switch between upload and [file-search](#file-search) mode |
| * ignore `[🔎]` if you add files by dragging them into the browser | * ignore `[🔎]` if you add files by dragging them into the browser |
| | |

`@@ -486,7 +504,7 @@ and then theres the tabs below it,`

| old | new |
|---|---|
| * plus up to 3 entries each from `[done]` and `[que]` for context | * plus up to 3 entries each from `[done]` and `[que]` for context |
| * `[que]` is all the files that are still queued | * `[que]` is all the files that are still queued |
| | |
| note that since up2k has to read each file twice, `[🎈 bup]` can *theoretically* be up to 2x faster in some extreme cases (files bigger than your ram, combined with an internet connection faster than the read-speed of your HDD, or if you're uploading from a cuo2duo) | note that since up2k has to read each file twice, `[🎈] bup` can *theoretically* be up to 2x faster in some extreme cases (files bigger than your ram, combined with an internet connection faster than the read-speed of your HDD, or if you're uploading from a cuo2duo) |
| | |
| if you are resuming a massive upload and want to skip hashing the files which already finished, you can enable `turbo` in the `[⚙️] config` tab, but please read the tooltip on that button | if you are resuming a massive upload and want to skip hashing the files which already finished, you can enable `turbo` in the `[⚙️] config` tab, but please read the tooltip on that button |
| | |
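
The up2k notes in these hunks say the uploader reads each file twice and that "parallel uploads" sets how many chunks are sent at the same time. A minimal sketch of that two-pass shape, assuming fixed 1 MiB chunks and SHA-512 chunk hashes (up2k's real chunk sizing, hash encoding and protocol are not described here), with a hypothetical `send_chunk` callback standing in for the network:

```python
import hashlib
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

CHUNK_SIZE = 1024 * 1024  # assumption: fixed 1 MiB chunks, not up2k's real sizing


def hash_chunks(path: str) -> List[str]:
    """Pass 1: read the whole file once and hash each chunk."""
    hashes = []
    with open(path, "rb") as f:
        for buf in iter(lambda: f.read(CHUNK_SIZE), b""):
            hashes.append(hashlib.sha512(buf).hexdigest())
    return hashes


def upload(path: str, parallel: int, send_chunk: Callable[[str, bytes], None]) -> None:
    """Pass 2: read the file again, with up to `parallel` chunks in flight."""
    hashes = hash_chunks(path)

    def job(index: int) -> None:
        # each worker opens its own handle so concurrent seeks don't interfere
        with open(path, "rb") as f:
            f.seek(index * CHUNK_SIZE)
            send_chunk(hashes[index], f.read(CHUNK_SIZE))

    with ThreadPoolExecutor(max_workers=parallel) as pool:
        # list() waits for every chunk and re-raises any exception from a worker
        list(pool.map(job, range(len(hashes))))


# usage: a dict stands in for the server, keyed by chunk hash
received: Dict[str, bytes] = {}
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(os.urandom(3 * CHUNK_SIZE + 123))
upload(tmp.name, parallel=3, send_chunk=received.__setitem__)
print(len(received))  # 4: three full chunks plus one partial chunk
os.remove(tmp.name)
```

Hashing everything before sending is what makes the file get read twice, which is why the basic uploader can come out ahead when disk reads, not the network, are the bottleneck.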

`@@ -597,7 +615,7 @@ and there are *two* editors`

| old | new |
|---|---|
| | |
| * get a plaintext file listing by adding `?ls=t` to a URL, or a compact colored one with `?ls=v` (for unix terminals) | * get a plaintext file listing by adding `?ls=t` to a URL, or a compact colored one with `?ls=v` (for unix terminals) |
| | |
| * if you are using media hotkeys to switch songs and are getting tired of seeing the OSD popup which Windows doesn't let you disable, consider https://ocv.me/dev/?media-osd-bgone.ps1 | * if you are using media hotkeys to switch songs and are getting tired of seeing the OSD popup which Windows doesn't let you disable, consider [./contrib/media-osd-bgone.ps1](contrib/#media-osd-bgoneps1) |
| | |
| * click the bottom-left `π` to open a javascript prompt for debugging | * click the bottom-left `π` to open a javascript prompt for debugging |
| | |
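
Since `?ls=t` and `?ls=v` are plain query parameters, a script can derive the listing URL from any folder URL. A small standard-library sketch; `listing_url` is a hypothetical helper and the server address below is made up:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def listing_url(folder_url: str, colored: bool = False) -> str:
    """Return folder_url with ls=t (plaintext) or ls=v (colored), keeping other params."""
    parts = urlsplit(folder_url)
    # drop any existing ls=..., keep everything else in order
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != "ls"]
    query.append(("ls", "v" if colored else "t"))
    return urlunsplit(parts._replace(query=urlencode(query)))


print(listing_url("http://192.168.1.10:3923/music/"))  # http://192.168.1.10:3923/music/?ls=t
```

Passing the result to `urllib.request.urlopen(...)` would fetch whatever listing text the server returns for that folder.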

`@@ -620,7 +638,9 @@ path/name queries are space-separated, AND'ed together, and words are negated wi`

| old | new |
|---|---|
| * path: `shibayan -bossa` finds all files where one of the folders contain `shibayan` but filters out any results where `bossa` exists somewhere in the path | * path: `shibayan -bossa` finds all files where one of the folders contain `shibayan` but filters out any results where `bossa` exists somewhere in the path |
| * name: `demetori styx` gives you [good stuff](https://www.youtube.com/watch?v=zGh0g14ZJ8I&list=PL3A147BD151EE5218&index=9) | * name: `demetori styx` gives you [good stuff](https://www.youtube.com/watch?v=zGh0g14ZJ8I&list=PL3A147BD151EE5218&index=9) |
| | |
| add the argument `-e2ts` to also scan/index tags from music files, which brings us over to: | the `raw` field allows for more complex stuff such as `( tags like *nhato* or tags like *taishi* ) and ( not tags like *nhato* or not tags like *taishi* )` which finds all songs by either nhato or taishi, excluding collabs (terrible example, why would you do that) |
| | |
| | for the above example to work, add the commandline argument `-e2ts` to also scan/index tags from music files, which brings us over to: |
| | |
| | |
| # server config | # server config |

@@ -645,7 +665,9 @@ an FTP server can be started using `--ftp 3921`, and/or `--ftps` for explicit T

|

|||||||

|

|

||||||

## file indexing

|

## file indexing

|

||||||

|

|

||||||

file indexing relies on two database tables, the up2k filetree (`-e2d`) and the metadata tags (`-e2t`), stored in `.hist/up2k.db`. Configuration can be done through arguments, volume flags, or a mix of both.

|

enables dedup and music search ++

|

||||||

|

|

||||||

|

file indexing relies on two database tables, the up2k filetree (`-e2d`) and the metadata tags (`-e2t`), stored in `.hist/up2k.db`. Configuration can be done through arguments, volflags, or a mix of both.

|

||||||

|

|

||||||

through arguments:

|

through arguments:

|

||||||

* `-e2d` enables file indexing on upload

|

* `-e2d` enables file indexing on upload

|

||||||

@@ -654,8 +676,11 @@ through arguments:

|

|||||||

* `-e2t` enables metadata indexing on upload

|

* `-e2t` enables metadata indexing on upload

|

||||||

* `-e2ts` also scans for tags in all files that don't have tags yet

|

* `-e2ts` also scans for tags in all files that don't have tags yet

|

||||||

* `-e2tsr` also deletes all existing tags, doing a full reindex

|

* `-e2tsr` also deletes all existing tags, doing a full reindex

|

||||||

|

* `-e2v` verifies file integrity at startup, comparing hashes from the db

|

||||||

|

* `-e2vu` patches the database with the new hashes from the filesystem

|

||||||

|

* `-e2vp` panics and kills copyparty instead

|

||||||
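this hypothetical python sketch shows roughly what the three `-e2v*` modes boil down to. the `{path: sha512-hex}` dict stands in for `up2k.db`, and a whole-file sha512 stands in for copyparty's own hashing. neither matches the real schema:

```python
import hashlib
from typing import Callable, Dict


def verify_hashes(
    db: Dict[str, str], read: Callable[[str], bytes], mode: str = "report"
) -> Dict[str, str]:
    """compare stored hashes with fresh ones computed from the files
    mode "report" ~ -e2v  : only report problems
    mode "update" ~ -e2vu : write the fresh hash back into `db`
    mode "panic"  ~ -e2vp : raise on the first mismatch
    returns {path: "changed" | "missing"} for every problem found"""
    problems: Dict[str, str] = {}
    for path, stored in sorted(db.items()):  # sorted() copies, so `db` may be modified
        try:
            fresh = hashlib.sha512(read(path)).hexdigest()
        except FileNotFoundError:
            problems[path] = "missing"
            continue
        if fresh == stored:
            continue
        if mode == "panic":
            raise RuntimeError(f"hash mismatch: {path}")
        if mode == "update":
            db[path] = fresh
        problems[path] = "changed"
    return problems
```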

|

|

||||||

the same arguments can be set as volume flags, in addition to `d2d`, `d2ds`, `d2t`, `d2ts` for disabling:

|

the same arguments can be set as volflags, in addition to `d2d`, `d2ds`, `d2t`, `d2ts`, `d2v` for disabling:

|

||||||

* `-v ~/music::r:c,e2dsa,e2tsr` does a full reindex of everything on startup

|

* `-v ~/music::r:c,e2dsa,e2tsr` does a full reindex of everything on startup

|

||||||

* `-v ~/music::r:c,d2d` disables **all** indexing, even if any `-e2*` are on

|

* `-v ~/music::r:c,d2d` disables **all** indexing, even if any `-e2*` are on

|

||||||

* `-v ~/music::r:c,d2t` disables all `-e2t*` (tags), does not affect `-e2d*`

|

* `-v ~/music::r:c,d2t` disables all `-e2t*` (tags), does not affect `-e2d*`

|

||||||
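this hypothetical python sketch splits a `-v` argument into source, mount point, permissions and volflags, based only on the examples in this readme. it assumes there is no `:` inside paths or flag values, so windows drive letters are not handled. it treats `c,name=value` as one flag whose value may contain commas, and any comma-separated words before it as plain on/off flags:

```python
from typing import List, Tuple, Union

Flag = Tuple[str, Union[bool, str]]


def parse_volume(spec: str) -> Tuple[str, str, List[str], List[Flag]]:
    """split `SRC:DST:PERM[:PERM...][:c,FLAGS...]` into its parts"""
    src, dst, *rest = spec.split(":")
    perms: List[str] = []
    flags: List[Flag] = []
    for part in rest:
        if not part.startswith("c,"):
            perms.append(part)  # e.g. "r", "w", "rw,kevin"
            continue
        body = part[2:]
        value: Union[bool, str] = True
        if "=" in body:
            # only the first "=" separates the name from the value;
            # the value itself may contain "=" and ","
            body, value = body.split("=", 1)
        *bools, name = body.split(",")
        flags.extend((b, True) for b in bools)  # plain on/off flags
        flags.append((name, value))
    return src, dst, perms, flags
```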

@@ -667,7 +692,9 @@ note:

|

|||||||

* `e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and `e2ts` would then reindex those, unless there is a new copyparty version with new parsers and the release note says otherwise

|

* `e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and `e2ts` would then reindex those, unless there is a new copyparty version with new parsers and the release note says otherwise

|

||||||

* the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher

|

* the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher

|

||||||

|

|

||||||

to save some time, you can provide a regex pattern for filepaths to only index by filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash \.iso$` or the volume-flag `:c,nohash=\.iso$`, this has the following consequences:

|

### exclude-patterns

|

||||||

|

|

||||||

|

to save some time, you can provide a regex pattern for filepaths to only index by filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash \.iso$` or the volflag `:c,nohash=\.iso$`, this has the following consequences:

|

||||||

* initial indexing is way faster, especially when the volume is on a network disk

|

* initial indexing is way faster, especially when the volume is on a network disk

|

||||||

* makes it impossible to [file-search](#file-search)

|

* makes it impossible to [file-search](#file-search)

|

||||||

* if someone uploads the same file contents, the upload will not be detected as a dupe, so it will not get symlinked or rejected

|

* if someone uploads the same file contents, the upload will not be detected as a dupe, so it will not get symlinked or rejected

|

||||||

@@ -676,12 +703,29 @@ similarly, you can fully ignore files/folders using `--no-idx [...]` and `:c,noi

|

|||||||

|

|

||||||

if you set `--no-hash [...]` globally, you can enable hashing for specific volumes using flag `:c,nohash=`

|

if you set `--no-hash [...]` globally, you can enable hashing for specific volumes using flag `:c,nohash=`

|

||||||
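this sketch shows the decision `--no-hash` / `nohash=` makes for each file. it assumes the pattern is applied with python-style `re.search` against the file path, and that an empty pattern (as in `:c,nohash=`) means "hash everything". `needs_hash` is a hypothetical name:

```python
import re


def needs_hash(path: str, no_hash: str) -> bool:
    """False if `path` matches the no-hash regex, in which case the file would
    only be indexed by filename/path/size/last-modified"""
    if not no_hash:
        return True  # empty pattern: hashing stays enabled
    return re.search(no_hash, path) is None
```

this example is case-sensitive, so `DEBIAN.ISO` would still be hashed. in python's regex dialect, prefixing the pattern with `(?i)` changes that, but the readme does not say whether copyparty compiles patterns the same way.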

|

|

||||||

|

### filesystem guards

|

||||||

|

|

||||||

|

avoid traversing into other filesystems using `--xdev` / volflag `:c,xdev`, skipping any symlinks or bind-mounts to another HDD for example

|

||||||

|

|

||||||

|

and/or you can `--xvol` / `:c,xvol` to ignore all symlinks leaving the volume's top directory, but still allow bind-mounts pointing elsewhere

|

||||||

|

|

||||||

|

**NB: only affects the indexer** -- users can still access anything inside a volume, unless shadowed by another volume

|

||||||

|

|

||||||

|

### periodic rescan

|

||||||

|

|

||||||

|

filesystem monitoring; if copyparty is not the only software doing stuff on your filesystem, you may want to enable periodic rescans to keep the index up to date

|

||||||

|

|

||||||

|

argument `--re-maxage 60` will rescan all volumes every 60 sec, same as volflag `:c,scan=60` to specify it per-volume

|

||||||

|

|

||||||

|

uploads are disabled while a rescan is happening, so rescans will be delayed by `--db-act` (default 10 sec) when there is write-activity going on (uploads, renames, ...)

|

||||||

|

|

||||||

|

|

||||||

## upload rules

|

## upload rules

|

||||||

|

|

||||||

set upload rules using volume flags, some examples:

|

set upload rules using volflags, some examples:

|

||||||

|

|

||||||

* `:c,sz=1k-3m` sets allowed filesize between 1 KiB and 3 MiB inclusive (suffixes: `b`, `k`, `m`, `g`)

|

* `:c,sz=1k-3m` sets allowed filesize between 1 KiB and 3 MiB inclusive (suffixes: `b`, `k`, `m`, `g`)

|

||||||

|

* `:c,df=4g` block uploads if there would be less than 4 GiB free disk space afterwards

|

||||||

* `:c,nosub` disallow uploading into subdirectories; goes well with `rotn` and `rotf`:

|

* `:c,nosub` disallow uploading into subdirectories; goes well with `rotn` and `rotf`:

|

||||||

* `:c,rotn=1000,2` moves uploads into subfolders, up to 1000 files in each folder before making a new one, two levels deep (must be at least 1)

|

* `:c,rotn=1000,2` moves uploads into subfolders, up to 1000 files in each folder before making a new one, two levels deep (must be at least 1)

|

||||||

* `:c,rotf=%Y/%m/%d/%H` enforces files to be uploaded into a structure of subfolders according to that date format

|

* `:c,rotf=%Y/%m/%d/%H` enforces files to be uploaded into a structure of subfolders according to that date format

|

||||||
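the upload rules above, approximated as standalone python helpers. these are hypothetical, not copyparty's code. `sz=` bounds are inclusive with 1024-based suffixes, `df=` compares the free space left *after* the upload, and `rotf=` is a plain strftime format. using UTC for `rotf` is an assumption, since the readme does not say which timezone applies:

```python
import time

UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_size(text: str) -> int:
    """'1k' -> 1024, '3m' -> 3145728; a bare number is taken as bytes (assumption)"""
    text = text.strip().lower()
    if text and text[-1] in UNITS:
        return int(float(text[:-1]) * UNITS[text[-1]])
    return int(text)


def size_allowed(size: int, rule: str) -> bool:
    """check a byte count against an `sz=MIN-MAX` rule, inclusive on both ends"""
    lo, hi = rule.split("-", 1)
    return parse_size(lo) <= size <= parse_size(hi)


def enough_space(free_bytes: int, upload_size: int, df: str) -> bool:
    """mimic `df=`: refuse if less than `df` would remain free afterwards"""
    return free_bytes - upload_size >= parse_size(df)


def rotf_subdir(fmt: str, ts: float) -> str:
    """expand a `rotf=` date format for an upload at unix time `ts` (UTC assumed)"""
    return time.strftime(fmt, time.gmtime(ts))
```

the same `%`-codes appear in the `-lo log/cpp-%Y-%m%d-%H%M%S.txt.xz` logging example.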

@@ -700,16 +744,16 @@ you can also set transaction limits which apply per-IP and per-volume, but these

|

|||||||

|

|

||||||

files can be autocompressed on upload, either on user-request (if config allows) or forced by server-config

|

files can be autocompressed on upload, either on user-request (if config allows) or forced by server-config

|

||||||

|

|

||||||

* volume flag `gz` allows gz compression

|

* volflag `gz` allows gz compression

|

||||||

* volume flag `xz` allows lzma compression

|

* volflag `xz` allows lzma compression

|

||||||

* volume flag `pk` **forces** compression on all files

|

* volflag `pk` **forces** compression on all files

|

||||||

* url parameter `pk` requests compression with server-default algorithm

|

* url parameter `pk` requests compression with server-default algorithm

|

||||||

* url parameter `gz` or `xz` requests compression with a specific algorithm

|

* url parameter `gz` or `xz` requests compression with a specific algorithm

|

||||||

* url parameter `xz` requests xz compression

|

* url parameter `xz` requests xz compression

|

||||||

|

|

||||||

things to note,

|

things to note,

|

||||||

* the `gz` and `xz` arguments take a single optional argument, the compression level (range 0 to 9)

|

* the `gz` and `xz` arguments take a single optional argument, the compression level (range 0 to 9)

|

||||||

* the `pk` volume flag takes the optional argument `ALGORITHM,LEVEL` which will then be forced for all uploads, for example `gz,9` or `xz,0`

|

* the `pk` volflag takes the optional argument `ALGORITHM,LEVEL` which will then be forced for all uploads, for example `gz,9` or `xz,0`

|

||||||

* default compression is gzip level 9

|

* default compression is gzip level 9

|

||||||

* all upload methods except up2k are supported

|

* all upload methods except up2k are supported

|

||||||

* the files will be indexed after compression, so dupe-detection and file-search will not work as expected

|

* the files will be indexed after compression, so dupe-detection and file-search will not work as expected

|

||||||
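here is what `gz`/`xz` at a given level amounts to, sketched with python's stdlib `gzip` and `lzma` modules. using these modules is an assumption; copyparty may drive the codecs differently. the last part shows why dupe-detection and file-search stop matching once the stored bytes are compressed:

```python
import gzip
import hashlib
import lzma


def compress(data: bytes, algo: str = "gz", level: int = 9) -> bytes:
    """compress `data` the way `pk=gz,9` or `pk=xz,0` describes;
    both algorithms accept a level from 0 to 9"""
    if not 0 <= level <= 9:
        raise ValueError("level must be in range 0..9")
    if algo == "gz":
        return gzip.compress(data, compresslevel=level)
    if algo == "xz":
        return lzma.compress(data, format=lzma.FORMAT_XZ, preset=level)
    raise ValueError(f"unknown algorithm: {algo}")


if __name__ == "__main__":
    original = b"same file contents\n" * 1000
    stored = compress(original)  # server default per the readme: gzip level 9
    # the index hashes what ends up on disk, i.e. the compressed bytes,
    # so a client hashing the original file will not find a match
    print(hashlib.sha512(original).digest() == hashlib.sha512(stored).digest())  # False
```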

@@ -729,7 +773,7 @@ in-volume (`.hist/up2k.db`, default) or somewhere else

|

|||||||

|

|

||||||

copyparty creates a subfolder named `.hist` inside each volume where it stores the database, thumbnails, and some other stuff

|

copyparty creates a subfolder named `.hist` inside each volume where it stores the database, thumbnails, and some other stuff

|

||||||

|

|

||||||

this can instead be kept in a single place using the `--hist` argument, or the `hist=` volume flag, or a mix of both:

|

this can instead be kept in a single place using the `--hist` argument, or the `hist=` volflag, or a mix of both:

|

||||||

* `--hist ~/.cache/copyparty -v ~/music::r:c,hist=-` sets `~/.cache/copyparty` as the default place to put volume info, but `~/music` gets the regular `.hist` subfolder (`-` restores default behavior)

|

* `--hist ~/.cache/copyparty -v ~/music::r:c,hist=-` sets `~/.cache/copyparty` as the default place to put volume info, but `~/music` gets the regular `.hist` subfolder (`-` restores default behavior)

|

||||||

|

|

||||||

note:

|

note:

|

||||||

@@ -767,27 +811,32 @@ see the beautiful mess of a dictionary in [mtag.py](https://github.com/9001/copy

|

|||||||

|

|

||||||

provide custom parsers to index additional tags, also see [./bin/mtag/README.md](./bin/mtag/README.md)

|

provide custom parsers to index additional tags, also see [./bin/mtag/README.md](./bin/mtag/README.md)

|

||||||

|

|

||||||

copyparty can invoke external programs to collect additional metadata for files using `mtp` (either as argument or volume flag), there is a default timeout of 30sec

|

copyparty can invoke external programs to collect additional metadata for files using `mtp` (either as argument or volflag), there is a default timeout of 30sec, and only files which contain audio get analyzed by default (see ay/an/ad below)

|

||||||

|

|

||||||

* `-mtp .bpm=~/bin/audio-bpm.py` will execute `~/bin/audio-bpm.py` with the audio file as argument 1 to provide the `.bpm` tag, if that does not exist in the audio metadata

|

* `-mtp .bpm=~/bin/audio-bpm.py` will execute `~/bin/audio-bpm.py` with the audio file as argument 1 to provide the `.bpm` tag, if that does not exist in the audio metadata

|

||||||

* `-mtp key=f,t5,~/bin/audio-key.py` uses `~/bin/audio-key.py` to get the `key` tag, replacing any existing metadata tag (`f,`), aborting if it takes longer than 5sec (`t5,`)

|

* `-mtp key=f,t5,~/bin/audio-key.py` uses `~/bin/audio-key.py` to get the `key` tag, replacing any existing metadata tag (`f,`), aborting if it takes longer than 5sec (`t5,`)

|

||||||

* `-v ~/music::r:c,mtp=.bpm=~/bin/audio-bpm.py:c,mtp=key=f,t5,~/bin/audio-key.py` both as a per-volume config wow this is getting ugly

|

* `-v ~/music::r:c,mtp=.bpm=~/bin/audio-bpm.py:c,mtp=key=f,t5,~/bin/audio-key.py` both as a per-volume config wow this is getting ugly

|

||||||

|

|

||||||

*but wait, there's more!* `-mtp` can be used for non-audio files as well using the `a` flag: `ay` only do audio files, `an` only do non-audio files, or `ad` do all files (d as in dontcare)

|

*but wait, there's more!* `-mtp` can be used for non-audio files as well using the `a` flag: `ay` only do audio files (default), `an` only do non-audio files, or `ad` do all files (d as in dontcare)

|

||||||

|

|

||||||

|

* "audio file" also means videos btw, as long as there is an audio stream

|

||||||

* `-mtp ext=an,~/bin/file-ext.py` runs `~/bin/file-ext.py` to get the `ext` tag only if file is not audio (`an`)

|

* `-mtp ext=an,~/bin/file-ext.py` runs `~/bin/file-ext.py` to get the `ext` tag only if file is not audio (`an`)

|

||||||

* `-mtp arch,built,ver,orig=an,eexe,edll,~/bin/exe.py` runs `~/bin/exe.py` to get properties about windows-binaries only if file is not audio (`an`) and file extension is exe or dll

|

* `-mtp arch,built,ver,orig=an,eexe,edll,~/bin/exe.py` runs `~/bin/exe.py` to get properties about windows-binaries only if file is not audio (`an`) and file extension is exe or dll

|

||||||

|

|

||||||

|

you can control how the parser is killed if it times out with option `kt` killing the entire process tree (default), `km` just the main process, or `kn` let it continue running until copyparty is terminated

|

||||||

|

|

||||||

|

if something doesn't work, try `--mtag-v` for verbose error messages

|

||||||
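this is a hypothetical `mtp` parser in python. it follows the spirit of the `cksum.py` usage line further down but is not a copy of it. copyparty passes the file path as argument 1. the sketch assumes that a parser providing several tags prints them as a single json object on stdout. reading `sha256b` as base64-encoded sha256 is also an assumption:

```python
#!/usr/bin/env python3
import base64
import hashlib
import json
import sys
import zlib
from typing import Dict, Iterable, Iterator


def iter_file(path: str, bufsize: int = 1024 * 1024) -> Iterator[bytes]:
    """read the file in pieces so large uploads are not loaded into memory"""
    with open(path, "rb") as f:
        while True:
            buf = f.read(bufsize)
            if not buf:
                return
            yield buf


def checksums(chunks: Iterable[bytes]) -> Dict[str, str]:
    crc = 0
    md5, sha1, sha256 = hashlib.md5(), hashlib.sha1(), hashlib.sha256()
    for buf in chunks:
        crc = zlib.crc32(buf, crc)  # crc32 can be continued across pieces
        md5.update(buf)
        sha1.update(buf)
        sha256.update(buf)
    return {
        "crc32": "%08x" % (crc & 0xFFFFFFFF),
        "md5": md5.hexdigest(),
        "sha1": sha1.hexdigest(),
        "sha256b": base64.b64encode(sha256.digest()).decode("ascii"),
    }


def main() -> None:
    # copyparty runs the parser with the path of the file as argument 1
    print(json.dumps(checksums(iter_file(sys.argv[1])), indent=4))


if __name__ == "__main__" and len(sys.argv) > 1:
    main()
```

if you save it as, say, `~/bin/checksums.py`, you would wire it up like the `cksum.py` example: `-mtp crc32,md5,sha1,sha256b=ad,~/bin/checksums.py`. the `ad` makes it run on non-audio files too.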

|

|

||||||

|

|

||||||

## upload events

|

## upload events

|

||||||

|

|

||||||

trigger a script/program on each upload like so:

|

trigger a script/program on each upload like so:

|

||||||

|

|

||||||

```

|

```

|

||||||

-v /mnt/inc:inc:w:c,mte=+a1:c,mtp=a1=ad,/usr/bin/notify-send

|

-v /mnt/inc:inc:w:c,mte=+x1:c,mtp=x1=ad,kn,/usr/bin/notify-send

|

||||||

```

|

```

|

||||||

|

|

||||||

so filesystem location `/mnt/inc` shared at `/inc`, write-only for everyone, appending `a1` to the list of tags to index, and using `/usr/bin/notify-send` to "provide" that tag

|

so filesystem location `/mnt/inc` shared at `/inc`, write-only for everyone, appending `x1` to the list of tags to index (`mte`), and using `/usr/bin/notify-send` to "provide" tag `x1` for any filetype (`ad`) with kill-on-timeout disabled (`kn`)

|

||||||

|

|

||||||

that'll run the command `notify-send` with the path to the uploaded file as the first and only argument (so on linux it'll show a notification on-screen)

|

that'll run the command `notify-send` with the path to the uploaded file as the first and only argument (so on linux it'll show a notification on-screen)

|

||||||

|

|

||||||

@@ -803,8 +852,8 @@ if this becomes popular maybe there should be a less janky way to do it actually

|

|||||||

tell search engines you don't wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

|

tell search engines you don't wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

|

||||||

|

|

||||||

* `--no-robots` adds HTTP (`X-Robots-Tag`) and HTML (`<meta>`) headers with `noindex, nofollow` globally

|

* `--no-robots` adds HTTP (`X-Robots-Tag`) and HTML (`<meta>`) headers with `noindex, nofollow` globally

|

||||||

* volume-flag `[...]:c,norobots` does the same thing for that single volume

|

* volflag `[...]:c,norobots` does the same thing for that single volume

|

||||||

* volume-flag `[...]:c,robots` ALLOWS search-engine crawling for that volume, even if `--no-robots` is set globally

|

* volflag `[...]:c,robots` ALLOWS search-engine crawling for that volume, even if `--no-robots` is set globally

|

||||||

|

|

||||||

also, `--force-js` disables the plain HTML folder listing, making things harder to parse for search engines

|

also, `--force-js` disables the plain HTML folder listing, making things harder to parse for search engines

|

||||||

|

|

||||||

@@ -834,8 +883,17 @@ see the top of [./copyparty/web/browser.css](./copyparty/web/browser.css) where

|

|||||||

|

|

||||||

## complete examples

|

## complete examples

|

||||||

|

|

||||||

* read-only music server with bpm and key scanning

|

* read-only music server

|

||||||

`python copyparty-sfx.py -v /mnt/nas/music:/music:r -e2dsa -e2ts -mtp .bpm=f,audio-bpm.py -mtp key=f,audio-key.py`

|

`python copyparty-sfx.py -v /mnt/nas/music:/music:r -e2dsa -e2ts --no-robots --force-js --theme 2`

|

||||||

|

|

||||||

|

* ...with bpm and key scanning

|

||||||

|

`-mtp .bpm=f,audio-bpm.py -mtp key=f,audio-key.py`

|

||||||

|

|

||||||

|

* ...with a read-write folder for `kevin` whose password is `okgo`

|

||||||

|

`-a kevin:okgo -v /mnt/nas/inc:/inc:rw,kevin`

|

||||||

|

|

||||||

|

* ...with logging to disk

|

||||||

|

`-lo log/cpp-%Y-%m%d-%H%M%S.txt.xz`

|

||||||

|

|

||||||

|

|

||||||

# browser support

|

# browser support

|

||||||

@@ -882,6 +940,7 @@ quick summary of more eccentric web-browsers trying to view a directory index:

|

|||||||

| **netsurf** (3.10/arch) | is basically ie6 with much better css (javascript has almost no effect) |

|

| **netsurf** (3.10/arch) | is basically ie6 with much better css (javascript has almost no effect) |

|

||||||

| **opera** (11.60/winxp) | OK: thumbnails, image-viewer, zip-selection, rename/cut/paste. NG: up2k, navpane, markdown, audio |

|

| **opera** (11.60/winxp) | OK: thumbnails, image-viewer, zip-selection, rename/cut/paste. NG: up2k, navpane, markdown, audio |

|

||||||

| **ie4** and **netscape** 4.0 | can browse, upload with `?b=u`, auth with `&pw=wark` |

|

| **ie4** and **netscape** 4.0 | can browse, upload with `?b=u`, auth with `&pw=wark` |

|

||||||

|

| **ncsa mosaic** 2.7 | does not get a pass, [pic1](https://user-images.githubusercontent.com/241032/174189227-ae816026-cf6f-4be5-a26e-1b3b072c1b2f.png) - [pic2](https://user-images.githubusercontent.com/241032/174189225-5651c059-5152-46e9-ac26-7e98e497901b.png) |

|

||||||

| **SerenityOS** (7e98457) | hits a page fault, works with `?b=u`, file upload not-impl |

|

| **SerenityOS** (7e98457) | hits a page fault, works with `?b=u`, file upload not-impl |

|

||||||

|

|

||||||

|

|

||||||

@@ -940,17 +999,29 @@ quick outline of the up2k protocol, see [uploading](#uploading) for the web-clie

|

|||||||

|

|

||||||

up2k has saved a few uploads from becoming corrupted in-transfer already; caught an android phone on wifi red-handed in wireshark with a bitflip, however bup with https would *probably* have noticed as well (thanks to tls also functioning as an integrity check)

|

up2k has saved a few uploads from becoming corrupted in-transfer already; caught an android phone on wifi red-handed in wireshark with a bitflip, however bup with https would *probably* have noticed as well (thanks to tls also functioning as an integrity check)

|

||||||

|

|

||||||

|

regarding the frequent server log message during uploads;

|

||||||

|

`6.0M 106M/s 2.77G 102.9M/s n948 thank 4/0/3/1 10042/7198 00:01:09`

|

||||||

|

* this chunk was `6 MiB`, uploaded at `106 MiB/s`

|

||||||

|

* on this http connection, `2.77 GiB` transferred, `102.9 MiB/s` average, `948` chunks handled

|

||||||

|

* client says `4` uploads OK, `0` failed, `3` busy, `1` queued, `10042 MiB` total size, `7198 MiB` and `00:01:09` left

|

||||||
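this hypothetical python parser splits that log line into the fields described above. the readme does not explain `thank`, so it is skipped. `re.search` is used because real log lines may carry a prefix such as a timestamp:

```python
import re
from typing import Dict, Optional

PROGRESS = re.compile(
    r"(?P<chunk>\S+) (?P<chunk_speed>\S+)/s "        # this chunk: size, speed
    r"(?P<conn_total>\S+) (?P<conn_speed>\S+)/s "    # this connection: total, average
    r"n(?P<chunks>\d+) \S+ "                         # chunks handled; skip `thank`
    r"(?P<ok>\d+)/(?P<failed>\d+)/(?P<busy>\d+)/(?P<queued>\d+) "
    r"(?P<total_mib>\d+)/(?P<left_mib>\d+) "
    r"(?P<eta>\d+:\d\d:\d\d)"
)


def parse_progress(line: str) -> Optional[Dict[str, object]]:
    m = PROGRESS.search(line)
    if not m:
        return None
    out: Dict[str, object] = dict(m.groupdict())
    for key in ("chunks", "ok", "failed", "busy", "queued", "total_mib", "left_mib"):
        out[key] = int(m.group(key))
    return out
```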

|

|

||||||

|

|

||||||

## why chunk-hashes

|

## why chunk-hashes

|

||||||

|

|

||||||

a single sha512 would be better, right?

|

a single sha512 would be better, right?

|

||||||

|

|

||||||

this is due to `crypto.subtle` not providing a streaming api (or the option to seed the sha512 hasher with a starting hash)

|

this is due to `crypto.subtle` [not yet](https://github.com/w3c/webcrypto/issues/73) providing a streaming api (or the option to seed the sha512 hasher with a starting hash)

|

||||||

|

|

||||||

as a result, the hashes are much less useful than they could have been (search the server by sha512, provide the sha512 in the response http headers, ...)

|

as a result, the hashes are much less useful than they could have been (search the server by sha512, provide the sha512 in the response http headers, ...)

|

||||||

|

|

||||||

|

however it allows for hashing multiple chunks in parallel, greatly increasing upload speed from fast storage (NVMe, raid-0 and such)

|

||||||

|

|

||||||

|

* both the [browser uploader](#uploading) and the [commandline one](https://github.com/9001/copyparty/blob/hovudstraum/bin/up2k.py) do this now, allowing for fast uploading even from plaintext http

|

||||||
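a minimal python sketch of why per-chunk hashes parallelize where a single sha512 cannot: each chunk is hashed independently on a thread pool, and cpython's `hashlib` releases the GIL on large buffers. the 1 MiB chunk size and hex output are assumptions; up2k chooses its own chunk size and encoding:

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import List

CHUNK_SIZE = 1024 * 1024  # assumption: fixed 1 MiB chunks for illustration


def chunk_hashes(data: bytes, chunk_size: int = CHUNK_SIZE, workers: int = 4) -> List[str]:
    """hash every chunk on its own; results come back in chunk order"""
    view = memoryview(data)  # slicing a memoryview avoids copying the data
    chunks = [view[i : i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda c: hashlib.sha512(c).hexdigest(), chunks))
```

a single whole-file sha512 has to consume the bytes in order, so without a streaming or seedable hasher it cannot be split across workers like this.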

|

|

||||||

hashwasm would solve the streaming issue but reduces hashing speed for sha512 (xxh128 does 6 GiB/s), and it would make old browsers and [iphones](https://bugs.webkit.org/show_bug.cgi?id=228552) unsupported

|

hashwasm would solve the streaming issue but reduces hashing speed for sha512 (xxh128 does 6 GiB/s), and it would make old browsers and [iphones](https://bugs.webkit.org/show_bug.cgi?id=228552) unsupported

|

||||||

|

|

||||||

|

* blake2 might be a better choice since xxh is non-cryptographic, but that gets ~15 MiB/s on slower androids

|

||||||

|

|

||||||

|

|

||||||

# performance

|

# performance

|

||||||

|

|

||||||

@@ -980,6 +1051,7 @@ when uploading files,

|

|||||||

|

|

||||||

* if you're cpu-bottlenecked, or the browser is maxing a cpu core:

|

* if you're cpu-bottlenecked, or the browser is maxing a cpu core:

|

||||||

* up to 30% faster uploads if you hide the upload status list by switching away from the `[🚀]` up2k ui-tab (or closing it)

|

* up to 30% faster uploads if you hide the upload status list by switching away from the `[🚀]` up2k ui-tab (or closing it)

|

||||||

|

* optionally you can switch to the lightweight potato ui by clicking the `[🥔]`

|

||||||

* switching to another browser-tab also works, the favicon will update every 10 seconds in that case

|

* switching to another browser-tab also works, the favicon will update every 10 seconds in that case

|

||||||

* unlikely to be a problem, but can happen when uploading many small files, or your internet is too fast, or PC too slow

|

* unlikely to be a problem, but can happen when uploading many small files, or your internet is too fast, or PC too slow

|

||||||

|

|

||||||

@@ -988,16 +1060,28 @@ when uploading files,

|

|||||||

|

|

||||||

some notes on hardening

|

some notes on hardening

|

||||||

|

|

||||||

on public copyparty instances with anonymous upload enabled:

|

* option `-s` is a shortcut to set the following options:

|

||||||

|

* `--no-thumb` disables thumbnails and audio transcoding to stop copyparty from running `FFmpeg`/`Pillow`/`VIPS` on uploaded files, which is a [good idea](https://www.cvedetails.com/vulnerability-list.php?vendor_id=3611) if anonymous upload is enabled

|

||||||

|

* `--no-mtag-ff` uses `mutagen` to grab music tags instead of `FFmpeg`, which is safer and faster but less accurate

|

||||||

|

* `--dotpart` hides uploads from directory listings while they're still incoming

|

||||||

|

* `--no-robots` and `--force-js` make life harder for crawlers, see [hiding from google](#hiding-from-google)

|

||||||

|

|

||||||

* users can upload html/css/js which will evaluate for other visitors in a few ways,

|

* option `-ss` is a shortcut for the above plus:

|

||||||

* unless `--no-readme` is set: by uploading/modifying a file named `readme.md`

|

* `--no-logues` and `--no-readme` disable support for readmes and prologues / epilogues in directory listings, which would otherwise let people upload arbitrary `<script>` tags

|

||||||

* if `move` access is granted AND none of `--no-logues`, `--no-dot-mv`, `--no-dot-ren` is set: by uploading some .html file and renaming it to `.epilogue.html` (uploading it directly is blocked)

|

* `--unpost 0`, `--no-del`, `--no-mv` disable all move/delete support

|

||||||

|

* `--hardlink` creates hardlinks instead of symlinks when deduplicating uploads, which is less maintenance

|

||||||

|

* however note if you edit one file it will also affect the other copies

|

||||||

|

* `--vague-403` returns a "404 not found" instead of "403 forbidden", which is a common enterprise meme

|

||||||

|

* `--nih` removes the server hostname from directory listings

|

||||||

|

|

||||||

other misc:

|

* option `-sss` is a shortcut for the above plus:

|

||||||

|

* `-lo cpp-%Y-%m%d-%H%M%S.txt.xz` enables logging to disk

|

||||||

|

* `-ls **,*,ln,p,r` does a scan on startup for any dangerous symlinks

|

||||||

|

|

||||||

|

other misc notes:

|

||||||

|

|

||||||

* you can disable directory listings by giving permission `g` instead of `r`, only accepting direct URLs to files

|

* you can disable directory listings by giving permission `g` instead of `r`, only accepting direct URLs to files

|

||||||

* combine this with volume-flag `c,fk` to generate per-file accesskeys; users which have full read-access will then see URLs with `?k=...` appended to the end, and `g` users must provide that URL including the correct key to avoid a 404

|

* combine this with volflag `c,fk` to generate per-file accesskeys; users who have full read-access will then see URLs with `?k=...` appended to the end, and `g` users must provide that URL including the correct key to avoid a 404

|

||||||

|

|

||||||

|

|

||||||

## gotchas

|

## gotchas

|

||||||

@@ -1190,25 +1274,32 @@ if you want thumbnails, `apt -y install ffmpeg`

|

|||||||

|

|

||||||

ideas for context to include in bug reports

|

ideas for context to include in bug reports

|

||||||

|

|

||||||

|

in general, commandline arguments (and config file if any)

|

||||||

|

|

||||||

if something broke during an upload (replacing FILENAME with a part of the filename that broke):

|

if something broke during an upload (replacing FILENAME with a part of the filename that broke):

|

||||||

```

|

```

|

||||||

journalctl -aS '48 hour ago' -u copyparty | grep -C10 FILENAME | tee bug.log

|

journalctl -aS '48 hour ago' -u copyparty | grep -C10 FILENAME | tee bug.log

|

||||||

```

|

```

|

||||||

|

|

||||||

|

if there's a wall of base64 in the log (thread stacks) then please include that, especially if you run into something freezing up or getting stuck, for example `OperationalError('database is locked')` -- alternatively you can visit `/?stack` to see the stacks live, so http://127.0.0.1:3923/?stack for example

|

||||||

|

|

||||||

|

|

||||||

# building

|

# building

|

||||||

|

|

||||||

## dev env setup

|

## dev env setup

|

||||||

|

|

||||||

mostly optional; if you need a working env for vscode or similar

|

you need python 3.9 or newer due to type hints

|

||||||

|

|

||||||

|

the rest is mostly optional; if you need a working env for vscode or similar

|

||||||

|

|

||||||

```sh

|

```sh

|

||||||

python3 -m venv .venv

|

python3 -m venv .venv

|

||||||

. .venv/bin/activate

|

. .venv/bin/activate

|

||||||

pip install jinja2 # mandatory

|

pip install jinja2 strip_hints # MANDATORY

|

||||||

pip install mutagen # audio metadata

|

pip install mutagen # audio metadata

|

||||||

|

pip install pyftpdlib # ftp server

|

||||||

pip install Pillow pyheif-pillow-opener pillow-avif-plugin # thumbnails

|

pip install Pillow pyheif-pillow-opener pillow-avif-plugin # thumbnails

|

||||||

pip install black==21.12b0 bandit pylint flake8 # vscode tooling

|

pip install black==21.12b0 click==8.0.2 bandit pylint flake8 isort mypy # vscode tooling

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

@@ -1239,10 +1330,7 @@ also builds the sfx so skip the sfx section above

|

|||||||

in the `scripts` folder:

|

in the `scripts` folder:

|

||||||

|

|

||||||

* run `make -C deps-docker` to build all dependencies

|

* run `make -C deps-docker` to build all dependencies

|

||||||

* `git tag v1.2.3 && git push origin --tags`

|

* run `./rls.sh 1.2.3` which uploads to pypi + creates github release + sfx

|

||||||

* upload to pypi with `make-pypi-release.(sh|bat)`

|

|

||||||

* create github release with `make-tgz-release.sh`

|

|

||||||

* create sfx with `make-sfx.sh`

|

|

||||||

|

|

||||||

|

|

||||||

# todo

|

# todo

|

||||||

@@ -1269,7 +1357,7 @@ roughly sorted by priority

|

|||||||

* up2k partials ui

|

* up2k partials ui

|

||||||

* feels like there isn't much point

|

* feels like there isn't much point

|

||||||

* cache sha512 chunks on client

|

* cache sha512 chunks on client

|

||||||

* too dangerous

|

* too dangerous -- overtaken by turbo mode

|

||||||

* comment field

|

* comment field

|

||||||

* nah

|

* nah

|

||||||

* look into android thumbnail cache file format

|

* look into android thumbnail cache file format

|

||||||

|

|||||||

@@ -42,7 +42,7 @@ run [`install-deps.sh`](install-deps.sh) to build/install most dependencies requ

|

|||||||

* `mtp` modules will not run if a file has existing tags in the db, so clear out the tags with `-e2tsr` the first time you launch with new `mtp` options

|

* `mtp` modules will not run if a file has existing tags in the db, so clear out the tags with `-e2tsr` the first time you launch with new `mtp` options

|

||||||

|

|

||||||

|

|

||||||

## usage with volume-flags

|

## usage with volflags

|

||||||

|

|

||||||

instead of affecting all volumes, you can set the options for just one volume like so:

|

instead of affecting all volumes, you can set the options for just one volume like so:

|

||||||

|

|

||||||

|

|||||||

@@ -17,7 +17,7 @@ except:

|

|||||||

|

|

||||||

"""

|

"""

|

||||||

calculates various checksums for uploads,

|

calculates various checksums for uploads,

|

||||||

usage: -mtp crc32,md5,sha1,sha256b=bin/mtag/cksum.py

|

usage: -mtp crc32,md5,sha1,sha256b=ad,bin/mtag/cksum.py

|

||||||

"""

|

"""

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@@ -43,7 +43,6 @@ PS: this requires e2ts to be functional,

|

|||||||

|

|

||||||

import os

|

import os

|

||||||

import sys

|

import sys

|

||||||

import time

|

|

||||||

import filecmp

|

import filecmp

|

||||||

import subprocess as sp

|

import subprocess as sp

|

||||||

|

|

||||||

@@ -90,4 +89,7 @@ def main():

|

|||||||

|

|

||||||

|

|

||||||

if __name__ == "__main__":

|

if __name__ == "__main__":

|

||||||

main()

|

try:

|

||||||

|

main()

|

||||||

|

except:

|