Compare commits

410 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

cc0cc8cdf0 | ||

|

|

fb13969798 | ||

|

|

278258ee9f | ||

|

|

9e542cf86b | ||

|

|

244e952f79 | ||

|

|

aa2a8fa223 | ||

|

|

467acb47bf | ||

|

|

0c0d6b2bfc | ||

|

|

ce0e5be406 | ||

|

|

65ce4c90fa | ||

|

|

9897a08d09 | ||

|

|

f5753ba720 | ||

|

|

fcf32a935b | ||

|

|

ec50788987 | ||

|

|

ac0a2da3b5 | ||

|

|

9f84dc42fe | ||

|

|

21f9304235 | ||

|

|

5cedd22bbd | ||

|

|

c0dacbc4dd | ||

|

|

dd6e9ea70c | ||

|

|

87598dcd7f | ||

|

|

3bb7b677f8 | ||

|

|

988a7223f4 | ||

|

|

7f044372fa | ||

|

|

552897abbc | ||

|

|

946a8c5baa | ||

|

|

888b31aa92 | ||

|

|

e2dec2510f | ||

|

|

da5ad2ab9f | ||

|

|

eaa4b04a22 | ||

|

|

3051b13108 | ||

|

|

4c4e48bab7 | ||

|

|

01a3eb29cb | ||

|

|

73f7249c5f | ||

|

|

18c6559199 | ||

|

|

e66ece993f | ||

|

|

0686860624 | ||

|

|

24ce46b380 | ||

|

|

a49bf81ff2 | ||

|

|

64501fd7f1 | ||

|

|

db3c0b0907 | ||

|

|

edda117a7a | ||

|

|

cdface0dd5 | ||

|

|

be6afe2d3a | ||

|

|

9163780000 | ||

|

|

d7aa7dfe64 | ||

|

|

f1decb531d | ||

|

|

99399c698b | ||

|

|

1f5f42f216 | ||

|

|

9082c4702f | ||

|

|

6cedcfbf77 | ||

|

|

8a631f045e | ||

|

|

a6a2ee5b6b | ||

|

|

016708276c | ||

|

|

4cfdc4c513 | ||

|

|

0f257c9308 | ||

|

|

c8104b6e78 | ||

|

|

1a1d731043 | ||

|

|

c5a000d2ae | ||

|

|

94d1924fa9 | ||

|

|

6c1cf68bca | ||

|

|

395af051bd | ||

|

|

42fd66675e | ||

|

|

21a3f3699b | ||

|

|

d168b2acac | ||

|

|

2ce8233921 | ||

|

|

697a4fa8a4 | ||

|

|

2f83c6c7d1 | ||

|

|

127f414e9c | ||

|

|

33c4ccffab | ||

|

|

bafe7f5a09 | ||

|

|

baf41112d1 | ||

|

|

a90dde94e1 | ||

|

|

7dfbfc7227 | ||

|

|

b10843d051 | ||

|

|

520ac8f4dc | ||

|

|

537a6e50e9 | ||

|

|

2d0cbdf1a8 | ||

|

|

5afb562aa3 | ||

|

|

db069c3d4a | ||

|

|

fae40c7e2f | ||

|

|

0c43b592dc | ||

|

|

2ab8924e2d | ||

|

|

0e31cfa784 | ||

|

|

8f7ffcf350 | ||

|

|

9c8507a0fd | ||

|

|

e9b2cab088 | ||

|

|

d3ccacccb1 | ||

|

|

df386c8fbc | ||

|

|

4d15dd6e17 | ||

|

|

56a0499636 | ||

|

|

10fc4768e8 | ||

|

|

2b63d7d10d | ||

|

|

1f177528c1 | ||

|

|

fc3bbb70a3 | ||

|

|

ce3cab0295 | ||

|

|

c784e5285e | ||

|

|

2bf9055cae | ||

|

|

8aba5aed4f | ||

|

|

0ce7cf5e10 | ||

|

|

96edcbccd7 | ||

|

|

4603afb6de | ||

|

|

56317b00af | ||

|

|

cacec9c1f3 | ||

|

|

44ee07f0b2 | ||

|

|

6a8d5e1731 | ||

|

|

d9962f65b3 | ||

|

|

119e88d87b | ||

|

|

71d9e010d9 | ||

|

|

5718caa957 | ||

|

|

efd8a32ed6 | ||

|

|

b22d700e16 | ||

|

|

ccdacea0c4 | ||

|

|

4bdcbc1cb5 | ||

|

|

833c6cf2ec | ||

|

|

dd6dbdd90a | ||

|

|

63013cc565 | ||

|

|

912402364a | ||

|

|

159f51b12b | ||

|

|

7678a91b0e | ||

|

|

b13899c63d | ||

|

|

3a0d882c5e | ||

|

|

cb81f0ad6d | ||

|

|

518bacf628 | ||

|

|

ca63b03e55 | ||

|

|

cecef88d6b | ||

|

|

7ffd805a03 | ||

|

|

a7e2a0c981 | ||

|

|

2a570bb4ca | ||

|

|

5ca8f0706d | ||

|

|

a9b4436cdc | ||

|

|

5f91999512 | ||

|

|

9f000beeaf | ||

|

|

ff0a71f212 | ||

|

|

22dfc6ec24 | ||

|

|

48147c079e | ||

|

|

d715479ef6 | ||

|

|

fc8298c468 | ||

|

|

e94ca5dc91 | ||

|

|

114b71b751 | ||

|

|

b2770a2087 | ||

|

|

cba1878bb2 | ||

|

|

a2e037d6af | ||

|

|

65a2b6a223 | ||

|

|

9ed799e803 | ||

|

|

c1c0ecca13 | ||

|

|

ee62836383 | ||

|

|

705f598b1a | ||

|

|

414de88925 | ||

|

|

53ffd245dd | ||

|

|

cf1b756206 | ||

|

|

22b58e31ef | ||

|

|

b7f9bf5a28 | ||

|

|

aba680b6c2 | ||

|

|

fabada95f6 | ||

|

|

9ccd8bb3ea | ||

|

|

1d68acf8f0 | ||

|

|

1e7697b551 | ||

|

|

4a4ec88d00 | ||

|

|

6adc778d62 | ||

|

|

6b7ebdb7e9 | ||

|

|

3d7facd774 | ||

|

|

eaee1f2cab | ||

|

|

ff012221ae | ||

|

|

c398553748 | ||

|

|

3ccbcf6185 | ||

|

|

f0abc0ef59 | ||

|

|

a99fa3375d | ||

|

|

22c7e09b3f | ||

|

|

0dfe1d5b35 | ||

|

|

a99a3bc6d7 | ||

|

|

9804f25de3 | ||

|

|

ae98200660 | ||

|

|

e45420646f | ||

|

|

21be82ef8b | ||

|

|

001afe00cb | ||

|

|

19a5985f29 | ||

|

|

2715ee6c61 | ||

|

|

dc157fa28f | ||

|

|

1ff14b4e05 | ||

|

|

480ac254ab | ||

|

|

4b95db81aa | ||

|

|

c81e898435 | ||

|

|

f1646b96ca | ||

|

|

44f2b63e43 | ||

|

|

847a2bdc85 | ||

|

|

03f0f99469 | ||

|

|

3900e66158 | ||

|

|

3dff6cda40 | ||

|

|

73d05095b5 | ||

|

|

fcdc1728eb | ||

|

|

8b942ea237 | ||

|

|

88a1c5ca5d | ||

|

|

047176b297 | ||

|

|

dc4d0d8e71 | ||

|

|

b9c5c7bbde | ||

|

|

9daeed923f | ||

|

|

66b260cea9 | ||

|

|

58cf01c2ad | ||

|

|

d866841c19 | ||

|

|

a462a644fb | ||

|

|

678675a9a6 | ||

|

|

de9069ef1d | ||

|

|

c0c0a1a83a | ||

|

|

1d004b6dbd | ||

|

|

b90e1200d7 | ||

|

|

4493a0a804 | ||

|

|

58835b2b42 | ||

|

|

427597b603 | ||

|

|

7d64879ba8 | ||

|

|

bb715704b7 | ||

|

|

d67e9cc507 | ||

|

|

2927bbb2d6 | ||

|

|

0527b59180 | ||

|

|

a5ce1032d3 | ||

|

|

1c2acdc985 | ||

|

|

4e75534ef8 | ||

|

|

7a573cafd1 | ||

|

|

844194ee29 | ||

|

|

609c5921d4 | ||

|

|

c79eaa089a | ||

|

|

e9d962f273 | ||

|

|

b5405174ec | ||

|

|

6eee601521 | ||

|

|

2fac2bee7c | ||

|

|

c140eeee6b | ||

|

|

c5988a04f9 | ||

|

|

a2e0f98693 | ||

|

|

1111153f06 | ||

|

|

e5a836cb7d | ||

|

|

b0de84cbc5 | ||

|

|

cbb718e10d | ||

|

|

b5ad9369fe | ||

|

|

4401de0413 | ||

|

|

6e671c5245 | ||

|

|

08848be784 | ||

|

|

b599fbae97 | ||

|

|

a8dabc99f6 | ||

|

|

f1130db131 | ||

|

|

735ec35546 | ||

|

|

5a009a2a64 | ||

|

|

d9e9526247 | ||

|

|

5a8c3b8be0 | ||

|

|

1c9c17fb9b | ||

|

|

7f82449179 | ||

|

|

e455ec994e | ||

|

|

c111027420 | ||

|

|

abcdf479e6 | ||

|

|

ad2371f810 | ||

|

|

c4e2b0f95f | ||

|

|

3da62ec234 | ||

|

|

01233991f3 | ||

|

|

ee35974273 | ||

|

|

7037e7365e | ||

|

|

03b13e8a1c | ||

|

|

cdd2da0208 | ||

|

|

cec0e0cf02 | ||

|

|

8122ddedfe | ||

|

|

55a77c5e89 | ||

|

|

461f31582d | ||

|

|

f356faa278 | ||

|

|

9f034d9c4c | ||

|

|

ba52590ae4 | ||

|

|

92edea1de5 | ||

|

|

7ff46966da | ||

|

|

fca70b3508 | ||

|

|

70009cd984 | ||

|

|

8d8b88c4fd | ||

|

|

c4b0cccefd | ||

|

|

7c2beba555 | ||

|

|

7d8d94388b | ||

|

|

0b46b1a614 | ||

|

|

5153db6bff | ||

|

|

b0af4b3712 | ||

|

|

c8f4aeaefa | ||

|

|

00da74400c | ||

|

|

83fb569d61 | ||

|

|

5a62cb4869 | ||

|

|

687df2fabd | ||

|

|

cdd0794d6e | ||

|

|

dcc988135e | ||

|

|

3db117d85f | ||

|

|

ee9aad82dd | ||

|

|

2d6eb63fce | ||

|

|

ca001c8504 | ||

|

|

4e581c59da | ||

|

|

dbd42bc6bf | ||

|

|

c862ec1b64 | ||

|

|

f709140571 | ||

|

|

ef1c4b7a20 | ||

|

|

6c94a63f1c | ||

|

|

20669c73d3 | ||

|

|

0da719f4c2 | ||

|

|

373194c38a | ||

|

|

3d245431fc | ||

|

|

250c8c56f0 | ||

|

|

e136231c8e | ||

|

|

98ffaadf52 | ||

|

|

ebb1981803 | ||

|

|

72361c99e1 | ||

|

|

d5c9c8ebbd | ||

|

|

746229846d | ||

|

|

ffd7cd3ca8 | ||

|

|

b3cecabca3 | ||

|

|

662541c64c | ||

|

|

225bd80ea8 | ||

|

|

85e54980cc | ||

|

|

a19a0fa9f3 | ||

|

|

9bb6e0dc62 | ||

|

|

15ddcf53e7 | ||

|

|

6b54972ec0 | ||

|

|

0219eada23 | ||

|

|

8916bce306 | ||

|

|

99edba4fd9 | ||

|

|

64de3e01e8 | ||

|

|

8222ccc40b | ||

|

|

dc449bf8b0 | ||

|

|

ef0ecf878b | ||

|

|

53f1e3c91d | ||

|

|

eeef80919f | ||

|

|

987bce2182 | ||

|

|

b511d686f0 | ||

|

|

132a83501e | ||

|

|

e565ad5f55 | ||

|

|

f955d2bd58 | ||

|

|

5953399090 | ||

|

|

d26a944d95 | ||

|

|

50dac15568 | ||

|

|

ac1e11e4ce | ||

|

|

d749683d48 | ||

|

|

84e8e1ddfb | ||

|

|

6e58514b84 | ||

|

|

803e156509 | ||

|

|

c06aa683eb | ||

|

|

6644ceef49 | ||

|

|

bd3b3863ae | ||

|

|

ffd4f9c8b9 | ||

|

|

760ff2db72 | ||

|

|

f37187a041 | ||

|

|

1cdb170290 | ||

|

|

d5de3f2fe0 | ||

|

|

d76673e62d | ||

|

|

c549f367c1 | ||

|

|

927c3bce96 | ||

|

|

d75a2c77da | ||

|

|

e6c55d7ff9 | ||

|

|

4c2cb26991 | ||

|

|

dfe7f1d9af | ||

|

|

666297f6fb | ||

|

|

55a011b9c1 | ||

|

|

27aff12a1e | ||

|

|

9a87ee2fe4 | ||

|

|

0a9f4c6074 | ||

|

|

7219331057 | ||

|

|

2fd12a839c | ||

|

|

8c73e0cbc2 | ||

|

|

52e06226a2 | ||

|

|

452592519d | ||

|

|

c9281f8912 | ||

|

|

36d6d29a0c | ||

|

|

db6059e100 | ||

|

|

aab57cb24b | ||

|

|

f00b939402 | ||

|

|

bef9617638 | ||

|

|

692175f5b0 | ||

|

|

5ad65450c4 | ||

|

|

60c96f990a | ||

|

|

07b2bf1104 | ||

|

|

ac1bc232a9 | ||

|

|

5919607ad0 | ||

|

|

07ea629ca5 | ||

|

|

b629d18df6 | ||

|

|

566cbb6507 | ||

|

|

400d700845 | ||

|

|

82ce6862ee | ||

|

|

38e4fdfe03 | ||

|

|

c04662798d | ||

|

|

19d156ff4e | ||

|

|

87c60a1ec9 | ||

|

|

2c92dab165 | ||

|

|

5c1e23907d | ||

|

|

925c7f0a57 | ||

|

|

feed08deb2 | ||

|

|

560d7b6672 | ||

|

|

565daee98b | ||

|

|

e396c5c2b5 | ||

|

|

1ee2cdd089 | ||

|

|

beacedab50 | ||

|

|

25139a4358 | ||

|

|

f8491970fd | ||

|

|

da091aec85 | ||

|

|

e9eb5affcd | ||

|

|

c1918bc36c | ||

|

|

fdda567f50 | ||

|

|

603d0ed72b | ||

|

|

b15a4ef79f | ||

|

|

48a6789d36 | ||

|

|

36f2c446af | ||

|

|

69517e4624 | ||

|

|

ea270ab9f2 | ||

|

|

b6cf2d3089 | ||

|

|

e8db3dd37f | ||

|

|

27485a4cb1 | ||

|

|

253a414443 | ||

|

|

f6e693f0f5 | ||

|

|

c5f7cfc355 | ||

|

|

bc2c1e427a | ||

|

|

95d9e693c6 | ||

|

|

70a3cf36d1 | ||

|

|

aa45fccf11 |

2

.gitignore

vendored

@@ -12,6 +12,7 @@ copyparty.egg-info/

|

||||

/dist/

|

||||

/py2/

|

||||

/sfx*

|

||||

/pyz/

|

||||

/unt/

|

||||

/log/

|

||||

|

||||

@@ -29,6 +30,7 @@ copyparty/res/COPYING.txt

|

||||

copyparty/web/deps/

|

||||

srv/

|

||||

scripts/docker/i/

|

||||

scripts/deps-docker/uncomment.py

|

||||

contrib/package/arch/pkg/

|

||||

contrib/package/arch/src/

|

||||

|

||||

|

||||

24

.vscode/settings.json

vendored

@@ -22,6 +22,9 @@

|

||||

"terminal.ansiBrightCyan": "#9cf0ed",

|

||||

"terminal.ansiBrightWhite": "#ffffff",

|

||||

},

|

||||

"python.terminal.activateEnvironment": false,

|

||||

"python.analysis.enablePytestSupport": false,

|

||||

"python.analysis.typeCheckingMode": "standard",

|

||||

"python.testing.pytestEnabled": false,

|

||||

"python.testing.unittestEnabled": true,

|

||||

"python.testing.unittestArgs": [

|

||||

@@ -31,23 +34,8 @@

|

||||

"-p",

|

||||

"test_*.py"

|

||||

],

|

||||

"python.linting.pylintEnabled": true,

|

||||

"python.linting.flake8Enabled": true,

|

||||

"python.linting.banditEnabled": true,

|

||||

"python.linting.mypyEnabled": true,

|

||||

"python.linting.flake8Args": [

|

||||

"--max-line-length=120",

|

||||

"--ignore=E722,F405,E203,W503,W293,E402,E501,E128,E226",

|

||||

],

|

||||

"python.linting.banditArgs": [

|

||||

"--ignore=B104,B110,B112"

|

||||

],

|

||||

// python3 -m isort --py=27 --profile=black copyparty/

|

||||

"python.formatting.provider": "none",

|

||||

"[python]": {

|

||||

"editor.defaultFormatter": "ms-python.black-formatter"

|

||||

},

|

||||

"editor.formatOnSave": true,

|

||||

// python3 -m isort --py=27 --profile=black ~/dev/copyparty/{copyparty,tests}/*.py && python3 -m black -t py27 ~/dev/copyparty/{copyparty,tests,bin}/*.py $(find ~/dev/copyparty/copyparty/stolen -iname '*.py')

|

||||

"editor.formatOnSave": false,

|

||||

"[html]": {

|

||||

"editor.formatOnSave": false,

|

||||

"editor.autoIndent": "keep",

|

||||

@@ -58,6 +46,4 @@

|

||||

"files.associations": {

|

||||

"*.makefile": "makefile"

|

||||

},

|

||||

"python.linting.enabled": true,

|

||||

"python.pythonPath": "/usr/bin/python3"

|

||||

}

|

||||

514

README.md

@@ -1,4 +1,6 @@

|

||||

# 💾🎉 copyparty

|

||||

<img src="https://github.com/9001/copyparty/raw/hovudstraum/docs/logo.svg" width="250" align="right"/>

|

||||

|

||||

### 💾🎉 copyparty

|

||||

|

||||

turn almost any device into a file server with resumable uploads/downloads using [*any*](#browser-support) web browser

|

||||

|

||||

@@ -41,12 +43,17 @@ turn almost any device into a file server with resumable uploads/downloads using

|

||||

* [unpost](#unpost) - undo/delete accidental uploads

|

||||

* [self-destruct](#self-destruct) - uploads can be given a lifetime

|

||||

* [race the beam](#race-the-beam) - download files while they're still uploading ([demo video](http://a.ocv.me/pub/g/nerd-stuff/cpp/2024-0418-race-the-beam.webm))

|

||||

* [incoming files](#incoming-files) - the control-panel shows the ETA for all incoming files

|

||||

* [file manager](#file-manager) - cut/paste, rename, and delete files/folders (if you have permission)

|

||||

* [shares](#shares) - share a file or folder by creating a temporary link

|

||||

* [batch rename](#batch-rename) - select some files and press `F2` to bring up the rename UI

|

||||

* [rss feeds](#rss-feeds) - monitor a folder with your RSS reader

|

||||

* [recent uploads](#recent-uploads) - list all recent uploads

|

||||

* [media player](#media-player) - plays almost every audio format there is

|

||||

* [audio equalizer](#audio-equalizer) - and [dynamic range compressor](https://en.wikipedia.org/wiki/Dynamic_range_compression)

|

||||

* [fix unreliable playback on android](#fix-unreliable-playback-on-android) - due to phone / app settings

|

||||

* [markdown viewer](#markdown-viewer) - and there are *two* editors

|

||||

* [markdown vars](#markdown-vars) - dynamic docs with serverside variable expansion

|

||||

* [other tricks](#other-tricks)

|

||||

* [searching](#searching) - search by size, date, path/name, mp3-tags, ...

|

||||

* [server config](#server-config) - using arguments or config files, or a mix of both

|

||||

@@ -60,7 +67,9 @@ turn almost any device into a file server with resumable uploads/downloads using

|

||||

* [tftp server](#tftp-server) - a TFTP server (read/write) can be started using `--tftp 3969`

|

||||

* [smb server](#smb-server) - unsafe, slow, not recommended for wan

|

||||

* [browser ux](#browser-ux) - tweaking the ui

|

||||

* [file indexing](#file-indexing) - enables dedup and music search ++

|

||||

* [opengraph](#opengraph) - discord and social-media embeds

|

||||

* [file deduplication](#file-deduplication) - enable symlink-based upload deduplication

|

||||

* [file indexing](#file-indexing) - enable music search, upload-undo, and better dedup

|

||||

* [exclude-patterns](#exclude-patterns) - to save some time

|

||||

* [filesystem guards](#filesystem-guards) - avoid traversing into other filesystems

|

||||

* [periodic rescan](#periodic-rescan) - filesystem monitoring

|

||||

@@ -73,14 +82,21 @@ turn almost any device into a file server with resumable uploads/downloads using

|

||||

* [event hooks](#event-hooks) - trigger a program on uploads, renames etc ([examples](./bin/hooks/))

|

||||

* [upload events](#upload-events) - the older, more powerful approach ([examples](./bin/mtag/))

|

||||

* [handlers](#handlers) - redefine behavior with plugins ([examples](./bin/handlers/))

|

||||

* [ip auth](#ip-auth) - autologin based on IP range (CIDR)

|

||||

* [identity providers](#identity-providers) - replace copyparty passwords with oauth and such

|

||||

* [user-changeable passwords](#user-changeable-passwords) - if permitted, users can change their own passwords

|

||||

* [using the cloud as storage](#using-the-cloud-as-storage) - connecting to an aws s3 bucket and similar

|

||||

* [hiding from google](#hiding-from-google) - tell search engines you dont wanna be indexed

|

||||

* [hiding from google](#hiding-from-google) - tell search engines you don't wanna be indexed

|

||||

* [themes](#themes)

|

||||

* [complete examples](#complete-examples)

|

||||

* [listen on port 80 and 443](#listen-on-port-80-and-443) - become a *real* webserver

|

||||

* [reverse-proxy](#reverse-proxy) - running copyparty next to other websites

|

||||

* [real-ip](#real-ip) - teaching copyparty how to see client IPs

|

||||

* [reverse-proxy performance](#reverse-proxy-performance)

|

||||

* [prometheus](#prometheus) - metrics/stats can be enabled

|

||||

* [other extremely specific features](#other-extremely-specific-features) - you'll never find a use for these

|

||||

* [custom mimetypes](#custom-mimetypes) - change the association of a file extension

|

||||

* [feature chickenbits](#feature-chickenbits) - buggy feature? rip it out

|

||||

* [packages](#packages) - the party might be closer than you think

|

||||

* [arch package](#arch-package) - now [available on aur](https://aur.archlinux.org/packages/copyparty) maintained by [@icxes](https://github.com/icxes)

|

||||

* [fedora package](#fedora-package) - does not exist yet

|

||||

@@ -103,13 +119,15 @@ turn almost any device into a file server with resumable uploads/downloads using

|

||||

* [https](#https) - both HTTP and HTTPS are accepted

|

||||

* [recovering from crashes](#recovering-from-crashes)

|

||||

* [client crashes](#client-crashes)

|

||||

* [frefox wsod](#frefox-wsod) - firefox 87 can crash during uploads

|

||||

* [firefox wsod](#firefox-wsod) - firefox 87 can crash during uploads

|

||||

* [HTTP API](#HTTP-API) - see [devnotes](./docs/devnotes.md#http-api)

|

||||

* [dependencies](#dependencies) - mandatory deps

|

||||

* [optional dependencies](#optional-dependencies) - install these to enable bonus features

|

||||

* [dependency chickenbits](#dependency-chickenbits) - prevent loading an optional dependency

|

||||

* [optional gpl stuff](#optional-gpl-stuff)

|

||||

* [sfx](#sfx) - the self-contained "binary"

|

||||

* [sfx](#sfx) - the self-contained "binary" (recommended!)

|

||||

* [copyparty.exe](#copypartyexe) - download [copyparty.exe](https://github.com/9001/copyparty/releases/latest/download/copyparty.exe) (win8+) or [copyparty32.exe](https://github.com/9001/copyparty/releases/latest/download/copyparty32.exe) (win7+)

|

||||

* [zipapp](#zipapp) - another emergency alternative, [copyparty.pyz](https://github.com/9001/copyparty/releases/latest/download/copyparty.pyz)

|

||||

* [install on android](#install-on-android)

|

||||

* [reporting bugs](#reporting-bugs) - ideas for context to include, and where to submit them

|

||||

* [devnotes](#devnotes) - for build instructions etc, see [./docs/devnotes.md](./docs/devnotes.md)

|

||||

@@ -119,10 +137,12 @@ turn almost any device into a file server with resumable uploads/downloads using

|

||||

|

||||

just run **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** -- that's it! 🎉

|

||||

|

||||

* or install through pypi: `python3 -m pip install --user -U copyparty`

|

||||

* or install through [pypi](https://pypi.org/project/copyparty/): `python3 -m pip install --user -U copyparty`

|

||||

* or if you cannot install python, you can use [copyparty.exe](#copypartyexe) instead

|

||||

* or install [on arch](#arch-package) ╱ [on NixOS](#nixos-module) ╱ [through nix](#nix-package)

|

||||

* or if you are on android, [install copyparty in termux](#install-on-android)

|

||||

* or maybe you have a [synology nas / dsm](./docs/synology-dsm.md)

|

||||

* or if your computer is messed up and nothing else works, [try the pyz](#zipapp)

|

||||

* or if you prefer to [use docker](./scripts/docker/) 🐋 you can do that too

|

||||

* docker has all deps built-in, so skip this step:

|

||||

|

||||

@@ -188,7 +208,7 @@ firewall-cmd --reload

|

||||

also see [comparison to similar software](./docs/versus.md)

|

||||

|

||||

* backend stuff

|

||||

* ☑ IPv6

|

||||

* ☑ IPv6 + unix-sockets

|

||||

* ☑ [multiprocessing](#performance) (actual multithreading)

|

||||

* ☑ volumes (mountpoints)

|

||||

* ☑ [accounts](#accounts-and-volumes)

|

||||

@@ -203,7 +223,7 @@ also see [comparison to similar software](./docs/versus.md)

|

||||

* upload

|

||||

* ☑ basic: plain multipart, ie6 support

|

||||

* ☑ [up2k](#uploading): js, resumable, multithreaded

|

||||

* unaffected by cloudflare's max-upload-size (100 MiB)

|

||||

* **no filesize limit!** even on Cloudflare

|

||||

* ☑ stash: simple PUT filedropper

|

||||

* ☑ filename randomizer

|

||||

* ☑ write-only folders

|

||||

@@ -219,6 +239,7 @@ also see [comparison to similar software](./docs/versus.md)

|

||||

* ☑ [navpane](#navpane) (directory tree sidebar)

|

||||

* ☑ file manager (cut/paste, delete, [batch-rename](#batch-rename))

|

||||

* ☑ audio player (with [OS media controls](https://user-images.githubusercontent.com/241032/215347492-b4250797-6c90-4e09-9a4c-721edf2fb15c.png) and opus/mp3 transcoding)

|

||||

* ☑ play video files as audio (converted on server)

|

||||

* ☑ image gallery with webm player

|

||||

* ☑ textfile browser with syntax hilighting

|

||||

* ☑ [thumbnails](#thumbnails)

|

||||

@@ -226,6 +247,7 @@ also see [comparison to similar software](./docs/versus.md)

|

||||

* ☑ ...of videos using FFmpeg

|

||||

* ☑ ...of audio (spectrograms) using FFmpeg

|

||||

* ☑ cache eviction (max-age; maybe max-size eventually)

|

||||

* ☑ multilingual UI (english, norwegian, chinese, [add your own](./docs/rice/#translations)))

|

||||

* ☑ SPA (browse while uploading)

|

||||

* server indexing

|

||||

* ☑ [locate files by contents](#file-search)

|

||||

@@ -234,9 +256,11 @@ also see [comparison to similar software](./docs/versus.md)

|

||||

* client support

|

||||

* ☑ [folder sync](#folder-sync)

|

||||

* ☑ [curl-friendly](https://user-images.githubusercontent.com/241032/215322619-ea5fd606-3654-40ad-94ee-2bc058647bb2.png)

|

||||

* ☑ [opengraph](#opengraph) (discord embeds)

|

||||

* markdown

|

||||

* ☑ [viewer](#markdown-viewer)

|

||||

* ☑ editor (sure why not)

|

||||

* ☑ [variables](#markdown-vars)

|

||||

|

||||

PS: something missing? post any crazy ideas you've got as a [feature request](https://github.com/9001/copyparty/issues/new?assignees=9001&labels=enhancement&template=feature_request.md) or [discussion](https://github.com/9001/copyparty/discussions/new?category=ideas) 🤙

|

||||

|

||||

@@ -318,6 +342,9 @@ same order here too

|

||||

|

||||

* [Chrome issue 1352210](https://bugs.chromium.org/p/chromium/issues/detail?id=1352210) -- plaintext http may be faster at filehashing than https (but also extremely CPU-intensive)

|

||||

|

||||

* [Chrome issue 383568268](https://issues.chromium.org/issues/383568268) -- filereaders in webworkers can OOM / crash the browser-tab

|

||||

* copyparty has a workaround which seems to work well enough

|

||||

|

||||

* [Firefox issue 1790500](https://bugzilla.mozilla.org/show_bug.cgi?id=1790500) -- entire browser can crash after uploading ~4000 small files

|

||||

|

||||

* Android: music playback randomly stops due to [battery usage settings](#fix-unreliable-playback-on-android)

|

||||

@@ -406,8 +433,8 @@ configuring accounts/volumes with arguments:

|

||||

`-v .::r,usr1,usr2:rw,usr3,usr4` = usr1/2 read-only, 3/4 read-write

|

||||

|

||||

permissions:

|

||||

* `r` (read): browse folder contents, download files, download as zip/tar

|

||||

* `w` (write): upload files, move files *into* this folder

|

||||

* `r` (read): browse folder contents, download files, download as zip/tar, see filekeys/dirkeys

|

||||

* `w` (write): upload files, move/copy files *into* this folder

|

||||

* `m` (move): move files/folders *from* this folder

|

||||

* `d` (delete): delete files/folders

|

||||

* `.` (dots): user can ask to show dotfiles in directory listings

|

||||
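To make the `-v` syntax in this hunk concrete, here is a minimal sketch of how a spec such as `.::r,usr1,usr2:rw,usr3,usr4` could be split into per-user permissions. The function name `parse_volume` and the returned dictionary layout are hypothetical and only for illustration; this is not copyparty's own parser, and it assumes the source path contains no `:` (so it would not handle Windows drive letters).

```python
def parse_volume(spec):
    """Split 'src:dst:perms,user,user:perms,user,...' into its parts.

    Hypothetical helper for illustration only; not copyparty's parser.
    Assumes neither path contains a ':' character.
    """
    src, dst, *grants = spec.split(":")
    access = {}
    for grant in grants:
        # the first comma-separated field is the permission letters,
        # the remaining fields are the usernames receiving them
        perms, *users = grant.split(",")
        for user in users:
            access.setdefault(user, set()).update(perms)
    return {"src": src, "dst": dst, "access": access}


vol = parse_volume(".::r,usr1,usr2:rw,usr3,usr4")
for user in sorted(vol["access"]):
    print(user, "".join(sorted(vol["access"][user])))
```

With the example spec, usr1 and usr2 end up with `r` only while usr3 and usr4 get both `r` and `w`, which matches the "usr1/2 read-only, 3/4 read-write" description in the hunk.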

@@ -487,7 +514,8 @@ the browser has the following hotkeys (always qwerty)

|

||||

* `ESC` close various things

|

||||

* `ctrl-K` delete selected files/folders

|

||||

* `ctrl-X` cut selected files/folders

|

||||

* `ctrl-V` paste

|

||||

* `ctrl-C` copy selected files/folders to clipboard

|

||||

* `ctrl-V` paste (move/copy)

|

||||

* `Y` download selected files

|

||||

* `F2` [rename](#batch-rename) selected file/folder

|

||||

* when a file/folder is selected (in not-grid-view):

|

||||

@@ -556,6 +584,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path (

|

||||

|

||||

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails

|

||||

* can be made default globally with `--grid` or per-volume with volflag `grid`

|

||||

* enable by adding `?imgs` to a link, or disable with `?imgs=0`

|

||||

|

||||

|

||||

|

||||

@@ -563,16 +592,15 @@ it does static images with Pillow / pyvips / FFmpeg, and uses FFmpeg for video f

|

||||

* pyvips is 3x faster than Pillow, Pillow is 3x faster than FFmpeg

|

||||

* disable thumbnails for specific volumes with volflag `dthumb` for all, or `dvthumb` / `dathumb` / `dithumb` for video/audio/images only

|

||||

|

||||

audio files are covnerted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

|

||||

audio files are converted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

|

||||

|

||||

images with the following names (see `--th-covers`) become the thumbnail of the folder they're in: `folder.png`, `folder.jpg`, `cover.png`, `cover.jpg`

|

||||

* the order is significant, so if both `cover.png` and `folder.jpg` exist in a folder, it will pick the first matching `--th-covers` entry (`folder.jpg`)

|

||||

* and, if you enable [file indexing](#file-indexing), it will also try those names as dotfiles (`.folder.jpg` and so), and then fallback on the first picture in the folder (if it has any pictures at all)

|

||||

|

||||
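The ordering rule for folder covers described above can be sketched roughly as follows. The list mirrors the four names quoted in the hunk; the function name `pick_cover` is hypothetical, and the dotfile and first-picture fallbacks that depend on file indexing are left out. This is an illustration of the priority order, not the server's actual code.

```python
# priority order as quoted in the README text; earlier entries win
COVERS = ["folder.png", "folder.jpg", "cover.png", "cover.jpg"]


def pick_cover(filenames, covers=COVERS):
    """Return the first entry of `covers` that exists in the folder.

    Hypothetical helper; the dotfile / first-picture fallbacks
    mentioned in the README are intentionally omitted.
    """
    present = set(filenames)
    for candidate in covers:
        if candidate in present:
            return candidate
    return None


print(pick_cover(["cover.png", "folder.jpg", "song.flac"]))
```

Because the loop walks the priority list rather than the directory listing, `folder.jpg` is chosen over `cover.png` regardless of the order in which the files appear in the folder.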

in the grid/thumbnail view, if the audio player panel is open, songs will start playing when clicked

|

||||

* indicated by the audio files having the ▶ icon instead of 💾

|

||||

|

||||

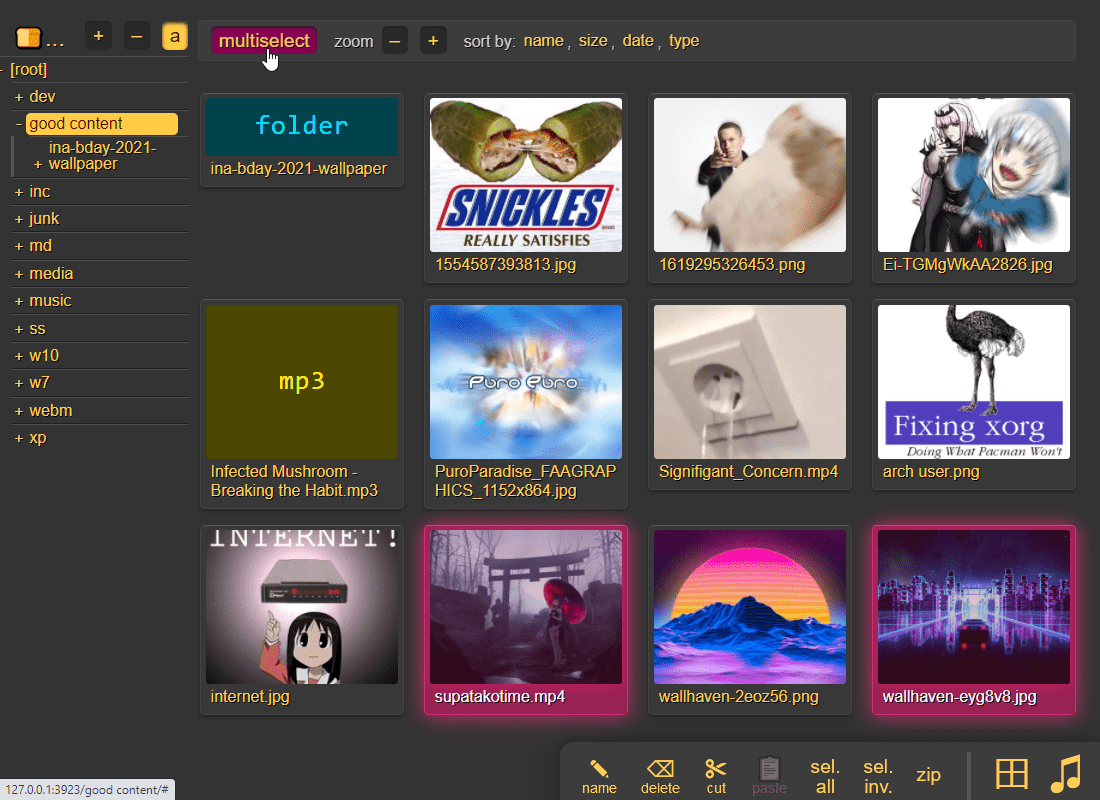

enabling `multiselect` lets you click files to select them, and then shift-click another file for range-select

|

||||

* `multiselect` is mostly intended for phones/tablets, but the `sel` option in the `[⚙️] settings` tab is better suited for desktop use, allowing selection by CTRL-clicking and range-selection with SHIFT-click, all without affecting regular clicking

|

||||

* the `sel` option can be made default globally with `--gsel` or per-volume with volflag `gsel`

|

||||

|

||||

|

||||

## zip downloads

|

||||

@@ -608,15 +636,21 @@ you can also zip a selection of files or folders by clicking them in the browser

|

||||

|

||||

cool trick: download a folder by appending url-params `?tar&opus` or `?tar&mp3` to transcode all audio files (except aac|m4a|mp3|ogg|opus|wma) to opus/mp3 before they're added to the archive

|

||||

* super useful if you're 5 minutes away from takeoff and realize you don't have any music on your phone but your server only has flac files and downloading those will burn through all your data + there wouldn't be enough time anyways

|

||||

* and url-params `&j` / `&w` produce jpeg/webm thumbnails/spectrograms instead of the original audio/video/images

|

||||

* and url-params `&j` / `&w` produce jpeg/webm thumbnails/spectrograms instead of the original audio/video/images (`&p` for audio waveforms)

|

||||

* can also be used to pregenerate thumbnails; combine with `--th-maxage=9999999` or `--th-clean=0`

|

||||

|

||||
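As a rough sketch of the url-params mentioned in this hunk, the snippet below assembles a download link for a folder. The helper name `archive_url` and the example host are hypothetical; only the flags themselves (`tar`, `opus`, `mp3`, `j`, `w`, `p`) come from the README text, and the sketch assumes the folder URL has no existing query string.

```python
def archive_url(folder_url, audio=None, thumbs=None):
    """Build a '?tar' download link for a folder.

    audio:  'opus' or 'mp3' to request transcoding of audio files
    thumbs: 'j', 'w' or 'p' to request thumbnails/waveforms instead
    Hypothetical helper; assumes folder_url has no query string yet.
    """
    flags = ["tar"]
    if audio:
        flags.append(audio)
    if thumbs:
        flags.append(thumbs)
    return folder_url + "?" + "&".join(flags)


# example.com is a placeholder host, not a real server
print(archive_url("http://example.com/music/", audio="opus"))
print(archive_url("http://example.com/pics/", thumbs="j"))
```

The resulting link can then be fetched with any HTTP client, for example a browser or curl.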

|

||||

## uploading

|

||||

|

||||

drag files/folders into the web-browser to upload (or use the [command-line uploader](https://github.com/9001/copyparty/tree/hovudstraum/bin#u2cpy))

|

||||

drag files/folders into the web-browser to upload

|

||||

|

||||

this initiates an upload using `up2k`; there are two uploaders available:

|

||||

dragdrop is the recommended way, but you may also:

|

||||

|

||||

* select some files (not folders) in your file explorer and press CTRL-V inside the browser window

|

||||

* use the [command-line uploader](https://github.com/9001/copyparty/tree/hovudstraum/bin#u2cpy)

|

||||

* upload using [curl, sharex, ishare, ...](#client-examples)

|

||||

|

||||

when uploading files through dragdrop or CTRL-V, this initiates an upload using `up2k`; there are two browser-based uploaders available:

|

||||

* `[🎈] bup`, the basic uploader, supports almost every browser since netscape 4.0

|

||||

* `[🚀] up2k`, the good / fancy one

|

||||

|

||||

@@ -629,6 +663,7 @@ up2k has several advantages:

|

||||

* uploads resume if you reboot your browser or pc, just upload the same files again

|

||||

* server detects any corruption; the client reuploads affected chunks

|

||||

* the client doesn't upload anything that already exists on the server

|

||||

* no filesize limit, even when a proxy limits the request size (for example Cloudflare)

|

||||

* much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections

|

||||

* the last-modified timestamp of the file is preserved

|

||||

|

||||

@@ -643,7 +678,7 @@ see [up2k](./docs/devnotes.md#up2k) for details on how it works, or watch a [dem

|

||||

|

||||

**protip:** if you enable `favicon` in the `[⚙️] settings` tab (by typing something into the textbox), the icon in the browser tab will indicate upload progress -- also, the `[🔔]` and/or `[🔊]` switches enable visible and/or audible notifications on upload completion

|

||||

|

||||

the up2k UI is the epitome of polished inutitive experiences:

|

||||

the up2k UI is the epitome of polished intuitive experiences:

|

||||

* "parallel uploads" specifies how many chunks to upload at the same time

|

||||

* `[🏃]` analysis of other files should continue while one is uploading

|

||||

* `[🥔]` shows a simpler UI for faster uploads from slow devices

|

||||

@@ -664,6 +699,8 @@ note that since up2k has to read each file twice, `[🎈] bup` can *theoreticall

|

||||

|

||||

if you are resuming a massive upload and want to skip hashing the files which already finished, you can enable `turbo` in the `[⚙️] config` tab, but please read the tooltip on that button

|

||||

|

||||

if the server is behind a proxy which imposes a request-size limit, you can configure up2k to sneak below the limit with server-option `--u2sz` (the default is 96 MiB to support Cloudflare)

|

||||

|

||||

|

||||

### file-search

|

||||

|

||||

@@ -683,7 +720,7 @@ files go into `[ok]` if they exist (and you get a link to where it is), otherwis

|

||||

|

||||

### unpost

|

||||

|

||||

undo/delete accidental uploads

|

||||

undo/delete accidental uploads using the `[🧯]` tab in the UI

|

||||

|

||||

|

||||

|

||||

@@ -692,11 +729,11 @@ you can unpost even if you don't have regular move/delete access, however only f

|

||||

|

||||

### self-destruct

|

||||

|

||||

uploads can be given a lifetime, afer which they expire / self-destruct

|

||||

uploads can be given a lifetime, after which they expire / self-destruct

|

||||

|

||||

the feature must be enabled per-volume with the `lifetime` [upload rule](#upload-rules) which sets the upper limit for how long a file gets to stay on the server

|

||||

|

||||

clients can specify a shorter expiration time using the [up2k ui](#uploading) -- the relevant options become visible upon navigating into a folder with `lifetimes` enabled -- or by using the `life` [upload modifier](#write)

|

||||

clients can specify a shorter expiration time using the [up2k ui](#uploading) -- the relevant options become visible upon navigating into a folder with `lifetimes` enabled -- or by using the `life` [upload modifier](./docs/devnotes.md#write)

|

||||

|

||||

specifying a custom expiration time client-side will affect the timespan in which unposts are permitted, so keep an eye on the estimates in the up2k ui

|

||||

|

||||

@@ -708,11 +745,18 @@ download files while they're still uploading ([demo video](http://a.ocv.me/pub/g

|

||||

requires the file to be uploaded using up2k (which is the default drag-and-drop uploader), alternatively the command-line program

|

||||

|

||||

|

||||

### incoming files

|

||||

|

||||

the control-panel shows the ETA for all incoming files, but only for files being uploaded into volumes where you have read-access

|

||||

|

||||

|

||||

|

||||

|

||||

## file manager

|

||||

|

||||

cut/paste, rename, and delete files/folders (if you have permission)

|

||||

|

||||

file selection: click somewhere on the line (not the link itsef), then:

|

||||

file selection: click somewhere on the line (not the link itself), then:

|

||||

* `space` to toggle

|

||||

* `up/down` to move

|

||||

* `shift-up/down` to move-and-select

|

||||

@@ -720,10 +764,48 @@ file selection: click somewhere on the line (not the link itsef), then:

|

||||

* shift-click another line for range-select

|

||||

|

||||

* cut: select some files and `ctrl-x`

|

||||

* copy: select some files and `ctrl-c`

|

||||

* paste: `ctrl-v` in another folder

|

||||

* rename: `F2`

|

||||

|

||||

you can move files across browser tabs (cut in one tab, paste in another)

|

||||

you can copy/move files across browser tabs (cut/copy in one tab, paste in another)

|

||||

|

||||

|

||||

## shares

|

||||

|

||||

share a file or folder by creating a temporary link

|

||||

|

||||

when enabled in the server settings (`--shr`), click the bottom-right `share` button to share the folder you're currently in, or alternatively:

|

||||

* select a folder first to share that folder instead

|

||||

* select one or more files to share only those files

|

||||

|

||||

this feature was made with [identity providers](#identity-providers) in mind -- configure your reverseproxy to skip the IdP's access-control for a given URL prefix and use that to safely share specific files/folders sans the usual auth checks

|

||||

|

||||

when creating a share, the creator can choose any of the following options:

|

||||

|

||||

* password-protection

|

||||

* expire after a certain time; `0` or blank means infinite

|

||||

* allow visitors to upload (if the user who creates the share has write-access)

|

||||

|

||||

semi-intentional limitations:

|

||||

|

||||

* cleanup of expired shares only works when global option `e2d` is set, and/or at least one volume on the server has volflag `e2d`

|

||||

* only folders from the same volume are shared; if you are sharing a folder which contains other volumes, then the contents of those volumes will not be available

|

||||

* related to [IdP volumes being forgotten on shutdown](https://github.com/9001/copyparty/blob/hovudstraum/docs/idp.md#idp-volumes-are-forgotten-on-shutdown), any shares pointing into a user's IdP volume will be unavailable until that user makes their first request after a restart

|

||||

* no option to "delete after first access" because tricky

|

||||

* when linking something to discord (for example) it'll get accessed by their scraper and that would count as a hit

|

||||

* browsers wouldn't be able to resume a broken download unless the requester's IP gets allowlisted for X minutes (ref. tricky)

|

||||

|

||||

specify `--shr /foobar` to enable this feature; a toplevel virtual folder named `foobar` is then created, and that's where all the shares will be served from

|

||||

|

||||

* you can name it whatever, `foobar` is just an example

|

||||

* if you're using config files, put `shr: /foobar` inside the `[global]` section instead

|

||||

|

||||

users can delete their own shares in the controlpanel, and a list of privileged users (`--shr-adm`) are allowed to see and/or delete any share on the server

|

||||

|

||||

after a share has expired, it remains visible in the controlpanel for `--shr-rt` minutes (default is 1 day), and the owner can revive it by extending the expiration time there

|

||||

|

||||

**security note:** using this feature does not mean that you can skip the [accounts and volumes](#accounts-and-volumes) section -- you still need to restrict access to volumes that you do not intend to share with unauthenticated users! it is not sufficient to use rules in the reverseproxy to restrict access to just the `/share` folder.

|

||||

|

||||

|

||||

## batch rename

|

||||

@@ -773,6 +855,41 @@ or a mix of both:

|

||||

the metadata keys you can use in the format field are the ones in the file-browser table header (whatever is collected with `-mte` and `-mtp`)

|

||||

|

||||

|

||||

## rss feeds

|

||||

|

||||

monitor a folder with your RSS reader, optionally recursive

|

||||

|

||||

must be enabled per-volume with volflag `rss` or globally with `--rss`

|

||||

|

||||

the feed includes itunes metadata for use with podcast readers such as [AntennaPod](https://antennapod.org/)

|

||||

|

||||

a feed example: https://cd.ocv.me/a/d2/d22/?rss&fext=mp3

|

||||

|

||||

url parameters:

|

||||

|

||||

* `pw=hunter2` for password auth

|

||||

* `recursive` to also include subfolders

|

||||

* `title=foo` changes the feed title (default: folder name)

|

||||

* `fext=mp3,opus` only include mp3 and opus files (default: all)

|

||||

* `nf=30` only show the first 30 results (default: 250)

|

||||

* `sort=m` sort by mtime (file last-modified), newest first (default)

|

||||

* `u` = upload-time; NOTE: non-uploaded files have upload-time `0`

|

||||

* `n` = filename

|

||||

* `a` = filesize

|

||||

* uppercase = reverse-sort; `M` = oldest file first
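
the url parameters above can be combined freely; as a rough sketch (not part of copyparty), here is a small python helper which glues them onto a folder URL. the function name `rss_url` and the `127.0.0.1` folder are made up for illustration, and only the parameters listed above are used:

```python
from urllib.parse import quote


def rss_url(folder_url, recursive=False, **params):
    """Build a feed URL from a folder URL and the url parameters listed above."""
    # `rss` and `recursive` are bare flags without a value
    parts = ["rss"]
    if recursive:
        parts.append("recursive")
    # everything else is key=value; keep commas readable for lists like fext=mp3,opus
    for key, value in params.items():
        parts.append(f"{key}={quote(str(value), safe=',')}")
    return folder_url.rstrip("/") + "/?" + "&".join(parts)


# same shape as the feed example above
print(rss_url("https://cd.ocv.me/a/d2/d22/", fext="mp3"))

# hypothetical local server: oldest files first, at most 30 entries, including subfolders
print(rss_url("http://127.0.0.1:3923/music", recursive=True, fext="mp3,opus", nf=30, sort="M"))
```

if the volume requires a password, `pw=...` can be passed the same way, but keep in mind that the password then ends up in the feed URL stored by your RSS reader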

|

||||

|

||||

|

||||

## recent uploads

|

||||

|

||||

list all recent uploads by clicking "show recent uploads" in the controlpanel

|

||||

|

||||

will show uploader IP and upload-time if the visitor has the admin permission

|

||||

|

||||

* global-option `--ups-when` makes upload-time visible to all users, and not just admins

|

||||

|

||||

note that the [🧯 unpost](#unpost) feature is better suited for viewing *your own* recent uploads, as it includes the option to undo/delete them

|

||||

|

||||

|

||||

## media player

|

||||

|

||||

plays almost every audio format there is (if the server has FFmpeg installed for on-demand transcoding)

|

||||

@@ -783,6 +900,7 @@ some hilights:

|

||||

* OS integration; control playback from your phone's lockscreen ([windows](https://user-images.githubusercontent.com/241032/233213022-298a98ba-721a-4cf1-a3d4-f62634bc53d5.png) // [iOS](https://user-images.githubusercontent.com/241032/142711926-0700be6c-3e31-47b3-9928-53722221f722.png) // [android](https://user-images.githubusercontent.com/241032/233212311-a7368590-08c7-4f9f-a1af-48ccf3f36fad.png))

|

||||

* shows the audio waveform in the seekbar

|

||||

* not perfectly gapless but can get really close (see settings + eq below); good enough to enjoy gapless albums as intended

|

||||

* videos can be played as audio, without wasting bandwidth on the video

|

||||

|

||||

click the `play` link next to an audio file, or copy the link target to [share it](https://a.ocv.me/pub/demo/music/Ubiktune%20-%20SOUNDSHOCK%202%20-%20FM%20FUNK%20TERRROR!!/#af-1fbfba61&t=18) (optionally with a timestamp to start playing from, like that example does)

|

||||

|

||||

@@ -843,6 +961,13 @@ other notes,

|

||||

* the document preview has a max-width which is the same as an A4 paper when printed

|

||||

|

||||

|

||||

### markdown vars

|

||||

|

||||

dynamic docs with serverside variable expansion to replace stuff like `{{self.ip}}` with the client's IP, or `{{srv.htime}}` with the current time on the server

|

||||

|

||||

see [./srv/expand/](./srv/expand/) for usage and examples

|

||||

|

||||

|

||||

## other tricks

|

||||

|

||||

* you can link a particular timestamp in an audio file by adding it to the URL, such as `&20` / `&20s` / `&1m20` / `&t=1:20` after the `.../#af-c8960dab`

|

||||

@@ -857,8 +982,12 @@ other notes,

|

||||

|

||||

* files named `.prologue.html` / `.epilogue.html` will be rendered before/after directory listings unless `--no-logues`

|

||||

|

||||

* files named `descript.ion` / `DESCRIPT.ION` are parsed and displayed in the file listing, or as the epilogue if nonstandard

|

||||

|

||||

* files named `README.md` / `readme.md` will be rendered after directory listings unless `--no-readme` (but `.epilogue.html` takes precedence)

|

||||

|

||||

* and `PREADME.md` / `preadme.md` is shown above directory listings unless `--no-readme` or `.prologue.html`

|

||||

|

||||

* `README.md` and `*logue.html` can contain placeholder values which are replaced server-side before embedding into directory listings; see `--help-exp`

|

||||

|

||||

|

||||

@@ -891,6 +1020,8 @@ using arguments or config files, or a mix of both:

|

||||

|

||||

**NB:** as humongous as this readme is, there are also a lot of undocumented features. Run copyparty with `--help` to see all available global options; all of those can be used in the `[global]` section of config files, and everything listed in `--help-flags` can be used in volumes as volflags.

|

||||

* if running in docker/podman, try this: `docker run --rm -it copyparty/ac --help`

|

||||

* or see this (probably outdated): https://ocv.me/copyparty/helptext.html

|

||||

* or if you prefer plaintext, https://ocv.me/copyparty/helptext.txt

|

||||

|

||||

|

||||

## zeroconf

|

||||

@@ -908,7 +1039,11 @@ uses [multicast dns](https://en.wikipedia.org/wiki/Multicast_DNS) to give copypa

|

||||

|

||||

all enabled services ([webdav](#webdav-server), [ftp](#ftp-server), [smb](#smb-server)) will appear in mDNS-aware file managers (KDE, gnome, macOS, ...)

|

||||

|

||||

the domain will be http://partybox.local if the machine's hostname is `partybox` unless `--name` specifies soemthing else

|

||||

the domain will be `partybox.local` if the machine's hostname is `partybox` unless `--name` specifies something else

|

||||

|

||||

and the web-UI will be available at http://partybox.local:3923/

|

||||

|

||||

* if you want to get rid of the `:3923` so you can use http://partybox.local/ instead then see [listen on port 80 and 443](#listen-on-port-80-and-443)

|

||||

|

||||

|

||||

### ssdp

|

||||

@@ -934,7 +1069,7 @@ print a qr-code [(screenshot)](https://user-images.githubusercontent.com/241032/

|

||||

* `--qrz 1` forces 1x zoom instead of autoscaling to fit the terminal size

|

||||

* 1x may render incorrectly on some terminals/fonts, but 2x should always work

|

||||

|

||||

it uses the server hostname if [mdns](#mdns) is enbled, otherwise it'll use your external ip (default route) unless `--qri` specifies a specific ip-prefix or domain

|

||||

it uses the server hostname if [mdns](#mdns) is enabled, otherwise it'll use your external ip (default route) unless `--qri` specifies a specific ip-prefix or domain

|

||||

|

||||

|

||||

## ftp server

|

||||

@@ -959,7 +1094,7 @@ some recommended FTP / FTPS clients; `wark` = example password:

|

||||

|

||||

## webdav server

|

||||

|

||||

with read-write support, supports winXP and later, macos, nautilus/gvfs

|

||||

with read-write support, supports winXP and later, macos, nautilus/gvfs ... a great way to [access copyparty straight from the file explorer in your OS](#mount-as-drive)

|

||||

|

||||

click the [connect](http://127.0.0.1:3923/?hc) button in the control-panel to see connection instructions for windows, linux, macos

|

||||

|

||||

@@ -971,6 +1106,8 @@ on macos, connect from finder:

|

||||

|

||||

in order to grant full write-access to webdav clients, the volflag `daw` must be set and the account must also have delete-access (otherwise the client won't be allowed to replace the contents of existing files, which is how webdav works)

|

||||

|

||||

> note: if you have enabled [IdP authentication](#identity-providers) then that may cause issues for some/most webdav clients; see [the webdav section in the IdP docs](https://github.com/9001/copyparty/blob/hovudstraum/docs/idp.md#connecting-webdav-clients)

|

||||

|

||||

|

||||

### connecting to webdav from windows

|

||||

|

||||

@@ -979,11 +1116,12 @@ using the GUI (winXP or later):

|

||||

* on winXP only, click the `Sign up for online storage` hyperlink instead and put the URL there

|

||||

* providing your password as the username is recommended; the password field can be anything or empty

|

||||

|

||||

known client bugs:

|

||||

the webdav client that's built into windows has the following list of bugs; you can avoid all of these by connecting with rclone instead:

|

||||

* win7+ doesn't actually send the password to the server when reauthenticating after a reboot unless you first try to login with an incorrect password and then switch to the correct password

|

||||

* or just type your password into the username field instead to get around it entirely

|

||||

* connecting to a folder which allows anonymous read will make writing impossible, as windows has decided it doesn't need to login

|

||||

* workaround: connect twice; first to a folder which requires auth, then to the folder you actually want, and leave both of those mounted

|

||||

* or set the server-option `--dav-auth` to force password-auth for all webdav clients

|

||||

* win7+ may open a new tcp connection for every file and sometimes forgets to close them, eventually needing a reboot

|

||||

* maybe NIC-related (??), happens with win10-ltsc on e1000e but not virtio

|

||||

* windows cannot access folders which contain filenames with invalid unicode or forbidden characters (`<>:"/\|?*`), or names ending with `.`

|

||||

@@ -1036,12 +1174,12 @@ some **BIG WARNINGS** specific to SMB/CIFS, in decreasing importance:

|

||||

* [shadowing](#shadowing) probably works as expected but no guarantees

|

||||

|

||||

and some minor issues,

|

||||

* clients only see the first ~400 files in big folders; [impacket#1433](https://github.com/SecureAuthCorp/impacket/issues/1433)

|

||||

* clients only see the first ~400 files in big folders;

|

||||

* this was originally due to [impacket#1433](https://github.com/SecureAuthCorp/impacket/issues/1433) which was fixed in impacket-0.12, so you can disable the workaround with `--smb-nwa-1` but then you get unacceptably poor performance instead

|

||||

* hot-reload of server config (`/?reload=cfg`) does not include the `[global]` section (commandline args)

|

||||

* listens on the first IPv4 `-i` interface only (default = :: = 0.0.0.0 = all)

|

||||

* login doesn't work on winxp, but anonymous access is ok -- remove all accounts from copyparty config for that to work

|

||||

* win10 onwards does not allow connecting anonymously / without accounts

|

||||

* on windows, creating a new file through rightclick --> new --> textfile throws an error due to impacket limitations -- hit OK and F5 to get your file

|

||||

* python3 only

|

||||

* slow (the builtin webdav support in windows is 5x faster, and rclone-webdav is 30x faster)

|

||||

|

||||

@@ -1063,16 +1201,65 @@ authenticate with one of the following:

|

||||

tweaking the ui

|

||||

|

||||

* set default sort order globally with `--sort` or per-volume with the `sort` volflag; specify one or more comma-separated columns to sort by, and prefix the column name with `-` for reverse sort

|

||||

* the column names you can use are visible as tooltips when hovering over the column headers in the directory listing, for example `href ext sz ts tags/.up_at tags/Cirle tags/.tn tags/Artist tags/Title`

|

||||

* to sort in music order (album, track, artist, title) with filename as fallback, you could `--sort tags/Cirle,tags/.tn,tags/Artist,tags/Title,href`

|

||||

* the column names you can use are visible as tooltips when hovering over the column headers in the directory listing, for example `href ext sz ts tags/.up_at tags/Circle tags/.tn tags/Artist tags/Title`

|

||||

* to sort in music order (album, track, artist, title) with filename as fallback, you could `--sort tags/Circle,tags/.tn,tags/Artist,tags/Title,href`

|

||||

* to sort by upload date, first enable showing the upload date in the listing with `-e2d -mte +.up_at` and then `--sort tags/.up_at`

|

||||

|

||||

see [./docs/rice](./docs/rice) for more

|

||||

see [./docs/rice](./docs/rice) for more, including how to add stuff (css/`<meta>`/...) to the html `<head>` tag, or to add your own translation

|

||||

|

||||

|

||||

## opengraph

|

||||

|

||||

discord and social-media embeds

|

||||

|

||||

can be enabled globally with `--og` or per-volume with volflag `og`

|

||||

|

||||

note that this disables hotlinking because the opengraph spec demands it; to sneak past this intentional limitation, you can enable opengraph selectively by user-agent, for example `--og-ua '(Discord|Twitter|Slack)bot'` (or volflag `og_ua`)
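
to get a feel for which clients the example `--og-ua` pattern would select, here is a small python sketch. it assumes the pattern is applied as an unanchored regex search against the `User-Agent` header (which is an assumption about copyparty's behavior, not something stated above), and the user-agent strings are just typical examples:

```python
import re

# the pattern from the --og-ua example above
og_ua = re.compile(r"(Discord|Twitter|Slack)bot")

# illustrative user-agent strings; real ones vary between versions
user_agents = {
    "discord": "Mozilla/5.0 (compatible; Discordbot/2.0; +https://discordapp.com)",
    "twitter": "Twitterbot/1.0",
    "firefox": "Mozilla/5.0 (X11; Linux x86_64; rv:128.0) Gecko/20100101 Firefox/128.0",
}

# True = would get the opengraph response, False = regular response (hotlinking still works)
gets_opengraph = {name: bool(og_ua.search(ua)) for name, ua in user_agents.items()}
print(gets_opengraph)
```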

|

||||

|

||||

you can also hotlink files regardless by appending `?raw` to the url

|

||||

|

||||

if you want to entirely replace the copyparty response with your own jinja2 template, give the template filepath to `--og-tpl` or volflag `og_tpl` (all members of `HttpCli` are available through the `this` object)

|

||||

|

||||

|

||||

## file deduplication

|

||||

|

||||

enable symlink-based upload deduplication globally with `--dedup` or per-volume with volflag `dedup`

|

||||

|

||||

by default, when someone tries to upload a file that already exists on the server, the upload will be politely declined, and the server will copy the existing file over to where the upload would have gone

|

||||

|

||||

if you enable deduplication with `--dedup` then it'll create a symlink instead of a full copy, thus reducing disk space usage

|

||||

|

||||

* on the contrary, if your server is hooked up to s3-glacier or similar storage where reading is expensive, and you cannot use `--safe-dedup=1` because you have other software tampering with your files, so you want to entirely disable detection of duplicate data instead, then you can specify `--no-clone` globally or `noclone` as a volflag

|

||||

|

||||

**warning:** when enabling dedup, you should also:

|

||||

* enable indexing with `-e2dsa` or volflag `e2dsa` (see [file indexing](#file-indexing) section below); strongly recommended

|

||||

* ...and/or `--hardlink-only` to use hardlink-based deduplication instead of symlinks; see explanation below

|

||||

|

||||

it will not be safe to rename/delete files if you only enable dedup and none of the above; if you enable indexing then it is not *necessary* to also do hardlinks (but you may still want to)

|

||||

|

||||

by default, deduplication is done based on symlinks (symbolic links); these are tiny files which are pointers to the nearest full copy of the file

|

||||

|

||||

you can choose to use hardlinks instead of softlinks, globally with `--hardlink-only` or volflag `hardlinkonly`;

|

||||

|

||||

advantages of using hardlinks:

|

||||

* hardlinks are more compatible with other software; they behave entirely like regular files

|

||||

* you can safely move and rename files using other file managers

|

||||

* symlinks need to be managed by copyparty to ensure the destinations remain correct

|

||||

|

||||

advantages of using symlinks (default):

|

||||

* each symlink can have its own last-modified timestamp, but a single timestamp is shared by all hardlinks

|

||||

* symlinks make it more obvious to other software that the file is not a regular file, so this can be less dangerous

|

||||

* hardlinks look like regular files, so other software may assume they are safe to edit without affecting the other copies

|

||||

|

||||

**warning:** if you edit the contents of a deduplicated file, then you will also edit all other copies of that file! This is especially surprising with hardlinks, because they look like regular files, but that same file exists in multiple locations
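
the difference between the two link types can be seen with a few lines of python; this is only a filesystem-level sketch (it does not involve copyparty at all), the filenames are made up, and it assumes an OS and filesystem where both hardlinks and symlinks are available:

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as td:
    orig = os.path.join(td, "orig.txt")
    hard = os.path.join(td, "hard.txt")
    soft = os.path.join(td, "soft.txt")

    with open(orig, "w") as f:
        f.write("v1")

    os.link(orig, hard)     # hardlink: a second name for the same data
    os.symlink(orig, soft)  # symlink: a tiny pointer to the original path

    # editing the hardlink in place also edits the "other copy"
    with open(hard, "w") as f:
        f.write("v2")
    with open(orig) as f:
        content_after_edit = f.read()

    # both names refer to the same inode, so they also share one timestamp
    same_inode = os.stat(orig).st_ino == os.stat(hard).st_ino
    os.utime(hard, (1, 1))
    shared_mtime = os.stat(orig).st_mtime == 1

    # only the symlink is recognizable as "not a regular file"
    soft_is_link = os.path.islink(soft)
    hard_is_link = os.path.islink(hard)

print(content_after_edit, same_inode, shared_mtime, soft_is_link, hard_is_link)
```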

|

||||

|

||||

global-option `--xlink` / volflag `xlink` additionally enables deduplication across volumes, but this is probably buggy and not recommended

|

||||

|

||||

|

||||

|

||||

## file indexing

|

||||

|

||||

enables dedup and music search ++

|

||||

enable music search, upload-undo, and better dedup

|

||||

|

||||

file indexing relies on two database tables, the up2k filetree (`-e2d`) and the metadata tags (`-e2t`), stored in `.hist/up2k.db`. Configuration can be done through arguments, volflags, or a mix of both.

|

||||

|

||||

@@ -1083,10 +1270,9 @@ through arguments:

|

||||

* `-e2t` enables metadata indexing on upload

|

||||

* `-e2ts` also scans for tags in all files that don't have tags yet

|

||||

* `-e2tsr` also deletes all existing tags, doing a full reindex

|

||||

* `-e2v` verfies file integrity at startup, comparing hashes from the db

|

||||

* `-e2v` verifies file integrity at startup, comparing hashes from the db

|

||||

* `-e2vu` patches the database with the new hashes from the filesystem

|

||||

* `-e2vp` panics and kills copyparty instead

|

||||

* `--xlink` enables deduplication across volumes

|

||||

|

||||

the same arguments can be set as volflags, in addition to `d2d`, `d2ds`, `d2t`, `d2ts`, `d2v` for disabling:

|

||||

* `-v ~/music::r:c,e2ds,e2tsr` does a full reindex of everything on startup

|

||||

@@ -1099,11 +1285,10 @@ note:

|

||||

* upload-times can be displayed in the file listing by enabling the `.up_at` metadata key, either globally with `-e2d -mte +.up_at` or per-volume with volflags `e2d,mte=+.up_at` (will have a ~17% performance impact on directory listings)

|

||||

* `e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and `e2ts` would then reindex those, unless there is a new copyparty version with new parsers and the release note says otherwise

|

||||

* the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher

|

||||

* deduplication is possible on windows if you run copyparty as administrator (not saying you should!)

|

||||

|

||||

### exclude-patterns

|

||||

|

||||

to save some time, you can provide a regex pattern for filepaths to only index by filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash \.iso$` or the volflag `:c,nohash=\.iso$`, this has the following consequences:

|

||||

to save some time, you can provide a regex pattern for filepaths to only index by filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash '\.iso$'` or the volflag `:c,nohash=\.iso$`, this has the following consequences:

|

||||

* initial indexing is way faster, especially when the volume is on a network disk

|

||||

* makes it impossible to [file-search](#file-search)

|

||||

* if someone uploads the same file contents, the upload will not be detected as a dupe, so it will not get symlinked or rejected

|

||||

@@ -1114,6 +1299,8 @@ similarly, you can fully ignore files/folders using `--no-idx [...]` and `:c,noi

|

||||

|

||||

if you set `--no-hash [...]` globally, you can enable hashing for specific volumes using flag `:c,nohash=`

|

||||

|

||||

to exclude certain filepaths from search-results, use `--srch-excl` or volflag `srch_excl` instead of `--no-idx`, for example `--srch-excl 'password|logs/[0-9]'`
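
before putting a pattern into the server config, it can be handy to try it against a few paths; the sketch below does that in python for the two example patterns above. it assumes unanchored search semantics (like `re.search`), and the filepaths are invented for the example:

```python
import re

# the example patterns from above
no_hash = re.compile(r"\.iso$")
srch_excl = re.compile(r"password|logs/[0-9]")

# made-up filepaths to test against
paths = [
    "linux/debian.iso",
    "music/track.flac",
    "logs/2024/app.txt",
    "docs/passwords.txt",
    "logs/readme.txt",
]

# would be indexed without hashing the file contents
skip_hashing = [p for p in paths if no_hash.search(p)]

# would be left out of search results
hidden = [p for p in paths if srch_excl.search(p)]

print(skip_hashing)
print(hidden)
```

note that `logs/readme.txt` is not hidden, since the second alternative only matches when `logs/` is followed by a digit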

|

||||

|

||||

### filesystem guards

|

||||

|

||||

avoid traversing into other filesystems using `--xdev` / volflag `:c,xdev`, skipping any symlinks or bind-mounts to another HDD for example

|

||||

@@ -1268,6 +1455,8 @@ you can set hooks before and/or after an event happens, and currently you can ho

|

||||

|

||||

there's a bunch of flags and stuff, see `--help-hooks`

|

||||

|

||||

if you want to write your own hooks, see [devnotes](./docs/devnotes.md#event-hooks)

|

||||

|

||||

|

||||

### upload events

|

||||

|

||||

@@ -1296,18 +1485,59 @@ redefine behavior with plugins ([examples](./bin/handlers/))

|

||||

replace 404 and 403 errors with something completely different (that's it for now)

|

||||

|

||||

|

||||

## ip auth

|

||||

|

||||

autologin based on IP range (CIDR), using the global-option `--ipu`

|

||||

|

||||

for example, if everyone with an IP that starts with `192.168.123` should automatically log in as the user `spartacus`, then you can either specify `--ipu=192.168.123.0/24=spartacus` as a commandline option, or put this in a config file:

|

||||

|

||||

```yaml

|

||||

[global]

|

||||

ipu: 192.168.123.0/24=spartacus

|

||||

```

|

||||

|

||||

repeat the option to map additional subnets

|

||||

|

||||

**be careful with this one!** if you have a reverseproxy, then you definitely want to make sure you have [real-ip](#real-ip) configured correctly, and it's probably a good idea to nullmap the reverseproxy's IP just in case; so if your reverseproxy is sending requests from `172.24.27.9` then that would be `--ipu=172.24.27.9/32=`
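
to check which addresses a CIDR range covers before trusting it for autologin, python's `ipaddress` module is enough. the sketch below mirrors the two `--ipu` examples above; it is not copyparty's implementation, the function name is hypothetical, and picking the first matching rule is an assumption made for this illustration:

```python
import ipaddress

# mirrors the --ipu examples above; an empty username stands for the nullmap
ipu = [
    ("172.24.27.9/32", ""),
    ("192.168.123.0/24", "spartacus"),
]


def autologin_user(client_ip, rules=ipu):
    """Return the username a client IP would map to, or None if there is no autologin."""
    ip = ipaddress.ip_address(client_ip)
    for cidr, user in rules:
        if ip in ipaddress.ip_network(cidr):
            return user or None
    return None


print(autologin_user("192.168.123.45"))  # inside the /24
print(autologin_user("192.168.124.1"))   # outside the /24
print(autologin_user("172.24.27.9"))     # the reverseproxy itself, nullmapped
```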

|

||||

|

||||

|

||||

## identity providers

|

||||

|

||||

replace copyparty passwords with oauth and such

|

||||

|

||||

you can disable the built-in password-based login sysem, and instead replace it with a separate piece of software (an identity provider) which will then handle authenticating / authorizing of users; this makes it possible to login with passkeys / fido2 / webauthn / yubikey / ldap / active directory / oauth / many other single-sign-on contraptions

|

||||

you can disable the built-in password-based login system, and instead replace it with a separate piece of software (an identity provider) which will then handle authenticating / authorizing of users; this makes it possible to login with passkeys / fido2 / webauthn / yubikey / ldap / active directory / oauth / many other single-sign-on contraptions

|

||||

|

||||

a popular choice is [Authelia](https://www.authelia.com/) (config-file based), another one is [authentik](https://goauthentik.io/) (GUI-based, more complex)

|

||||

* the regular config-defined users will be used as a fallback for requests which don't include a valid (trusted) IdP username header

|

||||

|

||||

some popular identity providers are [Authelia](https://www.authelia.com/) (config-file based) and [authentik](https://goauthentik.io/) (GUI-based, more complex)

|

||||

|

||||

there is a [docker-compose example](./docs/examples/docker/idp-authelia-traefik) which is hopefully a good starting point (alternatively see [./docs/idp.md](./docs/idp.md) if you're the DIY type)

|

||||

|

||||

a more complete example of the copyparty configuration options [looks like this](./docs/examples/docker/idp/copyparty.conf)

|

||||

|

||||

but if you just want to let users change their own passwords, then you probably want [user-changeable passwords](#user-changeable-passwords) instead

|

||||

|

||||

|

||||

## user-changeable passwords

|

||||

|

||||

if permitted, users can change their own passwords in the control-panel

|

||||

|

||||

* not compatible with [identity providers](#identity-providers)

|

||||

|

||||

* must be enabled with `--chpw` because account-sharing is a popular usecase

|

||||

|

||||

* if you want to enable the feature but deny password-changing for a specific list of accounts, you can do that with `--chpw-no name1,name2,name3,...`

|

||||

|

||||

* to perform a password reset, edit the server config and give the user another password there, then do a [config reload](#server-config) or server restart

|

||||

|

||||

* the custom passwords are kept in a textfile at filesystem-path `--chpw-db`, by default `chpw.json` in the copyparty config folder

|

||||

|

||||

* if you run multiple copyparty instances with different users you *almost definitely* want to specify separate DBs for each instance

|

||||

|

||||

* if [password hashing](#password-hashing) is enabled, the passwords in the db are also hashed

|

||||

|

||||

* ...which means that all user-defined passwords will be forgotten if you change password-hashing settings

|

||||

|

||||

|

||||

## using the cloud as storage

|

||||

|

||||

@@ -1324,7 +1554,7 @@ you may improve performance by specifying larger values for `--iobuf` / `--s-rd-

|

||||

|

||||

## hiding from google

|

||||

|

||||

tell search engines you dont wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

|

||||

tell search engines you don't wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

|

||||

|

||||

* `--no-robots` adds HTTP (`X-Robots-Tag`) and HTML (`<meta>`) headers with `noindex, nofollow` globally

|

||||

* volflag `[...]:c,norobots` does the same thing for that single volume

|

||||

@@ -1399,6 +1629,33 @@ if you want to change the fonts, see [./docs/rice/](./docs/rice/)

|

||||

`-lo log/cpp-%Y-%m%d-%H%M%S.txt.xz`

|

||||

|

||||

|

||||

## listen on port 80 and 443

|

||||

|

||||