Compare commits

118 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | d3ccacccb1 | | |
| | df386c8fbc | | |
| | 4d15dd6e17 | | |
| | 56a0499636 | | |
| | 10fc4768e8 | | |
| | 2b63d7d10d | | |
| | 1f177528c1 | | |
| | fc3bbb70a3 | | |
| | ce3cab0295 | | |
| | c784e5285e | | |
| | 2bf9055cae | | |
| | 8aba5aed4f | | |
| | 0ce7cf5e10 | | |
| | 96edcbccd7 | | |
| | 4603afb6de | | |
| | 56317b00af | | |
| | cacec9c1f3 | | |
| | 44ee07f0b2 | | |
| | 6a8d5e1731 | | |
| | d9962f65b3 | | |
| | 119e88d87b | | |
| | 71d9e010d9 | | |
| | 5718caa957 | | |
| | efd8a32ed6 | | |
| | b22d700e16 | | |
| | ccdacea0c4 | | |
| | 4bdcbc1cb5 | | |
| | 833c6cf2ec | | |
| | dd6dbdd90a | | |
| | 63013cc565 | | |
| | 912402364a | | |
| | 159f51b12b | | |
| | 7678a91b0e | | |
| | b13899c63d | | |
| | 3a0d882c5e | | |
| | cb81f0ad6d | | |
| | 518bacf628 | | |
| | ca63b03e55 | | |
| | cecef88d6b | | |
| | 7ffd805a03 | | |
| | a7e2a0c981 | | |
| | 2a570bb4ca | | |
| | 5ca8f0706d | | |
| | a9b4436cdc | | |
| | 5f91999512 | | |
| | 9f000beeaf | | |
| | ff0a71f212 | | |
| | 22dfc6ec24 | | |
| | 48147c079e | | |
| | d715479ef6 | | |
| | fc8298c468 | | |
| | e94ca5dc91 | | |
| | 114b71b751 | | |
| | b2770a2087 | | |
| | cba1878bb2 | | |
| | a2e037d6af | | |
| | 65a2b6a223 | | |
| | 9ed799e803 | | |
| | c1c0ecca13 | | |
| | ee62836383 | | |
| | 705f598b1a | | |
| | 414de88925 | | |
| | 53ffd245dd | | |
| | cf1b756206 | | |
| | 22b58e31ef | | |
| | b7f9bf5a28 | | |
| | aba680b6c2 | | |
| | fabada95f6 | | |
| | 9ccd8bb3ea | | |
| | 1d68acf8f0 | | |
| | 1e7697b551 | | |
| | 4a4ec88d00 | | |
| | 6adc778d62 | | |
| | 6b7ebdb7e9 | | |
| | 3d7facd774 | | |
| | eaee1f2cab | | |
| | ff012221ae | | |
| | c398553748 | | |
| | 3ccbcf6185 | | |
| | f0abc0ef59 | | |
| | a99fa3375d | | |
| | 22c7e09b3f | | |
| | 0dfe1d5b35 | | |
| | a99a3bc6d7 | | |
| | 9804f25de3 | | |
| | ae98200660 | | |
| | e45420646f | | |
| | 21be82ef8b | | |
| | 001afe00cb | | |
| | 19a5985f29 | | |
| | 2715ee6c61 | | |
| | dc157fa28f | | |
| | 1ff14b4e05 | | |
| | 480ac254ab | | |
| | 4b95db81aa | | |
| | c81e898435 | | |
| | f1646b96ca | | |
| | 44f2b63e43 | | |
| | 847a2bdc85 | | |
| | 03f0f99469 | | |
| | 3900e66158 | | |
| | 3dff6cda40 | | |
| | 73d05095b5 | | |
| | fcdc1728eb | | |
| | 8b942ea237 | | |
| | 88a1c5ca5d | | |
| | 047176b297 | | |
| | dc4d0d8e71 | | |
| | b9c5c7bbde | | |
| | 9daeed923f | | |
| | 66b260cea9 | | |
| | 58cf01c2ad | | |
| | d866841c19 | | |
| | a462a644fb | | |
| | 678675a9a6 | | |
| | de9069ef1d | | |
| | c0c0a1a83a | | |
| | 1d004b6dbd | | |

1

.gitignore

vendored

@@ -30,6 +30,7 @@ copyparty/res/COPYING.txt

|

||||

copyparty/web/deps/

|

||||

srv/

|

||||

scripts/docker/i/

|

||||

scripts/deps-docker/uncomment.py

|

||||

contrib/package/arch/pkg/

|

||||

contrib/package/arch/src/

|

||||

|

||||

|

||||

157

README.md

@@ -47,6 +47,7 @@ turn almost any device into a file server with resumable uploads/downloads using

|

||||

* [file manager](#file-manager) - cut/paste, rename, and delete files/folders (if you have permission)

|

||||

* [shares](#shares) - share a file or folder by creating a temporary link

|

||||

* [batch rename](#batch-rename) - select some files and press `F2` to bring up the rename UI

|

||||

* [rss feeds](#rss-feeds) - monitor a folder with your RSS reader

|

||||

* [media player](#media-player) - plays almost every audio format there is

|

||||

* [audio equalizer](#audio-equalizer) - and [dynamic range compressor](https://en.wikipedia.org/wiki/Dynamic_range_compression)

|

||||

* [fix unreliable playback on android](#fix-unreliable-playback-on-android) - due to phone / app settings

|

||||

@@ -80,12 +81,14 @@ turn almost any device into a file server with resumable uploads/downloads using

|

||||

* [event hooks](#event-hooks) - trigger a program on uploads, renames etc ([examples](./bin/hooks/))

|

||||

* [upload events](#upload-events) - the older, more powerful approach ([examples](./bin/mtag/))

|

||||

* [handlers](#handlers) - redefine behavior with plugins ([examples](./bin/handlers/))

|

||||

* [ip auth](#ip-auth) - autologin based on IP range (CIDR)

|

||||

* [identity providers](#identity-providers) - replace copyparty passwords with oauth and such

|

||||

* [user-changeable passwords](#user-changeable-passwords) - if permitted, users can change their own passwords

|

||||

* [using the cloud as storage](#using-the-cloud-as-storage) - connecting to an aws s3 bucket and similar

|

||||

* [hiding from google](#hiding-from-google) - tell search engines you dont wanna be indexed

|

||||

* [hiding from google](#hiding-from-google) - tell search engines you don't wanna be indexed

|

||||

* [themes](#themes)

|

||||

* [complete examples](#complete-examples)

|

||||

* [listen on port 80 and 443](#listen-on-port-80-and-443) - become a *real* webserver

|

||||

* [reverse-proxy](#reverse-proxy) - running copyparty next to other websites

|

||||

* [real-ip](#real-ip) - teaching copyparty how to see client IPs

|

||||

* [prometheus](#prometheus) - metrics/stats can be enabled

|

||||

@@ -114,7 +117,7 @@ turn almost any device into a file server with resumable uploads/downloads using

|

||||

* [https](#https) - both HTTP and HTTPS are accepted

|

||||

* [recovering from crashes](#recovering-from-crashes)

|

||||

* [client crashes](#client-crashes)

|

||||

* [frefox wsod](#frefox-wsod) - firefox 87 can crash during uploads

|

||||

* [firefox wsod](#firefox-wsod) - firefox 87 can crash during uploads

|

||||

* [HTTP API](#HTTP-API) - see [devnotes](./docs/devnotes.md#http-api)

|

||||

* [dependencies](#dependencies) - mandatory deps

|

||||

* [optional dependencies](#optional-dependencies) - install these to enable bonus features

|

||||

@@ -217,7 +220,7 @@ also see [comparison to similar software](./docs/versus.md)

|

||||

* upload

|

||||

* ☑ basic: plain multipart, ie6 support

|

||||

* ☑ [up2k](#uploading): js, resumable, multithreaded

|

||||

* **no filesize limit!** ...unless you use Cloudflare, then it's 383.9 GiB

|

||||

* **no filesize limit!** even on Cloudflare

|

||||

* ☑ stash: simple PUT filedropper

|

||||

* ☑ filename randomizer

|

||||

* ☑ write-only folders

|

||||

@@ -425,7 +428,7 @@ configuring accounts/volumes with arguments:

|

||||

|

||||

permissions:

|

||||

* `r` (read): browse folder contents, download files, download as zip/tar, see filekeys/dirkeys

|

||||

* `w` (write): upload files, move files *into* this folder

|

||||

* `w` (write): upload files, move/copy files *into* this folder

|

||||

* `m` (move): move files/folders *from* this folder

|

||||

* `d` (delete): delete files/folders

|

||||

* `.` (dots): user can ask to show dotfiles in directory listings

|

||||

@@ -505,7 +508,8 @@ the browser has the following hotkeys (always qwerty)

|

||||

* `ESC` close various things

|

||||

* `ctrl-K` delete selected files/folders

|

||||

* `ctrl-X` cut selected files/folders

|

||||

* `ctrl-V` paste

|

||||

* `ctrl-C` copy selected files/folders to clipboard

|

||||

* `ctrl-V` paste (move/copy)

|

||||

* `Y` download selected files

|

||||

* `F2` [rename](#batch-rename) selected file/folder

|

||||

* when a file/folder is selected (in not-grid-view):

|

||||

@@ -574,6 +578,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path (

|

||||

|

||||

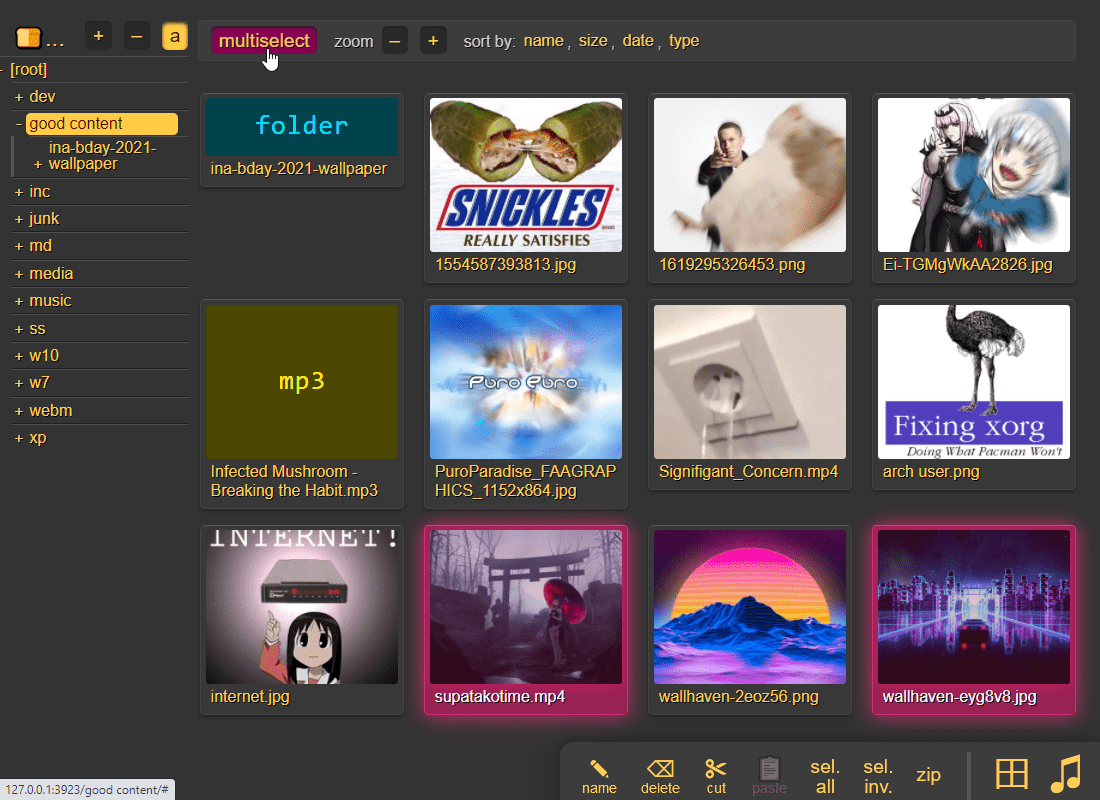

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails

|

||||

* can be made default globally with `--grid` or per-volume with volflag `grid`

|

||||

* enable by adding `?imgs` to a link, or disable with `?imgs=0`

|

||||

|

||||

|

||||

|

||||

@@ -581,7 +586,7 @@ it does static images with Pillow / pyvips / FFmpeg, and uses FFmpeg for video f

|

||||

* pyvips is 3x faster than Pillow, Pillow is 3x faster than FFmpeg

|

||||

* disable thumbnails for specific volumes with volflag `dthumb` for all, or `dvthumb` / `dathumb` / `dithumb` for video/audio/images only

|

||||

|

||||

audio files are covnerted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

|

||||

audio files are converted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

|

||||

|

||||

images with the following names (see `--th-covers`) become the thumbnail of the folder they're in: `folder.png`, `folder.jpg`, `cover.png`, `cover.jpg`

|

||||

* the order is significant, so if both `cover.png` and `folder.jpg` exist in a folder, it will pick the first matching `--th-covers` entry (`folder.jpg`)
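
the "first matching `--th-covers` entry wins" rule can be illustrated with a small sketch. This is not copyparty's actual code: `pick_cover` is a hypothetical helper, the list is just the four names mentioned above, and exact (case-sensitive) filename matching is an assumption.

```python
# Hypothetical illustration of the cover-selection order; not copyparty's implementation.
# The list mirrors the names quoted above, in the order they are given.
TH_COVERS = ["folder.png", "folder.jpg", "cover.png", "cover.jpg"]


def pick_cover(filenames, covers=TH_COVERS):
    """Return the first entry of `covers` that is present in `filenames`, else None."""
    present = set(filenames)
    for name in covers:  # iterate in priority order, not in directory order
        if name in present:
            return name
    return None


# both cover.png and folder.jpg exist; folder.jpg comes earlier in the priority list
print(pick_cover(["cover.png", "folder.jpg", "track01.flac"]))
```

the point of the design is that the priority list, not the directory listing, decides the winner.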

|

||||

@@ -652,7 +657,7 @@ up2k has several advantages:

|

||||

* uploads resume if you reboot your browser or pc, just upload the same files again

|

||||

* server detects any corruption; the client reuploads affected chunks

|

||||

* the client doesn't upload anything that already exists on the server

|

||||

* no filesize limit unless imposed by a proxy, for example Cloudflare, which blocks uploads over 383.9 GiB

|

||||

* no filesize limit, even when a proxy limits the request size (for example Cloudflare)

|

||||

* much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections

|

||||

* the last-modified timestamp of the file is preserved

|

||||

|

||||

@@ -667,7 +672,7 @@ see [up2k](./docs/devnotes.md#up2k) for details on how it works, or watch a [dem

|

||||

|

||||

**protip:** if you enable `favicon` in the `[⚙️] settings` tab (by typing something into the textbox), the icon in the browser tab will indicate upload progress -- also, the `[🔔]` and/or `[🔊]` switches enable visible and/or audible notifications on upload completion

|

||||

|

||||

the up2k UI is the epitome of polished inutitive experiences:

|

||||

the up2k UI is the epitome of polished intuitive experiences:

|

||||

* "parallel uploads" specifies how many chunks to upload at the same time

|

||||

* `[🏃]` analysis of other files should continue while one is uploading

|

||||

* `[🥔]` shows a simpler UI for faster uploads from slow devices

|

||||

@@ -688,6 +693,8 @@ note that since up2k has to read each file twice, `[🎈] bup` can *theoreticall

|

||||

|

||||

if you are resuming a massive upload and want to skip hashing the files which already finished, you can enable `turbo` in the `[⚙️] config` tab, but please read the tooltip on that button

|

||||

|

||||

if the server is behind a proxy which imposes a request-size limit, you can configure up2k to sneak below the limit with server-option `--u2sz` (the default is 96 MiB to support Cloudflare)

|

||||

|

||||

|

||||

### file-search

|

||||

|

||||

@@ -716,7 +723,7 @@ you can unpost even if you don't have regular move/delete access, however only f

|

||||

|

||||

### self-destruct

|

||||

|

||||

uploads can be given a lifetime, afer which they expire / self-destruct

|

||||

uploads can be given a lifetime, after which they expire / self-destruct

|

||||

|

||||

the feature must be enabled per-volume with the `lifetime` [upload rule](#upload-rules) which sets the upper limit for how long a file gets to stay on the server

|

||||

|

||||

@@ -743,7 +750,7 @@ the control-panel shows the ETA for all incoming files , but only for files bei

|

||||

|

||||

cut/paste, rename, and delete files/folders (if you have permission)

|

||||

|

||||

file selection: click somewhere on the line (not the link itsef), then:

|

||||

file selection: click somewhere on the line (not the link itself), then:

|

||||

* `space` to toggle

|

||||

* `up/down` to move

|

||||

* `shift-up/down` to move-and-select

|

||||

@@ -751,10 +758,11 @@ file selection: click somewhere on the line (not the link itsef), then:

|

||||

* shift-click another line for range-select

|

||||

|

||||

* cut: select some files and `ctrl-x`

|

||||

* copy: select some files and `ctrl-c`

|

||||

* paste: `ctrl-v` in another folder

|

||||

* rename: `F2`

|

||||

|

||||

you can move files across browser tabs (cut in one tab, paste in another)

|

||||

you can copy/move files across browser tabs (cut/copy in one tab, paste in another)

|

||||

|

||||

|

||||

## shares

|

||||

@@ -777,6 +785,7 @@ semi-intentional limitations:

|

||||

|

||||

* cleanup of expired shares only works when global option `e2d` is set, and/or at least one volume on the server has volflag `e2d`

|

||||

* only folders from the same volume are shared; if you are sharing a folder which contains other volumes, then the contents of those volumes will not be available

|

||||

* related to [IdP volumes being forgotten on shutdown](https://github.com/9001/copyparty/blob/hovudstraum/docs/idp.md#idp-volumes-are-forgotten-on-shutdown), any shares pointing into a user's IdP volume will be unavailable until that user makes their first request after a restart

|

||||

* no option to "delete after first access" because tricky

|

||||

* when linking something to discord (for example) it'll get accessed by their scraper and that would count as a hit

|

||||

* browsers wouldn't be able to resume a broken download unless the requester's IP gets allowlisted for X minutes (ref. tricky)

|

||||

@@ -840,6 +849,30 @@ or a mix of both:

|

||||

the metadata keys you can use in the format field are the ones in the file-browser table header (whatever is collected with `-mte` and `-mtp`)

|

||||

|

||||

|

||||

## rss feeds

|

||||

|

||||

monitor a folder with your RSS reader, optionally recursive

|

||||

|

||||

must be enabled per-volume with volflag `rss` or globally with `--rss`

|

||||

|

||||

the feed includes itunes metadata for use with podcast readers such as [AntennaPod](https://antennapod.org/)

|

||||

|

||||

a feed example: https://cd.ocv.me/a/d2/d22/?rss&fext=mp3

|

||||

|

||||

url parameters:

|

||||

|

||||

* `pw=hunter2` for password auth

|

||||

* `recursive` to also include subfolders

|

||||

* `title=foo` changes the feed title (default: folder name)

|

||||

* `fext=mp3,opus` only include mp3 and opus files (default: all)

|

||||

* `nf=30` only show the first 30 results (default: 250)

|

||||

* `sort=m` sort by mtime (file last-modified), newest first (default)

|

||||

* `u` = upload-time; NOTE: non-uploaded files have upload-time `0`

|

||||

* `n` = filename

|

||||

* `a` = filesize

|

||||

* uppercase = reverse-sort; `M` = oldest file first
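
as a rough sketch of how the url parameters above combine, the following builds a feed link from a folder URL. `rss_url` is a hypothetical helper written for this example; only the parameter names (`rss`, `recursive`, `fext`, `nf`, `sort`, `title`, `pw`) come from the list above.

```python
from urllib.parse import urlencode


def rss_url(folder_url, recursive=False, **params):
    """Append ?rss plus optional feed parameters to a folder URL (illustrative helper)."""
    query = "rss"
    if recursive:
        query += "&recursive"  # flag-style parameter, no value
    if params:
        # safe="," keeps comma-separated lists such as fext=mp3,opus readable
        query += "&" + urlencode(params, safe=",")
    return folder_url + "?" + query


# reproduces the example feed link given above
print(rss_url("https://cd.ocv.me/a/d2/d22/", fext="mp3"))

# the 30 oldest mp3/opus files, including subfolders (uppercase sort key = reverse)
print(rss_url("https://cd.ocv.me/a/d2/d22/", recursive=True, fext="mp3,opus", nf=30, sort="M"))
```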

|

||||

|

||||

|

||||

## media player

|

||||

|

||||

plays almost every audio format there is (if the server has FFmpeg installed for on-demand transcoding)

|

||||

@@ -936,6 +969,8 @@ see [./srv/expand/](./srv/expand/) for usage and examples

|

||||

|

||||

* files named `README.md` / `readme.md` will be rendered after directory listings unless `--no-readme` (but `.epilogue.html` takes precedence)

|

||||

|

||||

* and `PREADME.md` / `preadme.md` is shown above directory listings unless `--no-readme` or `.prologue.html`

|

||||

|

||||

* `README.md` and `*logue.html` can contain placeholder values which are replaced server-side before embedding into directory listings; see `--help-exp`

|

||||

|

||||

|

||||

@@ -987,7 +1022,11 @@ uses [multicast dns](https://en.wikipedia.org/wiki/Multicast_DNS) to give copypa

|

||||

|

||||

all enabled services ([webdav](#webdav-server), [ftp](#ftp-server), [smb](#smb-server)) will appear in mDNS-aware file managers (KDE, gnome, macOS, ...)

|

||||

|

||||

the domain will be http://partybox.local if the machine's hostname is `partybox` unless `--name` specifies soemthing else

|

||||

the domain will be `partybox.local` if the machine's hostname is `partybox` unless `--name` specifies something else

|

||||

|

||||

and the web-UI will be available at http://partybox.local:3923/

|

||||

|

||||

* if you want to get rid of the `:3923` so you can use http://partybox.local/ instead then see [listen on port 80 and 443](#listen-on-port-80-and-443)

|

||||

|

||||

|

||||

### ssdp

|

||||

@@ -1013,7 +1052,7 @@ print a qr-code [(screenshot)](https://user-images.githubusercontent.com/241032/

|

||||

* `--qrz 1` forces 1x zoom instead of autoscaling to fit the terminal size

|

||||

* 1x may render incorrectly on some terminals/fonts, but 2x should always work

|

||||

|

||||

it uses the server hostname if [mdns](#mdns) is enbled, otherwise it'll use your external ip (default route) unless `--qri` specifies a specific ip-prefix or domain

|

||||

it uses the server hostname if [mdns](#mdns) is enabled, otherwise it'll use your external ip (default route) unless `--qri` specifies a specific ip-prefix or domain

|

||||

|

||||

|

||||

## ftp server

|

||||

@@ -1038,7 +1077,7 @@ some recommended FTP / FTPS clients; `wark` = example password:

|

||||

|

||||

## webdav server

|

||||

|

||||

with read-write support, supports winXP and later, macos, nautilus/gvfs ... a greay way to [access copyparty straight from the file explorer in your OS](#mount-as-drive)

|

||||

with read-write support, supports winXP and later, macos, nautilus/gvfs ... a great way to [access copyparty straight from the file explorer in your OS](#mount-as-drive)

|

||||

|

||||

click the [connect](http://127.0.0.1:3923/?hc) button in the control-panel to see connection instructions for windows, linux, macos

|

||||

|

||||

@@ -1115,12 +1154,12 @@ some **BIG WARNINGS** specific to SMB/CIFS, in decreasing importance:

|

||||

* [shadowing](#shadowing) probably works as expected but no guarantees

|

||||

|

||||

and some minor issues,

|

||||

* clients only see the first ~400 files in big folders; [impacket#1433](https://github.com/SecureAuthCorp/impacket/issues/1433)

|

||||

* clients only see the first ~400 files in big folders;

|

||||

* this was originally due to [impacket#1433](https://github.com/SecureAuthCorp/impacket/issues/1433) which was fixed in impacket-0.12, so you can disable the workaround with `--smb-nwa-1` but then you get unacceptably poor performance instead

|

||||

* hot-reload of server config (`/?reload=cfg`) does not include the `[global]` section (commandline args)

|

||||

* listens on the first IPv4 `-i` interface only (default = :: = 0.0.0.0 = all)

|

||||

* login doesn't work on winxp, but anonymous access is ok -- remove all accounts from copyparty config for that to work

|

||||

* win10 onwards does not allow connecting anonymously / without accounts

|

||||

* on windows, creating a new file through rightclick --> new --> textfile throws an error due to impacket limitations -- hit OK and F5 to get your file

|

||||

* python3 only

|

||||

* slow (the builtin webdav support in windows is 5x faster, and rclone-webdav is 30x faster)

|

||||

|

||||

@@ -1142,8 +1181,8 @@ authenticate with one of the following:

|

||||

tweaking the ui

|

||||

|

||||

* set default sort order globally with `--sort` or per-volume with the `sort` volflag; specify one or more comma-separated columns to sort by, and prefix the column name with `-` for reverse sort

|

||||

* the column names you can use are visible as tooltips when hovering over the column headers in the directory listing, for example `href ext sz ts tags/.up_at tags/Cirle tags/.tn tags/Artist tags/Title`

|

||||

* to sort in music order (album, track, artist, title) with filename as fallback, you could `--sort tags/Cirle,tags/.tn,tags/Artist,tags/Title,href`

|

||||

* the column names you can use are visible as tooltips when hovering over the column headers in the directory listing, for example `href ext sz ts tags/.up_at tags/Circle tags/.tn tags/Artist tags/Title`

|

||||

* to sort in music order (album, track, artist, title) with filename as fallback, you could `--sort tags/Circle,tags/.tn,tags/Artist,tags/Title,href`

|

||||

* to sort by upload date, first enable showing the upload date in the listing with `-e2d -mte +.up_at` and then `--sort tags/.up_at`

|

||||

|

||||

see [./docs/rice](./docs/rice) for more, including how to add stuff (css/`<meta>`/...) to the html `<head>` tag, or to add your own translation

|

||||

@@ -1159,8 +1198,6 @@ note that this disables hotlinking because the opengraph spec demands it; to sne

|

||||

|

||||

you can also hotlink files regardless by appending `?raw` to the url

|

||||

|

||||

NOTE: because discord (and maybe others) strip query args such as `?raw` in opengraph tags, any links which require a filekey or dirkey will not work

|

||||

|

||||

if you want to entirely replace the copyparty response with your own jinja2 template, give the template filepath to `--og-tpl` or volflag `og_tpl` (all members of `HttpCli` are available through the `this` object)

|

||||

|

||||

|

||||

@@ -1168,7 +1205,11 @@ if you want to entirely replace the copyparty response with your own jinja2 temp

|

||||

|

||||

enable symlink-based upload deduplication globally with `--dedup` or per-volume with volflag `dedup`

|

||||

|

||||

when someone tries to upload a file that already exists on the server, the upload will be politely declined and a symlink is created instead, pointing to the nearest copy on disk, thus reducinc disk space usage

|

||||

by default, when someone tries to upload a file that already exists on the server, the upload will be politely declined, and the server will copy the existing file over to where the upload would have gone

|

||||

|

||||

if you enable deduplication with `--dedup` then it'll create a symlink instead of a full copy, thus reducing disk space usage

|

||||

|

||||

* on the contrary, if your server is hooked up to s3-glacier or similar storage where reading is expensive, and you cannot use `--safe-dedup=1` because you have other software tampering with your files, so you want to entirely disable detection of duplicate data instead, then you can specify `--no-clone` globally or `noclone` as a volflag

|

||||

|

||||

**warning:** when enabling dedup, you should also:

|

||||

* enable indexing with `-e2dsa` or volflag `e2dsa` (see [file indexing](#file-indexing) section below); strongly recommended

|

||||

@@ -1209,7 +1250,7 @@ through arguments:

|

||||

* `-e2t` enables metadata indexing on upload

|

||||

* `-e2ts` also scans for tags in all files that don't have tags yet

|

||||

* `-e2tsr` also deletes all existing tags, doing a full reindex

|

||||

* `-e2v` verfies file integrity at startup, comparing hashes from the db

|

||||

* `-e2v` verifies file integrity at startup, comparing hashes from the db

|

||||

* `-e2vu` patches the database with the new hashes from the filesystem

|

||||

* `-e2vp` panics and kills copyparty instead

|

||||

|

||||

@@ -1422,11 +1463,27 @@ redefine behavior with plugins ([examples](./bin/handlers/))

|

||||

replace 404 and 403 errors with something completely different (that's it for now)

|

||||

|

||||

|

||||

## ip auth

|

||||

|

||||

autologin based on IP range (CIDR), using the global-option `--ipu`

|

||||

|

||||

for example, if everyone with an IP that starts with `192.168.123` should automatically log in as the user `spartacus`, then you can either specify `--ipu=192.168.123.0/24=spartacus` as a commandline option, or put this in a config file:

|

||||

|

||||

```yaml

|

||||

[global]

|

||||

ipu: 192.168.123.0/24=spartacus

|

||||

```

|

||||

|

||||

repeat the option to map additional subnets

|

||||

|

||||

**be careful with this one!** if you have a reverseproxy, then you definitely want to make sure you have [real-ip](#real-ip) configured correctly, and it's probably a good idea to nullmap the reverseproxy's IP just in case; so if your reverseproxy is sending requests from `172.24.27.9` then that would be `--ipu=172.24.27.9/32=`
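
to make the CIDR-to-user mapping and the nullmap idea concrete, here is a minimal sketch using python's `ipaddress` module. It only illustrates the concept: `parse_ipu` and `user_for_ip` are hypothetical names, and the "most specific subnet wins" rule is an assumption made for this example, not a description of how copyparty resolves overlapping `--ipu` entries.

```python
import ipaddress


def parse_ipu(rules):
    """Turn ["CIDR=user", ...] into (network, user) pairs; an empty user is a nullmap."""
    parsed = []
    for rule in rules:
        cidr, _, user = rule.partition("=")
        parsed.append((ipaddress.ip_network(cidr), user))
    return parsed


def user_for_ip(ip, parsed):
    """Return the mapped user ("" for a nullmap), or None if no subnet matches.

    Assumption for this sketch: the most specific (longest-prefix) subnet wins.
    """
    addr = ipaddress.ip_address(ip)
    hits = [(net.prefixlen, user) for net, user in parsed if addr in net]
    if not hits:
        return None
    return max(hits, key=lambda hit: hit[0])[1]


rules = parse_ipu(["192.168.123.0/24=spartacus", "172.24.27.9/32="])
print(user_for_ip("192.168.123.45", rules))  # inside the /24, so mapped to a user
print(repr(user_for_ip("172.24.27.9", rules)))  # the reverseproxy: nullmapped, no autologin
print(user_for_ip("10.0.0.1", rules))  # no matching subnet
```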

|

||||

|

||||

|

||||

## identity providers

|

||||

|

||||

replace copyparty passwords with oauth and such

|

||||

|

||||

you can disable the built-in password-based login sysem, and instead replace it with a separate piece of software (an identity provider) which will then handle authenticating / authorizing of users; this makes it possible to login with passkeys / fido2 / webauthn / yubikey / ldap / active directory / oauth / many other single-sign-on contraptions

|

||||

you can disable the built-in password-based login system, and instead replace it with a separate piece of software (an identity provider) which will then handle authenticating / authorizing of users; this makes it possible to login with passkeys / fido2 / webauthn / yubikey / ldap / active directory / oauth / many other single-sign-on contraptions

|

||||

|

||||

a popular choice is [Authelia](https://www.authelia.com/) (config-file based), another one is [authentik](https://goauthentik.io/) (GUI-based, more complex)

|

||||

|

||||

@@ -1453,7 +1510,7 @@ if permitted, users can change their own passwords in the control-panel

|

||||

|

||||

* if you run multiple copyparty instances with different users you *almost definitely* want to specify separate DBs for each instance

|

||||

|

||||

* if [password hashing](#password-hashing) is enbled, the passwords in the db are also hashed

|

||||

* if [password hashing](#password-hashing) is enabled, the passwords in the db are also hashed

|

||||

|

||||

* ...which means that all user-defined passwords will be forgotten if you change password-hashing settings

|

||||

|

||||

@@ -1473,7 +1530,7 @@ you may improve performance by specifying larger values for `--iobuf` / `--s-rd-

|

||||

|

||||

## hiding from google

|

||||

|

||||

tell search engines you dont wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

|

||||

tell search engines you don't wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

|

||||

|

||||

* `--no-robots` adds HTTP (`X-Robots-Tag`) and HTML (`<meta>`) headers with `noindex, nofollow` globally

|

||||

* volflag `[...]:c,norobots` does the same thing for that single volume

|

||||

@@ -1548,6 +1605,33 @@ if you want to change the fonts, see [./docs/rice/](./docs/rice/)

|

||||

`-lo log/cpp-%Y-%m%d-%H%M%S.txt.xz`

|

||||

|

||||

|

||||

## listen on port 80 and 443

|

||||

|

||||

become a *real* webserver which people can access by just going to your IP or domain without specifying a port

|

||||

|

||||

**if you're on windows,** then you just need to add the commandline argument `-p 80,443` and you're done! nice

|

||||

|

||||

**if you're on macos,** sorry, I don't know

|

||||

|

||||

**if you're on Linux,** you have the following 4 options:

|

||||

|

||||

* **option 1:** set up a [reverse-proxy](#reverse-proxy) -- this one makes a lot of sense if you're running on a proper headless server, because that way you get real HTTPS too

|

||||

|

||||

* **option 2:** NAT to port 3923 -- this is cumbersome since you'll need to do it every time you reboot, and the exact command may depend on your linux distribution:

|

||||

```bash

|

||||

iptables -t nat -A PREROUTING -p tcp --dport 80 -j REDIRECT --to-port 3923

|

||||

iptables -t nat -A PREROUTING -p tcp --dport 443 -j REDIRECT --to-port 3923

|

||||

```

|

||||

|

||||

* **option 3:** disable the [security policy](https://www.w3.org/Daemon/User/Installation/PrivilegedPorts.html) which prevents the use of 80 and 443; this is *probably* fine:

|

||||

```

|

||||

setcap CAP_NET_BIND_SERVICE=+eip $(realpath $(which python))

|

||||

python copyparty-sfx.py -p 80,443

|

||||

```

|

||||

|

||||

* **option 4:** run copyparty as root (please don't)

|

||||

|

||||

|

||||

## reverse-proxy

|

||||

|

||||

running copyparty next to other websites hosted on an existing webserver such as nginx, caddy, or apache

|

||||

@@ -1603,6 +1687,7 @@ scrape_configs:

|

||||

currently the following metrics are available,

|

||||

* `cpp_uptime_seconds` time since last copyparty restart

|

||||

* `cpp_boot_unixtime_seconds` same but as an absolute timestamp

|

||||

* `cpp_active_dl` number of active downloads

|

||||

* `cpp_http_conns` number of open http(s) connections

|

||||

* `cpp_http_reqs` number of http(s) requests handled

|

||||

* `cpp_sus_reqs` number of 403/422/malicious requests

|

||||

@@ -1852,6 +1937,9 @@ quick summary of more eccentric web-browsers trying to view a directory index:

|

||||

| **ie4** and **netscape** 4.0 | can browse, upload with `?b=u`, auth with `&pw=wark` |

|

||||

| **ncsa mosaic** 2.7 | does not get a pass, [pic1](https://user-images.githubusercontent.com/241032/174189227-ae816026-cf6f-4be5-a26e-1b3b072c1b2f.png) - [pic2](https://user-images.githubusercontent.com/241032/174189225-5651c059-5152-46e9-ac26-7e98e497901b.png) |

|

||||

| **SerenityOS** (7e98457) | hits a page fault, works with `?b=u`, file upload not-impl |

|

||||

| **nintendo 3ds** | can browse, upload, view thumbnails (thx bnjmn) |

|

||||

|

||||

<p align="center"><img src="https://github.com/user-attachments/assets/88deab3d-6cad-4017-8841-2f041472b853" /></p>

|

||||

|

||||

|

||||

# client examples

|

||||

@@ -1885,6 +1973,7 @@ interact with copyparty using non-browser clients

|

||||

|

||||

* FUSE: mount a copyparty server as a local filesystem

|

||||

* cross-platform python client available in [./bin/](bin/)

|

||||

* able to mount nginx and iis directory listings too, not just copyparty

|

||||

* can be downloaded from copyparty: controlpanel -> connect -> [partyfuse.py](http://127.0.0.1:3923/.cpr/a/partyfuse.py)

|

||||

* [rclone](https://rclone.org/) as client can give ~5x performance, see [./docs/rclone.md](docs/rclone.md)

|

||||

|

||||

@@ -1895,7 +1984,7 @@ interact with copyparty using non-browser clients

|

||||

|

||||

* [igloo irc](https://iglooirc.com/): Method: `post` Host: `https://you.com/up/?want=url&pw=hunter2` Multipart: `yes` File parameter: `f`

|

||||

|

||||

copyparty returns a truncated sha512sum of your PUT/POST as base64; you can generate the same checksum locally to verify uplaods:

|

||||

copyparty returns a truncated sha512sum of your PUT/POST as base64; you can generate the same checksum locally to verify uploads:

|

||||

|

||||

b512(){ printf "$((sha512sum||shasum -a512)|sed -E 's/ .*//;s/(..)/\\x\1/g')"|base64|tr '+/' '-_'|head -c44;}

|

||||

b512 <movie.mkv
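
for reference, a python sketch of what the shell one-liner above computes: the sha512 digest, base64-encoded with the url-safe alphabet, cut to the first 44 characters. The function names are made up for this example, and the claim that the output matches what the server returns rests on the description above rather than on copyparty's source.

```python
import base64
import hashlib


def b512(data: bytes) -> str:
    """Truncated url-safe base64 of the sha512 digest, like the shell b512 above."""
    digest = hashlib.sha512(data).digest()
    # urlsafe_b64encode already swaps '+/' for '-_', matching the `tr` step
    return base64.urlsafe_b64encode(digest)[:44].decode("ascii")


def b512_file(path, bufsize=1024 * 1024):
    """Same checksum for a file on disk, read in chunks so large files fit in memory."""
    h = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(bufsize), b""):
            h.update(chunk)
    return base64.urlsafe_b64encode(h.digest())[:44].decode("ascii")


print(b512(b"hello world"))
```

44 base64 characters correspond to the first 33 bytes of the 64-byte digest, so no padding characters appear in the result.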

|

||||

@@ -1924,7 +2013,7 @@ alternatively, some alternatives roughly sorted by speed (unreproducible benchma

|

||||

|

||||

* [rclone-webdav](./docs/rclone.md) (25s), read/WRITE (rclone v1.63 or later)

|

||||

* [rclone-http](./docs/rclone.md) (26s), read-only

|

||||

* [partyfuse.py](./bin/#partyfusepy) (35s), read-only

|

||||

* [partyfuse.py](./bin/#partyfusepy) (26s), read-only

|

||||

* [rclone-ftp](./docs/rclone.md) (47s), read/WRITE

|

||||

* davfs2 (103s), read/WRITE

|

||||

* [win10-webdav](#webdav-server) (138s), read/WRITE

|

||||

@@ -1995,7 +2084,7 @@ when uploading files,

|

||||

* up to 30% faster uploads if you hide the upload status list by switching away from the `[🚀]` up2k ui-tab (or closing it)

|

||||

* optionally you can switch to the lightweight potato ui by clicking the `[🥔]`

|

||||

* switching to another browser-tab also works, the favicon will update every 10 seconds in that case

|

||||

* unlikely to be a problem, but can happen when uploding many small files, or your internet is too fast, or PC too slow

|

||||

* unlikely to be a problem, but can happen when uploading many small files, or your internet is too fast, or PC too slow

|

||||

|

||||

|

||||

# security

|

||||

@@ -2043,7 +2132,7 @@ other misc notes:

|

||||

|

||||

behavior that might be unexpected

|

||||

|

||||

* users without read-access to a folder can still see the `.prologue.html` / `.epilogue.html` / `README.md` contents, for the purpose of showing a description on how to use the uploader for example

|

||||

* users without read-access to a folder can still see the `.prologue.html` / `.epilogue.html` / `PREADME.md` / `README.md` contents, for the purpose of showing a description on how to use the uploader for example

|

||||

* users can submit `<script>`s which autorun (in a sandbox) for other visitors in a few ways;

|

||||

* uploading a `README.md` -- avoid with `--no-readme`

|

||||

* renaming `some.html` to `.epilogue.html` -- avoid with either `--no-logues` or `--no-dot-ren`

|

||||

@@ -2086,6 +2175,8 @@ volflag `dky` disables the actual key-check, meaning anyone can see the contents

|

||||

|

||||

volflag `dks` lets people enter subfolders as well, and also enables download-as-zip/tar

|

||||

|

||||

if you enable dirkeys, it is probably a good idea to enable filekeys too, otherwise it will be impossible to hotlink files from a folder which was accessed using a dirkey

|

||||

|

||||

dirkeys are generated based on another salt (`--dk-salt`) + filesystem-path and have a few limitations:

|

||||

* the key does not change if the contents of the folder is modified

|

||||

* if you need a new dirkey, either change the salt or rename the folder
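The limitations above follow from the key depending only on a salt and a path. The sketch below is NOT copyparty's actual algorithm; the hash, the separator, the key length and the name `sketch_dirkey` are all assumptions chosen to illustrate why editing a folder's contents leaves the key unchanged, while a new salt or a rename produces a new one.

```python
import base64
import hashlib


def sketch_dirkey(salt: str, fs_path: str, length: int = 8) -> str:
    """Hypothetical dirkey: hash of salt + filesystem path, nothing else."""
    # the folder contents are never read, so modifying them cannot change the key
    raw = hashlib.sha512(f"{salt} {fs_path}".encode("utf-8")).digest()
    return base64.urlsafe_b64encode(raw).decode("ascii")[:length]
```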

|

||||

@@ -2119,13 +2210,13 @@ if [cfssl](https://github.com/cloudflare/cfssl/releases/latest) is installed, co

|

||||

|

||||

## client crashes

|

||||

|

||||

### frefox wsod

|

||||

### firefox wsod

|

||||

|

||||

firefox 87 can crash during uploads -- the entire browser goes, including all other browser tabs, everything turns white

|

||||

|

||||

however you can hit `F12` in the up2k tab and use the devtools to see how far you got in the uploads:

|

||||

|

||||

* get a complete list of all uploads, organized by statuts (ok / no-good / busy / queued):

|

||||

* get a complete list of all uploads, organized by status (ok / no-good / busy / queued):

|

||||

`var tabs = { ok:[], ng:[], bz:[], q:[] }; for (var a of up2k.ui.tab) tabs[a.in].push(a); tabs`

|

||||

|

||||

* list of filenames which failed:

|

||||

@@ -2168,7 +2259,7 @@ enable [thumbnails](#thumbnails) of...

|

||||

* **JPEG XL pictures:** `pyvips` or `ffmpeg`

|

||||

|

||||

enable [smb](#smb-server) support (**not** recommended):

|

||||

* `impacket==0.11.0`

|

||||

* `impacket==0.12.0`

|

||||

|

||||

`pyvips` gives higher quality thumbnails than `Pillow` and is 320% faster, using 270% more ram: `sudo apt install libvips42 && python3 -m pip install --user -U pyvips`

|

||||

|

||||

@@ -2242,7 +2333,7 @@ then again, if you are already into downloading shady binaries from the internet

|

||||

|

||||

## zipapp

|

||||

|

||||

another emergency alternative, [copyparty.pyz](https://github.com/9001/copyparty/releases/latest/download/copyparty.pyz) has less features, requires python 3.7 or newer, worse compression, and more importantly is unable to benefit from more recent versions of jinja2 and such (which makes it less secure)... lots of drawbacks with this one really -- but it *may* just work if the regular sfx fails to start because the computer is messed up in certain funky ways, so it's worth a shot if all else fails

|

||||

another emergency alternative, [copyparty.pyz](https://github.com/9001/copyparty/releases/latest/download/copyparty.pyz) has less features, is slow, requires python 3.7 or newer, worse compression, and more importantly is unable to benefit from more recent versions of jinja2 and such (which makes it less secure)... lots of drawbacks with this one really -- but it does not unpack any temporary files to disk, so it *may* just work if the regular sfx fails to start because the computer is messed up in certain funky ways, so it's worth a shot if all else fails

|

||||

|

||||

run it by doubleclicking it, or try typing `python copyparty.pyz` in your terminal/console/commandline/telex if that fails

|

||||

|

||||

|

||||

@@ -15,22 +15,18 @@ produces a chronological list of all uploads by collecting info from up2k databa

|

||||

# [`partyfuse.py`](partyfuse.py)

|

||||

* mount a copyparty server as a local filesystem (read-only)

|

||||

* **supports Windows!** -- expect `194 MiB/s` sequential read

|

||||

* **supports Linux** -- expect `117 MiB/s` sequential read

|

||||

* **supports Linux** -- expect `600 MiB/s` sequential read

|

||||

* **supports macos** -- expect `85 MiB/s` sequential read

|

||||

|

||||

filecache is default-on for windows and macos;

|

||||

* macos readsize is 64kB, so speed ~32 MiB/s without the cache

|

||||

* windows readsize varies by software; explorer=1M, pv=32k

|

||||

|

||||

note that copyparty should run with `-ed` to enable dotfiles (hidden otherwise)

|

||||

|

||||

also consider using [../docs/rclone.md](../docs/rclone.md) instead for 5x performance

|

||||

and consider using [../docs/rclone.md](../docs/rclone.md) instead; usually a bit faster, especially on windows

|

||||

|

||||

|

||||

## to run this on windows:

|

||||

* install [winfsp](https://github.com/billziss-gh/winfsp/releases/latest) and [python 3](https://www.python.org/downloads/)

|

||||

* [x] add python 3.x to PATH (it asks during install)

|

||||

* `python -m pip install --user fusepy`

|

||||

* `python -m pip install --user fusepy` (or grab a copy of `fuse.py` from the `connect` page on your copyparty, and keep it in the same folder)

|

||||

* `python ./partyfuse.py n: http://192.168.1.69:3923/`

|

||||

|

||||

10% faster in [msys2](https://www.msys2.org/), 700% faster if debug prints are enabled:

|

||||

|

||||

@@ -2,7 +2,7 @@ standalone programs which are executed by copyparty when an event happens (uploa

|

||||

|

||||

these programs either take zero arguments, or a filepath (the affected file), or a json message with filepath + additional info

|

||||

|

||||

run copyparty with `--help-hooks` for usage details / hook type explanations (xm/xbu/xau/xiu/xbr/xar/xbd/xad/xban)

|

||||

run copyparty with `--help-hooks` for usage details / hook type explanations (xm/xbu/xau/xiu/xbc/xac/xbr/xar/xbd/xad/xban)
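As a rough illustration of the three calling conventions mentioned above (no arguments, a filepath, or a json message), a hook could be sketched like this. The json key `ap` for the absolute path is an assumption here, as are the function names; check `--help-hooks` for the fields your version actually sends.

```python
import json


def affected_path(arg: str) -> str:
    """Return the filepath from a hook argument (plain path or json message)."""
    if arg.lstrip().startswith("{"):
        msg = json.loads(arg)
        return msg["ap"]  # assumed key name for the absolute filesystem path
    return arg


def main(argv: list) -> int:
    """Entry point sketch; argv mimics sys.argv."""
    if len(argv) < 2:
        print("hook called without arguments")
        return 0
    print("affected file:", affected_path(argv[1]))
    return 0
```

A real hook would end with `sys.exit(main(sys.argv))`; it is left out here so the functions can be imported and tested on their own.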

|

||||

|

||||

> **note:** in addition to event hooks (the stuff described here), copyparty has another api to run your programs/scripts while providing way more information such as audio tags / video codecs / etc and optionally daisychaining data between scripts in a processing pipeline; if that's what you want then see [mtp plugins](../mtag/) instead

|

||||

|

||||

|

||||

676

bin/partyfuse.py


File diff suppressed because it is too large


561

bin/u2c.py


File diff suppressed because it is too large


@@ -1,6 +1,6 @@
 # Maintainer: icxes <dev.null@need.moe>
 pkgname=copyparty
-pkgver="1.15.1"
+pkgver="1.16.0"
 pkgrel=1
 pkgdesc="File server with accelerated resumable uploads, dedup, WebDAV, FTP, TFTP, zeroconf, media indexer, thumbnails++"
 arch=("any")
@@ -21,7 +21,7 @@ optdepends=("ffmpeg: thumbnails for videos, images (slower) and audio, music tag
 )
 source=("https://github.com/9001/${pkgname}/releases/download/v${pkgver}/${pkgname}-${pkgver}.tar.gz")
 backup=("etc/${pkgname}.d/init" )
-sha256sums=("5fb048fe7e2aa5ad18c9cdb333af3ee5e51c338efa74b34aa8aa444675eac913")
+sha256sums=("8a802bbb4392ead6bc92bcb1c71ecd1855a05a5b4d0312499c33f65424c12a00")

 build() {
 cd "${srcdir}/${pkgname}-${pkgver}"

@@ -1,5 +1,5 @@
 {
-"url": "https://github.com/9001/copyparty/releases/download/v1.15.1/copyparty-sfx.py",
-"version": "1.15.1",
-"hash": "sha256-i4S/TmuAphv/wbndfoSUYztNqO+o+qh/v8GcslxWWUk="
+"url": "https://github.com/9001/copyparty/releases/download/v1.16.0/copyparty-sfx.py",
+"version": "1.16.0",
+"hash": "sha256-H9imF66HcE6I/gGPZdJ5zkzATC3Vkc4luTAbRy8GRh4="
 }

@@ -16,7 +16,7 @@ except:
     TYPE_CHECKING = False

 if True:
-    from typing import Any, Callable
+    from typing import Any, Callable, Optional

 PY2 = sys.version_info < (3,)
 PY36 = sys.version_info > (3, 6)

@@ -51,6 +51,61 @@ try:

|

||||

except:

|

||||

CORES = (os.cpu_count() if hasattr(os, "cpu_count") else 0) or 2

|

||||

|

||||

# all embedded resources to be retrievable over http

|

||||

zs = """

|

||||

web/a/partyfuse.py

|

||||

web/a/u2c.py

|

||||

web/a/webdav-cfg.bat

|

||||

web/baguettebox.js

|

||||

web/browser.css

|

||||

web/browser.html

|

||||

web/browser.js

|

||||

web/browser2.html

|

||||

web/cf.html

|

||||

web/copyparty.gif

|

||||

web/dd/2.png

|

||||

web/dd/3.png

|

||||

web/dd/4.png

|

||||

web/dd/5.png

|

||||

web/deps/busy.mp3

|

||||

web/deps/easymde.css

|

||||

web/deps/easymde.js

|

||||

web/deps/marked.js

|

||||

web/deps/fuse.py

|

||||

web/deps/mini-fa.css

|

||||

web/deps/mini-fa.woff

|

||||

web/deps/prism.css

|

||||

web/deps/prism.js

|

||||

web/deps/prismd.css

|

||||

web/deps/scp.woff2

|

||||

web/deps/sha512.ac.js

|

||||

web/deps/sha512.hw.js

|

||||

web/iiam.gif

|

||||

web/md.css

|

||||

web/md.html

|

||||

web/md.js

|

||||

web/md2.css

|

||||

web/md2.js

|

||||

web/mde.css

|

||||

web/mde.html

|

||||

web/mde.js

|

||||

web/msg.css

|

||||

web/msg.html

|

||||

web/shares.css

|

||||

web/shares.html

|

||||

web/shares.js

|

||||

web/splash.css

|

||||

web/splash.html

|

||||

web/splash.js

|

||||

web/svcs.html

|

||||

web/svcs.js

|

||||

web/ui.css

|

||||

web/up2k.js

|

||||

web/util.js

|

||||

web/w.hash.js

|

||||

"""

|

||||

RES = set(zs.strip().split("\n"))

|

||||

|

||||

|

||||

class EnvParams(object):

|

||||

def __init__(self) -> None:

|

||||

|

||||

@@ -50,6 +50,8 @@ from .util import (

|

||||

PARTFTPY_VER,

|

||||

PY_DESC,

|

||||

PYFTPD_VER,

|

||||

RAM_AVAIL,

|

||||

RAM_TOTAL,

|

||||

SQLITE_VER,

|

||||

UNPLICATIONS,

|

||||

Daemon,

|

||||

@@ -57,6 +59,8 @@ from .util import (

|

||||

ansi_re,

|

||||

b64enc,

|

||||

dedent,

|

||||

has_resource,

|

||||

load_resource,

|

||||

min_ex,

|

||||

pybin,

|

||||

termsize,

|

||||

@@ -325,8 +329,7 @@ def ensure_locale() -> None:


 def ensure_webdeps() -> None:
-    ap = os.path.join(E.mod, "web/deps/mini-fa.woff")
-    if os.path.exists(ap):
+    if has_resource(E, "web/deps/mini-fa.woff"):
         return

     warn(

@@ -519,14 +522,18 @@ def sfx_tpoke(top: str):


 def showlic() -> None:
-    p = os.path.join(E.mod, "res", "COPYING.txt")
-    if not os.path.exists(p):
+    try:
+        with load_resource(E, "res/COPYING.txt") as f:
+            buf = f.read()
+    except:
+        buf = b""
+
+    if buf:
+        print(buf.decode("utf-8", "replace"))
+    else:
         print("no relevant license info to display")
         return
-
-    with open(p, "rb") as f:
-        print(f.read().decode("utf-8", "replace"))


 def get_sects():
     return [

@@ -679,6 +686,8 @@ def get_sects():

|

||||

\033[36mxbu\033[35m executes CMD before a file upload starts

|

||||

\033[36mxau\033[35m executes CMD after a file upload finishes

|

||||

\033[36mxiu\033[35m executes CMD after all uploads finish and volume is idle

|

||||

\033[36mxbc\033[35m executes CMD before a file copy

|

||||

\033[36mxac\033[35m executes CMD after a file copy

|

||||

\033[36mxbr\033[35m executes CMD before a file rename/move

|

||||

\033[36mxar\033[35m executes CMD after a file rename/move

|

||||

\033[36mxbd\033[35m executes CMD before a file delete

|

||||

@@ -775,7 +784,7 @@ def get_sects():

|

||||

dedent(

|

||||

"""

|

||||

specify --exp or the "exp" volflag to enable placeholder expansions

|

||||

in README.md / .prologue.html / .epilogue.html

|

||||

in README.md / PREADME.md / .prologue.html / .epilogue.html

|

||||

|

||||

--exp-md (volflag exp_md) holds the list of placeholders which can be

|

||||

expanded in READMEs, and --exp-lg (volflag exp_lg) likewise for logues;

|

||||

@@ -869,8 +878,9 @@ def get_sects():

|

||||

use argon2id with timecost 3, 256 MiB, 4 threads, version 19 (0x13/v1.3)

|

||||

|

||||

\033[36m--ah-alg scrypt\033[0m # which is the same as:

|

||||

\033[36m--ah-alg scrypt,13,2,8,4\033[0m

|

||||

use scrypt with cost 2**13, 2 iterations, blocksize 8, 4 threads

|

||||

\033[36m--ah-alg scrypt,13,2,8,4,32\033[0m

|

||||

use scrypt with cost 2**13, 2 iterations, blocksize 8, 4 threads,

|

||||

and allow using up to 32 MiB RAM (ram=cost*blksz roughly)
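To make the scrypt parameters above a little more concrete, here is a minimal sketch using Python's `hashlib.scrypt`. Mapping "threads" onto scrypt's `p` parameter is an assumption, the "2 iterations" setting is deliberately left out, and the function names and the 24-byte output length are illustrative only. The usual scrypt estimate of `128 * N * r` bytes gives about 8 MiB for cost 2**13 and blocksize 8, comfortably under the 32 MiB ceiling.

```python
import hashlib


def approx_ram_mib(cost_log2: int, blksz: int) -> float:
    """Rough scrypt memory estimate: 128 * N * r bytes, expressed in MiB."""
    return 128 * (2 ** cost_log2) * blksz / (1024 * 1024)


def scrypt_hash(password: str, salt: bytes, cost_log2: int = 13,
                blksz: int = 8, threads: int = 4, max_mib: int = 32) -> bytes:
    """Single scrypt pass; 'threads' is assumed to map to scrypt's p parameter."""
    return hashlib.scrypt(
        password.encode("utf-8"),
        salt=salt,
        n=2 ** cost_log2,
        r=blksz,
        p=threads,
        maxmem=max_mib * 1024 * 1024,  # hard ceiling enforced by OpenSSL
        dklen=24,  # illustrative output length, not taken from the source
    )
```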

|

||||

|

||||

\033[36m--ah-alg sha2\033[0m # which is the same as:

|

||||

\033[36m--ah-alg sha2,424242\033[0m

|

||||

@@ -891,7 +901,7 @@ def get_sects():

|

||||

dedent(

|

||||

"""

|

||||

the mDNS protocol is multicast-based, which means there are thousands

|

||||

of fun and intersesting ways for it to break unexpectedly

|

||||

of fun and interesting ways for it to break unexpectedly

|

||||

|

||||

things to check if it does not work at all:

|

||||

|

||||

@@ -1000,6 +1010,7 @@ def add_upload(ap):

|

||||

ap2.add_argument("--hardlink", action="store_true", help="enable hardlink-based dedup; will fallback on symlinks when that is impossible (across filesystems) (volflag=hardlink)")

|

||||

ap2.add_argument("--hardlink-only", action="store_true", help="do not fallback to symlinks when a hardlink cannot be made (volflag=hardlinkonly)")

|

||||

ap2.add_argument("--no-dupe", action="store_true", help="reject duplicate files during upload; only matches within the same volume (volflag=nodupe)")

|

||||

ap2.add_argument("--no-clone", action="store_true", help="do not use existing data on disk to satisfy dupe uploads; reduces server HDD reads in exchange for much more network load (volflag=noclone)")

|

||||

ap2.add_argument("--no-snap", action="store_true", help="disable snapshots -- forget unfinished uploads on shutdown; don't create .hist/up2k.snap files -- abandoned/interrupted uploads must be cleaned up manually")

|

||||

ap2.add_argument("--snap-wri", metavar="SEC", type=int, default=300, help="write upload state to ./hist/up2k.snap every \033[33mSEC\033[0m seconds; allows resuming incomplete uploads after a server crash")

|

||||

ap2.add_argument("--snap-drop", metavar="MIN", type=float, default=1440.0, help="forget unfinished uploads after \033[33mMIN\033[0m minutes; impossible to resume them after that (360=6h, 1440=24h)")

|

||||

@@ -1011,7 +1022,7 @@ def add_upload(ap):

|

||||

ap2.add_argument("--sparse", metavar="MiB", type=int, default=4, help="windows-only: minimum size of incoming uploads through up2k before they are made into sparse files")

|

||||

ap2.add_argument("--turbo", metavar="LVL", type=int, default=0, help="configure turbo-mode in up2k client; [\033[32m-1\033[0m] = forbidden/always-off, [\033[32m0\033[0m] = default-off and warn if enabled, [\033[32m1\033[0m] = default-off, [\033[32m2\033[0m] = on, [\033[32m3\033[0m] = on and disable datecheck")

|

||||

ap2.add_argument("--u2j", metavar="JOBS", type=int, default=2, help="web-client: number of file chunks to upload in parallel; 1 or 2 is good for low-latency (same-country) connections, 4-8 for android clients, 16 for cross-atlantic (max=64)")

|

||||

ap2.add_argument("--u2sz", metavar="N,N,N", type=u, default="1,64,96", help="web-client: default upload chunksize (MiB); sets \033[33mmin,default,max\033[0m in the settings gui. Each HTTP POST will aim for this size. Cloudflare max is 96. Big values are good for cross-atlantic but may increase HDD fragmentation on some FS. Disable this optimization with [\033[32m1,1,1\033[0m]")

|

||||

ap2.add_argument("--u2sz", metavar="N,N,N", type=u, default="1,64,96", help="web-client: default upload chunksize (MiB); sets \033[33mmin,default,max\033[0m in the settings gui. Each HTTP POST will aim for \033[33mdefault\033[0m, and never exceed \033[33mmax\033[0m. Cloudflare max is 96. Big values are good for cross-atlantic but may increase HDD fragmentation on some FS. Disable this optimization with [\033[32m1,1,1\033[0m]")

|

||||

ap2.add_argument("--u2sort", metavar="TXT", type=u, default="s", help="upload order; [\033[32ms\033[0m]=smallest-first, [\033[32mn\033[0m]=alphabetical, [\033[32mfs\033[0m]=force-s, [\033[32mfn\033[0m]=force-n -- alphabetical is a bit slower on fiber/LAN but makes it easier to eyeball if everything went fine")

|

||||

ap2.add_argument("--write-uplog", action="store_true", help="write POST reports to textfiles in working-directory")
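The `--u2sz` value in this hunk is a `min,default,max` triple in MiB. A small sketch of how such a value could be parsed and applied follows; the helper names are hypothetical and this is not the up2k client's actual logic.

```python
def parse_u2sz(value: str = "1,64,96"):
    """Split a 'min,default,max' string (MiB) into three integers."""
    lo, default, hi = (int(x) for x in value.split(","))
    if not lo <= default <= hi:
        raise ValueError("expected min <= default <= max, got %r" % (value,))
    return lo, default, hi


def clamp_chunk_mib(wanted: int, value: str = "1,64,96") -> int:
    """Keep a requested chunk size within the configured min/max bounds."""
    lo, _, hi = parse_u2sz(value)
    return max(lo, min(wanted, hi))
```

With the default `1,64,96`, a request for 200 MiB would be capped at 96, which matches the Cloudflare limit mentioned in the help text.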

|

||||

|

||||

@@ -1031,7 +1042,7 @@ def add_network(ap):

|

||||

else:

|

||||

ap2.add_argument("--freebind", action="store_true", help="allow listening on IPs which do not yet exist, for example if the network interfaces haven't finished going up. Only makes sense for IPs other than '0.0.0.0', '127.0.0.1', '::', and '::1'. May require running as root (unless net.ipv6.ip_nonlocal_bind)")

|

||||

ap2.add_argument("--s-thead", metavar="SEC", type=int, default=120, help="socket timeout (read request header)")

|

||||

ap2.add_argument("--s-tbody", metavar="SEC", type=float, default=186.0, help="socket timeout (read/write request/response bodies). Use 60 on fast servers (default is extremely safe). Disable with 0 if reverse-proxied for a 2%% speed boost")

|

||||

ap2.add_argument("--s-tbody", metavar="SEC", type=float, default=128.0, help="socket timeout (read/write request/response bodies). Use 60 on fast servers (default is extremely safe). Disable with 0 if reverse-proxied for a 2%% speed boost")

|

||||

ap2.add_argument("--s-rd-sz", metavar="B", type=int, default=256*1024, help="socket read size in bytes (indirectly affects filesystem writes; recommendation: keep equal-to or lower-than \033[33m--iobuf\033[0m)")

|

||||

ap2.add_argument("--s-wr-sz", metavar="B", type=int, default=256*1024, help="socket write size in bytes")

|

||||

ap2.add_argument("--s-wr-slp", metavar="SEC", type=float, default=0.0, help="debug: socket write delay in seconds")

|

||||

@@ -1081,6 +1092,7 @@ def add_auth(ap):

|

||||

ap2.add_argument("--ses-db", metavar="PATH", type=u, default=ses_db, help="where to store the sessions database (if you run multiple copyparty instances, make sure they use different DBs)")

|

||||

ap2.add_argument("--ses-len", metavar="CHARS", type=int, default=20, help="session key length; default is 120 bits ((20//4)*4*6)")

|

||||

ap2.add_argument("--no-ses", action="store_true", help="disable sessions; use plaintext passwords in cookies")

|

||||

ap2.add_argument("--ipu", metavar="CIDR=USR", type=u, action="append", help="users with IP matching \033[33mCIDR\033[0m are auto-authenticated as username \033[33mUSR\033[0m; example: [\033[32m172.16.24.0/24=dave\033[0m]")
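The `CIDR=USR` matching described by `--ipu` can be sketched with the standard `ipaddress` module. This is only an illustration of the idea; the function names are hypothetical and the server's real lookup may differ, for example in how overlapping networks are prioritised.

```python
import ipaddress


def parse_ipu(rules):
    """Turn ['172.16.24.0/24=dave', ...] into (network, username) pairs."""
    parsed = []
    for rule in rules:
        cidr, user = rule.split("=", 1)
        parsed.append((ipaddress.ip_network(cidr, strict=False), user))
    return parsed


def user_for_ip(ip, parsed):
    """Return the first username whose network contains ip, else None."""
    addr = ipaddress.ip_address(ip)
    for net, user in parsed:
        # skip IPv4-vs-IPv6 mismatches explicitly before testing membership
        if addr.version == net.version and addr in net:
            return user
    return None
```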

|

||||

|

||||

|

||||

def add_chpw(ap):

|

||||

@@ -1174,7 +1186,7 @@ def add_smb(ap):

|

||||

ap2.add_argument("--smbw", action="store_true", help="enable write support (please dont)")

|

||||

ap2.add_argument("--smb1", action="store_true", help="disable SMBv2, only enable SMBv1 (CIFS)")

|

||||

ap2.add_argument("--smb-port", metavar="PORT", type=int, default=445, help="port to listen on -- if you change this value, you must NAT from TCP:445 to this port using iptables or similar")

|

||||

ap2.add_argument("--smb-nwa-1", action="store_true", help="disable impacket#1433 workaround (truncate directory listings to 64kB)")

|

||||

ap2.add_argument("--smb-nwa-1", action="store_true", help="truncate directory listings to 64kB (~400 files); avoids impacket-0.11 bug, fixes impacket-0.12 performance")

|

||||

ap2.add_argument("--smb-nwa-2", action="store_true", help="disable impacket workaround for filecopy globs")

|

||||

ap2.add_argument("--smba", action="store_true", help="small performance boost: disable per-account permissions, enables account coalescing instead (if one user has write/delete-access, then everyone does)")

|

||||

ap2.add_argument("--smbv", action="store_true", help="verbose")

|

||||

@@ -1194,6 +1206,8 @@ def add_hooks(ap):

|

||||

ap2.add_argument("--xbu", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file upload starts")

|

||||

ap2.add_argument("--xau", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file upload finishes")

|

||||

ap2.add_argument("--xiu", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after all uploads finish and volume is idle")

|

||||

ap2.add_argument("--xbc", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file copy")

|

||||

ap2.add_argument("--xac", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file copy")

|

||||

ap2.add_argument("--xbr", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file move/rename")

|

||||

ap2.add_argument("--xar", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file move/rename")

|

||||

ap2.add_argument("--xbd", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file delete")

|

||||

@@ -1226,6 +1240,7 @@ def add_optouts(ap):

|

||||

ap2.add_argument("--no-dav", action="store_true", help="disable webdav support")

|

||||

ap2.add_argument("--no-del", action="store_true", help="disable delete operations")

|

||||

ap2.add_argument("--no-mv", action="store_true", help="disable move/rename operations")

|

||||

ap2.add_argument("--no-cp", action="store_true", help="disable copy operations")

|

||||

ap2.add_argument("-nth", action="store_true", help="no title hostname; don't show \033[33m--name\033[0m in <title>")

|

||||

ap2.add_argument("-nih", action="store_true", help="no info hostname -- don't show in UI")

|

||||

ap2.add_argument("-nid", action="store_true", help="no info disk-usage -- don't show in UI")

|

||||

@@ -1249,7 +1264,7 @@ def add_safety(ap):

|

||||

ap2.add_argument("--no-dot-mv", action="store_true", help="disallow moving dotfiles; makes it impossible to move folders containing dotfiles")

|

||||

ap2.add_argument("--no-dot-ren", action="store_true", help="disallow renaming dotfiles; makes it impossible to turn something into a dotfile")

|

||||

ap2.add_argument("--no-logues", action="store_true", help="disable rendering .prologue/.epilogue.html into directory listings")

|

||||

ap2.add_argument("--no-readme", action="store_true", help="disable rendering readme.md into directory listings")

|

||||

ap2.add_argument("--no-readme", action="store_true", help="disable rendering readme/preadme.md into directory listings")

|

||||

ap2.add_argument("--vague-403", action="store_true", help="send 404 instead of 403 (security through ambiguity, very enterprise)")

|

||||

ap2.add_argument("--force-js", action="store_true", help="don't send folder listings as HTML, force clients to use the embedded json instead -- slight protection against misbehaving search engines which ignore \033[33m--no-robots\033[0m")

|

||||

ap2.add_argument("--no-robots", action="store_true", help="adds http and html headers asking search engines to not index anything (volflag=norobots)")

|

||||

@@ -1300,6 +1315,7 @@ def add_logging(ap):

|

||||

ap2.add_argument("--log-conn", action="store_true", help="debug: print tcp-server msgs")

|

||||

ap2.add_argument("--log-htp", action="store_true", help="debug: print http-server threadpool scaling")

|

||||

ap2.add_argument("--ihead", metavar="HEADER", type=u, action='append', help="print request \033[33mHEADER\033[0m; [\033[32m*\033[0m]=all")

|

||||

ap2.add_argument("--ohead", metavar="HEADER", type=u, action='append', help="print response \033[33mHEADER\033[0m; [\033[32m*\033[0m]=all")

|

||||

ap2.add_argument("--lf-url", metavar="RE", type=u, default=r"^/\.cpr/|\?th=[wj]$|/\.(_|ql_|DS_Store$|localized$)", help="dont log URLs matching regex \033[33mRE\033[0m")

|

||||

|

||||

|

||||

@@ -1308,9 +1324,12 @@ def add_admin(ap):

|

||||

ap2.add_argument("--no-reload", action="store_true", help="disable ?reload=cfg (reload users/volumes/volflags from config file)")

|

||||

ap2.add_argument("--no-rescan", action="store_true", help="disable ?scan (volume reindexing)")

|

||||

ap2.add_argument("--no-stack", action="store_true", help="disable ?stack (list all stacks)")

|

||||

ap2.add_argument("--dl-list", metavar="LVL", type=int, default=2, help="who can see active downloads in the controlpanel? [\033[32m0\033[0m]=nobody, [\033[32m1\033[0m]=admins, [\033[32m2\033[0m]=everyone")

|

||||

|

||||

|

||||

def add_thumbnail(ap):

|

||||

th_ram = (RAM_AVAIL or RAM_TOTAL or 9) * 0.6

|

||||

th_ram = int(max(min(th_ram, 6), 1) * 10) / 10

|

||||

ap2 = ap.add_argument_group('thumbnail options')

|

||||

ap2.add_argument("--no-thumb", action="store_true", help="disable all thumbnails (volflag=dthumb)")

|

||||

ap2.add_argument("--no-vthumb", action="store_true", help="disable video thumbnails (volflag=dvthumb)")

|

||||

@@ -1318,7 +1337,7 @@ def add_thumbnail(ap):

|

||||

ap2.add_argument("--th-size", metavar="WxH", default="320x256", help="thumbnail res (volflag=thsize)")

|

||||

ap2.add_argument("--th-mt", metavar="CORES", type=int, default=CORES, help="num cpu cores to use for generating thumbnails")

|

||||

ap2.add_argument("--th-convt", metavar="SEC", type=float, default=60.0, help="conversion timeout in seconds (volflag=convt)")

|

||||

ap2.add_argument("--th-ram-max", metavar="GB", type=float, default=6.0, help="max memory usage (GiB) permitted by thumbnailer; not very accurate")

|

||||

ap2.add_argument("--th-ram-max", metavar="GB", type=float, default=th_ram, help="max memory usage (GiB) permitted by thumbnailer; not very accurate")

|

||||

ap2.add_argument("--th-crop", metavar="TXT", type=u, default="y", help="crop thumbnails to 4:3 or keep dynamic height; client can override in UI unless force. [\033[32my\033[0m]=crop, [\033[32mn\033[0m]=nocrop, [\033[32mfy\033[0m]=force-y, [\033[32mfn\033[0m]=force-n (volflag=crop)")

|

||||

ap2.add_argument("--th-x3", metavar="TXT", type=u, default="n", help="show thumbs at 3x resolution; client can override in UI unless force. [\033[32my\033[0m]=yes, [\033[32mn\033[0m]=no, [\033[32mfy\033[0m]=force-yes, [\033[32mfn\033[0m]=force-no (volflag=th3x)")

|

||||

ap2.add_argument("--th-dec", metavar="LIBS", default="vips,pil,ff", help="image decoders, in order of preference")
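The new `th_ram` default for `--th-ram-max` takes 60% of the available memory, clamps it to the range 1 to 6, and truncates to one decimal. The sketch below repeats that arithmetic as a standalone function; the function name is hypothetical, and treating `RAM_AVAIL` / `RAM_TOTAL` as GiB values is an assumption based on the option's unit.

```python
def default_th_ram(ram_avail, ram_total):
    """60% of available (else total, else 9) RAM, clamped to 1..6, one decimal."""
    th_ram = (ram_avail or ram_total or 9) * 0.6
    return int(max(min(th_ram, 6), 1) * 10) / 10
```

For example, a machine reporting 16 GiB available hits the upper clamp, while one reporting 0.5 GiB is raised to the lower bound of 1.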

|

||||

@@ -1333,12 +1352,12 @@ def add_thumbnail(ap):

|

||||

# https://pillow.readthedocs.io/en/stable/handbook/image-file-formats.html

|

||||

# https://github.com/libvips/libvips

|

||||

# ffmpeg -hide_banner -demuxers | awk '/^ D /{print$2}' | while IFS= read -r x; do ffmpeg -hide_banner -h demuxer=$x; done | grep -E '^Demuxer |extensions:'

|

||||

ap2.add_argument("--th-r-pil", metavar="T,T", type=u, default="avif,avifs,blp,bmp,dcx,dds,dib,emf,eps,fits,flc,fli,fpx,gif,heic,heics,heif,heifs,icns,ico,im,j2p,j2k,jp2,jpeg,jpg,jpx,pbm,pcx,pgm,png,pnm,ppm,psd,qoi,sgi,spi,tga,tif,tiff,webp,wmf,xbm,xpm", help="image formats to decode using pillow")

|

||||

ap2.add_argument("--th-r-pil", metavar="T,T", type=u, default="avif,avifs,blp,bmp,cbz,dcx,dds,dib,emf,eps,fits,flc,fli,fpx,gif,heic,heics,heif,heifs,icns,ico,im,j2p,j2k,jp2,jpeg,jpg,jpx,pbm,pcx,pgm,png,pnm,ppm,psd,qoi,sgi,spi,tga,tif,tiff,webp,wmf,xbm,xpm", help="image formats to decode using pillow")

|

||||

ap2.add_argument("--th-r-vips", metavar="T,T", type=u, default="avif,exr,fit,fits,fts,gif,hdr,heic,jp2,jpeg,jpg,jpx,jxl,nii,pfm,pgm,png,ppm,svg,tif,tiff,webp", help="image formats to decode using pyvips")

|

||||

ap2.add_argument("--th-r-ffi", metavar="T,T", type=u, default="apng,avif,avifs,bmp,dds,dib,fit,fits,fts,gif,hdr,heic,heics,heif,heifs,icns,ico,jp2,jpeg,jpg,jpx,jxl,pbm,pcx,pfm,pgm,png,pnm,ppm,psd,qoi,sgi,tga,tif,tiff,webp,xbm,xpm", help="image formats to decode using ffmpeg")

|

||||

ap2.add_argument("--th-r-ffi", metavar="T,T", type=u, default="apng,avif,avifs,bmp,cbz,dds,dib,fit,fits,fts,gif,hdr,heic,heics,heif,heifs,icns,ico,jp2,jpeg,jpg,jpx,jxl,pbm,pcx,pfm,pgm,png,pnm,ppm,psd,qoi,sgi,tga,tif,tiff,webp,xbm,xpm", help="image formats to decode using ffmpeg")

|

||||

ap2.add_argument("--th-r-ffv", metavar="T,T", type=u, default="3gp,asf,av1,avc,avi,flv,h264,h265,hevc,m4v,mjpeg,mjpg,mkv,mov,mp4,mpeg,mpeg2,mpegts,mpg,mpg2,mts,nut,ogm,ogv,rm,ts,vob,webm,wmv", help="video formats to decode using ffmpeg")

|

||||

ap2.add_argument("--th-r-ffa", metavar="T,T", type=u, default="aac,ac3,aif,aiff,alac,alaw,amr,apac,ape,au,bonk,dfpwm,dts,flac,gsm,ilbc,it,itgz,itxz,itz,m4a,mdgz,mdxz,mdz,mo3,mod,mp2,mp3,mpc,mptm,mt2,mulaw,ogg,okt,opus,ra,s3m,s3gz,s3xz,s3z,tak,tta,ulaw,wav,wma,wv,xm,xmgz,xmxz,xmz,xpk", help="audio formats to decode using ffmpeg")

|

||||

ap2.add_argument("--au-unpk", metavar="E=F.C", type=u, default="mdz=mod.zip, mdgz=mod.gz, mdxz=mod.xz, s3z=s3m.zip, s3gz=s3m.gz, s3xz=s3m.xz, xmz=xm.zip, xmgz=xm.gz, xmxz=xm.xz, itz=it.zip, itgz=it.gz, itxz=it.xz", help="audio formats to decompress before passing to ffmpeg")

|

||||

ap2.add_argument("--au-unpk", metavar="E=F.C", type=u, default="mdz=mod.zip, mdgz=mod.gz, mdxz=mod.xz, s3z=s3m.zip, s3gz=s3m.gz, s3xz=s3m.xz, xmz=xm.zip, xmgz=xm.gz, xmxz=xm.xz, itz=it.zip, itgz=it.gz, itxz=it.xz, cbz=jpg.cbz", help="audio/image formats to decompress before passing to ffmpeg")

|

||||

|

||||

|

||||

def add_transcoding(ap):

|

||||

@@ -1350,6 +1369,14 @@ def add_transcoding(ap):

|

||||

ap2.add_argument("--ac-maxage", metavar="SEC", type=int, default=86400, help="delete cached transcode output after \033[33mSEC\033[0m seconds")

|

||||

|

||||

|

||||

def add_rss(ap):

|

||||

ap2 = ap.add_argument_group('RSS options')

|

||||

ap2.add_argument("--rss", action="store_true", help="enable RSS output (experimental)")

|

||||

ap2.add_argument("--rss-nf", metavar="HITS", type=int, default=250, help="default number of files to return (url-param 'nf')")

|

||||

ap2.add_argument("--rss-fext", metavar="E,E", type=u, default="", help="default list of file extensions to include (url-param 'fext'); blank=all")

|

||||

ap2.add_argument("--rss-sort", metavar="ORD", type=u, default="m", help="default sort order (url-param 'sort'); [\033[32mm\033[0m]=last-modified [\033[32mu\033[0m]=upload-time [\033[32mn\033[0m]=filename [\033[32ms\033[0m]=filesize; Uppercase=oldest-first. Note that upload-time is 0 for non-uploaded files")

|

||||

|

||||

|

||||

def add_db_general(ap, hcores):

|

||||

noidx = APPLESAN_TXT if MACOS else ""

|

||||

ap2 = ap.add_argument_group('general db options')

|

||||

@@ -1445,9 +1472,10 @@ def add_ui(ap, retry):

|

||||

ap2.add_argument("--pb-url", metavar="URL", type=u, default="https://github.com/9001/copyparty", help="powered-by link; disable with \033[33m-np\033[0m")

|

||||

ap2.add_argument("--ver", action="store_true", help="show version on the control panel (incompatible with \033[33m-nb\033[0m)")

|

||||

ap2.add_argument("--k304", metavar="NUM", type=int, default=0, help="configure the option to enable/disable k304 on the controlpanel (workaround for buggy reverse-proxies); [\033[32m0\033[0m] = hidden and default-off, [\033[32m1\033[0m] = visible and default-off, [\033[32m2\033[0m] = visible and default-on")

|

||||

ap2.add_argument("--no304", metavar="NUM", type=int, default=0, help="configure the option to enable/disable no304 on the controlpanel (workaround for buggy caching in browsers); [\033[32m0\033[0m] = hidden and default-off, [\033[32m1\033[0m] = visible and default-off, [\033[32m2\033[0m] = visible and default-on")

|

||||

ap2.add_argument("--md-sbf", metavar="FLAGS", type=u, default="downloads forms popups scripts top-navigation-by-user-activation", help="list of capabilities to ALLOW for README.md docs (volflag=md_sbf); see https://developer.mozilla.org/en-US/docs/Web/HTML/Element/iframe#attr-sandbox")

|

||||