Compare commits

71 Commits

| SHA1 |
|---|
| ce3cab0295 |
| c784e5285e |
| 2bf9055cae |
| 8aba5aed4f |
| 0ce7cf5e10 |
| 96edcbccd7 |
| 4603afb6de |
| 56317b00af |
| cacec9c1f3 |
| 44ee07f0b2 |
| 6a8d5e1731 |
| d9962f65b3 |
| 119e88d87b |
| 71d9e010d9 |
| 5718caa957 |
| efd8a32ed6 |
| b22d700e16 |
| ccdacea0c4 |
| 4bdcbc1cb5 |
| 833c6cf2ec |
| dd6dbdd90a |
| 63013cc565 |
| 912402364a |
| 159f51b12b |
| 7678a91b0e |
| b13899c63d |
| 3a0d882c5e |
| cb81f0ad6d |
| 518bacf628 |
| ca63b03e55 |
| cecef88d6b |
| 7ffd805a03 |
| a7e2a0c981 |
| 2a570bb4ca |
| 5ca8f0706d |
| a9b4436cdc |
| 5f91999512 |
| 9f000beeaf |
| ff0a71f212 |
| 22dfc6ec24 |
| 48147c079e |
| d715479ef6 |
| fc8298c468 |
| e94ca5dc91 |
| 114b71b751 |
| b2770a2087 |
| cba1878bb2 |
| a2e037d6af |
| 65a2b6a223 |
| 9ed799e803 |
| c1c0ecca13 |
| ee62836383 |
| 705f598b1a |
| 414de88925 |
| 53ffd245dd |
| cf1b756206 |
| 22b58e31ef |
| b7f9bf5a28 |
| aba680b6c2 |
| fabada95f6 |
| 9ccd8bb3ea |
| 1d68acf8f0 |
| 1e7697b551 |
| 4a4ec88d00 |
| 6adc778d62 |
| 6b7ebdb7e9 |
| 3d7facd774 |
| eaee1f2cab |
| ff012221ae |
| c398553748 |
| 3ccbcf6185 |

144

README.md


@@ -47,6 +47,7 @@ turn almost any device into a file server with resumable uploads/downloads using

* [file manager](#file-manager) - cut/paste, rename, and delete files/folders (if you have permission)

* [shares](#shares) - share a file or folder by creating a temporary link

* [batch rename](#batch-rename) - select some files and press `F2` to bring up the rename UI

* [rss feeds](#rss-feeds) - monitor a folder with your RSS reader

* [media player](#media-player) - plays almost every audio format there is

* [audio equalizer](#audio-equalizer) - and [dynamic range compressor](https://en.wikipedia.org/wiki/Dynamic_range_compression)

* [fix unreliable playback on android](#fix-unreliable-playback-on-android) - due to phone / app settings

@@ -80,12 +81,14 @@ turn almost any device into a file server with resumable uploads/downloads using

* [event hooks](#event-hooks) - trigger a program on uploads, renames etc ([examples](./bin/hooks/))

* [upload events](#upload-events) - the older, more powerful approach ([examples](./bin/mtag/))

* [handlers](#handlers) - redefine behavior with plugins ([examples](./bin/handlers/))

* [ip auth](#ip-auth) - autologin based on IP range (CIDR)

* [identity providers](#identity-providers) - replace copyparty passwords with oauth and such

* [user-changeable passwords](#user-changeable-passwords) - if permitted, users can change their own passwords

* [using the cloud as storage](#using-the-cloud-as-storage) - connecting to an aws s3 bucket and similar

* [hiding from google](#hiding-from-google) - tell search engines you dont wanna be indexed

* [hiding from google](#hiding-from-google) - tell search engines you don't wanna be indexed

* [themes](#themes)

* [complete examples](#complete-examples)

* [listen on port 80 and 443](#listen-on-port-80-and-443) - become a *real* webserver

* [reverse-proxy](#reverse-proxy) - running copyparty next to other websites

* [real-ip](#real-ip) - teaching copyparty how to see client IPs

* [prometheus](#prometheus) - metrics/stats can be enabled

@@ -114,7 +117,7 @@ turn almost any device into a file server with resumable uploads/downloads using

* [https](#https) - both HTTP and HTTPS are accepted

* [recovering from crashes](#recovering-from-crashes)

* [client crashes](#client-crashes)

* [frefox wsod](#frefox-wsod) - firefox 87 can crash during uploads

* [firefox wsod](#firefox-wsod) - firefox 87 can crash during uploads

* [HTTP API](#HTTP-API) - see [devnotes](./docs/devnotes.md#http-api)

* [dependencies](#dependencies) - mandatory deps

* [optional dependencies](#optional-dependencies) - install these to enable bonus features

@@ -217,7 +220,7 @@ also see [comparison to similar software](./docs/versus.md)

* upload

* ☑ basic: plain multipart, ie6 support

* ☑ [up2k](#uploading): js, resumable, multithreaded

* **no filesize limit!** ...unless you use Cloudflare, then it's 383.9 GiB

* **no filesize limit!** even on Cloudflare

* ☑ stash: simple PUT filedropper

* ☑ filename randomizer

* ☑ write-only folders

@@ -425,7 +428,7 @@ configuring accounts/volumes with arguments:

permissions:

* `r` (read): browse folder contents, download files, download as zip/tar, see filekeys/dirkeys

* `w` (write): upload files, move files *into* this folder

* `w` (write): upload files, move/copy files *into* this folder

* `m` (move): move files/folders *from* this folder

* `d` (delete): delete files/folders

* `.` (dots): user can ask to show dotfiles in directory listings

@@ -505,7 +508,8 @@ the browser has the following hotkeys (always qwerty)

* `ESC` close various things

* `ctrl-K` delete selected files/folders

* `ctrl-X` cut selected files/folders

* `ctrl-V` paste

* `ctrl-C` copy selected files/folders to clipboard

* `ctrl-V` paste (move/copy)

* `Y` download selected files

* `F2` [rename](#batch-rename) selected file/folder

* when a file/folder is selected (in not-grid-view):

@@ -574,6 +578,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path (

|

||||

|

||||

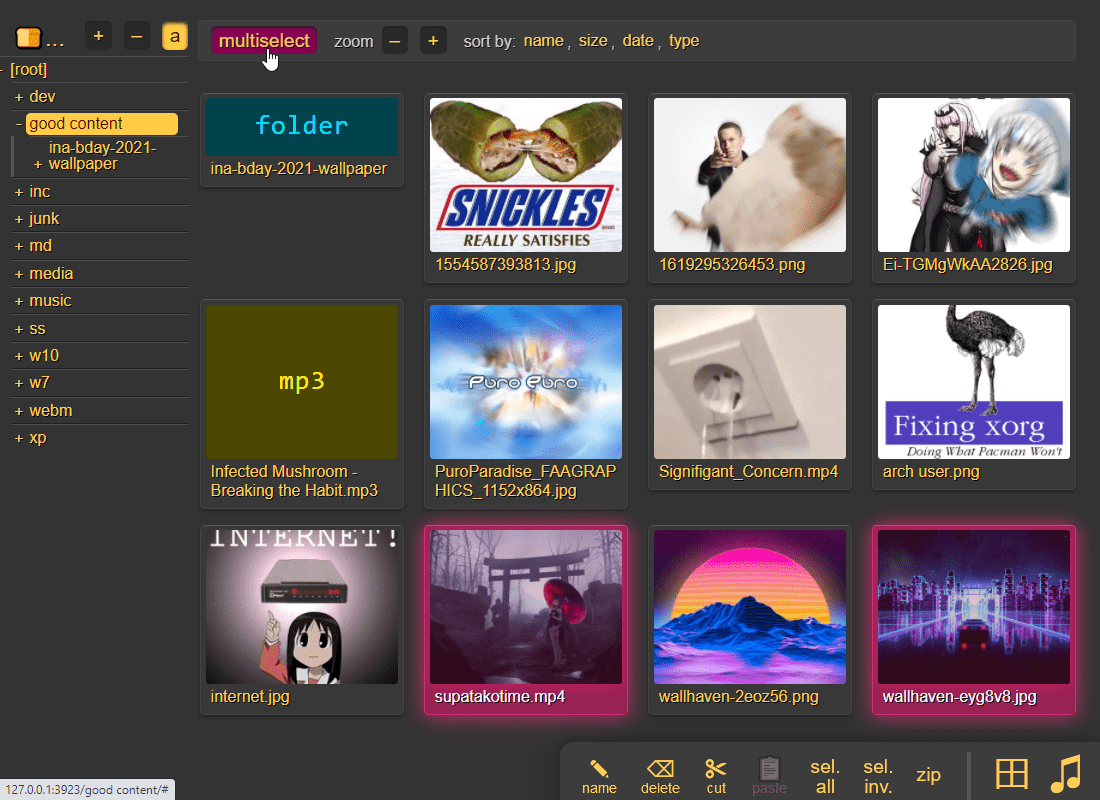

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails

|

||||

* can be made default globally with `--grid` or per-volume with volflag `grid`

|

||||

* enable by adding `?imgs` to a link, or disable with `?imgs=0`

|

||||

|

||||

|

||||

|

||||

@@ -581,7 +586,7 @@ it does static images with Pillow / pyvips / FFmpeg, and uses FFmpeg for video f

* pyvips is 3x faster than Pillow, Pillow is 3x faster than FFmpeg

* disable thumbnails for specific volumes with volflag `dthumb` for all, or `dvthumb` / `dathumb` / `dithumb` for video/audio/images only

audio files are covnerted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

audio files are converted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

images with the following names (see `--th-covers`) become the thumbnail of the folder they're in: `folder.png`, `folder.jpg`, `cover.png`, `cover.jpg`

* the order is significant, so if both `cover.png` and `folder.jpg` exist in a folder, it will pick the first matching `--th-covers` entry (`folder.jpg`)
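a minimal python sketch of the `--th-covers` priority rule above: the order of the option's list decides, not the order the files happen to appear in the folder. this assumes exact, case-sensitive filename matches, and `pick_folder_thumb` is a hypothetical name, not copyparty's code:

```python
from typing import Iterable, List, Optional

# default --th-covers entries, in the priority order quoted in the hunk above
TH_COVERS = ["folder.png", "folder.jpg", "cover.png", "cover.jpg"]


def pick_folder_thumb(filenames: Iterable[str], covers: List[str] = TH_COVERS) -> Optional[str]:
    """Return the first entry of `covers` that exists among `filenames`, else None."""
    present = set(filenames)  # folder order is irrelevant; only the covers list order counts
    for name in covers:
        if name in present:
            return name
    return None


print(pick_folder_thumb(["cover.png", "folder.jpg", "song.mp3"]))  # folder.jpg
```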

@@ -652,7 +657,7 @@ up2k has several advantages:

* uploads resume if you reboot your browser or pc, just upload the same files again

* server detects any corruption; the client reuploads affected chunks

* the client doesn't upload anything that already exists on the server

* no filesize limit unless imposed by a proxy, for example Cloudflare, which blocks uploads over 383.9 GiB

* no filesize limit, even when a proxy limits the request size (for example Cloudflare)

* much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections

* the last-modified timestamp of the file is preserved

@@ -667,7 +672,7 @@ see [up2k](./docs/devnotes.md#up2k) for details on how it works, or watch a [dem

**protip:** if you enable `favicon` in the `[⚙️] settings` tab (by typing something into the textbox), the icon in the browser tab will indicate upload progress -- also, the `[🔔]` and/or `[🔊]` switches enable visible and/or audible notifications on upload completion

the up2k UI is the epitome of polished inutitive experiences:

the up2k UI is the epitome of polished intuitive experiences:

* "parallel uploads" specifies how many chunks to upload at the same time

* `[🏃]` analysis of other files should continue while one is uploading

* `[🥔]` shows a simpler UI for faster uploads from slow devices

@@ -688,6 +693,8 @@ note that since up2k has to read each file twice, `[🎈] bup` can *theoreticall

if you are resuming a massive upload and want to skip hashing the files which already finished, you can enable `turbo` in the `[⚙️] config` tab, but please read the tooltip on that button

if the server is behind a proxy which imposes a request-size limit, you can configure up2k to sneak below the limit with server-option `--u2sz` (the default is 96 MiB to support Cloudflare)

### file-search

@@ -716,7 +723,7 @@ you can unpost even if you don't have regular move/delete access, however only f

### self-destruct

uploads can be given a lifetime, afer which they expire / self-destruct

uploads can be given a lifetime, after which they expire / self-destruct

the feature must be enabled per-volume with the `lifetime` [upload rule](#upload-rules) which sets the upper limit for how long a file gets to stay on the server

@@ -743,7 +750,7 @@ the control-panel shows the ETA for all incoming files , but only for files bei

cut/paste, rename, and delete files/folders (if you have permission)

file selection: click somewhere on the line (not the link itsef), then:

file selection: click somewhere on the line (not the link itself), then:

* `space` to toggle

* `up/down` to move

* `shift-up/down` to move-and-select

@@ -751,10 +758,11 @@ file selection: click somewhere on the line (not the link itsef), then:

* shift-click another line for range-select

* cut: select some files and `ctrl-x`

* copy: select some files and `ctrl-c`

* paste: `ctrl-v` in another folder

* rename: `F2`

you can move files across browser tabs (cut in one tab, paste in another)

you can copy/move files across browser tabs (cut/copy in one tab, paste in another)

## shares

@@ -777,6 +785,7 @@ semi-intentional limitations:

* cleanup of expired shares only works when global option `e2d` is set, and/or at least one volume on the server has volflag `e2d`

* only folders from the same volume are shared; if you are sharing a folder which contains other volumes, then the contents of those volumes will not be available

* related to [IdP volumes being forgotten on shutdown](https://github.com/9001/copyparty/blob/hovudstraum/docs/idp.md#idp-volumes-are-forgotten-on-shutdown), any shares pointing into a user's IdP volume will be unavailable until that user makes their first request after a restart

* no option to "delete after first access" because tricky

* when linking something to discord (for example) it'll get accessed by their scraper and that would count as a hit

* browsers wouldn't be able to resume a broken download unless the requester's IP gets allowlisted for X minutes (ref. tricky)

@@ -840,6 +849,30 @@ or a mix of both:

the metadata keys you can use in the format field are the ones in the file-browser table header (whatever is collected with `-mte` and `-mtp`)

## rss feeds

monitor a folder with your RSS reader , optionally recursive

must be enabled per-volume with volflag `rss` or globally with `--rss`

the feed includes itunes metadata for use with podcast readers such as [AntennaPod](https://antennapod.org/)

a feed example: https://cd.ocv.me/a/d2/d22/?rss&fext=mp3

url parameters:

* `pw=hunter2` for password auth

* `recursive` to also include subfolders

* `title=foo` changes the feed title (default: folder name)

* `fext=mp3,opus` only include mp3 and opus files (default: all)

* `nf=30` only show the first 30 results (default: 250)

* `sort=m` sort by mtime (file last-modified), newest first (default)

* `u` = upload-time; NOTE: non-uploaded files have upload-time `0`

* `n` = filename

* `a` = filesize

* uppercase = reverse-sort; `M` = oldest file first

## media player

plays almost every audio format there is (if the server has FFmpeg installed for on-demand transcoding)
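the url parameters listed for the new rss section can be combined into a feed link. a small python sketch, assuming the bare flags (`rss`, `recursive`) are written without `=` as in the feed example, and that the other values only need ordinary url-encoding; `rss_url` is a hypothetical helper:

```python
from urllib.parse import urlencode


def rss_url(folder_url, pw=None, recursive=False, title=None, fext=None, nf=None, sort=None):
    """Build a feed link for a copyparty folder from the documented url parameters."""
    query = "rss"  # bare flag, like ?rss in the feed example
    if recursive:
        query += "&recursive"  # also a bare flag: include subfolders
    params = {}
    for key, val in (("pw", pw), ("title", title), ("fext", fext), ("nf", nf), ("sort", sort)):
        if val is not None:
            params[key] = val
    if params:
        # safe="," keeps lists like fext=mp3,opus readable
        query += "&" + urlencode(params, safe=",")
    return folder_url + "?" + query


print(rss_url("https://cd.ocv.me/a/d2/d22/", fext="mp3"))
# https://cd.ocv.me/a/d2/d22/?rss&fext=mp3
```

`urlencode` uses `quote_plus`, so a title like `my feed` becomes `title=my+feed`.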

@@ -936,6 +969,8 @@ see [./srv/expand/](./srv/expand/) for usage and examples

* files named `README.md` / `readme.md` will be rendered after directory listings unless `--no-readme` (but `.epilogue.html` takes precedence)

* and `PREADME.md` / `preadme.md` is shown above directory listings unless `--no-readme` or `.prologue.html`

* `README.md` and `*logue.html` can contain placeholder values which are replaced server-side before embedding into directory listings; see `--help-exp`

@@ -987,7 +1022,11 @@ uses [multicast dns](https://en.wikipedia.org/wiki/Multicast_DNS) to give copypa

all enabled services ([webdav](#webdav-server), [ftp](#ftp-server), [smb](#smb-server)) will appear in mDNS-aware file managers (KDE, gnome, macOS, ...)

the domain will be http://partybox.local if the machine's hostname is `partybox` unless `--name` specifies soemthing else

the domain will be `partybox.local` if the machine's hostname is `partybox` unless `--name` specifies something else

and the web-UI will be available at http://partybox.local:3923/

* if you want to get rid of the `:3923` so you can use http://partybox.local/ instead then see [listen on port 80 and 443](#listen-on-port-80-and-443)

### ssdp

@@ -1013,7 +1052,7 @@ print a qr-code [(screenshot)](https://user-images.githubusercontent.com/241032/

* `--qrz 1` forces 1x zoom instead of autoscaling to fit the terminal size

* 1x may render incorrectly on some terminals/fonts, but 2x should always work

it uses the server hostname if [mdns](#mdns) is enbled, otherwise it'll use your external ip (default route) unless `--qri` specifies a specific ip-prefix or domain

it uses the server hostname if [mdns](#mdns) is enabled, otherwise it'll use your external ip (default route) unless `--qri` specifies a specific ip-prefix or domain

## ftp server

@@ -1038,7 +1077,7 @@ some recommended FTP / FTPS clients; `wark` = example password:

## webdav server

with read-write support, supports winXP and later, macos, nautilus/gvfs ... a greay way to [access copyparty straight from the file explorer in your OS](#mount-as-drive)

with read-write support, supports winXP and later, macos, nautilus/gvfs ... a great way to [access copyparty straight from the file explorer in your OS](#mount-as-drive)

click the [connect](http://127.0.0.1:3923/?hc) button in the control-panel to see connection instructions for windows, linux, macos

@@ -1142,8 +1181,8 @@ authenticate with one of the following:

tweaking the ui

* set default sort order globally with `--sort` or per-volume with the `sort` volflag; specify one or more comma-separated columns to sort by, and prefix the column name with `-` for reverse sort

* the column names you can use are visible as tooltips when hovering over the column headers in the directory listing, for example `href ext sz ts tags/.up_at tags/Cirle tags/.tn tags/Artist tags/Title`

* to sort in music order (album, track, artist, title) with filename as fallback, you could `--sort tags/Cirle,tags/.tn,tags/Artist,tags/Title,href`

* the column names you can use are visible as tooltips when hovering over the column headers in the directory listing, for example `href ext sz ts tags/.up_at tags/Circle tags/.tn tags/Artist tags/Title`

* to sort in music order (album, track, artist, title) with filename as fallback, you could `--sort tags/Circle,tags/.tn,tags/Artist,tags/Title,href`

* to sort by upload date, first enable showing the upload date in the listing with `-e2d -mte +.up_at` and then `--sort tags/.up_at`

see [./docs/rice](./docs/rice) for more, including how to add stuff (css/`<meta>`/...) to the html `<head>` tag, or to add your own translation
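the `--sort` description above (comma-separated columns, `-` prefix for reverse) amounts to a multi-key sort. a python sketch of those semantics over rows keyed by the tooltip column names, assuming the first column is the primary key and that rows missing a column go after the others when ascending; `sort_rows` is hypothetical, not copyparty's implementation:

```python
from typing import Any, Callable, Dict, List, Tuple


def _key(name: str) -> Callable[[Dict[str, Any]], Tuple[bool, Any]]:
    """Sort key for one column; rows lacking the column compare as (True, 0)."""
    def key(row: Dict[str, Any]) -> Tuple[bool, Any]:
        val = row.get(name)
        return (val is None, val if val is not None else 0)
    return key


def sort_rows(rows: List[Dict[str, Any]], spec: str) -> List[Dict[str, Any]]:
    """Sort rows by a --sort style spec such as "tags/.tn,-sz,href"."""
    cols = [c.strip() for c in spec.split(",") if c.strip()]
    out = list(rows)
    # python's sort is stable, so sorting by the last column first and the
    # first column last makes the first column the primary key
    for col in reversed(cols):
        desc = col.startswith("-")
        out.sort(key=_key(col[1:] if desc else col), reverse=desc)
    return out


tracks = [
    {"href": "b.flac", "tags/.tn": 2},
    {"href": "a.flac", "tags/.tn": 1},
    {"href": "c.flac", "tags/.tn": 1},
]
print([t["href"] for t in sort_rows(tracks, "tags/.tn,href")])  # ['a.flac', 'c.flac', 'b.flac']
```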

@@ -1166,7 +1205,11 @@ if you want to entirely replace the copyparty response with your own jinja2 temp

enable symlink-based upload deduplication globally with `--dedup` or per-volume with volflag `dedup`

when someone tries to upload a file that already exists on the server, the upload will be politely declined and a symlink is created instead, pointing to the nearest copy on disk, thus reducinc disk space usage

by default, when someone tries to upload a file that already exists on the server, the upload will be politely declined, and the server will copy the existing file over to where the upload would have gone

if you enable deduplication with `--dedup` then it'll create a symlink instead of a full copy, thus reducing disk space usage

* on the contrary, if your server is hooked up to s3-glacier or similar storage where reading is expensive, and you cannot use `--safe-dedup=1` because you have other software tampering with your files, so you want to entirely disable detection of duplicate data instead, then you can specify `--no-clone` globally or `noclone` as a volflag

**warning:** when enabling dedup, you should also:

* enable indexing with `-e2dsa` or volflag `e2dsa` (see [file indexing](#file-indexing) section below); strongly recommended
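the new dedup wording distinguishes two outcomes for an upload whose contents already exist on the server: a full copy by default, or a symlink with `--dedup`. a python sketch of just that decision, assuming a relative symlink and `shutil.copy2` for the copy; `place_duplicate` is hypothetical, and copyparty's real logic (finding the nearest copy, `--safe-dedup`, `noclone`) is not reproduced:

```python
import os
import shutil


def place_duplicate(existing: str, dest: str, dedup: bool) -> str:
    """Put the contents of `existing` at `dest` without receiving the upload again.

    dedup=False: full copy (the default behavior described above)
    dedup=True:  symlink pointing at the existing file (like --dedup)
    """
    if dedup:
        # relative link target; whether copyparty uses relative links is an assumption
        target = os.path.relpath(existing, os.path.dirname(os.path.abspath(dest)))
        os.symlink(target, dest)
        return "symlink"
    shutil.copy2(existing, dest)  # copy2 also keeps the last-modified timestamp
    return "copy"
```

the symlink saves space but ties the upload to the original file, which is one reason the warning above recommends indexing with `-e2dsa` when dedup is on.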

@@ -1207,7 +1250,7 @@ through arguments:

* `-e2t` enables metadata indexing on upload

* `-e2ts` also scans for tags in all files that don't have tags yet

* `-e2tsr` also deletes all existing tags, doing a full reindex

* `-e2v` verfies file integrity at startup, comparing hashes from the db

* `-e2v` verifies file integrity at startup, comparing hashes from the db

* `-e2vu` patches the database with the new hashes from the filesystem

* `-e2vp` panics and kills copyparty instead
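`-e2v` and its `u` / `p` variants describe three reactions to a hash mismatch: report it, patch the database, or stop. a python sketch of that control flow, assuming a plain dict of path to sha512 hex digest stands in for the database; copyparty's actual db schema and hash format are different and not shown here, and `verify` / `file_sha512` are hypothetical names:

```python
import hashlib
from typing import Dict, List


def file_sha512(path: str, bufsize: int = 1 << 20) -> str:
    """Hash a file in 1 MiB blocks so large files are not loaded into memory."""
    h = hashlib.sha512()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(bufsize), b""):
            h.update(block)
    return h.hexdigest()


def verify(db: Dict[str, str], mode: str = "") -> List[str]:
    """Compare stored hashes with the files on disk; return the mismatching paths.

    mode ""  : only report, like -e2v
    mode "u" : also store the new hashes in `db`, like -e2vu
    mode "p" : stop at the first mismatch, like -e2vp
    """
    bad = []
    for path, expected in list(db.items()):
        actual = file_sha512(path)
        if actual == expected:
            continue
        if mode == "p":
            raise SystemExit("integrity check failed: %s" % (path,))
        bad.append(path)
        if mode == "u":
            db[path] = actual  # trust the filesystem from now on
    return bad
```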

@@ -1420,11 +1463,27 @@ redefine behavior with plugins ([examples](./bin/handlers/))

replace 404 and 403 errors with something completely different (that's it for now)

## ip auth

autologin based on IP range (CIDR) , using the global-option `--ipu`

for example, if everyone with an IP that starts with `192.168.123` should automatically log in as the user `spartacus`, then you can either specify `--ipu=192.168.123.0/24=spartacus` as a commandline option, or put this in a config file:

```yaml
[global]
ipu: 192.168.123.0/24=spartacus
```

repeat the option to map additional subnets

**be careful with this one!** if you have a reverseproxy, then you definitely want to make sure you have [real-ip](#real-ip) configured correctly, and it's probably a good idea to nullmap the reverseproxy's IP just in case; so if your reverseproxy is sending requests from `172.24.27.9` then that would be `--ipu=172.24.27.9/32=`

## identity providers

replace copyparty passwords with oauth and such

you can disable the built-in password-based login sysem, and instead replace it with a separate piece of software (an identity provider) which will then handle authenticating / authorizing of users; this makes it possible to login with passkeys / fido2 / webauthn / yubikey / ldap / active directory / oauth / many other single-sign-on contraptions

you can disable the built-in password-based login system, and instead replace it with a separate piece of software (an identity provider) which will then handle authenticating / authorizing of users; this makes it possible to login with passkeys / fido2 / webauthn / yubikey / ldap / active directory / oauth / many other single-sign-on contraptions

a popular choice is [Authelia](https://www.authelia.com/) (config-file based), another one is [authentik](https://goauthentik.io/) (GUI-based, more complex)
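the `--ipu` option above maps a CIDR range to a username, and an empty username acts as a nullmap. a python sketch of that lookup with the standard `ipaddress` module; the rule that the most specific (longest-prefix) subnet wins is an assumption made here so the nullmap behaves sensibly, the README does not state copyparty's precedence, and `parse_ipu` / `autologin_user` are hypothetical names:

```python
import ipaddress
from typing import List, Optional, Tuple, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def parse_ipu(values: List[str]) -> List[Tuple[Network, str]]:
    """Parse --ipu values such as "192.168.123.0/24=spartacus"; an empty user is a nullmap."""
    rules = []
    for value in values:
        cidr, _, user = value.partition("=")
        rules.append((ipaddress.ip_network(cidr.strip(), strict=False), user.strip()))
    return rules


def autologin_user(client_ip: str, rules: List[Tuple[Network, str]]) -> Optional[str]:
    """Return the username to log in as automatically, or None."""
    ip = ipaddress.ip_address(client_ip)
    best = None
    for net, user in rules:
        # containment is always False across IPv4/IPv6, so mixed rule lists are fine
        if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, user)  # assumption: the most specific subnet wins
    if best is None or not best[1]:
        return None  # no matching subnet, or the match is nullmapped
    return best[1]


rules = parse_ipu(["192.168.123.0/24=spartacus", "172.24.27.9/32="])
print(autologin_user("192.168.123.45", rules))  # spartacus
print(autologin_user("172.24.27.9", rules))     # None
```

note that `client_ip` must be the real client address; behind a reverseproxy that only holds if [real-ip](#real-ip) is configured, which is exactly what the warning above is about.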

@@ -1451,7 +1510,7 @@ if permitted, users can change their own passwords in the control-panel

* if you run multiple copyparty instances with different users you *almost definitely* want to specify separate DBs for each instance

* if [password hashing](#password-hashing) is enbled, the passwords in the db are also hashed

* if [password hashing](#password-hashing) is enabled, the passwords in the db are also hashed

* ...which means that all user-defined passwords will be forgotten if you change password-hashing settings

@@ -1471,7 +1530,7 @@ you may improve performance by specifying larger values for `--iobuf` / `--s-rd-

## hiding from google

tell search engines you dont wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

tell search engines you don't wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

* `--no-robots` adds HTTP (`X-Robots-Tag`) and HTML (`<meta>`) headers with `noindex, nofollow` globally

* volflag `[...]:c,norobots` does the same thing for that single volume

@@ -1546,6 +1605,33 @@ if you want to change the fonts, see [./docs/rice/](./docs/rice/)

`-lo log/cpp-%Y-%m%d-%H%M%S.txt.xz`

## listen on port 80 and 443

become a *real* webserver which people can access by just going to your IP or domain without specifying a port

**if you're on windows,** then you just need to add the commandline argument `-p 80,443` and you're done! nice

**if you're on macos,** sorry, I don't know

**if you're on Linux,** you have the following 4 options:

* **option 1:** set up a [reverse-proxy](#reverse-proxy) -- this one makes a lot of sense if you're running on a proper headless server, because that way you get real HTTPS too

* **option 2:** NAT to port 3923 -- this is cumbersome since you'll need to do it every time you reboot, and the exact command may depend on your linux distribution:

```bash
iptables -t nat -A PREROUTING -p tcp --dport 80 -j REDIRECT --to-port 3923
iptables -t nat -A PREROUTING -p tcp --dport 443 -j REDIRECT --to-port 3923
```

* **option 3:** disable the [security policy](https://www.w3.org/Daemon/User/Installation/PrivilegedPorts.html) which prevents the use of 80 and 443; this is *probably* fine:

```
setcap CAP_NET_BIND_SERVICE=+eip $(realpath $(which python))
python copyparty-sfx.py -p 80,443
```

* **option 4:** run copyparty as root (please don't)

## reverse-proxy

running copyparty next to other websites hosted on an existing webserver such as nginx, caddy, or apache
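after option 3's `setcap` step, one way to confirm that the interpreter may now listen on 80/443 is to try binding a socket with that same python binary; the capability is attached to the file `realpath` resolved, so a different python binary will not have it. a hedged python sketch, `can_bind` being a hypothetical helper:

```python
import socket


def can_bind(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if this python process may listen on host:port."""
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        s.bind((host, port))
        return True
    except PermissionError:
        return False  # privileged port and no CAP_NET_BIND_SERVICE (and not root)
    finally:
        s.close()
```

for example `can_bind(80)` should return True once the capability is in place; a port that is already taken raises `OSError` instead of returning False.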

@@ -1601,6 +1687,7 @@ scrape_configs:

currently the following metrics are available,

* `cpp_uptime_seconds` time since last copyparty restart

* `cpp_boot_unixtime_seconds` same but as an absolute timestamp

* `cpp_active_dl` number of active downloads

* `cpp_http_conns` number of open http(s) connections

* `cpp_http_reqs` number of http(s) requests handled

* `cpp_sus_reqs` number of 403/422/malicious requests
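the metrics listed above are presumably served in the prometheus text exposition format (what a `scrape_configs` job reads), which is simple enough to read without a client library. a python sketch that keeps only the `cpp_` samples; the sample values are made up, labelled series are kept under their full `name{...}` key, and `parse_metrics` is hypothetical:

```python
from typing import Dict


def parse_metrics(text: str, prefix: str = "cpp_") -> Dict[str, float]:
    """Parse Prometheus text-format samples into {series: value} for one metric prefix."""
    out: Dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and "# HELP" / "# TYPE" comments
        if "}" in line:
            # labelled sample: name{label="x"} value [timestamp]
            series, rest = line.rsplit("}", 1)
            series += "}"
            fields = rest.split()
        else:
            series, *fields = line.split()
        if series.startswith(prefix) and fields:
            out[series] = float(fields[0])
    return out


sample = """
# HELP cpp_uptime_seconds time since last copyparty restart
cpp_uptime_seconds 1234.5
cpp_active_dl 2
unrelated_metric 7
"""
print(parse_metrics(sample))  # {'cpp_uptime_seconds': 1234.5, 'cpp_active_dl': 2.0}
```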

@@ -1850,6 +1937,9 @@ quick summary of more eccentric web-browsers trying to view a directory index:

| **ie4** and **netscape** 4.0 | can browse, upload with `?b=u`, auth with `&pw=wark` |

| **ncsa mosaic** 2.7 | does not get a pass, [pic1](https://user-images.githubusercontent.com/241032/174189227-ae816026-cf6f-4be5-a26e-1b3b072c1b2f.png) - [pic2](https://user-images.githubusercontent.com/241032/174189225-5651c059-5152-46e9-ac26-7e98e497901b.png) |

| **SerenityOS** (7e98457) | hits a page fault, works with `?b=u`, file upload not-impl |

| **nintendo 3ds** | can browse, upload, view thumbnails (thx bnjmn) |

<p align="center"><img src="https://github.com/user-attachments/assets/88deab3d-6cad-4017-8841-2f041472b853" /></p>

# client examples

@@ -1894,7 +1984,7 @@ interact with copyparty using non-browser clients

* [igloo irc](https://iglooirc.com/): Method: `post` Host: `https://you.com/up/?want=url&pw=hunter2` Multipart: `yes` File parameter: `f`

copyparty returns a truncated sha512sum of your PUT/POST as base64; you can generate the same checksum locally to verify uplaods:

copyparty returns a truncated sha512sum of your PUT/POST as base64; you can generate the same checksum locally to verify uploads:

b512(){ printf "$((sha512sum||shasum -a512)|sed -E 's/ .*//;s/(..)/\\x\1/g')"|base64|tr '+/' '-_'|head -c44;}

b512 <movie.mkv
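where `sha512sum` / `shasum` are unavailable, the same truncated checksum can presumably be computed in python: the shell function hashes the raw bytes with SHA-512, base64-encodes the 64-byte digest, swaps `+/` for `-_` (which is exactly python's url-safe base64 alphabet) and keeps the first 44 characters. a sketch; `b512` mirrors the shell function's name but is otherwise hypothetical:

```python
import base64
import hashlib
from typing import BinaryIO


def b512(stream: BinaryIO, bufsize: int = 1 << 20) -> str:
    """sha512 of a binary stream, url-safe base64 encoded, truncated to 44 chars."""
    h = hashlib.sha512()
    for block in iter(lambda: stream.read(bufsize), b""):
        h.update(block)  # read in blocks so huge files do not fill memory
    return base64.urlsafe_b64encode(h.digest()).decode("ascii")[:44]
```

for example `with open("movie.mkv", "rb") as f: print(b512(f))` should print the same string as `b512 <movie.mkv`.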

@@ -1994,7 +2084,7 @@ when uploading files,

* up to 30% faster uploads if you hide the upload status list by switching away from the `[🚀]` up2k ui-tab (or closing it)

* optionally you can switch to the lightweight potato ui by clicking the `[🥔]`

* switching to another browser-tab also works, the favicon will update every 10 seconds in that case

* unlikely to be a problem, but can happen when uploding many small files, or your internet is too fast, or PC too slow

* unlikely to be a problem, but can happen when uploading many small files, or your internet is too fast, or PC too slow

# security

@@ -2042,7 +2132,7 @@ other misc notes:

behavior that might be unexpected

* users without read-access to a folder can still see the `.prologue.html` / `.epilogue.html` / `README.md` contents, for the purpose of showing a description on how to use the uploader for example

* users without read-access to a folder can still see the `.prologue.html` / `.epilogue.html` / `PREADME.md` / `README.md` contents, for the purpose of showing a description on how to use the uploader for example

* users can submit `<script>`s which autorun (in a sandbox) for other visitors in a few ways;

* uploading a `README.md` -- avoid with `--no-readme`

* renaming `some.html` to `.epilogue.html` -- avoid with either `--no-logues` or `--no-dot-ren`

@@ -2120,13 +2210,13 @@ if [cfssl](https://github.com/cloudflare/cfssl/releases/latest) is installed, co

## client crashes

### frefox wsod

### firefox wsod

firefox 87 can crash during uploads -- the entire browser goes, including all other browser tabs, everything turns white

however you can hit `F12` in the up2k tab and use the devtools to see how far you got in the uploads:

* get a complete list of all uploads, organized by statuts (ok / no-good / busy / queued):

* get a complete list of all uploads, organized by status (ok / no-good / busy / queued):

`var tabs = { ok:[], ng:[], bz:[], q:[] }; for (var a of up2k.ui.tab) tabs[a.in].push(a); tabs`

* list of filenames which failed:

@@ -2243,7 +2333,7 @@ then again, if you are already into downloading shady binaries from the internet

## zipapp

another emergency alternative, [copyparty.pyz](https://github.com/9001/copyparty/releases/latest/download/copyparty.pyz) has less features, is slow, requires python 3.7 or newer, worse compression, and more importantly is unable to benefit from more recent versions of jinja2 and such (which makes it less secure)... lots of drawbacks with this one really -- but it does not unpack any temporay files to disk, so it *may* just work if the regular sfx fails to start because the computer is messed up in certain funky ways, so it's worth a shot if all else fails

another emergency alternative, [copyparty.pyz](https://github.com/9001/copyparty/releases/latest/download/copyparty.pyz) has less features, is slow, requires python 3.7 or newer, worse compression, and more importantly is unable to benefit from more recent versions of jinja2 and such (which makes it less secure)... lots of drawbacks with this one really -- but it does not unpack any temporary files to disk, so it *may* just work if the regular sfx fails to start because the computer is messed up in certain funky ways, so it's worth a shot if all else fails

run it by doubleclicking it, or try typing `python copyparty.pyz` in your terminal/console/commandline/telex if that fails

@@ -2,7 +2,7 @@ standalone programs which are executed by copyparty when an event happens (uploa

these programs either take zero arguments, or a filepath (the affected file), or a json message with filepath + additional info

run copyparty with `--help-hooks` for usage details / hook type explanations (xm/xbu/xau/xiu/xbr/xar/xbd/xad/xban)

run copyparty with `--help-hooks` for usage details / hook type explanations (xm/xbu/xau/xiu/xbc/xac/xbr/xar/xbd/xad/xban)

> **note:** in addition to event hooks (the stuff described here), copyparty has another api to run your programs/scripts while providing way more information such as audio tags / video codecs / etc and optionally daisychaining data between scripts in a processing pipeline; if that's what you want then see [mtp plugins](../mtag/) instead
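the hooks readme says a hook program receives either nothing, a filepath, or a json message. a python sketch of a hook that copes with all three, assuming the message (when present) arrives as the first commandline argument; which style each hook type actually uses is explained by `--help-hooks`, and `describe_event` is hypothetical:

```python
import json
import sys
from typing import List


def describe_event(argv: List[str]) -> str:
    """Work out which of the three invocation styles this hook was started with."""
    if len(argv) < 2:
        return "no arguments"  # zero-argument hook
    arg = argv[1]
    try:
        msg = json.loads(arg)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return "file: %s" % (arg,)  # plain filepath
    if isinstance(msg, dict):
        # the field names come from --help-hooks, not this diff, so just list them
        return "json keys: %s" % (", ".join(sorted(msg)),)
    return "file: %s" % (arg,)  # e.g. a file literally named "123"


if __name__ == "__main__":
    print(describe_event(sys.argv))
```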

@@ -393,7 +393,8 @@ class Gateway(object):

|

||||

if r.status != 200:

|

||||

self.closeconn()

|

||||

info("http error %s reading dir %r", r.status, web_path)

|

||||

raise FuseOSError(errno.ENOENT)

|

||||

err = errno.ENOENT if r.status == 404 else errno.EIO

|

||||

raise FuseOSError(err)

|

||||

|

||||

ctype = r.getheader("Content-Type", "")

|

||||

if ctype == "application/json":

@@ -1128,7 +1129,7 @@ def main():

# dircache is always a boost,

# only want to disable it for tests etc,

cdn = 9 # max num dirs; 0=disable

cdn = 24 # max num dirs; keep larger than max dir depth; 0=disable

cds = 1 # numsec until an entry goes stale

where = "local directory"

199

bin/u2c.py

@@ -1,8 +1,8 @@

#!/usr/bin/env python3

from __future__ import print_function, unicode_literals

S_VERSION = "2.1"

S_BUILD_DT = "2024-09-23"

S_VERSION = "2.6"

S_BUILD_DT = "2024-11-10"

"""

u2c.py: upload to copyparty

@@ -62,6 +62,9 @@ else:

unicode = str

WTF8 = "replace" if PY2 else "surrogateescape"

VT100 = platform.system() != "Windows"
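Several hunks in this diff replace `decode("utf-8", "replace")` with the new `WTF8` error handler, which is `"surrogateescape"` on python 3. The sketch below (python 3 only; the sample filename is made up) shows why that matters for filenames that are not valid utf-8: `surrogateescape` gives back the original bytes after a decode/encode round-trip, while `replace` permanently turns the bad byte into U+FFFD.

```python
# python 3 only; mirrors the WTF8 constant from the diff
WTF8 = "surrogateescape"


def roundtrip(raw, errors):
    """Decode bytes to str and encode back, using the same error handler."""
    return raw.decode("utf-8", errors).encode("utf-8", errors)


name = b"caf\xe9.txt"  # latin-1 filename; the \xe9 byte is not valid utf-8

lossless = roundtrip(name, WTF8)  # original bytes come back unchanged
lossy = roundtrip(name, "replace")  # \xe9 became U+FFFD (b"\xef\xbf\xbd")
```

This is what lets u2c build correct upload urls and directory listings for such files instead of mangling their names.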

@@ -151,6 +154,7 @@ class HCli(object):

self.tls = tls

self.verify = ar.te or not ar.td

self.conns = []

self.hconns = []

if tls:

import ssl

@@ -170,7 +174,7 @@ class HCli(object):

"User-Agent": "u2c/%s" % (S_VERSION,),

}

def _connect(self):

def _connect(self, timeout):

args = {}

if PY37:

args["blocksize"] = 1048576

@@ -182,9 +186,11 @@ class HCli(object):

if self.ctx:

args = {"context": self.ctx}

return C(self.addr, self.port, timeout=999, **args)

return C(self.addr, self.port, timeout=timeout, **args)

def req(self, meth, vpath, hdrs, body=None, ctype=None):

now = time.time()

hdrs.update(self.base_hdrs)

if self.ar.a:

hdrs["PW"] = self.ar.a

@@ -195,7 +201,11 @@ class HCli(object):

0 if not body else body.len if hasattr(body, "len") else len(body)

)

c = self.conns.pop() if self.conns else self._connect()

# large timeout for handshakes (safededup)

conns = self.hconns if ctype == MJ else self.conns

while conns and self.ar.cxp < now - conns[0][0]:

conns.pop(0)[1].close()

c = conns.pop()[1] if conns else self._connect(999 if ctype == MJ else 128)

try:

c.request(meth, vpath, body, hdrs)

if PY27:

@@ -204,8 +214,15 @@ class HCli(object):

rsp = c.getresponse()

data = rsp.read()

self.conns.append(c)

conns.append((time.time(), c))

return rsp.status, data.decode("utf-8")

except http_client.BadStatusLine:

if self.ar.cxp > 4:

t = "\nWARNING: --cxp probably too high; reducing from %d to 4"

print(t % (self.ar.cxp,))

self.ar.cxp = 4

c.close()

raise

except:

c.close()

raise
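The `HCli.req` changes above store each idle connection together with the time it was last used, close any that have been idle longer than `--cxp` seconds (default 57) before picking one for reuse, and keep a separate pool (`hconns`) with a long timeout for handshakes. Below is a simplified, self-contained sketch of just the expiry logic; `IdlePool` and `FakeConn` are hypothetical names, and the optional `now` arguments exist only to make the behaviour easy to test.

```python
import time


class IdlePool(object):
    """Idle connections plus the time each was last used (oldest first).

    Sketch of the pooling in HCli.req; `connect` is any zero-argument
    factory and `max_idle` plays the role of --cxp.
    """

    def __init__(self, connect, max_idle):
        self.connect = connect
        self.max_idle = max_idle
        self.idle = []  # [(last_used, conn)]

    def acquire(self, now=None):
        now = time.time() if now is None else now
        # close connections the server has probably timed out already
        while self.idle and self.max_idle < now - self.idle[0][0]:
            self.idle.pop(0)[1].close()
        # reuse the most recently used connection, else open a new one
        return self.idle.pop()[1] if self.idle else self.connect()

    def release(self, conn, now=None):
        self.idle.append((time.time() if now is None else now, conn))


class FakeConn(object):
    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True


pool = IdlePool(FakeConn, max_idle=57)
a = pool.acquire(now=0)
pool.release(a, now=0)
b = pool.acquire(now=10)  # idle for 10s, so it is reused
pool.release(b, now=10)
c = pool.acquire(now=100)  # idle for 90s > 57s, so closed and replaced
```

If the server drops connections sooner than `--cxp` assumes, the request fails with `BadStatusLine`, which is why the diff lowers `--cxp` to 4 in that case.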

@@ -228,7 +245,7 @@ class File(object):

self.lmod = lmod # type: float

self.abs = os.path.join(top, rel) # type: bytes

self.name = self.rel.split(b"/")[-1].decode("utf-8", "replace") # type: str

self.name = self.rel.split(b"/")[-1].decode("utf-8", WTF8) # type: str

# set by get_hashlist

self.cids = [] # type: list[tuple[str, int, int]] # [ hash, ofs, sz ]

@@ -267,10 +284,41 @@ class FileSlice(object):

raise Exception(9)

tlen += clen

self.len = tlen

self.len = self.tlen = tlen

self.cdr = self.car + self.len

self.ofs = 0 # type: int

self.f = open(file.abs, "rb", 512 * 1024)

self.f = None

self.seek = self._seek0

self.read = self._read0

def subchunk(self, maxsz, nth):

if self.tlen <= maxsz:

return -1

if not nth:

self.car0 = self.car

self.cdr0 = self.cdr

self.car = self.car0 + maxsz * nth

if self.car >= self.cdr0:

return -2

self.cdr = self.car + min(self.cdr0 - self.car, maxsz)

self.len = self.cdr - self.car

self.seek(0)

return nth

def unsub(self):

self.car = self.car0

self.cdr = self.cdr0

self.len = self.tlen

def _open(self):

self.seek = self._seek

self.read = self._read

self.f = open(self.file.abs, "rb", 512 * 1024)

self.f.seek(self.car)

# https://stackoverflow.com/questions/4359495/what-is-exactly-a-file-like-object-in-python
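`FileSlice.subchunk` lets u2c split a slice that is larger than `--szm` (default 96 MiB, the cloudflare limit) into several POSTs. Piece `nth` starts `maxsz * nth` bytes into the slice, and `upload()` sends that offset in the `X-Up2k-Subc` header. The function below is a hypothetical standalone restatement of the same arithmetic that returns every piece as a `(start, end)` byte range instead of mutating a slice object.

```python
def subranges(car, cdr, maxsz):
    """Split the byte range [car, cdr) into pieces of at most maxsz bytes.

    Mirrors the arithmetic in FileSlice.subchunk: piece n starts at
    car + maxsz * n; a range that already fits is returned as-is.
    """
    if cdr - car <= maxsz:
        return [(car, cdr)]  # fits in a single POST (subchunk returns -1)
    ret = []
    nth = 0
    while True:
        start = car + maxsz * nth
        if start >= cdr:  # past the end (subchunk returns -2)
            break
        end = start + min(cdr - start, maxsz)
        ret.append((start, end))
        nth += 1
    return ret


MiB = 1024 * 1024
# a 200 MiB joined slice with the default --szm of 96 MiB
pieces = subranges(0, 200 * MiB, 96 * MiB)
```

The result is three POSTs of 96, 96 and 8 MiB; after the loop, `unsub()` restores the slice's original bounds.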

@@ -282,10 +330,15 @@ class FileSlice(object):

except:

pass # py27 probably

def close(self, *a, **ka):

return # until _open

def tell(self):

return self.ofs

def seek(self, ofs, wh=0):

def _seek(self, ofs, wh=0):

assert self.f # !rm

if wh == 1:

ofs = self.ofs + ofs

elif wh == 2:

@@ -299,12 +352,22 @@ class FileSlice(object):

self.ofs = ofs

self.f.seek(self.car + ofs)

def read(self, sz):

def _read(self, sz):

assert self.f # !rm

sz = min(sz, self.len - self.ofs)

ret = self.f.read(sz)

self.ofs += len(ret)

return ret

def _seek0(self, ofs, wh=0):

self._open()

return self.seek(ofs, wh)

def _read0(self, sz):

self._open()

return self.read(sz)

class MTHash(object):

def __init__(self, cores):

@@ -557,13 +620,17 @@ def walkdir(err, top, excl, seen):

for ap, inf in sorted(statdir(err, top)):

if excl.match(ap):

continue

yield ap, inf

if stat.S_ISDIR(inf.st_mode):

yield ap, inf

try:

for x in walkdir(err, ap, excl, seen):

yield x

except Exception as ex:

err.append((ap, str(ex)))

elif stat.S_ISREG(inf.st_mode):

yield ap, inf

else:

err.append((ap, "irregular filetype 0%o" % (inf.st_mode,)))

def walkdirs(err, tops, excl):

@@ -609,11 +676,12 @@ def walkdirs(err, tops, excl):

# mostly from copyparty/util.py

def quotep(btxt):

# type: (bytes) -> bytes

quot1 = quote(btxt, safe=b"/")

if not PY2:

quot1 = quot1.encode("ascii")

return quot1.replace(b" ", b"+") # type: ignore

return quot1.replace(b" ", b"%20") # type: ignore

# from copyparty/util.py

@@ -641,7 +709,7 @@ def up2k_chunksize(filesize):

while True:

for mul in [1, 2]:

nchunks = math.ceil(filesize * 1.0 / chunksize)

if nchunks <= 256 or (chunksize >= 32 * 1024 * 1024 and nchunks < 4096):

if nchunks <= 256 or (chunksize >= 32 * 1024 * 1024 and nchunks <= 4096):

return chunksize

chunksize += stepsize
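This hunk only changes the upper bound from `< 4096` to `<= 4096`. To show what the loop does as a whole, here is a runnable sketch. The starting chunk size, the starting step and the `stepsize *= mul` line are not visible in the diff and are assumptions, so treat the exact numbers as illustrative.

```python
import math


def up2k_chunksize(filesize):
    """Pick a chunk size for a file of `filesize` bytes (sketch).

    Only the loop condition is taken from the diff; the starting values
    and the step growth are assumptions.
    """
    chunksize = 1024 * 1024  # assumed starting chunk size (1 MiB)
    stepsize = 512 * 1024  # assumed starting step (512 KiB)
    while True:
        for mul in [1, 2]:
            nchunks = math.ceil(filesize * 1.0 / chunksize)
            # accept up to 256 chunks; once chunks are at least 32 MiB,
            # accept up to 4096 (inclusive after this diff)
            if nchunks <= 256 or (chunksize >= 32 * 1024 * 1024 and nchunks <= 4096):
                return chunksize

            chunksize += stepsize
            stepsize *= mul  # assumed: the step doubles every second round


MiB = 1024 * 1024
GiB = 1024 * MiB
print(up2k_chunksize(1 * GiB) // MiB)  # 4
print(up2k_chunksize(128 * GiB) // MiB)  # 32
```

With these assumed starting values, a 128 GiB file lands on exactly 4096 chunks of 32 MiB. The old `< 4096` check would reject that and move on to 48 MiB chunks, so the change makes 32 MiB usable for files of exactly that size.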

@@ -720,7 +788,7 @@ def handshake(ar, file, search):

url = file.url

else:

if b"/" in file.rel:

url = quotep(file.rel.rsplit(b"/", 1)[0]).decode("utf-8", "replace")

url = quotep(file.rel.rsplit(b"/", 1)[0]).decode("utf-8")

else:

url = ""

url = ar.vtop + url

@@ -728,6 +796,7 @@ def handshake(ar, file, search):

while True:

sc = 600

txt = ""

t0 = time.time()

try:

zs = json.dumps(req, separators=(",\n", ": "))

sc, txt = web.req("POST", url, {}, zs.encode("utf-8"), MJ)

@@ -752,7 +821,9 @@ def handshake(ar, file, search):

print("\nERROR: login required, or wrong password:\n%s" % (txt,))

raise BadAuth()

eprint("handshake failed, retrying: %s\n %s\n\n" % (file.name, em))

t = "handshake failed, retrying: %s\n t0=%.3f t1=%.3f td=%.3f\n %s\n\n"

now = time.time()

eprint(t % (file.name, t0, now, now - t0, em))

time.sleep(ar.cd)

try:

@@ -763,15 +834,15 @@ def handshake(ar, file, search):

if search:

return r["hits"], False

file.url = r["purl"]

file.url = quotep(r["purl"].encode("utf-8", WTF8)).decode("utf-8")

file.name = r["name"]

file.wark = r["wark"]

return r["hash"], r["sprs"]

def upload(fsl, stats):

# type: (FileSlice, str) -> None

def upload(fsl, stats, maxsz):

# type: (FileSlice, str, int) -> None

"""upload a range of file data, defined by one or more `cid` (chunk-hash)"""

ctxt = fsl.cids[0]

@@ -789,21 +860,34 @@ def upload(fsl, stats):

if stats:

headers["X-Up2k-Stat"] = stats

nsub = 0

try:

sc, txt = web.req("POST", fsl.file.url, headers, fsl, MO)

while nsub != -1:

nsub = fsl.subchunk(maxsz, nsub)

if nsub == -2:

return

if nsub >= 0:

headers["X-Up2k-Subc"] = str(maxsz * nsub)

headers.pop(CLEN, None)

nsub += 1

if sc == 400:

if (

"already being written" in txt

or "already got that" in txt

or "only sibling chunks" in txt

):

fsl.file.nojoin = 1

sc, txt = web.req("POST", fsl.file.url, headers, fsl, MO)

if sc >= 400:

raise Exception("http %s: %s" % (sc, txt))

if sc == 400:

if (

"already being written" in txt

or "already got that" in txt

or "only sibling chunks" in txt

):

fsl.file.nojoin = 1

if sc >= 400:

raise Exception("http %s: %s" % (sc, txt))

finally:

fsl.f.close()

if fsl.f:

fsl.f.close()

if nsub != -1:

fsl.unsub()

class Ctl(object):

@@ -869,8 +953,8 @@ class Ctl(object):

self.hash_b = 0

self.up_f = 0

self.up_c = 0

self.up_b = 0

self.up_br = 0

self.up_b = 0 # num bytes handled

self.up_br = 0 # num bytes actually transferred

self.uploader_busy = 0

self.serialized = False

@@ -935,7 +1019,7 @@ class Ctl(object):

print(" %d up %s" % (ncs - nc, cid))

stats = "%d/0/0/%d" % (nf, self.nfiles - nf)

fslice = FileSlice(file, [cid])

upload(fslice, stats)

upload(fslice, stats, self.ar.szm)

print(" ok!")

if file.recheck:

@@ -1013,11 +1097,14 @@ class Ctl(object):

t = "%s eta @ %s/s, %s, %d# left\033[K" % (self.eta, spd, sleft, nleft)

eprint(txt + "\033]0;{0}\033\\\r{0}{1}".format(t, tail))

if self.ar.wlist:

self.at_hash = time.time() - self.t0

if self.hash_b and self.at_hash:

spd = humansize(self.hash_b / self.at_hash)

eprint("\nhasher: %.2f sec, %s/s\n" % (self.at_hash, spd))

if self.up_b and self.at_up:

spd = humansize(self.up_b / self.at_up)

if self.up_br and self.at_up:

spd = humansize(self.up_br / self.at_up)

eprint("upload: %.2f sec, %s/s\n" % (self.at_up, spd))

if not self.recheck:

@@ -1051,7 +1138,7 @@ class Ctl(object):

print(" ls ~{0}".format(srd))

zt = (

self.ar.vtop,

quotep(rd.replace(b"\\", b"/")).decode("utf-8", "replace"),

quotep(rd.replace(b"\\", b"/")).decode("utf-8"),

)

sc, txt = web.req("GET", "%s%s?ls<&dots" % zt, {})

if sc >= 400:

@@ -1060,13 +1147,16 @@ class Ctl(object):

j = json.loads(txt)

for f in j["dirs"] + j["files"]:

rfn = f["href"].split("?")[0].rstrip("/")

ls[unquote(rfn.encode("utf-8", "replace"))] = f

ls[unquote(rfn.encode("utf-8", WTF8))] = f

except Exception as ex:

print(" mkdir ~{0} ({1})".format(srd, ex))

if self.ar.drd:

dp = os.path.join(top, rd)

lnodes = set(os.listdir(dp))

try:

lnodes = set(os.listdir(dp))

except:

lnodes = list(ls) # fs eio; don't delete

if ptn:

zs = dp.replace(sep, b"/").rstrip(b"/") + b"/"

zls = [zs + x for x in lnodes]

@@ -1074,7 +1164,7 @@ class Ctl(object):

lnodes = [x.split(b"/")[-1] for x in zls]

bnames = [x for x in ls if x not in lnodes and x != b".hist"]

vpath = self.ar.url.split("://")[-1].split("/", 1)[-1]

names = [x.decode("utf-8", "replace") for x in bnames]

names = [x.decode("utf-8", WTF8) for x in bnames]

locs = [vpath + srd + "/" + x for x in names]

while locs:

req = locs

@@ -1136,10 +1226,16 @@ class Ctl(object):

self.up_b = self.hash_b

if self.ar.wlist:

vp = file.rel.decode("utf-8")

if self.ar.chs:

zsl = [

"%s %d %d" % (zsii[0], n, zsii[1])

for n, zsii in enumerate(file.cids)

]

print("chs: %s\n%s" % (vp, "\n".join(zsl)))

zsl = [self.ar.wsalt, str(file.size)] + [x[0] for x in file.kchunks]

zb = hashlib.sha512("\n".join(zsl).encode("utf-8")).digest()[:33]

wark = ub64enc(zb).decode("utf-8")

vp = file.rel.decode("utf-8")

if self.ar.jw:

print("%s %s" % (wark, vp))

else:
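With the url set to `-`, u2c prints warks (file identifiers) instead of uploading. Per the hunk above, it builds each wark from `--wsalt`, the file size and the chunk hashes: they are joined by newlines, hashed with sha512, truncated to 33 bytes and base64-encoded. Below is a sketch of that derivation. It assumes `ub64enc` is url-safe base64 (`base64.urlsafe_b64encode`); `calc_wark` and the chunk-hash string are hypothetical.

```python
import base64
import hashlib


def calc_wark(salt, size, chunk_hashes):
    """Derive a wark from the salt, file size and per-chunk hash strings.

    Assumption: ub64enc in u2c behaves like base64.urlsafe_b64encode.
    """
    zsl = [salt, str(size)] + list(chunk_hashes)
    zb = hashlib.sha512("\n".join(zsl).encode("utf-8")).digest()[:33]
    return base64.urlsafe_b64encode(zb).decode("utf-8")


# "hunter2" is the default --wsalt; it must match the server config
wark = calc_wark("hunter2", 3, ["hypothetical-chunk-hash"])
```

Because 33 bytes is a multiple of 3, the encoded wark is always 44 characters with no `=` padding, and a different salt gives an unrelated wark.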

@@ -1177,6 +1273,7 @@ class Ctl(object):

self.q_upload.put(None)

return

chunksz = up2k_chunksize(file.size)

upath = file.abs.decode("utf-8", "replace")

if not VT100:

upath = upath.lstrip("\\?")

@@ -1236,9 +1333,14 @@ class Ctl(object):

file.up_c -= len(hs)

for cid in hs:

sz = file.kchunks[cid][1]

self.up_br -= sz

self.up_b -= sz

file.up_b -= sz

if hs and not file.up_b:

# first hs of this file; is this an upload resume?

file.up_b = chunksz * max(0, len(file.kchunks) - len(hs))

file.ucids = hs

if not hs:

@@ -1252,7 +1354,7 @@ class Ctl(object):

c1 = c2 = ""

spd_h = humansize(file.size / file.t_hash, True)

if file.up_b:

if file.up_c:

t_up = file.t1_up - file.t0_up

spd_u = humansize(file.size / t_up, True)

@@ -1262,14 +1364,13 @@ class Ctl(object):

t = " found %s %s(%.2fs,%s/s)%s"

print(t % (upath, c1, file.t_hash, spd_h, c2))

else:

kw = "uploaded" if file.up_b else " found"

kw = "uploaded" if file.up_c else " found"

print("{0} {1}".format(kw, upath))

self._check_if_done()

continue

chunksz = up2k_chunksize(file.size)

njoin = (self.ar.sz * 1024 * 1024) // chunksz

njoin = self.ar.sz // chunksz

cs = hs[:]

while cs:

fsl = FileSlice(file, cs[:1])

@@ -1321,7 +1422,7 @@ class Ctl(object):

)

try:

upload(fsl, stats)

upload(fsl, stats, self.ar.szm)

except Exception as ex:

t = "upload failed, retrying: %s #%s+%d (%s)\n"

eprint(t % (file.name, cids[0][:8], len(cids) - 1, ex))

@@ -1365,7 +1466,7 @@ def main():

cores = (os.cpu_count() if hasattr(os, "cpu_count") else 0) or 2

hcores = min(cores, 3) # 4% faster than 4+ on py3.9 @ r5-4500U

ver = "{0} v{1} https://youtu.be/BIcOO6TLKaY".format(S_BUILD_DT, S_VERSION)

ver = "{0}, v{1}".format(S_BUILD_DT, S_VERSION)

if "--version" in sys.argv:

print(ver)

return

@@ -1403,14 +1504,17 @@ source file/folder selection uses rsync syntax, meaning that:

ap = app.add_argument_group("file-ID calculator; enable with url '-' to list warks (file identifiers) instead of upload/search")

ap.add_argument("--wsalt", type=unicode, metavar="S", default="hunter2", help="salt to use when creating warks; must match server config")

ap.add_argument("--chs", action="store_true", help="verbose (print the hash/offset of each chunk in each file)")

ap.add_argument("--jw", action="store_true", help="just identifier+filepath, not mtime/size too")

ap = app.add_argument_group("performance tweaks")

ap.add_argument("-j", type=int, metavar="CONNS", default=2, help="parallel connections")

ap.add_argument("-J", type=int, metavar="CORES", default=hcores, help="num cpu-cores to use for hashing; set 0 or 1 for single-core hashing")

ap.add_argument("--sz", type=int, metavar="MiB", default=64, help="try to make each POST this big")

ap.add_argument("--szm", type=int, metavar="MiB", default=96, help="max size of each POST (default is cloudflare max)")

ap.add_argument("-nh", action="store_true", help="disable hashing while uploading")

ap.add_argument("-ns", action="store_true", help="no status panel (for slow consoles and macos)")

ap.add_argument("--cxp", type=float, metavar="SEC", default=57, help="assume http connections expired after SEConds")

ap.add_argument("--cd", type=float, metavar="SEC", default=5, help="delay before reattempting a failed handshake/upload")

ap.add_argument("--safe", action="store_true", help="use simple fallback approach")

ap.add_argument("-z", action="store_true", help="ZOOMIN' (skip uploading files if they exist at the destination with the ~same last-modified timestamp, so same as yolo / turbo with date-chk but even faster)")

@@ -1436,6 +1540,9 @@ source file/folder selection uses rsync syntax, meaning that:

if ar.dr:

ar.ow = True

ar.sz *= 1024 * 1024

ar.szm *= 1024 * 1024

ar.x = "|".join(ar.x or [])

setattr(ar, "wlist", ar.url == "-")

@@ -1,6 +1,6 @@

# Maintainer: icxes <dev.null@need.moe>

pkgname=copyparty

pkgver="1.15.3"

pkgver="1.15.10"

pkgrel=1

pkgdesc="File server with accelerated resumable uploads, dedup, WebDAV, FTP, TFTP, zeroconf, media indexer, thumbnails++"

arch=("any")

@@ -21,7 +21,7 @@ optdepends=("ffmpeg: thumbnails for videos, images (slower) and audio, music tag

)

source=("https://github.com/9001/${pkgname}/releases/download/v${pkgver}/${pkgname}-${pkgver}.tar.gz")

backup=("etc/${pkgname}.d/init" )

sha256sums=("d4b02a8d618749c317161773fdd3b66992557f682b7cccd8c4c8583497c4cb24")

sha256sums=("070d5bdebe57c247427ceea6b9029f93097e4b8996cabfc59d0ec248d063b993")

build() {

cd "${srcdir}/${pkgname}-${pkgver}"

@@ -1,5 +1,5 @@

{

"url": "https://github.com/9001/copyparty/releases/download/v1.15.3/copyparty-sfx.py",

"version": "1.15.3",

"hash": "sha256-OmoLwakVaZM9QwkujT4wwhqC5KcaS5u81DDTjS2r7MI="

"url": "https://github.com/9001/copyparty/releases/download/v1.15.10/copyparty-sfx.py",

"version": "1.15.10",

"hash": "sha256-9InxXpCfgnsvcNdRwWhQ74TpI24Osdr0lN0IwIULL3I="

}

@@ -16,8 +16,6 @@ except:

TYPE_CHECKING = False

if True:

from types import ModuleType

from typing import Any, Callable, Optional

PY2 = sys.version_info < (3,)

@@ -82,6 +80,7 @@ web/deps/prismd.css

web/deps/scp.woff2

web/deps/sha512.ac.js

web/deps/sha512.hw.js

web/iiam.gif

web/md.css

web/md.html

web/md.js

@@ -110,7 +109,6 @@ RES = set(zs.strip().split("\n"))

class EnvParams(object):

def __init__(self) -> None:

self.pkg: Optional[ModuleType] = None

self.t0 = time.time()

self.mod = ""

self.cfg = ""

@@ -50,6 +50,8 @@ from .util import (

PARTFTPY_VER,

PY_DESC,

PYFTPD_VER,

RAM_AVAIL,

RAM_TOTAL,

SQLITE_VER,

UNPLICATIONS,

Daemon,

@@ -218,8 +220,6 @@ def init_E(EE: EnvParams) -> None:

raise Exception("could not find a writable path for config")

assert __package__ # !rm

E.pkg = sys.modules[__package__]

E.mod = os.path.dirname(os.path.realpath(__file__))

if E.mod.endswith("__init__"):

E.mod = os.path.dirname(E.mod)

@@ -686,6 +686,8 @@ def get_sects():

\033[36mxbu\033[35m executes CMD before a file upload starts

\033[36mxau\033[35m executes CMD after a file upload finishes

\033[36mxiu\033[35m executes CMD after all uploads finish and volume is idle

\033[36mxbc\033[35m executes CMD before a file copy

\033[36mxac\033[35m executes CMD after a file copy

\033[36mxbr\033[35m executes CMD before a file rename/move

\033[36mxar\033[35m executes CMD after a file rename/move

\033[36mxbd\033[35m executes CMD before a file delete

@@ -782,7 +784,7 @@ def get_sects():

dedent(

"""

specify --exp or the "exp" volflag to enable placeholder expansions

in README.md / .prologue.html / .epilogue.html

in README.md / PREADME.md / .prologue.html / .epilogue.html

--exp-md (volflag exp_md) holds the list of placeholders which can be

expanded in READMEs, and --exp-lg (volflag exp_lg) likewise for logues;

@@ -898,7 +900,7 @@ def get_sects():

dedent(

"""

the mDNS protocol is multicast-based, which means there are thousands

of fun and intersesting ways for it to break unexpectedly

of fun and interesting ways for it to break unexpectedly

things to check if it does not work at all:

@@ -1007,6 +1009,7 @@ def add_upload(ap):

ap2.add_argument("--hardlink", action="store_true", help="enable hardlink-based dedup; will fallback on symlinks when that is impossible (across filesystems) (volflag=hardlink)")

ap2.add_argument("--hardlink-only", action="store_true", help="do not fallback to symlinks when a hardlink cannot be made (volflag=hardlinkonly)")

ap2.add_argument("--no-dupe", action="store_true", help="reject duplicate files during upload; only matches within the same volume (volflag=nodupe)")

ap2.add_argument("--no-clone", action="store_true", help="do not use existing data on disk to satisfy dupe uploads; reduces server HDD reads in exchange for much more network load (volflag=noclone)")

ap2.add_argument("--no-snap", action="store_true", help="disable snapshots -- forget unfinished uploads on shutdown; don't create .hist/up2k.snap files -- abandoned/interrupted uploads must be cleaned up manually")

ap2.add_argument("--snap-wri", metavar="SEC", type=int, default=300, help="write upload state to ./hist/up2k.snap every \033[33mSEC\033[0m seconds; allows resuming incomplete uploads after a server crash")

ap2.add_argument("--snap-drop", metavar="MIN", type=float, default=1440.0, help="forget unfinished uploads after \033[33mMIN\033[0m minutes; impossible to resume them after that (360=6h, 1440=24h)")

@@ -1018,7 +1021,7 @@ def add_upload(ap):

ap2.add_argument("--sparse", metavar="MiB", type=int, default=4, help="windows-only: minimum size of incoming uploads through up2k before they are made into sparse files")

ap2.add_argument("--turbo", metavar="LVL", type=int, default=0, help="configure turbo-mode in up2k client; [\033[32m-1\033[0m] = forbidden/always-off, [\033[32m0\033[0m] = default-off and warn if enabled, [\033[32m1\033[0m] = default-off, [\033[32m2\033[0m] = on, [\033[32m3\033[0m] = on and disable datecheck")

ap2.add_argument("--u2j", metavar="JOBS", type=int, default=2, help="web-client: number of file chunks to upload in parallel; 1 or 2 is good for low-latency (same-country) connections, 4-8 for android clients, 16 for cross-atlantic (max=64)")

ap2.add_argument("--u2sz", metavar="N,N,N", type=u, default="1,64,96", help="web-client: default upload chunksize (MiB); sets \033[33mmin,default,max\033[0m in the settings gui. Each HTTP POST will aim for this size. Cloudflare max is 96. Big values are good for cross-atlantic but may increase HDD fragmentation on some FS. Disable this optimization with [\033[32m1,1,1\033[0m]")

ap2.add_argument("--u2sz", metavar="N,N,N", type=u, default="1,64,96", help="web-client: default upload chunksize (MiB); sets \033[33mmin,default,max\033[0m in the settings gui. Each HTTP POST will aim for \033[33mdefault\033[0m, and never exceed \033[33mmax\033[0m. Cloudflare max is 96. Big values are good for cross-atlantic but may increase HDD fragmentation on some FS. Disable this optimization with [\033[32m1,1,1\033[0m]")

ap2.add_argument("--u2sort", metavar="TXT", type=u, default="s", help="upload order; [\033[32ms\033[0m]=smallest-first, [\033[32mn\033[0m]=alphabetical, [\033[32mfs\033[0m]=force-s, [\033[32mfn\033[0m]=force-n -- alphabetical is a bit slower on fiber/LAN but makes it easier to eyeball if everything went fine")

ap2.add_argument("--write-uplog", action="store_true", help="write POST reports to textfiles in working-directory")

@@ -1038,7 +1041,7 @@ def add_network(ap):

else:

ap2.add_argument("--freebind", action="store_true", help="allow listening on IPs which do not yet exist, for example if the network interfaces haven't finished going up. Only makes sense for IPs other than '0.0.0.0', '127.0.0.1', '::', and '::1'. May require running as root (unless net.ipv6.ip_nonlocal_bind)")

ap2.add_argument("--s-thead", metavar="SEC", type=int, default=120, help="socket timeout (read request header)")

ap2.add_argument("--s-tbody", metavar="SEC", type=float, default=186.0, help="socket timeout (read/write request/response bodies). Use 60 on fast servers (default is extremely safe). Disable with 0 if reverse-proxied for a 2%% speed boost")

ap2.add_argument("--s-tbody", metavar="SEC", type=float, default=128.0, help="socket timeout (read/write request/response bodies). Use 60 on fast servers (default is extremely safe). Disable with 0 if reverse-proxied for a 2%% speed boost")

ap2.add_argument("--s-rd-sz", metavar="B", type=int, default=256*1024, help="socket read size in bytes (indirectly affects filesystem writes; recommendation: keep equal-to or lower-than \033[33m--iobuf\033[0m)")

ap2.add_argument("--s-wr-sz", metavar="B", type=int, default=256*1024, help="socket write size in bytes")

ap2.add_argument("--s-wr-slp", metavar="SEC", type=float, default=0.0, help="debug: socket write delay in seconds")

@@ -1088,6 +1091,7 @@ def add_auth(ap):

ap2.add_argument("--ses-db", metavar="PATH", type=u, default=ses_db, help="where to store the sessions database (if you run multiple copyparty instances, make sure they use different DBs)")

ap2.add_argument("--ses-len", metavar="CHARS", type=int, default=20, help="session key length; default is 120 bits ((20//4)*4*6)")

ap2.add_argument("--no-ses", action="store_true", help="disable sessions; use plaintext passwords in cookies")

ap2.add_argument("--ipu", metavar="CIDR=USR", type=u, action="append", help="users with IP matching \033[33mCIDR\033[0m are auto-authenticated as username \033[33mUSR\033[0m; example: [\033[32m172.16.24.0/24=dave]")

def add_chpw(ap):

@@ -1201,6 +1205,8 @@ def add_hooks(ap):

ap2.add_argument("--xbu", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file upload starts")

ap2.add_argument("--xau", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file upload finishes")

ap2.add_argument("--xiu", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after all uploads finish and volume is idle")

ap2.add_argument("--xbc", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file copy")

ap2.add_argument("--xac", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file copy")

ap2.add_argument("--xbr", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file move/rename")

ap2.add_argument("--xar", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file move/rename")

ap2.add_argument("--xbd", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file delete")
