Compare commits

82 Commits

| SHA1 |
|---|
| a90dde94e1 |
| 7dfbfc7227 |
| b10843d051 |
| 520ac8f4dc |
| 537a6e50e9 |
| 2d0cbdf1a8 |
| 5afb562aa3 |
| db069c3d4a |
| fae40c7e2f |
| 0c43b592dc |
| 2ab8924e2d |
| 0e31cfa784 |
| 8f7ffcf350 |
| 9c8507a0fd |
| e9b2cab088 |
| d3ccacccb1 |
| df386c8fbc |
| 4d15dd6e17 |
| 56a0499636 |
| 10fc4768e8 |
| 2b63d7d10d |
| 1f177528c1 |
| fc3bbb70a3 |
| ce3cab0295 |
| c784e5285e |
| 2bf9055cae |
| 8aba5aed4f |
| 0ce7cf5e10 |
| 96edcbccd7 |
| 4603afb6de |
| 56317b00af |
| cacec9c1f3 |
| 44ee07f0b2 |
| 6a8d5e1731 |
| d9962f65b3 |
| 119e88d87b |
| 71d9e010d9 |
| 5718caa957 |
| efd8a32ed6 |
| b22d700e16 |
| ccdacea0c4 |
| 4bdcbc1cb5 |
| 833c6cf2ec |
| dd6dbdd90a |
| 63013cc565 |
| 912402364a |
| 159f51b12b |
| 7678a91b0e |
| b13899c63d |
| 3a0d882c5e |
| cb81f0ad6d |
| 518bacf628 |
| ca63b03e55 |
| cecef88d6b |
| 7ffd805a03 |
| a7e2a0c981 |
| 2a570bb4ca |
| 5ca8f0706d |
| a9b4436cdc |
| 5f91999512 |
| 9f000beeaf |
| ff0a71f212 |
| 22dfc6ec24 |
| 48147c079e |
| d715479ef6 |
| fc8298c468 |
| e94ca5dc91 |
| 114b71b751 |
| b2770a2087 |
| cba1878bb2 |
| a2e037d6af |
| 65a2b6a223 |
| 9ed799e803 |
| c1c0ecca13 |
| ee62836383 |
| 705f598b1a |
| 414de88925 |
| 53ffd245dd |
| cf1b756206 |
| 22b58e31ef |
| b7f9bf5a28 |
| aba680b6c2 |

README.md (61 changed lines)

```diff
@@ -47,6 +47,7 @@ turn almost any device into a file server with resumable uploads/downloads using
 * [file manager](#file-manager) - cut/paste, rename, and delete files/folders (if you have permission)
 * [shares](#shares) - share a file or folder by creating a temporary link
 * [batch rename](#batch-rename) - select some files and press `F2` to bring up the rename UI
+* [rss feeds](#rss-feeds) - monitor a folder with your RSS reader
 * [media player](#media-player) - plays almost every audio format there is
 * [audio equalizer](#audio-equalizer) - and [dynamic range compressor](https://en.wikipedia.org/wiki/Dynamic_range_compression)
 * [fix unreliable playback on android](#fix-unreliable-playback-on-android) - due to phone / app settings
```

```diff
@@ -80,6 +81,7 @@ turn almost any device into a file server with resumable uploads/downloads using
 * [event hooks](#event-hooks) - trigger a program on uploads, renames etc ([examples](./bin/hooks/))
 * [upload events](#upload-events) - the older, more powerful approach ([examples](./bin/mtag/))
 * [handlers](#handlers) - redefine behavior with plugins ([examples](./bin/handlers/))
+* [ip auth](#ip-auth) - autologin based on IP range (CIDR)
 * [identity providers](#identity-providers) - replace copyparty passwords with oauth and such
 * [user-changeable passwords](#user-changeable-passwords) - if permitted, users can change their own passwords
 * [using the cloud as storage](#using-the-cloud-as-storage) - connecting to an aws s3 bucket and similar
```

```diff
@@ -218,7 +220,7 @@ also see [comparison to similar software](./docs/versus.md)
 * upload
 * ☑ basic: plain multipart, ie6 support
 * ☑ [up2k](#uploading): js, resumable, multithreaded
-* **no filesize limit!** ...unless you use Cloudflare, then it's 383.9 GiB
+* **no filesize limit!** even on Cloudflare
 * ☑ stash: simple PUT filedropper
 * ☑ filename randomizer
 * ☑ write-only folders
```

```diff
@@ -426,7 +428,7 @@ configuring accounts/volumes with arguments:
 
 permissions:
 * `r` (read): browse folder contents, download files, download as zip/tar, see filekeys/dirkeys
-* `w` (write): upload files, move files *into* this folder
+* `w` (write): upload files, move/copy files *into* this folder
 * `m` (move): move files/folders *from* this folder
 * `d` (delete): delete files/folders
 * `.` (dots): user can ask to show dotfiles in directory listings
```

```diff
@@ -506,7 +508,8 @@ the browser has the following hotkeys (always qwerty)
 * `ESC` close various things
 * `ctrl-K` delete selected files/folders
 * `ctrl-X` cut selected files/folders
-* `ctrl-V` paste
+* `ctrl-C` copy selected files/folders to clipboard
+* `ctrl-V` paste (move/copy)
 * `Y` download selected files
 * `F2` [rename](#batch-rename) selected file/folder
 * when a file/folder is selected (in not-grid-view):
```

@@ -575,6 +578,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path (

|

||||

|

||||

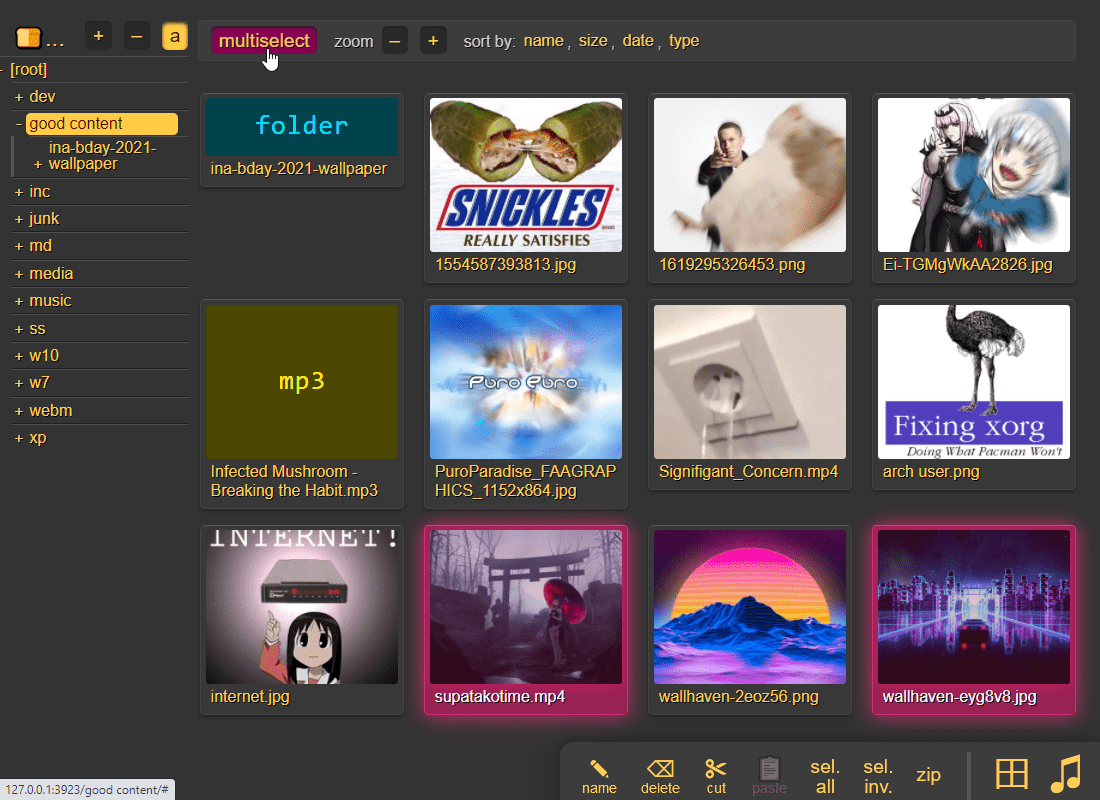

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails

|

||||

* can be made default globally with `--grid` or per-volume with volflag `grid`

|

||||

* enable by adding `?imgs` to a link, or disable with `?imgs=0`

|

||||

|

||||

|

||||

|

||||

```diff
@@ -653,7 +657,7 @@ up2k has several advantages:
 * uploads resume if you reboot your browser or pc, just upload the same files again
 * server detects any corruption; the client reuploads affected chunks
 * the client doesn't upload anything that already exists on the server
-* no filesize limit unless imposed by a proxy, for example Cloudflare, which blocks uploads over 383.9 GiB
+* no filesize limit, even when a proxy limits the request size (for example Cloudflare)
 * much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections
 * the last-modified timestamp of the file is preserved
 
```

```diff
@@ -689,6 +693,8 @@ note that since up2k has to read each file twice, `[🎈] bup` can *theoreticall
 
 if you are resuming a massive upload and want to skip hashing the files which already finished, you can enable `turbo` in the `[⚙️] config` tab, but please read the tooltip on that button
 
+if the server is behind a proxy which imposes a request-size limit, you can configure up2k to sneak below the limit with server-option `--u2sz` (the default is 96 MiB to support Cloudflare)
+
 
 ### file-search
 
```

```diff
@@ -752,10 +758,11 @@ file selection: click somewhere on the line (not the link itself), then:
 * shift-click another line for range-select
 
 * cut: select some files and `ctrl-x`
+* copy: select some files and `ctrl-c`
 * paste: `ctrl-v` in another folder
 * rename: `F2`
 
-you can move files across browser tabs (cut in one tab, paste in another)
+you can copy/move files across browser tabs (cut/copy in one tab, paste in another)
 
 
 ## shares
```

```diff
@@ -842,6 +849,30 @@ or a mix of both:
 the metadata keys you can use in the format field are the ones in the file-browser table header (whatever is collected with `-mte` and `-mtp`)
 
 
+## rss feeds
+
+monitor a folder with your RSS reader , optionally recursive
+
+must be enabled per-volume with volflag `rss` or globally with `--rss`
+
+the feed includes itunes metadata for use with podcast readers such as [AntennaPod](https://antennapod.org/)
+
+a feed example: https://cd.ocv.me/a/d2/d22/?rss&fext=mp3
+
+url parameters:
+
+* `pw=hunter2` for password auth
+* `recursive` to also include subfolders
+* `title=foo` changes the feed title (default: folder name)
+* `fext=mp3,opus` only include mp3 and opus files (default: all)
+* `nf=30` only show the first 30 results (default: 250)
+* `sort=m` sort by mtime (file last-modified), newest first (default)
+  * `u` = upload-time; NOTE: non-uploaded files have upload-time `0`
+  * `n` = filename
+  * `a` = filesize
+  * uppercase = reverse-sort; `M` = oldest file first
+
+
 ## media player
 
 plays almost every audio format there is (if the server has FFmpeg installed for on-demand transcoding)
```
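The url parameters above can be combined programmatically. Below is a minimal sketch. `rss_url` is a hypothetical helper, not part of copyparty. It assumes the server accepts the valueless `rss` and `recursive` flags exactly as they appear in the feed example, and it only percent-encodes parameter values. Called with `fext=["mp3"]`, it reproduces the README's example feed URL.

```python
from urllib.parse import quote


def rss_url(folder_url, pw=None, recursive=False, title=None, fext=None, nf=None, sort=None):
    """Build a copyparty rss feed url from the documented url parameters.

    hypothetical helper; the parameter names mirror the README, not a copyparty API
    """
    params = ["rss"]  # the feed itself is requested with a bare ?rss flag
    if pw:
        params.append("pw=" + quote(pw, safe=""))
    if recursive:
        params.append("recursive")  # valueless flag
    if title:
        params.append("title=" + quote(title, safe=""))
    if fext:
        # comma-separated extension list; "," is kept readable by marking it safe
        params.append("fext=" + quote(",".join(fext), safe=","))
    if nf is not None:
        params.append("nf=%d" % (nf,))
    if sort:
        params.append("sort=" + quote(sort, safe=""))  # m/u/n/a, uppercase = reverse
    return folder_url + "?" + "&".join(params)


print(rss_url("https://cd.ocv.me/a/d2/d22/", fext=["mp3"]))
# https://cd.ocv.me/a/d2/d22/?rss&fext=mp3
```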

```diff
@@ -1432,6 +1463,22 @@ redefine behavior with plugins ([examples](./bin/handlers/))
 replace 404 and 403 errors with something completely different (that's it for now)
 
 
+## ip auth
+
+autologin based on IP range (CIDR) , using the global-option `--ipu`
+
+for example, if everyone with an IP that starts with `192.168.123` should automatically log in as the user `spartacus`, then you can either specify `--ipu=192.168.123.0/24=spartacus` as a commandline option, or put this in a config file:
+
+```yaml
+[global]
+  ipu: 192.168.123.0/24=spartacus
+```
+
+repeat the option to map additional subnets
+
+**be careful with this one!** if you have a reverseproxy, then you definitely want to make sure you have [real-ip](#real-ip) configured correctly, and it's probably a good idea to nullmap the reverseproxy's IP just in case; so if your reverseproxy is sending requests from `172.24.27.9` then that would be `--ipu=172.24.27.9/32=`
+
+
 ## identity providers
 
 replace copyparty passwords with oauth and such
```
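To make the `--ipu` mapping concrete, the sketch below resolves a client IP against `CIDR=user` rules using Python's `ipaddress` module. It is not copyparty's implementation. Two behaviors are assumptions chosen for illustration: the most specific (longest-prefix) matching network wins, and an empty user means "no autologin" (the nullmap case).

```python
import ipaddress


def parse_ipu(rules):
    """Turn "CIDR=user" strings into (network, user) pairs; an empty user is a nullmap."""
    parsed = []
    for rule in rules:
        cidr, user = rule.split("=", 1)
        parsed.append((ipaddress.ip_network(cidr, strict=False), user))
    return parsed


def autologin_user(rules, ip):
    """Return the user for `ip`, or None if nothing matches or the best match is a nullmap.

    assumption: the most specific (longest-prefix) matching network wins
    """
    addr = ipaddress.ip_address(ip)
    best = None
    for net, user in rules:
        # `in` is simply False when the address and the network are different IP versions
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, user)
    if best is None or not best[1]:
        return None
    return best[1]


rules = parse_ipu(["192.168.123.0/24=spartacus", "172.24.27.9/32="])
print(autologin_user(rules, "192.168.123.45"))  # spartacus
print(autologin_user(rules, "172.24.27.9"))  # None (the reverse-proxy address is nullmapped)
```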

```diff
@@ -1640,6 +1687,7 @@ scrape_configs:
 currently the following metrics are available,
 * `cpp_uptime_seconds` time since last copyparty restart
 * `cpp_boot_unixtime_seconds` same but as an absolute timestamp
+* `cpp_active_dl` number of active downloads
 * `cpp_http_conns` number of open http(s) connections
 * `cpp_http_reqs` number of http(s) requests handled
 * `cpp_sus_reqs` number of 403/422/malicious requests
```

```diff
@@ -1889,6 +1937,9 @@ quick summary of more eccentric web-browsers trying to view a directory index:
 | **ie4** and **netscape** 4.0 | can browse, upload with `?b=u`, auth with `&pw=wark` |
 | **ncsa mosaic** 2.7 | does not get a pass, [pic1](https://user-images.githubusercontent.com/241032/174189227-ae816026-cf6f-4be5-a26e-1b3b072c1b2f.png) - [pic2](https://user-images.githubusercontent.com/241032/174189225-5651c059-5152-46e9-ac26-7e98e497901b.png) |
 | **SerenityOS** (7e98457) | hits a page fault, works with `?b=u`, file upload not-impl |
+| **nintendo 3ds** | can browse, upload, view thumbnails (thx bnjmn) |
+
+<p align="center"><img src="https://github.com/user-attachments/assets/88deab3d-6cad-4017-8841-2f041472b853" /></p>
 
 
 # client examples
```

```diff
@@ -2,7 +2,7 @@ standalone programs which are executed by copyparty when an event happens (uploa
 
 these programs either take zero arguments, or a filepath (the affected file), or a json message with filepath + additional info
 
-run copyparty with `--help-hooks` for usage details / hook type explanations (xm/xbu/xau/xiu/xbr/xar/xbd/xad/xban)
+run copyparty with `--help-hooks` for usage details / hook type explanations (xm/xbu/xau/xiu/xbc/xac/xbr/xar/xbd/xad/xban)
 
 > **note:** in addition to event hooks (the stuff described here), copyparty has another api to run your programs/scripts while providing way more information such as audio tags / video codecs / etc and optionally daisychaining data between scripts in a processing pipeline; if that's what you want then see [mtp plugins](../mtag/) instead
 
```

```diff
@@ -393,7 +393,8 @@ class Gateway(object):
         if r.status != 200:
             self.closeconn()
             info("http error %s reading dir %r", r.status, web_path)
-            raise FuseOSError(errno.ENOENT)
+            err = errno.ENOENT if r.status == 404 else errno.EIO
+            raise FuseOSError(err)
 
         ctype = r.getheader("Content-Type", "")
         if ctype == "application/json":
```
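The new error handling stops reporting every failed directory listing as ENOENT. Only a 404 now means "no such file", and every other non-200 status becomes a generic I/O error. Below is the same mapping as a standalone function, handy for checking what a FUSE caller would see for a given status. The name `status_to_errno` is hypothetical. The printed messages come from `os.strerror` and vary by platform.

```python
import errno
import os


def status_to_errno(status):
    """Mirror the hunk above: only a 404 means "no such file"; any other failure is EIO."""
    return errno.ENOENT if status == 404 else errno.EIO


for status in (404, 403, 500):
    code = status_to_errno(status)
    print(status, errno.errorcode[code], os.strerror(code))
```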

```diff
@@ -1128,7 +1129,7 @@ def main():
 
     # dircache is always a boost,
     # only want to disable it for tests etc,
-    cdn = 9 # max num dirs; 0=disable
+    cdn = 24 # max num dirs; keep larger than max dir depth; 0=disable
     cds = 1 # numsec until an entry goes stale
 
     where = "local directory"
```
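`cdn` caps how many directory listings are cached, and `cds` sets how many seconds an entry stays fresh. partyfuse's actual cache code is not part of this diff. The class below is a hypothetical sketch of a cache with those two settings: the oldest entry is evicted first, and `cdn = 0` disables caching. It takes an injectable clock so the staleness rule is easy to test.

```python
import time
from collections import OrderedDict


class DirCache(object):
    """hypothetical directory-listing cache bounded by count (cdn) and age (cds)"""

    def __init__(self, cdn=24, cds=1, clock=time.time):
        self.cdn = cdn  # max num dirs; 0 disables the cache
        self.cds = cds  # seconds until an entry goes stale
        self.clock = clock
        self.entries = OrderedDict()  # path -> (timestamp, listing), oldest first

    def get(self, path):
        hit = self.entries.get(path)
        if hit is None:
            return None
        ts, listing = hit
        if self.clock() - ts > self.cds:
            del self.entries[path]  # stale; the caller must fetch a fresh listing
            return None
        return listing

    def put(self, path, listing):
        if not self.cdn:
            return
        self.entries.pop(path, None)
        self.entries[path] = (self.clock(), listing)
        while len(self.entries) > self.cdn:
            self.entries.popitem(last=False)  # evict the oldest entry
```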

bin/u2c.py (201 changed lines)

```diff
@@ -1,8 +1,8 @@
 #!/usr/bin/env python3
 from __future__ import print_function, unicode_literals
 
-S_VERSION = "2.1"
-S_BUILD_DT = "2024-09-23"
+S_VERSION = "2.6"
+S_BUILD_DT = "2024-11-10"
 
 """
 u2c.py: upload to copyparty
```

```diff
@@ -62,6 +62,9 @@ else:
 
     unicode = str
 
+
+WTF8 = "replace" if PY2 else "surrogateescape"
+
 VT100 = platform.system() != "Windows"
 
 
```
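`WTF8` picks the error handler used when a filename that is not valid UTF-8 gets decoded. On Python 3 it is `surrogateescape`. Unlike `replace`, it lets the original bytes be recovered when the name is encoded again. The demonstration below uses a made-up filename.

```python
# a filename containing a byte (0xff) that is not valid utf-8
raw = b"caf\xff.txt"

# "replace" substitutes U+FFFD, so the original byte is lost for good
lossy = raw.decode("utf-8", "replace")
print(lossy == "caf\ufffd.txt")  # True
print(lossy.encode("utf-8") == raw)  # False

# "surrogateescape" carries the byte through as a lone surrogate (U+DCFF)
escaped = raw.decode("utf-8", "surrogateescape")
print(escaped == "caf\udcff.txt")  # True
print(escaped.encode("utf-8", "surrogateescape") == raw)  # True
```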

```diff
@@ -151,6 +154,7 @@ class HCli(object):
         self.tls = tls
         self.verify = ar.te or not ar.td
         self.conns = []
+        self.hconns = []
         if tls:
             import ssl
 
```

```diff
@@ -170,7 +174,7 @@ class HCli(object):
             "User-Agent": "u2c/%s" % (S_VERSION,),
         }
 
-    def _connect(self):
+    def _connect(self, timeout):
         args = {}
         if PY37:
             args["blocksize"] = 1048576
```

```diff
@@ -182,9 +186,11 @@ class HCli(object):
         if self.ctx:
             args = {"context": self.ctx}
 
-        return C(self.addr, self.port, timeout=999, **args)
+        return C(self.addr, self.port, timeout=timeout, **args)
 
     def req(self, meth, vpath, hdrs, body=None, ctype=None):
+        now = time.time()
+
         hdrs.update(self.base_hdrs)
         if self.ar.a:
             hdrs["PW"] = self.ar.a
```

```diff
@@ -195,7 +201,11 @@ class HCli(object):
             0 if not body else body.len if hasattr(body, "len") else len(body)
         )
 
-        c = self.conns.pop() if self.conns else self._connect()
+        # large timeout for handshakes (safededup)
+        conns = self.hconns if ctype == MJ else self.conns
+        while conns and self.ar.cxp < now - conns[0][0]:
+            conns.pop(0)[1].close()
+        c = conns.pop()[1] if conns else self._connect(999 if ctype == MJ else 128)
         try:
             c.request(meth, vpath, body, hdrs)
             if PY27:
```
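The new connection handling stores each idle connection together with the time it was returned. Before reuse, it closes any connection that has been idle longer than `--cxp` seconds, since the server has presumably dropped it by then. Handshake requests (`ctype == MJ`) get their own pool with a longer timeout. The sketch below isolates that bookkeeping. `IdlePool` and `FakeConn` are hypothetical stand-ins for `HCli` and an http connection, and timestamps are passed in explicitly instead of calling `time.time()`.

```python
class FakeConn(object):
    """stand-in for an http connection; only tracks whether it was closed"""

    def __init__(self, name):
        self.name = name
        self.closed = False

    def close(self):
        self.closed = True


class IdlePool(object):
    """hypothetical pool mirroring HCli.req: (timestamp, conn) pairs, oldest first"""

    def __init__(self, cxp, connect):
        self.cxp = cxp  # seconds after which an idle connection is assumed expired
        self.connect = connect
        self.conns = []

    def get(self, now):
        # close connections that have been idle for longer than cxp seconds
        while self.conns and self.cxp < now - self.conns[0][0]:
            self.conns.pop(0)[1].close()
        # reuse the most recently returned connection, else open a new one
        return self.conns.pop()[1] if self.conns else self.connect()

    def put(self, conn, now):
        self.conns.append((now, conn))


names = iter("abc")
pool = IdlePool(cxp=57, connect=lambda: FakeConn(next(names)))
c1 = pool.get(now=0)  # pool is empty: opens "a"
pool.put(c1, now=0)
c2 = pool.get(now=10)  # idle for 10s, below 57s: "a" is reused
pool.put(c2, now=10)
c3 = pool.get(now=100)  # idle for 90s, above 57s: "a" is closed and "b" is opened
print(c2.name, c1.closed, c3.name)  # a True b
```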

```diff
@@ -204,8 +214,15 @@ class HCli(object):
             rsp = c.getresponse()
 
             data = rsp.read()
-            self.conns.append(c)
+            conns.append((time.time(), c))
             return rsp.status, data.decode("utf-8")
+        except http_client.BadStatusLine:
+            if self.ar.cxp > 4:
+                t = "\nWARNING: --cxp probably too high; reducing from %d to 4"
+                print(t % (self.ar.cxp,))
+                self.ar.cxp = 4
+            c.close()
+            raise
         except:
             c.close()
             raise
```

```diff
@@ -228,7 +245,7 @@ class File(object):
         self.lmod = lmod # type: float
 
         self.abs = os.path.join(top, rel) # type: bytes
-        self.name = self.rel.split(b"/")[-1].decode("utf-8", "replace") # type: str
+        self.name = self.rel.split(b"/")[-1].decode("utf-8", WTF8) # type: str
 
         # set by get_hashlist
         self.cids = [] # type: list[tuple[str, int, int]] # [ hash, ofs, sz ]
```

```diff
@@ -267,10 +284,41 @@ class FileSlice(object):
                 raise Exception(9)
             tlen += clen
 
-        self.len = tlen
+        self.len = self.tlen = tlen
         self.cdr = self.car + self.len
         self.ofs = 0 # type: int
-        self.f = open(file.abs, "rb", 512 * 1024)
+
+        self.f = None
+        self.seek = self._seek0
+        self.read = self._read0
+
+    def subchunk(self, maxsz, nth):
+        if self.tlen <= maxsz:
+            return -1
+
+        if not nth:
+            self.car0 = self.car
+            self.cdr0 = self.cdr
+
+        self.car = self.car0 + maxsz * nth
+        if self.car >= self.cdr0:
+            return -2
+
+        self.cdr = self.car + min(self.cdr0 - self.car, maxsz)
+        self.len = self.cdr - self.car
+        self.seek(0)
+        return nth
+
+    def unsub(self):
+        self.car = self.car0
+        self.cdr = self.cdr0
+        self.len = self.tlen
+
+    def _open(self):
+        self.seek = self._seek
+        self.read = self._read
+
+        self.f = open(self.file.abs, "rb", 512 * 1024)
         self.f.seek(self.car)
 
         # https://stackoverflow.com/questions/4359495/what-is-exactly-a-file-like-object-in-python
```

```diff
@@ -282,10 +330,15 @@ class FileSlice(object):
         except:
             pass # py27 probably
 
+    def close(self, *a, **ka):
+        return # until _open
+
     def tell(self):
         return self.ofs
 
-    def seek(self, ofs, wh=0):
+    def _seek(self, ofs, wh=0):
+        assert self.f # !rm
+
         if wh == 1:
             ofs = self.ofs + ofs
         elif wh == 2:
```

```diff
@@ -299,12 +352,22 @@ class FileSlice(object):
         self.ofs = ofs
         self.f.seek(self.car + ofs)
 
-    def read(self, sz):
+    def _read(self, sz):
+        assert self.f # !rm
+
         sz = min(sz, self.len - self.ofs)
         ret = self.f.read(sz)
         self.ofs += len(ret)
         return ret
+
+    def _seek0(self, ofs, wh=0):
+        self._open()
+        return self.seek(ofs, wh)
+
+    def _read0(self, sz):
+        self._open()
+        return self.read(sz)
 
 
 class MTHash(object):
     def __init__(self, cores):
```

```diff
@@ -557,13 +620,17 @@ def walkdir(err, top, excl, seen):
     for ap, inf in sorted(statdir(err, top)):
         if excl.match(ap):
             continue
-        yield ap, inf
         if stat.S_ISDIR(inf.st_mode):
+            yield ap, inf
             try:
                 for x in walkdir(err, ap, excl, seen):
                     yield x
             except Exception as ex:
                 err.append((ap, str(ex)))
+        elif stat.S_ISREG(inf.st_mode):
+            yield ap, inf
+        else:
+            err.append((ap, "irregular filetype 0%o" % (inf.st_mode,)))
 
 
 def walkdirs(err, tops, excl):
```
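After this change, `walkdir` only yields directories and regular files. Anything else (FIFOs, sockets, device nodes) is reported as an error instead of being queued for upload. Below is a trimmed-down, hypothetical equivalent built on `os.scandir` that shows the classification. Unlike the original, it has no exclude pattern and no symlink-loop tracking, so it should not be pointed at trees containing symlink loops.

```python
import os
import stat


def walk_regular(err, top):
    """yield (path, stat) for dirs and regular files; report everything else in err"""
    with os.scandir(top) as it:
        entries = sorted(it, key=lambda e: e.name)
    for entry in entries:
        ap = entry.path
        try:
            inf = os.stat(ap)  # follows symlinks, like a plain stat()
        except OSError as ex:
            err.append((ap, str(ex)))  # e.g. a dangling symlink
            continue
        if stat.S_ISDIR(inf.st_mode):
            yield ap, inf
            try:
                for x in walk_regular(err, ap):
                    yield x
            except Exception as ex:
                err.append((ap, str(ex)))
        elif stat.S_ISREG(inf.st_mode):
            yield ap, inf
        else:
            err.append((ap, "irregular filetype 0%o" % (inf.st_mode,)))
```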

```diff
@@ -609,11 +676,12 @@ def walkdirs(err, tops, excl):
 
 # mostly from copyparty/util.py
 def quotep(btxt):
+    # type: (bytes) -> bytes
     quot1 = quote(btxt, safe=b"/")
     if not PY2:
         quot1 = quot1.encode("ascii")
 
-    return quot1.replace(b" ", b"+") # type: ignore
+    return quot1.replace(b" ", b"%20") # type: ignore
 
 
 # from copyparty/util.py
```
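`quotep` percent-encodes a bytes path for use in a URL and keeps `/` as the separator. The Python-3-only sketch below uses `urllib.parse.quote_from_bytes` directly. That u2c binds this function to the name `quote` on Python 3 is an assumption here, since the import is not shown in this diff. `quote_from_bytes` already writes a space as `%20` and a literal `+` as `%2B`, so the result round-trips through `unquote_to_bytes`. That function, unlike form decoding, never turns `+` into a space.

```python
from urllib.parse import quote_from_bytes, unquote_to_bytes


def quotep(btxt):
    # type: (bytes) -> bytes
    """percent-encode a bytes path, keeping "/" as the separator (python 3 only)"""
    quot1 = quote_from_bytes(btxt, safe=b"/").encode("ascii")
    return quot1.replace(b" ", b"%20")  # no-op here: spaces are already %20


path = b"my music/a+b \xff.flac"
print(quotep(path))  # b'my%20music/a%2Bb%20%FF.flac'
print(unquote_to_bytes(quotep(path)) == path)  # True
```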

```diff
@@ -641,7 +709,7 @@ def up2k_chunksize(filesize):
     while True:
         for mul in [1, 2]:
             nchunks = math.ceil(filesize * 1.0 / chunksize)
-            if nchunks <= 256 or (chunksize >= 32 * 1024 * 1024 and nchunks < 4096):
+            if nchunks <= 256 or (chunksize >= 32 * 1024 * 1024 and nchunks <= 4096):
                 return chunksize
 
             chunksize += stepsize
```
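The hunk shows only the loop of `up2k_chunksize`. The sketch below fills in the rest. It assumes a start of 1 MiB chunks with a 512 KiB step; those initial values are not visible in this diff. The step doubles after every second increment, so the candidate sizes run 1, 1.5, 2, 3, 4, 6, 8, 12, 16, 24, 32, 48 MiB and so on. The first size that yields at most 256 chunks is used, or at most 4096 chunks once chunks are 32 MiB or larger. The `<=` fix matters for exactly aligned files. A 128 GiB file is exactly 4096 chunks of 32 MiB, which the old `< 4096` rejected, pushing the file up to 48 MiB chunks.

```python
import math


def up2k_chunksize(filesize):
    # assumed starting values; only the loop below appears in the diff
    chunksize = 1024 * 1024
    stepsize = 512 * 1024
    while True:
        for mul in [1, 2]:
            nchunks = math.ceil(filesize * 1.0 / chunksize)
            if nchunks <= 256 or (chunksize >= 32 * 1024 * 1024 and nchunks <= 4096):
                return chunksize

            chunksize += stepsize
            stepsize *= mul  # the step doubles after every second increment


MiB = 1024 * 1024
print(up2k_chunksize(256 * MiB) // 1024)  # 1024 (256 chunks of 1 MiB)
print(up2k_chunksize(256 * MiB + 1) // 1024)  # 1536 (1.5 MiB chunks)
print(up2k_chunksize(128 * 1024 * MiB) // MiB)  # 32
```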

```diff
@@ -720,7 +788,7 @@ def handshake(ar, file, search):
         url = file.url
     else:
         if b"/" in file.rel:
-            url = quotep(file.rel.rsplit(b"/", 1)[0]).decode("utf-8", "replace")
+            url = quotep(file.rel.rsplit(b"/", 1)[0]).decode("utf-8")
         else:
             url = ""
         url = ar.vtop + url
```

```diff
@@ -728,6 +796,7 @@ def handshake(ar, file, search):
     while True:
         sc = 600
         txt = ""
+        t0 = time.time()
         try:
             zs = json.dumps(req, separators=(",\n", ": "))
             sc, txt = web.req("POST", url, {}, zs.encode("utf-8"), MJ)
```

```diff
@@ -752,7 +821,9 @@ def handshake(ar, file, search):
                 print("\nERROR: login required, or wrong password:\n%s" % (txt,))
                 raise BadAuth()
 
-            eprint("handshake failed, retrying: %s\n %s\n\n" % (file.name, em))
+            t = "handshake failed, retrying: %s\n t0=%.3f t1=%.3f td=%.3f\n %s\n\n"
+            now = time.time()
+            eprint(t % (file.name, t0, now, now - t0, em))
             time.sleep(ar.cd)
 
         try:
```

```diff
@@ -763,15 +834,15 @@ def handshake(ar, file, search):
     if search:
         return r["hits"], False
 
-    file.url = r["purl"]
+    file.url = quotep(r["purl"].encode("utf-8", WTF8)).decode("utf-8")
     file.name = r["name"]
     file.wark = r["wark"]
 
     return r["hash"], r["sprs"]
 
 
-def upload(fsl, stats):
-    # type: (FileSlice, str) -> None
+def upload(fsl, stats, maxsz):
+    # type: (FileSlice, str, int) -> None
     """upload a range of file data, defined by one or more `cid` (chunk-hash)"""
 
     ctxt = fsl.cids[0]
```

```diff
@@ -789,21 +860,34 @@ def upload(fsl, stats):
     if stats:
         headers["X-Up2k-Stat"] = stats
 
+    nsub = 0
     try:
-        sc, txt = web.req("POST", fsl.file.url, headers, fsl, MO)
+        while nsub != -1:
+            nsub = fsl.subchunk(maxsz, nsub)
+            if nsub == -2:
+                return
+            if nsub >= 0:
+                headers["X-Up2k-Subc"] = str(maxsz * nsub)
+                headers.pop(CLEN, None)
+                nsub += 1
 
-        if sc == 400:
-            if (
-                "already being written" in txt
-                or "already got that" in txt
-                or "only sibling chunks" in txt
-            ):
-                fsl.file.nojoin = 1
+            sc, txt = web.req("POST", fsl.file.url, headers, fsl, MO)
 
-        if sc >= 400:
-            raise Exception("http %s: %s" % (sc, txt))
+            if sc == 400:
+                if (
+                    "already being written" in txt
+                    or "already got that" in txt
+                    or "only sibling chunks" in txt
+                ):
+                    fsl.file.nojoin = 1
+
+            if sc >= 400:
+                raise Exception("http %s: %s" % (sc, txt))
     finally:
-        fsl.f.close()
+        if fsl.f:
+            fsl.f.close()
+            if nsub != -1:
+                fsl.unsub()
 
 
 class Ctl(object):
```
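The new `maxsz` argument splits any slice longer than `--szm` (96 MiB by default, converted to bytes in `main`) into several POSTs. Each POST carries an `X-Up2k-Subc` header holding the byte offset of that piece inside the slice. The sketch below replays the offset arithmetic of `FileSlice.subchunk` and the `upload` loop without any I/O. `plan_subchunks` is a hypothetical helper, not part of u2c.

```python
def plan_subchunks(car, cdr, maxsz):
    """list the POSTs for the byte range [car, cdr) of a file, capped at maxsz bytes each

    returns (subc_header, start, end) tuples; subc_header is None when no split is needed
    """
    tlen = cdr - car
    if tlen <= maxsz:
        return [(None, car, cdr)]  # subchunk() returns -1: one plain POST

    posts = []
    nth = 0
    while True:
        start = car + maxsz * nth
        if start >= cdr:
            break  # subchunk() returns -2: every piece has been sent
        end = start + min(cdr - start, maxsz)
        # X-Up2k-Subc carries the offset of this piece within the range
        posts.append((str(maxsz * nth), start, end))
        nth += 1
    return posts


MiB = 1024 * 1024
for post in plan_subchunks(0, 200 * MiB, 96 * MiB):
    print(post)
# ('0', 0, 100663296)
# ('100663296', 100663296, 201326592)
# ('201326592', 201326592, 209715200)
```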

```diff
@@ -869,8 +953,8 @@ class Ctl(object):
         self.hash_b = 0
         self.up_f = 0
         self.up_c = 0
-        self.up_b = 0
-        self.up_br = 0
+        self.up_b = 0 # num bytes handled
+        self.up_br = 0 # num bytes actually transferred
         self.uploader_busy = 0
         self.serialized = False
 
```

```diff
@@ -935,7 +1019,7 @@ class Ctl(object):
                 print(" %d up %s" % (ncs - nc, cid))
                 stats = "%d/0/0/%d" % (nf, self.nfiles - nf)
                 fslice = FileSlice(file, [cid])
-                upload(fslice, stats)
+                upload(fslice, stats, self.ar.szm)
 
             print(" ok!")
             if file.recheck:
```

```diff
@@ -1006,6 +1090,8 @@ class Ctl(object):
 
             spd = humansize(spd)
             self.eta = str(datetime.timedelta(seconds=int(eta)))
+            if eta > 2591999:
+                self.eta = self.eta.split(",")[0] # truncate HH:MM:SS
             sleft = humansize(self.nbytes - self.up_b)
             nleft = self.nfiles - self.up_f
             tail = "\033[K\033[u" if VT100 and not self.ar.ns else "\r"
```
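The ETA is rendered with `str(datetime.timedelta(...))`, which includes a day count (`29 days, 23:59:59`) once the ETA exceeds a day. The new check drops the clock part only from 30 days (2592000 seconds) onward, hence the `> 2591999` comparison. Below is the same formatting as a standalone function. `fmt_eta` is a hypothetical name.

```python
import datetime


def fmt_eta(eta):
    s = str(datetime.timedelta(seconds=int(eta)))
    if eta > 2591999:
        s = s.split(",")[0]  # e.g. "30 days, 0:00:00" -> "30 days"
    return s


print(fmt_eta(3725))  # 1:02:05
print(fmt_eta(2591999))  # 29 days, 23:59:59
print(fmt_eta(2592000))  # 30 days
```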

```diff
@@ -1013,11 +1099,14 @@ class Ctl(object):
             t = "%s eta @ %s/s, %s, %d# left\033[K" % (self.eta, spd, sleft, nleft)
             eprint(txt + "\033]0;{0}\033\\\r{0}{1}".format(t, tail))
 
+        if self.ar.wlist:
+            self.at_hash = time.time() - self.t0
+
         if self.hash_b and self.at_hash:
             spd = humansize(self.hash_b / self.at_hash)
             eprint("\nhasher: %.2f sec, %s/s\n" % (self.at_hash, spd))
-        if self.up_b and self.at_up:
-            spd = humansize(self.up_b / self.at_up)
+        if self.up_br and self.at_up:
+            spd = humansize(self.up_br / self.at_up)
             eprint("upload: %.2f sec, %s/s\n" % (self.at_up, spd))
 
         if not self.recheck:
```

```diff
@@ -1051,7 +1140,7 @@ class Ctl(object):
                 print(" ls ~{0}".format(srd))
                 zt = (
                     self.ar.vtop,
-                    quotep(rd.replace(b"\\", b"/")).decode("utf-8", "replace"),
+                    quotep(rd.replace(b"\\", b"/")).decode("utf-8"),
                 )
                 sc, txt = web.req("GET", "%s%s?ls<&dots" % zt, {})
                 if sc >= 400:
```

```diff
@@ -1060,13 +1149,16 @@ class Ctl(object):
                 j = json.loads(txt)
                 for f in j["dirs"] + j["files"]:
                     rfn = f["href"].split("?")[0].rstrip("/")
-                    ls[unquote(rfn.encode("utf-8", "replace"))] = f
+                    ls[unquote(rfn.encode("utf-8", WTF8))] = f
             except Exception as ex:
                 print(" mkdir ~{0} ({1})".format(srd, ex))
 
             if self.ar.drd:
                 dp = os.path.join(top, rd)
-                lnodes = set(os.listdir(dp))
+                try:
+                    lnodes = set(os.listdir(dp))
+                except:
+                    lnodes = list(ls) # fs eio; don't delete
                 if ptn:
                     zs = dp.replace(sep, b"/").rstrip(b"/") + b"/"
                     zls = [zs + x for x in lnodes]
```

```diff
@@ -1074,7 +1166,7 @@ class Ctl(object):
                     lnodes = [x.split(b"/")[-1] for x in zls]
                 bnames = [x for x in ls if x not in lnodes and x != b".hist"]
                 vpath = self.ar.url.split("://")[-1].split("/", 1)[-1]
-                names = [x.decode("utf-8", "replace") for x in bnames]
+                names = [x.decode("utf-8", WTF8) for x in bnames]
                 locs = [vpath + srd + "/" + x for x in names]
                 while locs:
                     req = locs
```

```diff
@@ -1136,10 +1228,16 @@ class Ctl(object):
                 self.up_b = self.hash_b
 
             if self.ar.wlist:
+                vp = file.rel.decode("utf-8")
+                if self.ar.chs:
+                    zsl = [
+                        "%s %d %d" % (zsii[0], n, zsii[1])
+                        for n, zsii in enumerate(file.cids)
+                    ]
+                    print("chs: %s\n%s" % (vp, "\n".join(zsl)))
                 zsl = [self.ar.wsalt, str(file.size)] + [x[0] for x in file.kchunks]
                 zb = hashlib.sha512("\n".join(zsl).encode("utf-8")).digest()[:33]
                 wark = ub64enc(zb).decode("utf-8")
-                vp = file.rel.decode("utf-8")
                 if self.ar.jw:
                     print("%s %s" % (wark, vp))
                 else:
```
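In file-ID mode (url `-`), u2c prints one wark per file. A wark is the first 33 bytes of a SHA-512 over the salt, the file size and the chunk hashes joined by newlines, base64-encoded to 44 characters. The standalone sketch below assumes `ub64enc` is URL-safe base64 (`base64.urlsafe_b64encode`). The chunk-hash strings are made-up placeholders, not real up2k chunk hashes.

```python
import base64
import hashlib


def calc_wark(salt, size, chunk_hashes):
    """hash salt, size and chunk hashes into a 44-char file identifier"""
    zsl = [salt, str(size)] + list(chunk_hashes)
    zb = hashlib.sha512("\n".join(zsl).encode("utf-8")).digest()[:33]
    # assumption: ub64enc is urlsafe base64; 33 bytes encode to 44 chars without padding
    return base64.urlsafe_b64encode(zb).decode("utf-8")


wark = calc_wark("hunter2", 1048576, ["placeholder-chunk-hash"])
print(len(wark))  # 44
```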

```diff
@@ -1177,6 +1275,7 @@ class Ctl(object):
                 self.q_upload.put(None)
                 return
 
+            chunksz = up2k_chunksize(file.size)
             upath = file.abs.decode("utf-8", "replace")
             if not VT100:
                 upath = upath.lstrip("\\?")
```

```diff
@@ -1236,9 +1335,14 @@ class Ctl(object):
                 file.up_c -= len(hs)
                 for cid in hs:
                     sz = file.kchunks[cid][1]
+                    self.up_br -= sz
                     self.up_b -= sz
                     file.up_b -= sz
 
+            if hs and not file.up_b:
+                # first hs of this file; is this an upload resume?
+                file.up_b = chunksz * max(0, len(file.kchunks) - len(hs))
+
             file.ucids = hs
 
             if not hs:
```

```diff
@@ -1252,7 +1356,7 @@ class Ctl(object):
                         c1 = c2 = ""
 
                     spd_h = humansize(file.size / file.t_hash, True)
-                    if file.up_b:
+                    if file.up_c:
                         t_up = file.t1_up - file.t0_up
                         spd_u = humansize(file.size / t_up, True)
 
```

```diff
@@ -1262,14 +1366,13 @@ class Ctl(object):
                         t = " found %s %s(%.2fs,%s/s)%s"
                         print(t % (upath, c1, file.t_hash, spd_h, c2))
                 else:
-                    kw = "uploaded" if file.up_b else " found"
+                    kw = "uploaded" if file.up_c else " found"
                     print("{0} {1}".format(kw, upath))
 
                 self._check_if_done()
                 continue
 
-            chunksz = up2k_chunksize(file.size)
-            njoin = (self.ar.sz * 1024 * 1024) // chunksz
+            njoin = self.ar.sz // chunksz
             cs = hs[:]
             while cs:
                 fsl = FileSlice(file, cs[:1])
```

```diff
@@ -1321,7 +1424,7 @@ class Ctl(object):
             )
 
             try:
-                upload(fsl, stats)
+                upload(fsl, stats, self.ar.szm)
             except Exception as ex:
                 t = "upload failed, retrying: %s #%s+%d (%s)\n"
                 eprint(t % (file.name, cids[0][:8], len(cids) - 1, ex))
```

```diff
@@ -1365,7 +1468,7 @@ def main():
     cores = (os.cpu_count() if hasattr(os, "cpu_count") else 0) or 2
     hcores = min(cores, 3) # 4% faster than 4+ on py3.9 @ r5-4500U
 
-    ver = "{0} v{1} https://youtu.be/BIcOO6TLKaY".format(S_BUILD_DT, S_VERSION)
+    ver = "{0}, v{1}".format(S_BUILD_DT, S_VERSION)
     if "--version" in sys.argv:
         print(ver)
         return
```

```diff
@@ -1403,14 +1506,17 @@ source file/folder selection uses rsync syntax, meaning that:
 
     ap = app.add_argument_group("file-ID calculator; enable with url '-' to list warks (file identifiers) instead of upload/search")
     ap.add_argument("--wsalt", type=unicode, metavar="S", default="hunter2", help="salt to use when creating warks; must match server config")
+    ap.add_argument("--chs", action="store_true", help="verbose (print the hash/offset of each chunk in each file)")
     ap.add_argument("--jw", action="store_true", help="just identifier+filepath, not mtime/size too")
 
     ap = app.add_argument_group("performance tweaks")
     ap.add_argument("-j", type=int, metavar="CONNS", default=2, help="parallel connections")
     ap.add_argument("-J", type=int, metavar="CORES", default=hcores, help="num cpu-cores to use for hashing; set 0 or 1 for single-core hashing")
     ap.add_argument("--sz", type=int, metavar="MiB", default=64, help="try to make each POST this big")
+    ap.add_argument("--szm", type=int, metavar="MiB", default=96, help="max size of each POST (default is cloudflare max)")
     ap.add_argument("-nh", action="store_true", help="disable hashing while uploading")
     ap.add_argument("-ns", action="store_true", help="no status panel (for slow consoles and macos)")
+    ap.add_argument("--cxp", type=float, metavar="SEC", default=57, help="assume http connections expired after SEConds")
     ap.add_argument("--cd", type=float, metavar="SEC", default=5, help="delay before reattempting a failed handshake/upload")
     ap.add_argument("--safe", action="store_true", help="use simple fallback approach")
     ap.add_argument("-z", action="store_true", help="ZOOMIN' (skip uploading files if they exist at the destination with the ~same last-modified timestamp, so same as yolo / turbo with date-chk but even faster)")
```

```diff
@@ -1436,6 +1542,9 @@ source file/folder selection uses rsync syntax, meaning that:
     if ar.dr:
         ar.ow = True
 
+    ar.sz *= 1024 * 1024
+    ar.szm *= 1024 * 1024
+
     ar.x = "|".join(ar.x or [])
 
     setattr(ar, "wlist", ar.url == "-")
```

```diff
@@ -1,6 +1,6 @@
 # Maintainer: icxes <dev.null@need.moe>
 pkgname=copyparty
-pkgver="1.15.5"
+pkgver="1.16.1"
 pkgrel=1
 pkgdesc="File server with accelerated resumable uploads, dedup, WebDAV, FTP, TFTP, zeroconf, media indexer, thumbnails++"
 arch=("any")
```

@@ -21,7 +21,7 @@ optdepends=("ffmpeg: thumbnails for videos, images (slower) and audio, music tag
)
source=("https://github.com/9001/${pkgname}/releases/download/v${pkgver}/${pkgname}-${pkgver}.tar.gz")
backup=("etc/${pkgname}.d/init" )
sha256sums=("c380ad1d20787d80077123ced583d45bc26467386bbceac35436662f435a6b8c")
sha256sums=("48506881f7920ad9d528763833a8cc3d1b6df39402bbe1cb90c3ff58c865dfc6")

build() {
cd "${srcdir}/${pkgname}-${pkgver}"
@@ -1,5 +1,5 @@
{
"url": "https://github.com/9001/copyparty/releases/download/v1.15.5/copyparty-sfx.py",
"version": "1.15.5",
"hash": "sha256-2JcXSbtyEn+EtpyQTcE9U4XuckVKvAowVGqBZ110Jt4="
"url": "https://github.com/9001/copyparty/releases/download/v1.16.1/copyparty-sfx.py",
"version": "1.16.1",
"hash": "sha256-vlxAuVtd/o11CIC6E6K6UDUdtYDzQ6u7kG3Qc3eqJ+U="
}
@@ -80,6 +80,7 @@ web/deps/prismd.css
web/deps/scp.woff2
web/deps/sha512.ac.js
web/deps/sha512.hw.js
web/iiam.gif
web/md.css
web/md.html
web/md.js
@@ -50,6 +50,8 @@ from .util import (
PARTFTPY_VER,
PY_DESC,
PYFTPD_VER,
RAM_AVAIL,
RAM_TOTAL,
SQLITE_VER,
UNPLICATIONS,
Daemon,
@@ -684,6 +686,8 @@ def get_sects():
\033[36mxbu\033[35m executes CMD before a file upload starts
\033[36mxau\033[35m executes CMD after a file upload finishes
\033[36mxiu\033[35m executes CMD after all uploads finish and volume is idle
\033[36mxbc\033[35m executes CMD before a file copy
\033[36mxac\033[35m executes CMD after a file copy
\033[36mxbr\033[35m executes CMD before a file rename/move
\033[36mxar\033[35m executes CMD after a file rename/move
\033[36mxbd\033[35m executes CMD before a file delete
@@ -874,8 +878,9 @@ def get_sects():
use argon2id with timecost 3, 256 MiB, 4 threads, version 19 (0x13/v1.3)

\033[36m--ah-alg scrypt\033[0m # which is the same as:
\033[36m--ah-alg scrypt,13,2,8,4\033[0m
use scrypt with cost 2**13, 2 iterations, blocksize 8, 4 threads
\033[36m--ah-alg scrypt,13,2,8,4,32\033[0m
use scrypt with cost 2**13, 2 iterations, blocksize 8, 4 threads,
and allow using up to 32 MiB RAM (ram=cost*blksz roughly)

\033[36m--ah-alg sha2\033[0m # which is the same as:
\033[36m--ah-alg sha2,424242\033[0m
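The help text sizes scrypt's memory as `ram=cost*blksz roughly`. The usual scrypt working-set estimate is 128·N·r bytes with N = 2**cost, so cost 13 and blocksize 8 need 8 MiB per hash; four hashes running side by side would total the 32 MiB mentioned above (reading the `4 threads` field that way is an interpretation, not something this diff confirms). A sketch using Python's standard `hashlib.scrypt`; the password, salt, `p=1` and `dklen=32` are illustrative values, not copyparty's:

```python
import hashlib

MIB = 1024 * 1024


def scrypt_mem_bytes(cost: int, blksz: int) -> int:
    # standard scrypt working-set estimate: 128 * N * r bytes, where N = 2**cost
    return 128 * (2 ** cost) * blksz


per_hash = scrypt_mem_bytes(13, 8)
print(per_hash // MIB, "MiB per hash;", 4 * per_hash // MIB, "MiB for 4 in parallel")

# one derivation with the same N and r; requires a Python built against OpenSSL 1.1+
key = hashlib.scrypt(b"hunter2", salt=b"example-salt", n=2 ** 13, r=8, p=1, dklen=32)
print(key.hex())
```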

@@ -1017,7 +1022,7 @@ def add_upload(ap):
ap2.add_argument("--sparse", metavar="MiB", type=int, default=4, help="windows-only: minimum size of incoming uploads through up2k before they are made into sparse files")
ap2.add_argument("--turbo", metavar="LVL", type=int, default=0, help="configure turbo-mode in up2k client; [\033[32m-1\033[0m] = forbidden/always-off, [\033[32m0\033[0m] = default-off and warn if enabled, [\033[32m1\033[0m] = default-off, [\033[32m2\033[0m] = on, [\033[32m3\033[0m] = on and disable datecheck")
ap2.add_argument("--u2j", metavar="JOBS", type=int, default=2, help="web-client: number of file chunks to upload in parallel; 1 or 2 is good for low-latency (same-country) connections, 4-8 for android clients, 16 for cross-atlantic (max=64)")
ap2.add_argument("--u2sz", metavar="N,N,N", type=u, default="1,64,96", help="web-client: default upload chunksize (MiB); sets \033[33mmin,default,max\033[0m in the settings gui. Each HTTP POST will aim for this size. Cloudflare max is 96. Big values are good for cross-atlantic but may increase HDD fragmentation on some FS. Disable this optimization with [\033[32m1,1,1\033[0m]")
ap2.add_argument("--u2sz", metavar="N,N,N", type=u, default="1,64,96", help="web-client: default upload chunksize (MiB); sets \033[33mmin,default,max\033[0m in the settings gui. Each HTTP POST will aim for \033[33mdefault\033[0m, and never exceed \033[33mmax\033[0m. Cloudflare max is 96. Big values are good for cross-atlantic but may increase HDD fragmentation on some FS. Disable this optimization with [\033[32m1,1,1\033[0m]")
ap2.add_argument("--u2sort", metavar="TXT", type=u, default="s", help="upload order; [\033[32ms\033[0m]=smallest-first, [\033[32mn\033[0m]=alphabetical, [\033[32mfs\033[0m]=force-s, [\033[32mfn\033[0m]=force-n -- alphabetical is a bit slower on fiber/LAN but makes it easier to eyeball if everything went fine")
ap2.add_argument("--write-uplog", action="store_true", help="write POST reports to textfiles in working-directory")
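`--u2sz` packs three MiB values (`min,default,max`) into one string; per the new help text, each POST aims for `default` and never exceeds `max`. A sketch of splitting such a triple; `parse_u2sz` is a hypothetical helper, and the `min <= default <= max` rule is an assumption about what a sensible value looks like, not copyparty's actual validation:

```python
from typing import Tuple

MIB = 1024 * 1024


def parse_u2sz(spec: str) -> Tuple[int, int, int]:
    """Split a 'min,default,max' MiB triple such as '1,64,96'."""
    parts = [int(x) for x in spec.split(",")]
    if len(parts) != 3:
        raise ValueError("expected min,default,max")
    lo, dflt, hi = parts
    # assumed sanity rule; the server may validate differently
    if not lo <= dflt <= hi:
        raise ValueError("expected min <= default <= max")
    return lo, dflt, hi


lo, dflt, hi = parse_u2sz("1,64,96")
print("aim for", dflt * MIB, "bytes per POST, never more than", hi * MIB)
```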

@@ -1037,7 +1042,7 @@ def add_network(ap):
else:
ap2.add_argument("--freebind", action="store_true", help="allow listening on IPs which do not yet exist, for example if the network interfaces haven't finished going up. Only makes sense for IPs other than '0.0.0.0', '127.0.0.1', '::', and '::1'. May require running as root (unless net.ipv6.ip_nonlocal_bind)")
ap2.add_argument("--s-thead", metavar="SEC", type=int, default=120, help="socket timeout (read request header)")
ap2.add_argument("--s-tbody", metavar="SEC", type=float, default=186.0, help="socket timeout (read/write request/response bodies). Use 60 on fast servers (default is extremely safe). Disable with 0 if reverse-proxied for a 2%% speed boost")
ap2.add_argument("--s-tbody", metavar="SEC", type=float, default=128.0, help="socket timeout (read/write request/response bodies). Use 60 on fast servers (default is extremely safe). Disable with 0 if reverse-proxied for a 2%% speed boost")
ap2.add_argument("--s-rd-sz", metavar="B", type=int, default=256*1024, help="socket read size in bytes (indirectly affects filesystem writes; recommendation: keep equal-to or lower-than \033[33m--iobuf\033[0m)")
ap2.add_argument("--s-wr-sz", metavar="B", type=int, default=256*1024, help="socket write size in bytes")
ap2.add_argument("--s-wr-slp", metavar="SEC", type=float, default=0.0, help="debug: socket write delay in seconds")
@@ -1087,6 +1092,7 @@ def add_auth(ap):
ap2.add_argument("--ses-db", metavar="PATH", type=u, default=ses_db, help="where to store the sessions database (if you run multiple copyparty instances, make sure they use different DBs)")
ap2.add_argument("--ses-len", metavar="CHARS", type=int, default=20, help="session key length; default is 120 bits ((20//4)*4*6)")
ap2.add_argument("--no-ses", action="store_true", help="disable sessions; use plaintext passwords in cookies")
ap2.add_argument("--ipu", metavar="CIDR=USR", type=u, action="append", help="users with IP matching \033[33mCIDR\033[0m are auto-authenticated as username \033[33mUSR\033[0m; example: [\033[32m172.16.24.0/24=dave]")


def add_chpw(ap):
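`--ipu` auto-authenticates clients by mapping their address to a username through a CIDR rule. A sketch of that lookup with the standard `ipaddress` module; `parse_ipu` and `user_for_ip` are hypothetical names, and first-match-wins is an assumption about how overlapping ranges would be resolved:

```python
import ipaddress
from typing import List, Optional, Tuple, Union

Net = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def parse_ipu(specs: List[str]) -> List[Tuple[Net, str]]:
    rules: List[Tuple[Net, str]] = []
    for spec in specs:
        cidr, _, user = spec.partition("=")
        if not user:
            raise ValueError("expected CIDR=USR, got %r" % spec)
        rules.append((ipaddress.ip_network(cidr.strip()), user.strip()))
    return rules


def user_for_ip(rules: List[Tuple[Net, str]], ip: str) -> Optional[str]:
    addr = ipaddress.ip_address(ip)
    for net, user in rules:
        # membership is simply False when the address family differs
        if addr in net:
            return user
    return None


rules = parse_ipu(["172.16.24.0/24=dave"])
print(user_for_ip(rules, "172.16.24.7"))
```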

@@ -1200,6 +1206,8 @@ def add_hooks(ap):
ap2.add_argument("--xbu", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file upload starts")
ap2.add_argument("--xau", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file upload finishes")
ap2.add_argument("--xiu", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after all uploads finish and volume is idle")
ap2.add_argument("--xbc", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file copy")
ap2.add_argument("--xac", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file copy")
ap2.add_argument("--xbr", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file move/rename")
ap2.add_argument("--xar", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m after a file move/rename")
ap2.add_argument("--xbd", metavar="CMD", type=u, action="append", help="execute \033[33mCMD\033[0m before a file delete")
@@ -1232,6 +1240,7 @@ def add_optouts(ap):
ap2.add_argument("--no-dav", action="store_true", help="disable webdav support")
ap2.add_argument("--no-del", action="store_true", help="disable delete operations")
ap2.add_argument("--no-mv", action="store_true", help="disable move/rename operations")
ap2.add_argument("--no-cp", action="store_true", help="disable copy operations")
ap2.add_argument("-nth", action="store_true", help="no title hostname; don't show \033[33m--name\033[0m in <title>")
ap2.add_argument("-nih", action="store_true", help="no info hostname -- don't show in UI")
ap2.add_argument("-nid", action="store_true", help="no info disk-usage -- don't show in UI")
@@ -1306,6 +1315,7 @@ def add_logging(ap):
ap2.add_argument("--log-conn", action="store_true", help="debug: print tcp-server msgs")
ap2.add_argument("--log-htp", action="store_true", help="debug: print http-server threadpool scaling")
ap2.add_argument("--ihead", metavar="HEADER", type=u, action='append', help="print request \033[33mHEADER\033[0m; [\033[32m*\033[0m]=all")
ap2.add_argument("--ohead", metavar="HEADER", type=u, action='append', help="print response \033[33mHEADER\033[0m; [\033[32m*\033[0m]=all")
ap2.add_argument("--lf-url", metavar="RE", type=u, default=r"^/\.cpr/|\?th=[wj]$|/\.(_|ql_|DS_Store$|localized$)", help="dont log URLs matching regex \033[33mRE\033[0m")
@@ -1314,9 +1324,12 @@ def add_admin(ap):
ap2.add_argument("--no-reload", action="store_true", help="disable ?reload=cfg (reload users/volumes/volflags from config file)")
ap2.add_argument("--no-rescan", action="store_true", help="disable ?scan (volume reindexing)")
ap2.add_argument("--no-stack", action="store_true", help="disable ?stack (list all stacks)")
ap2.add_argument("--dl-list", metavar="LVL", type=int, default=2, help="who can see active downloads in the controlpanel? [\033[32m0\033[0m]=nobody, [\033[32m1\033[0m]=admins, [\033[32m2\033[0m]=everyone")


def add_thumbnail(ap):
th_ram = (RAM_AVAIL or RAM_TOTAL or 9) * 0.6
th_ram = int(max(min(th_ram, 6), 1) * 10) / 10
ap2 = ap.add_argument_group('thumbnail options')
ap2.add_argument("--no-thumb", action="store_true", help="disable all thumbnails (volflag=dthumb)")
ap2.add_argument("--no-vthumb", action="store_true", help="disable video thumbnails (volflag=dvthumb)")
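The new `--th-ram-max` default is derived from system memory by the two `th_ram` lines in `add_thumbnail`. The same expressions wrapped in a function so the clamping can be checked; this assumes `RAM_AVAIL` and `RAM_TOTAL` are in GiB (matching the unit of `--th-ram-max`) and may be 0 or `None` when unknown, and `default_th_ram` is a hypothetical name:

```python
from typing import Optional


def default_th_ram(ram_avail: Optional[float], ram_total: Optional[float]) -> float:
    # 60% of available RAM; fall back to total RAM, then to a 9 GiB guess
    th_ram = (ram_avail or ram_total or 9) * 0.6
    # clamp to [1, 6] GiB, then truncate (not round) to one decimal place
    return int(max(min(th_ram, 6), 1) * 10) / 10


for avail, total in [(32, 64), (4, 16), (None, 4), (1, 2)]:
    print(avail, total, default_th_ram(avail, total))
```

Because the last step truncates, a raw value of 2.46 GiB becomes 2.4 rather than 2.5.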

@@ -1324,7 +1337,7 @@ def add_thumbnail(ap):
ap2.add_argument("--th-size", metavar="WxH", default="320x256", help="thumbnail res (volflag=thsize)")
ap2.add_argument("--th-mt", metavar="CORES", type=int, default=CORES, help="num cpu cores to use for generating thumbnails")
ap2.add_argument("--th-convt", metavar="SEC", type=float, default=60.0, help="conversion timeout in seconds (volflag=convt)")
ap2.add_argument("--th-ram-max", metavar="GB", type=float, default=6.0, help="max memory usage (GiB) permitted by thumbnailer; not very accurate")
ap2.add_argument("--th-ram-max", metavar="GB", type=float, default=th_ram, help="max memory usage (GiB) permitted by thumbnailer; not very accurate")
ap2.add_argument("--th-crop", metavar="TXT", type=u, default="y", help="crop thumbnails to 4:3 or keep dynamic height; client can override in UI unless force. [\033[32my\033[0m]=crop, [\033[32mn\033[0m]=nocrop, [\033[32mfy\033[0m]=force-y, [\033[32mfn\033[0m]=force-n (volflag=crop)")
ap2.add_argument("--th-x3", metavar="TXT", type=u, default="n", help="show thumbs at 3x resolution; client can override in UI unless force. [\033[32my\033[0m]=yes, [\033[32mn\033[0m]=no, [\033[32mfy\033[0m]=force-yes, [\033[32mfn\033[0m]=force-no (volflag=th3x)")
ap2.add_argument("--th-dec", metavar="LIBS", default="vips,pil,ff", help="image decoders, in order of preference")
@@ -1339,12 +1352,12 @@ def add_thumbnail(ap):
# https://pillow.readthedocs.io/en/stable/handbook/image-file-formats.html
# https://github.com/libvips/libvips
# ffmpeg -hide_banner -demuxers | awk '/^ D /{print$2}' | while IFS= read -r x; do ffmpeg -hide_banner -h demuxer=$x; done | grep -E '^Demuxer |extensions:'
ap2.add_argument("--th-r-pil", metavar="T,T", type=u, default="avif,avifs,blp,bmp,dcx,dds,dib,emf,eps,fits,flc,fli,fpx,gif,heic,heics,heif,heifs,icns,ico,im,j2p,j2k,jp2,jpeg,jpg,jpx,pbm,pcx,pgm,png,pnm,ppm,psd,qoi,sgi,spi,tga,tif,tiff,webp,wmf,xbm,xpm", help="image formats to decode using pillow")
ap2.add_argument("--th-r-pil", metavar="T,T", type=u, default="avif,avifs,blp,bmp,cbz,dcx,dds,dib,emf,eps,fits,flc,fli,fpx,gif,heic,heics,heif,heifs,icns,ico,im,j2p,j2k,jp2,jpeg,jpg,jpx,pbm,pcx,pgm,png,pnm,ppm,psd,qoi,sgi,spi,tga,tif,tiff,webp,wmf,xbm,xpm", help="image formats to decode using pillow")
ap2.add_argument("--th-r-vips", metavar="T,T", type=u, default="avif,exr,fit,fits,fts,gif,hdr,heic,jp2,jpeg,jpg,jpx,jxl,nii,pfm,pgm,png,ppm,svg,tif,tiff,webp", help="image formats to decode using pyvips")
ap2.add_argument("--th-r-ffi", metavar="T,T", type=u, default="apng,avif,avifs,bmp,dds,dib,fit,fits,fts,gif,hdr,heic,heics,heif,heifs,icns,ico,jp2,jpeg,jpg,jpx,jxl,pbm,pcx,pfm,pgm,png,pnm,ppm,psd,qoi,sgi,tga,tif,tiff,webp,xbm,xpm", help="image formats to decode using ffmpeg")
ap2.add_argument("--th-r-ffi", metavar="T,T", type=u, default="apng,avif,avifs,bmp,cbz,dds,dib,fit,fits,fts,gif,hdr,heic,heics,heif,heifs,icns,ico,jp2,jpeg,jpg,jpx,jxl,pbm,pcx,pfm,pgm,png,pnm,ppm,psd,qoi,sgi,tga,tif,tiff,webp,xbm,xpm", help="image formats to decode using ffmpeg")
ap2.add_argument("--th-r-ffv", metavar="T,T", type=u, default="3gp,asf,av1,avc,avi,flv,h264,h265,hevc,m4v,mjpeg,mjpg,mkv,mov,mp4,mpeg,mpeg2,mpegts,mpg,mpg2,mts,nut,ogm,ogv,rm,ts,vob,webm,wmv", help="video formats to decode using ffmpeg")
ap2.add_argument("--th-r-ffa", metavar="T,T", type=u, default="aac,ac3,aif,aiff,alac,alaw,amr,apac,ape,au,bonk,dfpwm,dts,flac,gsm,ilbc,it,itgz,itxz,itz,m4a,mdgz,mdxz,mdz,mo3,mod,mp2,mp3,mpc,mptm,mt2,mulaw,ogg,okt,opus,ra,s3m,s3gz,s3xz,s3z,tak,tta,ulaw,wav,wma,wv,xm,xmgz,xmxz,xmz,xpk", help="audio formats to decode using ffmpeg")
ap2.add_argument("--au-unpk", metavar="E=F.C", type=u, default="mdz=mod.zip, mdgz=mod.gz, mdxz=mod.xz, s3z=s3m.zip, s3gz=s3m.gz, s3xz=s3m.xz, xmz=xm.zip, xmgz=xm.gz, xmxz=xm.xz, itz=it.zip, itgz=it.gz, itxz=it.xz", help="audio formats to decompress before passing to ffmpeg")
ap2.add_argument("--au-unpk", metavar="E=F.C", type=u, default="mdz=mod.zip, mdgz=mod.gz, mdxz=mod.xz, s3z=s3m.zip, s3gz=s3m.gz, s3xz=s3m.xz, xmz=xm.zip, xmgz=xm.gz, xmxz=xm.xz, itz=it.zip, itgz=it.gz, itxz=it.xz, cbz=jpg.cbz", help="audio/image formats to decompress before passing to ffmpeg")


def add_transcoding(ap):
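`--au-unpk` is a comma-separated list of `E=F.C` entries, and this diff appends `cbz=jpg.cbz` so that comic archives can be unpacked before decoding. A sketch of parsing that format into a lookup table, reading each entry as "extension E holds format F inside container C" (an interpretation of the metavar); `parse_unpk` is a hypothetical helper:

```python
from typing import Dict, Tuple


def parse_unpk(spec: str) -> Dict[str, Tuple[str, str]]:
    """Parse 'E=F.C, E=F.C, ...' into {E: (F, C)}."""
    table: Dict[str, Tuple[str, str]] = {}
    for item in spec.split(","):
        item = item.strip()
        if not item:
            continue
        ext, _, rhs = item.partition("=")
        fmt, _, container = rhs.rpartition(".")  # split on the last dot
        if not (ext and fmt and container):
            raise ValueError("expected E=F.C, got %r" % item)
        table[ext] = (fmt, container)
    return table


table = parse_unpk("mdz=mod.zip, s3gz=s3m.gz, cbz=jpg.cbz")
print(table["cbz"])
```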

@@ -1356,6 +1369,14 @@ def add_transcoding(ap):
ap2.add_argument("--ac-maxage", metavar="SEC", type=int, default=86400, help="delete cached transcode output after \033[33mSEC\033[0m seconds")


def add_rss(ap):
ap2 = ap.add_argument_group('RSS options')
ap2.add_argument("--rss", action="store_true", help="enable RSS output (experimental)")
ap2.add_argument("--rss-nf", metavar="HITS", type=int, default=250, help="default number of files to return (url-param 'nf')")
ap2.add_argument("--rss-fext", metavar="E,E", type=u, default="", help="default list of file extensions to include (url-param 'fext'); blank=all")
ap2.add_argument("--rss-sort", metavar="ORD", type=u, default="m", help="default sort order (url-param 'sort'); [\033[32mm\033[0m]=last-modified [\033[32mu\033[0m]=upload-time [\033[32mn\033[0m]=filename [\033[32ms\033[0m]=filesize; Uppercase=oldest-first. Note that upload-time is 0 for non-uploaded files")


def add_db_general(ap, hcores):
noidx = APPLESAN_TXT if MACOS else ""
ap2 = ap.add_argument_group('general db options')
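The RSS defaults added in `add_rss` can be overridden per request with the `nf`, `fext` and `sort` URL parameters. A sketch of building such a URL; the `?rss` parameter that selects the feed, the example host, and the helper name `rss_url` are assumptions, and the server must be started with `--rss`:

```python
from typing import Dict, Iterable, Optional, Union
from urllib.parse import urlencode


def rss_url(folder_url: str, nf: Optional[int] = None, fext: Iterable[str] = (),
            sort: Optional[str] = None, oldest_first: bool = False) -> str:
    params: Dict[str, Union[int, str]] = {}
    if nf is not None:
        params["nf"] = nf  # number of files to return
    exts = ",".join(fext)
    if exts:
        params["fext"] = exts  # comma-separated extensions; blank means all
    if sort:
        # m=last-modified, u=upload-time, n=filename, s=filesize; uppercase = oldest-first
        params["sort"] = sort.upper() if oldest_first else sort.lower()
    query = urlencode(params, safe=",")  # keep commas readable in fext
    return folder_url + "?rss" + ("&" + query if query else "")


print(rss_url("https://example.com/music/", nf=50, fext=["mp3", "flac"], sort="u"))
```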

@@ -1436,6 +1457,7 @@ def add_ui(ap, retry):
ap2.add_argument("--themes", metavar="NUM", type=int, default=8, help="number of themes installed")
ap2.add_argument("--au-vol", metavar="0-100", type=int, default=50, choices=range(0, 101), help="default audio/video volume percent")
ap2.add_argument("--sort", metavar="C,C,C", type=u, default="href", help="default sort order, comma-separated column IDs (see header tooltips), prefix with '-' for descending. Examples: \033[32mhref -href ext sz ts tags/Album tags/.tn\033[0m (volflag=sort)")
ap2.add_argument("--nsort", action="store_true", help="default-enable natural sort of filenames with leading numbers (volflag=nsort)")
ap2.add_argument("--unlist", metavar="REGEX", type=u, default="", help="don't show files matching \033[33mREGEX\033[0m in file list. Purely cosmetic! Does not affect API calls, just the browser. Example: [\033[32m\\.(js|css)$\033[0m] (volflag=unlist)")
ap2.add_argument("--favico", metavar="TXT", type=u, default="c 000 none" if retry else "🎉 000 none", help="\033[33mfavicon-text\033[0m [ \033[33mforeground\033[0m [ \033[33mbackground\033[0m ] ], set blank to disable")
ap2.add_argument("--mpmc", metavar="URL", type=u, default="", help="change the mediaplayer-toggle mouse cursor; URL to a folder with {2..5}.png inside (or disable with [\033[32m.\033[0m])")
@@ -1451,6 +1473,7 @@ def add_ui(ap, retry):
ap2.add_argument("--pb-url", metavar="URL", type=u, default="https://github.com/9001/copyparty", help="powered-by link; disable with \033[33m-np\033[0m")
ap2.add_argument("--ver", action="store_true", help="show version on the control panel (incompatible with \033[33m-nb\033[0m)")
ap2.add_argument("--k304", metavar="NUM", type=int, default=0, help="configure the option to enable/disable k304 on the controlpanel (workaround for buggy reverse-proxies); [\033[32m0\033[0m] = hidden and default-off, [\033[32m1\033[0m] = visible and default-off, [\033[32m2\033[0m] = visible and default-on")
ap2.add_argument("--no304", metavar="NUM", type=int, default=0, help="configure the option to enable/disable no304 on the controlpanel (workaround for buggy caching in browsers); [\033[32m0\033[0m] = hidden and default-off, [\033[32m1\033[0m] = visible and default-off, [\033[32m2\033[0m] = visible and default-on")
ap2.add_argument("--md-sbf", metavar="FLAGS", type=u, default="downloads forms popups scripts top-navigation-by-user-activation", help="list of capabilities to ALLOW for README.md docs (volflag=md_sbf); see https://developer.mozilla.org/en-US/docs/Web/HTML/Element/iframe#attr-sandbox")
ap2.add_argument("--lg-sbf", metavar="FLAGS", type=u, default="downloads forms popups scripts top-navigation-by-user-activation", help="list of capabilities to ALLOW for prologue/epilogue docs (volflag=lg_sbf)")
ap2.add_argument("--no-sb-md", action="store_true", help="don't sandbox README/PREADME.md documents (volflags: no_sb_md | sb_md)")
@@ -1477,6 +1500,7 @@ def add_debug(ap):
ap2.add_argument("--bak-flips", action="store_true", help="[up2k] if a client uploads a bitflipped/corrupted chunk, store a copy according to \033[33m--bf-nc\033[0m and \033[33m--bf-dir\033[0m")
ap2.add_argument("--bf-nc", metavar="NUM", type=int, default=200, help="bak-flips: stop if there's more than \033[33mNUM\033[0m files at \033[33m--bf-dir\033[0m already; default: 6.3 GiB max (200*32M)")
ap2.add_argument("--bf-dir", metavar="PATH", type=u, default="bf", help="bak-flips: store corrupted chunks at \033[33mPATH\033[0m; default: folder named 'bf' wherever copyparty was started")
ap2.add_argument("--bf-log", metavar="PATH", type=u, default="", help="bak-flips: log corruption info to a textfile at \033[33mPATH\033[0m")


# fmt: on
@@ -1524,6 +1548,7 @@ def run_argparse(
add_db_metadata(ap)
add_thumbnail(ap)
add_transcoding(ap)
add_rss(ap)
add_ftp(ap)
add_webdav(ap)
add_tftp(ap)
@@ -1746,6 +1771,9 @@ def main(argv: Optional[list[str]] = None) -> None:
if al.ihead:
al.ihead = [x.lower() for x in al.ihead]

if al.ohead:
al.ohead = [x.lower() for x in al.ohead]

if HAVE_SSL:
if al.ssl_ver:
configure_ssl_ver(al)
@@ -1,8 +1,8 @@
# coding: utf-8

VERSION = (1, 15, 6)
CODENAME = "fill the drives"
BUILD_DT = (2024, 10, 12)
VERSION = (1, 16, 2)
CODENAME = "COPYparty"
BUILD_DT = (2024, 11, 23)

S_VERSION = ".".join(map(str, VERSION))
S_BUILD_DT = "{0:04d}-{1:02d}-{2:02d}".format(*BUILD_DT)
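`S_VERSION` and `S_BUILD_DT` are plain string renderings of the `VERSION` and `BUILD_DT` tuples. Evaluating the same two expressions with the new values:

```python
VERSION = (1, 16, 2)
BUILD_DT = (2024, 11, 23)

# join the version ints with dots, and zero-pad the build date as YYYY-MM-DD
S_VERSION = ".".join(map(str, VERSION))
S_BUILD_DT = "{0:04d}-{1:02d}-{2:02d}".format(*BUILD_DT)

print(S_VERSION, S_BUILD_DT)  # 1.16.2 2024-11-23
```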

@@ -66,6 +66,7 @@ if PY2:
LEELOO_DALLAS = "leeloo_dallas"

SEE_LOG = "see log for details"
SEESLOG = " (see serverlog for details)"
SSEELOG = " ({})".format(SEE_LOG)
BAD_CFG = "invalid config; {}".format(SEE_LOG)
SBADCFG = " ({})".format(BAD_CFG)
@@ -164,8 +165,11 @@ class Lim(object):
self.chk_rem(rem)
if sz != -1:
self.chk_sz(sz)
self.chk_vsz(broker, ptop, sz, volgetter)
self.chk_df(abspath, sz) # side effects; keep last-ish
else:
sz = 0

self.chk_vsz(broker, ptop, sz, volgetter)
self.chk_df(abspath, sz) # side effects; keep last-ish

ap2, vp2 = self.rot(abspath)
if abspath == ap2:
@@ -205,7 +209,15 @@ class Lim(object):
if self.dft < time.time():
self.dft = int(time.time()) + 300
self.dfv = get_df(abspath)[0] or 0

df, du, err = get_df(abspath, True)
if err:
t = "failed to read disk space usage for [%s]: %s"
self.log(t % (abspath, err), 3)
self.dfv = 0xAAAAAAAAA # 42.6 GiB
else:
self.dfv = df or 0

for j in list(self.reg.values()) if self.reg else []:
self.dfv -= int(j["size"] / (len(j["hash"]) or 999) * len(j["need"]))
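The rewritten free-space check falls back to a fixed placeholder (0xAAAAAAAAA bytes, about 42.6 GiB) when `get_df` reports an error, then subtracts the space still owed to unfinished uploads. A standalone sketch of that arithmetic, assuming each `reg` entry holds the upload's total `size`, its full list of chunk hashes in `hash`, and the still-missing chunks in `need`, as the lookups above suggest; `estimate_free` is a hypothetical name:

```python
from typing import Any, Dict, Optional

DF_FALLBACK = 0xAAAAAAAAA  # bytes; the placeholder used when disk usage can't be read


def estimate_free(df: Optional[int], err: Optional[str], reg: Dict[str, Dict[str, Any]]) -> int:
    """Free-space estimate minus the bytes still owed to unfinished uploads."""
    dfv = DF_FALLBACK if err else (df or 0)
    for j in reg.values():
        # average chunk size times the number of chunks not yet received
        dfv -= int(j["size"] / (len(j["hash"]) or 999) * len(j["need"]))
    return dfv


reg = {"upload-1": {"size": 1000, "hash": ["a", "b", "c", "d"], "need": ["c", "d"]}}
print(estimate_free(10_000, None, reg))  # 9500
```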

@@ -355,18 +367,21 @@ class VFS(object):
self.ahtml: dict[str, list[str]] = {}
self.aadmin: dict[str, list[str]] = {}
self.adot: dict[str, list[str]] = {}
self.all_vols: dict[str, VFS] = {}
self.js_ls = {}
self.js_htm = ""

if realpath:
rp = realpath + ("" if realpath.endswith(os.sep) else os.sep)
vp = vpath + ("/" if vpath else "")
self.histpath = os.path.join(realpath, ".hist") # db / thumbcache
self.all_vols = {vpath: self} # flattened recursive
self.all_nodes = {vpath: self} # also jumpvols
self.all_aps = [(rp, self)]
self.all_vps = [(vp, self)]
else:
self.histpath = ""
self.all_vols = {}
self.all_nodes = {}
self.all_aps = []
self.all_vps = []
@@ -384,9 +399,11 @@ class VFS(object):
def get_all_vols(
self,
vols: dict[str, "VFS"],
nodes: dict[str, "VFS"],
aps: list[tuple[str, "VFS"]],
vps: list[tuple[str, "VFS"]],
) -> None:
nodes[self.vpath] = self
if self.realpath:
vols[self.vpath] = self
rp = self.realpath
@@ -396,7 +413,7 @@ class VFS(object):
vps.append((vp, self))

for v in self.nodes.values():
v.get_all_vols(vols, aps, vps)
v.get_all_vols(vols, nodes, aps, vps)

def add(self, src: str, dst: str) -> "VFS":
"""get existing, or add new path to the vfs"""
@@ -540,15 +557,14 @@ class VFS(object):
return self._get_dbv(vrem)

shv, srem = src
return shv, vjoin(srem, vrem)
return shv._get_dbv(vjoin(srem, vrem))

def _get_dbv(self, vrem: str) -> tuple["VFS", str]:
dbv = self.dbv
if not dbv:
return self, vrem

tv = [self.vpath[len(dbv.vpath) :].lstrip("/"), vrem]
vrem = "/".join([x for x in tv if x])
vrem = vjoin(self.vpath[len(dbv.vpath) :].lstrip("/"), vrem)
return dbv, vrem

def canonical(self, rem: str, resolve: bool = True) -> str:
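The `_get_dbv` change replaces the removed two-line `"/".join([x for x in tv if x])` expression with a single `vjoin` call. Assuming `vjoin(a, b)` joins two vpath segments with `/` and drops whichever one is empty (its definition is not part of this diff), a hypothetical stand-in shows the two forms agree:

```python
def vjoin(rd: str, fn: str) -> str:
    # hypothetical stand-in: join two vpath segments, skipping an empty one
    if rd and fn:
        return rd + "/" + fn
    return rd or fn


def old_join(a: str, b: str) -> str:
    # the expression removed in this hunk
    tv = [a, b]
    return "/".join([x for x in tv if x])


for a, b in [("music", "album/track.flac"), ("", "x"), ("music", ""), ("", "")]:
    assert vjoin(a, b) == old_join(a, b)
    print(repr(a), repr(b), "->", repr(vjoin(a, b)))
```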

@@ -580,10 +596,11 @@ class VFS(object):
scandir: bool,
permsets: list[list[bool]],
lstat: bool = False,
throw: bool = False,
) -> tuple[str, list[tuple[str, os.stat_result]], dict[str, "VFS"]]:
"""replaces _ls for certain shares (single-file, or file selection)"""
vn, rem = self.shr_src # type: ignore
abspath, real, _ = vn.ls(rem, "\n", scandir, permsets, lstat)
abspath, real, _ = vn.ls(rem, "\n", scandir, permsets, lstat, throw)
real = [x for x in real if os.path.basename(x[0]) in self.shr_files]
return abspath, real, {}
@@ -594,11 +611,12 @@ class VFS(object):
scandir: bool,
permsets: list[list[bool]],
lstat: bool = False,
throw: bool = False,
) -> tuple[str, list[tuple[str, os.stat_result]], dict[str, "VFS"]]:
"""return user-readable [fsdir,real,virt] items at vpath"""
virt_vis = {} # nodes readable by user
abspath = self.canonical(rem)
real = list(statdir(self.log, scandir, lstat, abspath))
real = list(statdir(self.log, scandir, lstat, abspath, throw))
real.sort()
if not rem:
# no vfs nodes in the list of real inodes
@@ -660,6 +678,10 @@ class VFS(object):
"""
recursively yields from ./rem;
rel is a unix-style user-defined vpath (not vfs-related)

NOTE: don't invoke this function from a dbv; subvols are only
descended into if rem is blank due to the _ls `if not rem:`
which intention is to prevent unintended access to subvols
"""

fsroot, vfs_ls, vfs_virt = self.ls(rem, uname, scandir, permsets, lstat=lstat)
@@ -900,7 +922,7 @@ class AuthSrv(object):
self._reload()
return True

broker.ask("_reload_blocking", False).get()
broker.ask("reload", False, True).get()
return True

def _map_volume_idp(
@@ -1370,7 +1392,7 @@ class AuthSrv(object):
flags[name] = True
return

zs = "mtp on403 on404 xbu xau xiu xbr xar xbd xad xm xban"
zs = "mtp on403 on404 xbu xau xiu xbc xac xbr xar xbd xad xm xban"
if name not in zs.split():
if value is True:
t = "└─add volflag [{}] = {} ({})"
@@ -1518,10 +1540,11 @@ class AuthSrv(object):
assert vfs # type: ignore
vfs.all_vols = {}
vfs.all_nodes = {}
vfs.all_aps = []
vfs.all_vps = []
vfs.get_all_vols(vfs.all_vols, vfs.all_aps, vfs.all_vps)
for vol in vfs.all_vols.values():
vfs.get_all_vols(vfs.all_vols, vfs.all_nodes, vfs.all_aps, vfs.all_vps)
for vol in vfs.all_nodes.values():
vol.all_aps.sort(key=lambda x: len(x[0]), reverse=True)
vol.all_vps.sort(key=lambda x: len(x[0]), reverse=True)
vol.root = vfs
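After this change `get_all_vols` fills two maps: `all_vols` with nodes that have a realpath, and `all_nodes` with every node, jumpvols included; the `all_aps`/`all_vps` lists are then sorted longest-first. A simplified sketch of that collection plus a longest-prefix lookup, assuming the sorted `aps` list is consulted by prefix matching (the lookup itself is not part of this diff); `Node` and `find_vol` are hypothetical, and the `vps` list is omitted for brevity:

```python
from typing import Dict, List, Optional, Tuple


class Node:
    """Hypothetical stand-in for VFS: a vpath, an optional realpath, child nodes."""

    def __init__(self, vpath: str, realpath: str = "", children: Optional[List["Node"]] = None) -> None:
        self.vpath = vpath
        self.realpath = realpath
        self.nodes = {c.vpath: c for c in (children or [])}

    def get_all_vols(self, vols: Dict[str, "Node"], nodes: Dict[str, "Node"],
                     aps: List[Tuple[str, "Node"]]) -> None:
        nodes[self.vpath] = self  # every node, including jumpvols without a realpath
        if self.realpath:
            vols[self.vpath] = self  # only nodes backed by a real directory
            aps.append((self.realpath.rstrip("/") + "/", self))
        for v in self.nodes.values():
            v.get_all_vols(vols, nodes, aps)


def find_vol(aps: List[Tuple[str, Node]], abspath: str) -> Optional[Node]:
    # aps is sorted longest-prefix-first, so the most specific volume wins
    for prefix, vol in aps:
        if abspath.startswith(prefix):
            return vol
    return None


root = Node("", "/srv", [
    Node("music", "/srv/music"),
    Node("jump", children=[Node("jump/pics", "/mnt/pics")]),
])
vols: Dict[str, Node] = {}
nodes: Dict[str, Node] = {}
aps: List[Tuple[str, Node]] = []
root.get_all_vols(vols, nodes, aps)
aps.sort(key=lambda x: len(x[0]), reverse=True)
print([p for p, _ in aps])
```

Sorting by prefix length is what lets the nested `/srv/music` volume win over its parent `/srv` for paths inside it.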

@@ -1572,7 +1595,7 @@ class AuthSrv(object):
vfs.nodes[shr] = vfs.all_vols[shr] = shv
for vol in shv.nodes.values():
vfs.all_vols[vol.vpath] = vol
vfs.all_vols[vol.vpath] = vfs.all_nodes[vol.vpath] = vol
vol.get_dbv = vol._get_share_src
vol.ls = vol._ls_nope
@@ -1715,7 +1738,19 @@ class AuthSrv(object):
self.log("\n\n".join(ta) + "\n", c=3)