Compare commits

127 Commits

| SHA1 |
|---|
| e2dec2510f |
| da5ad2ab9f |
| eaa4b04a22 |
| 3051b13108 |
| 4c4e48bab7 |
| 01a3eb29cb |
| 73f7249c5f |
| 18c6559199 |
| e66ece993f |
| 0686860624 |
| 24ce46b380 |
| a49bf81ff2 |
| 64501fd7f1 |
| db3c0b0907 |
| edda117a7a |
| cdface0dd5 |
| be6afe2d3a |
| 9163780000 |
| d7aa7dfe64 |
| f1decb531d |
| 99399c698b |
| 1f5f42f216 |
| 9082c4702f |
| 6cedcfbf77 |
| 8a631f045e |
| a6a2ee5b6b |
| 016708276c |
| 4cfdc4c513 |
| 0f257c9308 |
| c8104b6e78 |
| 1a1d731043 |
| c5a000d2ae |
| 94d1924fa9 |
| 6c1cf68bca |
| 395af051bd |
| 42fd66675e |
| 21a3f3699b |
| d168b2acac |
| 2ce8233921 |
| 697a4fa8a4 |
| 2f83c6c7d1 |
| 127f414e9c |
| 33c4ccffab |
| bafe7f5a09 |
| baf41112d1 |
| a90dde94e1 |
| 7dfbfc7227 |
| b10843d051 |
| 520ac8f4dc |
| 537a6e50e9 |
| 2d0cbdf1a8 |
| 5afb562aa3 |
| db069c3d4a |
| fae40c7e2f |
| 0c43b592dc |
| 2ab8924e2d |
| 0e31cfa784 |
| 8f7ffcf350 |
| 9c8507a0fd |
| e9b2cab088 |
| d3ccacccb1 |
| df386c8fbc |
| 4d15dd6e17 |
| 56a0499636 |
| 10fc4768e8 |
| 2b63d7d10d |
| 1f177528c1 |
| fc3bbb70a3 |
| ce3cab0295 |
| c784e5285e |
| 2bf9055cae |
| 8aba5aed4f |
| 0ce7cf5e10 |
| 96edcbccd7 |
| 4603afb6de |
| 56317b00af |
| cacec9c1f3 |
| 44ee07f0b2 |
| 6a8d5e1731 |
| d9962f65b3 |
| 119e88d87b |
| 71d9e010d9 |
| 5718caa957 |
| efd8a32ed6 |
| b22d700e16 |
| ccdacea0c4 |
| 4bdcbc1cb5 |
| 833c6cf2ec |
| dd6dbdd90a |
| 63013cc565 |
| 912402364a |
| 159f51b12b |
| 7678a91b0e |
| b13899c63d |
| 3a0d882c5e |
| cb81f0ad6d |
| 518bacf628 |
| ca63b03e55 |
| cecef88d6b |
| 7ffd805a03 |
| a7e2a0c981 |
| 2a570bb4ca |
| 5ca8f0706d |
| a9b4436cdc |
| 5f91999512 |
| 9f000beeaf |
| ff0a71f212 |
| 22dfc6ec24 |
| 48147c079e |
| d715479ef6 |
| fc8298c468 |
| e94ca5dc91 |
| 114b71b751 |
| b2770a2087 |
| cba1878bb2 |
| a2e037d6af |
| 65a2b6a223 |
| 9ed799e803 |
| c1c0ecca13 |
| ee62836383 |
| 705f598b1a |
| 414de88925 |
| 53ffd245dd |
| cf1b756206 |
| 22b58e31ef |
| b7f9bf5a28 |
| aba680b6c2 |

89

README.md


```diff
@@ -47,6 +47,8 @@ turn almost any device into a file server with resumable uploads/downloads using
 * [file manager](#file-manager) - cut/paste, rename, and delete files/folders (if you have permission)
 * [shares](#shares) - share a file or folder by creating a temporary link
 * [batch rename](#batch-rename) - select some files and press `F2` to bring up the rename UI
+* [rss feeds](#rss-feeds) - monitor a folder with your RSS reader
+* [recent uploads](#recent-uploads) - list all recent uploads
 * [media player](#media-player) - plays almost every audio format there is
 * [audio equalizer](#audio-equalizer) - and [dynamic range compressor](https://en.wikipedia.org/wiki/Dynamic_range_compression)
 * [fix unreliable playback on android](#fix-unreliable-playback-on-android) - due to phone / app settings
```

```diff
@@ -80,6 +82,7 @@ turn almost any device into a file server with resumable uploads/downloads using
 * [event hooks](#event-hooks) - trigger a program on uploads, renames etc ([examples](./bin/hooks/))
 * [upload events](#upload-events) - the older, more powerful approach ([examples](./bin/mtag/))
 * [handlers](#handlers) - redefine behavior with plugins ([examples](./bin/handlers/))
+* [ip auth](#ip-auth) - autologin based on IP range (CIDR)
 * [identity providers](#identity-providers) - replace copyparty passwords with oauth and such
 * [user-changeable passwords](#user-changeable-passwords) - if permitted, users can change their own passwords
 * [using the cloud as storage](#using-the-cloud-as-storage) - connecting to an aws s3 bucket and similar
```

```diff
@@ -218,7 +221,7 @@ also see [comparison to similar software](./docs/versus.md)
 * upload
 * ☑ basic: plain multipart, ie6 support
 * ☑ [up2k](#uploading): js, resumable, multithreaded
-* **no filesize limit!** ...unless you use Cloudflare, then it's 383.9 GiB
+* **no filesize limit!** even on Cloudflare
 * ☑ stash: simple PUT filedropper
 * ☑ filename randomizer
 * ☑ write-only folders
```

```diff
@@ -337,6 +340,9 @@ same order here too
 
 * [Chrome issue 1352210](https://bugs.chromium.org/p/chromium/issues/detail?id=1352210) -- plaintext http may be faster at filehashing than https (but also extremely CPU-intensive)
 
+* [Chrome issue 383568268](https://issues.chromium.org/issues/383568268) -- filereaders in webworkers can OOM / crash the browser-tab
+* copyparty has a workaround which seems to work well enough
+
 * [Firefox issue 1790500](https://bugzilla.mozilla.org/show_bug.cgi?id=1790500) -- entire browser can crash after uploading ~4000 small files
 
 * Android: music playback randomly stops due to [battery usage settings](#fix-unreliable-playback-on-android)
```

```diff
@@ -426,7 +432,7 @@ configuring accounts/volumes with arguments:
 
 permissions:
 * `r` (read): browse folder contents, download files, download as zip/tar, see filekeys/dirkeys
-* `w` (write): upload files, move files *into* this folder
+* `w` (write): upload files, move/copy files *into* this folder
 * `m` (move): move files/folders *from* this folder
 * `d` (delete): delete files/folders
 * `.` (dots): user can ask to show dotfiles in directory listings
```

```diff
@@ -506,7 +512,8 @@ the browser has the following hotkeys (always qwerty)
 * `ESC` close various things
 * `ctrl-K` delete selected files/folders
 * `ctrl-X` cut selected files/folders
-* `ctrl-V` paste
+* `ctrl-C` copy selected files/folders to clipboard
+* `ctrl-V` paste (move/copy)
 * `Y` download selected files
 * `F2` [rename](#batch-rename) selected file/folder
 * when a file/folder is selected (in not-grid-view):
```

@@ -575,6 +582,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path (

|

|||||||

|

|

||||||

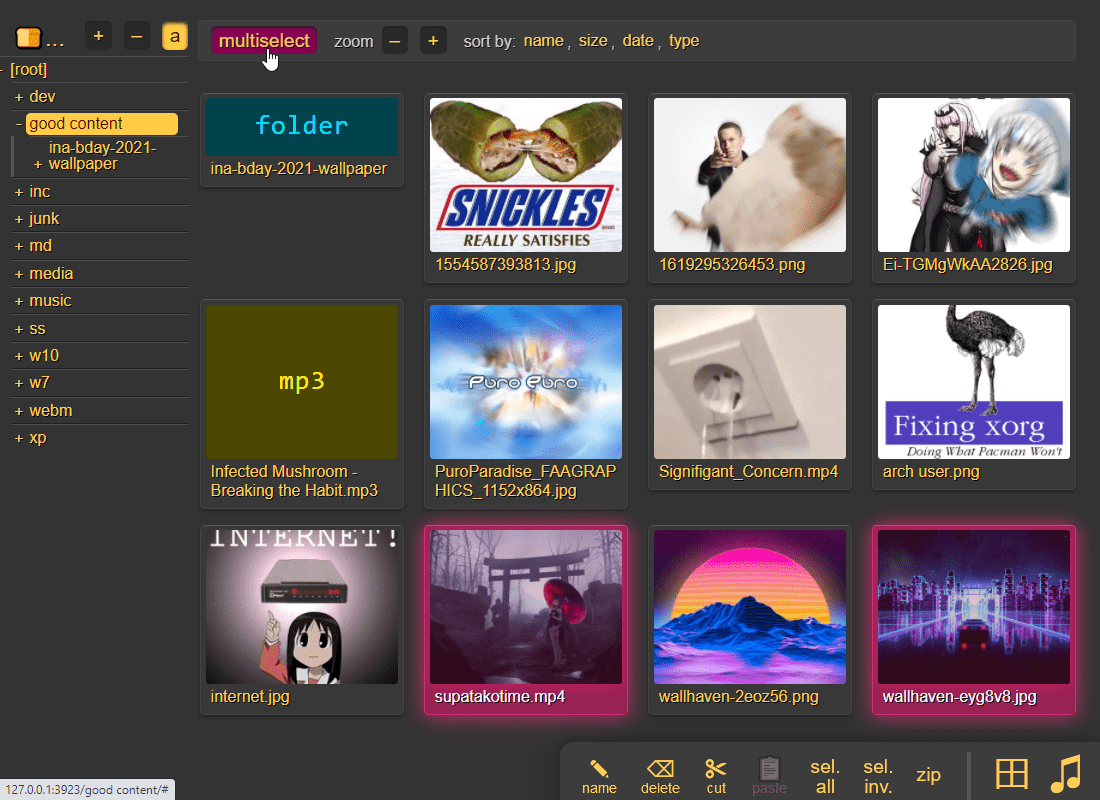

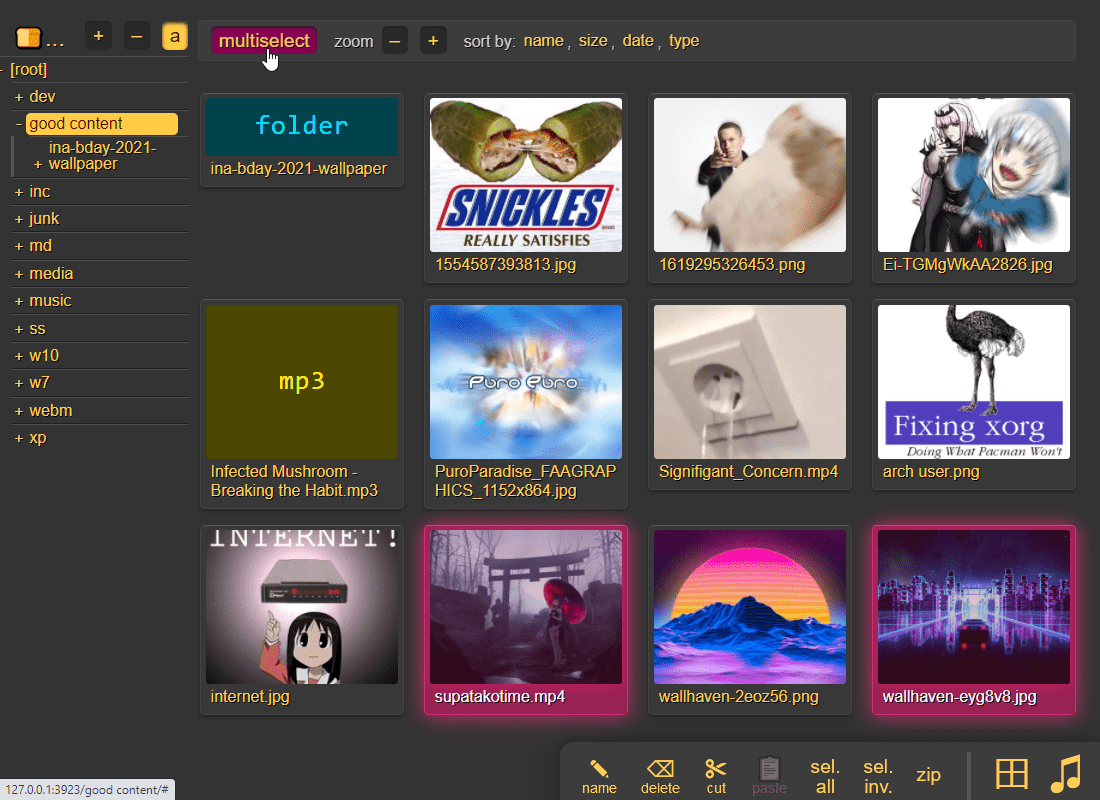

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails

|

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails

|

||||||

* can be made default globally with `--grid` or per-volume with volflag `grid`

|

* can be made default globally with `--grid` or per-volume with volflag `grid`

|

||||||

|

* enable by adding `?imgs` to a link, or disable with `?imgs=0`

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

```diff
@@ -653,7 +661,7 @@ up2k has several advantages:
 * uploads resume if you reboot your browser or pc, just upload the same files again
 * server detects any corruption; the client reuploads affected chunks
 * the client doesn't upload anything that already exists on the server
-* no filesize limit unless imposed by a proxy, for example Cloudflare, which blocks uploads over 383.9 GiB
+* no filesize limit, even when a proxy limits the request size (for example Cloudflare)
 * much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections
 * the last-modified timestamp of the file is preserved
 
```

```diff
@@ -689,6 +697,8 @@ note that since up2k has to read each file twice, `[🎈] bup` can *theoreticall
 
 if you are resuming a massive upload and want to skip hashing the files which already finished, you can enable `turbo` in the `[⚙️] config` tab, but please read the tooltip on that button
 
+if the server is behind a proxy which imposes a request-size limit, you can configure up2k to sneak below the limit with server-option `--u2sz` (the default is 96 MiB to support Cloudflare)
+
 
 ### file-search
 
```

```diff
@@ -708,7 +718,7 @@ files go into `[ok]` if they exist (and you get a link to where it is), otherwis
 
 ### unpost
 
-undo/delete accidental uploads
+undo/delete accidental uploads using the `[🧯]` tab in the UI
 
 
 
```

```diff
@@ -752,10 +762,11 @@ file selection: click somewhere on the line (not the link itself), then:
 * shift-click another line for range-select
 
 * cut: select some files and `ctrl-x`
+* copy: select some files and `ctrl-c`
 * paste: `ctrl-v` in another folder
 * rename: `F2`
 
-you can move files across browser tabs (cut in one tab, paste in another)
+you can copy/move files across browser tabs (cut/copy in one tab, paste in another)
 
 
 ## shares
```

```diff
@@ -842,6 +853,41 @@ or a mix of both:
 the metadata keys you can use in the format field are the ones in the file-browser table header (whatever is collected with `-mte` and `-mtp`)
 
 
+## rss feeds
+
+monitor a folder with your RSS reader , optionally recursive
+
+must be enabled per-volume with volflag `rss` or globally with `--rss`
+
+the feed includes itunes metadata for use with podcast readers such as [AntennaPod](https://antennapod.org/)
+
+a feed example: https://cd.ocv.me/a/d2/d22/?rss&fext=mp3
+
+url parameters:
+
+* `pw=hunter2` for password auth
+* `recursive` to also include subfolders
+* `title=foo` changes the feed title (default: folder name)
+* `fext=mp3,opus` only include mp3 and opus files (default: all)
+* `nf=30` only show the first 30 results (default: 250)
+* `sort=m` sort by mtime (file last-modified), newest first (default)
+* `u` = upload-time; NOTE: non-uploaded files have upload-time `0`
+* `n` = filename
+* `a` = filesize
+* uppercase = reverse-sort; `M` = oldest file first
+
+
+## recent uploads
+
+list all recent uploads by clicking "show recent uploads" in the controlpanel
+
+will show uploader IP and upload-time if the visitor has the admin permission
+
+* global-option `--ups-when` makes upload-time visible to all users, and not just admins
+
+note that the [🧯 unpost](#unpost) feature is better suited for viewing *your own* recent uploads, as it includes the option to undo/delete them
+
+
 ## media player
 
 plays almost every audio format there is (if the server has FFmpeg installed for on-demand transcoding)
```
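The `## rss feeds` section added in the README hunk above lists the feed's url parameters. As a rough illustration (not copyparty code), the hypothetical helper `rss_url` below assembles a feed URL from those parameters; the only real URL used is the `cd.ocv.me` feed example from the README, and the parameter order it produces is an arbitrary choice.

```python
from urllib.parse import quote


def rss_url(folder_url, pw=None, recursive=False, title=None,
            fext=None, nf=None, sort=None):
    """Hypothetical helper: build an RSS feed URL for a copyparty folder.

    folder_url should end with "/"; each keyword maps to one url parameter
    from the README (pw, recursive, title, fext, nf, sort).
    """
    params = ["rss"]  # bare `rss` requests the feed, as in "?rss&fext=mp3"
    if pw:
        params.append("pw=" + quote(pw, safe=""))
    if recursive:
        params.append("recursive")  # valueless flag: include subfolders
    if title:
        params.append("title=" + quote(title, safe=""))
    if fext:
        params.append("fext=" + ",".join(fext))  # e.g. ["mp3", "opus"]
    if nf is not None:
        params.append("nf=%d" % nf)  # max number of results
    if sort:
        params.append("sort=" + sort)  # m/u/n/a; uppercase reverses
    return folder_url + "?" + "&".join(params)


print(rss_url("https://cd.ocv.me/a/d2/d22/", fext=["mp3"]))
# prints https://cd.ocv.me/a/d2/d22/?rss&fext=mp3
```

`recursive` is emitted without a value because the README describes it as a plain flag; `urllib.parse.urlencode` would have produced `recursive=` instead.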

```diff
@@ -1066,11 +1112,12 @@ using the GUI (winXP or later):
 * on winXP only, click the `Sign up for online storage` hyperlink instead and put the URL there
 * providing your password as the username is recommended; the password field can be anything or empty
 
-known client bugs:
+the webdav client that's built into windows has the following list of bugs; you can avoid all of these by connecting with rclone instead:
 * win7+ doesn't actually send the password to the server when reauthenticating after a reboot unless you first try to login with an incorrect password and then switch to the correct password
 * or just type your password into the username field instead to get around it entirely
 * connecting to a folder which allows anonymous read will make writing impossible, as windows has decided it doesn't need to login
 * workaround: connect twice; first to a folder which requires auth, then to the folder you actually want, and leave both of those mounted
+* or set the server-option `--dav-auth` to force password-auth for all webdav clients
 * win7+ may open a new tcp connection for every file and sometimes forgets to close them, eventually needing a reboot
 * maybe NIC-related (??), happens with win10-ltsc on e1000e but not virtio
 * windows cannot access folders which contain filenames with invalid unicode or forbidden characters (`<>:"/\|?*`), or names ending with `.`
```

```diff
@@ -1237,7 +1284,7 @@ note:
 
 ### exclude-patterns
 
-to save some time, you can provide a regex pattern for filepaths to only index by filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash \.iso$` or the volflag `:c,nohash=\.iso$`, this has the following consequences:
+to save some time, you can provide a regex pattern for filepaths to only index by filename/path/size/last-modified (and not the hash of the file contents) by setting `--no-hash '\.iso$'` or the volflag `:c,nohash=\.iso$`, this has the following consequences:
 * initial indexing is way faster, especially when the volume is on a network disk
 * makes it impossible to [file-search](#file-search)
 * if someone uploads the same file contents, the upload will not be detected as a dupe, so it will not get symlinked or rejected
```

```diff
@@ -1248,6 +1295,8 @@ similarly, you can fully ignore files/folders using `--no-idx [...]` and `:c,noi
 
 if you set `--no-hash [...]` globally, you can enable hashing for specific volumes using flag `:c,nohash=`
 
+to exclude certain filepaths from search-results, use `--srch-excl` or volflag `srch_excl` instead of `--no-idx`, for example `--srch-excl 'password|logs/[0-9]'`
+
 ### filesystem guards
 
 avoid traversing into other filesystems using `--xdev` / volflag `:c,xdev`, skipping any symlinks or bind-mounts to another HDD for example
```
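The `--no-hash` example in the README now wraps the regex in single quotes, and the new `--srch-excl` example does the same. A likely reason: in a POSIX shell an unquoted backslash is consumed before copyparty ever sees the argument, so `\.iso$` arrives as `.iso$` (where `.` matches any character), and an unquoted `|` would start a shell pipeline. The sketch below uses Python's `shlex`, which follows POSIX shell quoting, to show what the program receives. `path_matches` is a hypothetical helper that assumes the pattern is searched anywhere in the filepath (`re.search`); the diff does not show how copyparty applies it internally.

```python
import re
import shlex

# what the program receives from the shell, unquoted vs. single-quoted
unquoted = shlex.split(r"--no-hash \.iso$")
quoted = shlex.split(r"--no-hash '\.iso$'")
print(unquoted)  # ['--no-hash', '.iso$']   backslash removed by the shell
print(quoted)    # ['--no-hash', '\\.iso$'] regex arrives intact


def path_matches(pattern, path):
    """Assumption: a filepath is tested against the pattern with re.search."""
    return re.search(pattern, path) is not None


# with the backslash gone, "." matches any character, so an unrelated name matches
print(path_matches(unquoted[1], "backup/fooiso"))  # True
print(path_matches(quoted[1], "backup/fooiso"))    # False

# the --srch-excl example: either alternative anywhere in the path
srch_excl = "password|logs/[0-9]"
for p in ["docs/passwords.txt", "logs/2024/app.log", "logs/old/app.log"]:
    print(p, path_matches(srch_excl, p))
```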

```diff
@@ -1432,13 +1481,31 @@ redefine behavior with plugins ([examples](./bin/handlers/))
 replace 404 and 403 errors with something completely different (that's it for now)
 
 
+## ip auth
+
+autologin based on IP range (CIDR) , using the global-option `--ipu`
+
+for example, if everyone with an IP that starts with `192.168.123` should automatically log in as the user `spartacus`, then you can either specify `--ipu=192.168.123.0/24=spartacus` as a commandline option, or put this in a config file:
+
+```yaml
+[global]
+ipu: 192.168.123.0/24=spartacus
+```
+
+repeat the option to map additional subnets
+
+**be careful with this one!** if you have a reverseproxy, then you definitely want to make sure you have [real-ip](#real-ip) configured correctly, and it's probably a good idea to nullmap the reverseproxy's IP just in case; so if your reverseproxy is sending requests from `172.24.27.9` then that would be `--ipu=172.24.27.9/32=`
+
+
 ## identity providers
 
 replace copyparty passwords with oauth and such
 
 you can disable the built-in password-based login system, and instead replace it with a separate piece of software (an identity provider) which will then handle authenticating / authorizing of users; this makes it possible to login with passkeys / fido2 / webauthn / yubikey / ldap / active directory / oauth / many other single-sign-on contraptions
 
-a popular choice is [Authelia](https://www.authelia.com/) (config-file based), another one is [authentik](https://goauthentik.io/) (GUI-based, more complex)
+* the regular config-defined users will be used as a fallback for requests which don't include a valid (trusted) IdP username header
+
+some popular identity providers are [Authelia](https://www.authelia.com/) (config-file based) and [authentik](https://goauthentik.io/) (GUI-based, more complex)
 
 there is a [docker-compose example](./docs/examples/docker/idp-authelia-traefik) which is hopefully a good starting point (alternatively see [./docs/idp.md](./docs/idp.md) if you're the DIY type)
 
```
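The new `## ip auth` section maps client IP ranges to usernames with `--ipu=CIDR=user`, including a "nullmap" (empty username) for a reverse proxy's address. The sketch below illustrates that kind of CIDR lookup with Python's `ipaddress` module. It is not copyparty's implementation: `parse_ipu` and `autologin_user` are hypothetical names, and first-match-wins precedence is an assumption, since the README does not say how overlapping ranges are resolved.

```python
import ipaddress


def parse_ipu(rules):
    """Parse "CIDR=username" strings into (network, username) pairs.

    An empty username (e.g. "172.24.27.9/32=") is a null-mapping:
    clients in that range get no automatic login.
    """
    parsed = []
    for rule in rules:
        cidr, _, user = rule.partition("=")
        parsed.append((ipaddress.ip_network(cidr), user))
    return parsed


def autologin_user(client_ip, rules):
    """Return the username to autologin as, or None (first match wins; assumed)."""
    ip = ipaddress.ip_address(client_ip)
    for net, user in rules:
        if ip in net:  # False for IPv4 vs IPv6 mismatches
            return user or None
    return None


rules = parse_ipu(["172.24.27.9/32=", "192.168.123.0/24=spartacus"])
print(autologin_user("192.168.123.45", rules))  # spartacus
print(autologin_user("172.24.27.9", rules))     # None (nullmapped proxy)
```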

```diff
@@ -1640,6 +1707,7 @@ scrape_configs:
 currently the following metrics are available,
 * `cpp_uptime_seconds` time since last copyparty restart
 * `cpp_boot_unixtime_seconds` same but as an absolute timestamp
+* `cpp_active_dl` number of active downloads
 * `cpp_http_conns` number of open http(s) connections
 * `cpp_http_reqs` number of http(s) requests handled
 * `cpp_sus_reqs` number of 403/422/malicious requests
```
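The hunk above adds `cpp_active_dl` to the list of exported metrics, and the hunk context (`scrape_configs:`) suggests they are meant to be scraped by Prometheus. Assuming the usual Prometheus text exposition format (one `name value` sample per line, `#` comment lines), a scraper-side sketch for reading the unlabeled `cpp_` samples could look like this. The function name and the sample text are made up for illustration.

```python
def parse_cpp_metrics(text, prefix="cpp_"):
    """Return {metric_name: value} for unlabeled samples starting with prefix."""
    out = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        name, _, rest = line.partition(" ")
        parts = rest.split()
        if name.startswith(prefix) and "{" not in name and parts:
            out[name] = float(parts[0])  # ignore an optional trailing timestamp
    return out


sample = """\
# TYPE cpp_active_dl gauge
cpp_active_dl 2
cpp_http_conns 5
cpp_uptime_seconds 3600.5
"""
print(parse_cpp_metrics(sample))
```

Labeled samples (`name{...} value`) are skipped on purpose to keep the sketch short; a real scraper would use Prometheus itself or a proper parser.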

```diff
@@ -1889,6 +1957,9 @@ quick summary of more eccentric web-browsers trying to view a directory index:
 | **ie4** and **netscape** 4.0 | can browse, upload with `?b=u`, auth with `&pw=wark` |
 | **ncsa mosaic** 2.7 | does not get a pass, [pic1](https://user-images.githubusercontent.com/241032/174189227-ae816026-cf6f-4be5-a26e-1b3b072c1b2f.png) - [pic2](https://user-images.githubusercontent.com/241032/174189225-5651c059-5152-46e9-ac26-7e98e497901b.png) |
 | **SerenityOS** (7e98457) | hits a page fault, works with `?b=u`, file upload not-impl |
+| **nintendo 3ds** | can browse, upload, view thumbnails (thx bnjmn) |
+
+<p align="center"><img src="https://github.com/user-attachments/assets/88deab3d-6cad-4017-8841-2f041472b853" /></p>
 
 
 # client examples
```


```diff
@@ -2,7 +2,7 @@ standalone programs which are executed by copyparty when an event happens (uploa
 
 these programs either take zero arguments, or a filepath (the affected file), or a json message with filepath + additional info
 
-run copyparty with `--help-hooks` for usage details / hook type explanations (xm/xbu/xau/xiu/xbr/xar/xbd/xad/xban)
+run copyparty with `--help-hooks` for usage details / hook type explanations (xm/xbu/xau/xiu/xbc/xac/xbr/xar/xbd/xad/xban)
 
 > **note:** in addition to event hooks (the stuff described here), copyparty has another api to run your programs/scripts while providing way more information such as audio tags / video codecs / etc and optionally daisychaining data between scripts in a processing pipeline; if that's what you want then see [mtp plugins](../mtag/) instead
 
```


```diff
@@ -393,7 +393,8 @@ class Gateway(object):
         if r.status != 200:
             self.closeconn()
             info("http error %s reading dir %r", r.status, web_path)
-            raise FuseOSError(errno.ENOENT)
+            err = errno.ENOENT if r.status == 404 else errno.EIO
+            raise FuseOSError(err)
 
         ctype = r.getheader("Content-Type", "")
         if ctype == "application/json":
```
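The `class Gateway` hunk above stops reporting every failed directory listing as `ENOENT`: only HTTP 404 becomes "no such file", and any other status becomes `EIO`. A standalone sketch of that mapping, using the standard-library `OSError` in place of fusepy's `FuseOSError`; `listing_error` is a hypothetical helper name.

```python
import errno
import os


def listing_error(status, path):
    """Hypothetical helper: turn a failed HTTP directory listing into an OSError.

    404 -> ENOENT (the folder really does not exist)
    any other status (403, 500, ...) -> EIO, so callers do not mistake a
    permission or server problem for a missing folder.
    """
    err = errno.ENOENT if status == 404 else errno.EIO
    return OSError(err, os.strerror(err), path)


for status in (404, 403, 500):
    e = listing_error(status, "/music")
    print(status, errno.errorcode[e.errno], type(e).__name__)
```

Constructing `OSError` with `ENOENT` yields a `FileNotFoundError` instance, which is one practical benefit of keeping the errno accurate.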

```diff
@@ -1128,7 +1129,7 @@ def main():
 
     # dircache is always a boost,
     # only want to disable it for tests etc,
-    cdn = 9 # max num dirs; 0=disable
+    cdn = 24 # max num dirs; keep larger than max dir depth; 0=disable
     cds = 1 # numsec until an entry goes stale
 
     where = "local directory"
```


231

bin/u2c.py


```diff
@@ -1,8 +1,8 @@
 #!/usr/bin/env python3
 from __future__ import print_function, unicode_literals
 
-S_VERSION = "2.1"
-S_BUILD_DT = "2024-09-23"
+S_VERSION = "2.7"
+S_BUILD_DT = "2024-12-06"
 
 """
 u2c.py: upload to copyparty
```

```diff
@@ -62,6 +62,9 @@ else:
 
     unicode = str
 
+
+WTF8 = "replace" if PY2 else "surrogateescape"
+
 VT100 = platform.system() != "Windows"
 
 
```

```diff
@@ -151,6 +154,7 @@ class HCli(object):
         self.tls = tls
         self.verify = ar.te or not ar.td
         self.conns = []
+        self.hconns = []
         if tls:
             import ssl
 
```

```diff
@@ -170,7 +174,7 @@ class HCli(object):
             "User-Agent": "u2c/%s" % (S_VERSION,),
         }
 
-    def _connect(self):
+    def _connect(self, timeout):
         args = {}
         if PY37:
             args["blocksize"] = 1048576
```

```diff
@@ -182,9 +186,11 @@ class HCli(object):
             if self.ctx:
                 args = {"context": self.ctx}
 
-        return C(self.addr, self.port, timeout=999, **args)
+        return C(self.addr, self.port, timeout=timeout, **args)
 
     def req(self, meth, vpath, hdrs, body=None, ctype=None):
+        now = time.time()
+
         hdrs.update(self.base_hdrs)
         if self.ar.a:
             hdrs["PW"] = self.ar.a
```

```diff
@@ -195,7 +201,11 @@ class HCli(object):
                 0 if not body else body.len if hasattr(body, "len") else len(body)
             )
 
-        c = self.conns.pop() if self.conns else self._connect()
+        # large timeout for handshakes (safededup)
+        conns = self.hconns if ctype == MJ else self.conns
+        while conns and self.ar.cxp < now - conns[0][0]:
+            conns.pop(0)[1].close()
+        c = conns.pop()[1] if conns else self._connect(999 if ctype == MJ else 128)
         try:
             c.request(meth, vpath, body, hdrs)
             if PY27:
```

@@ -204,8 +214,15 @@ class HCli(object):
 rsp = c.getresponse()
 
 data = rsp.read()
-self.conns.append(c)
+conns.append((time.time(), c))
 return rsp.status, data.decode("utf-8")
+except http_client.BadStatusLine:
+if self.ar.cxp > 4:
+t = "\nWARNING: --cxp probably too high; reducing from %d to 4"
+print(t % (self.ar.cxp,))
+self.ar.cxp = 4
+c.close()
+raise
 except:
 c.close()
 raise
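The hunks above replace the plain `self.conns` list with lists of `(timestamp, connection)` pairs. Before a pooled connection is reused, any that have been idle longer than `--cxp` seconds are closed. JSON requests (handshakes) get their own pool, `self.hconns`, with a longer socket timeout (999 s instead of 128 s). A `BadStatusLine`, which suggests the server dropped an idle connection first, lowers `--cxp` to 4.

Below is a minimal sketch of just the expiry bookkeeping. The `IdlePool` and `DummyConn` classes are hypothetical stand-ins for the real pool and `http.client` connections, and the optional `now` arguments exist only to make the example deterministic:

```python
import time


class DummyConn:
    """Stand-in for an http.client connection (hypothetical, for illustration)."""

    def __init__(self, name):
        self.name = name
        self.closed = False

    def close(self):
        self.closed = True


class IdlePool:
    """Reuse connections, but close any that sat idle longer than max_idle seconds."""

    def __init__(self, max_idle, connect):
        self.max_idle = max_idle  # plays the role of --cxp
        self.connect = connect  # factory for fresh connections
        self.conns = []  # (time returned to pool, conn), oldest first

    def get(self, now=None):
        now = time.time() if now is None else now
        # the oldest entries sit at the front, so expired ones are dropped from there
        while self.conns and self.max_idle < now - self.conns[0][0]:
            self.conns.pop(0)[1].close()
        # reuse the most recently returned connection, else open a new one
        return self.conns.pop()[1] if self.conns else self.connect()

    def put(self, conn, now=None):
        self.conns.append((time.time() if now is None else now, conn))


made = []


def factory():
    conn = DummyConn(len(made))
    made.append(conn)
    return conn


pool = IdlePool(57, factory)
a = pool.get(now=0)
pool.put(a, now=0)
print(pool.get(now=10) is a)  # True: idle for 10 s, still reusable
pool.put(a, now=10)
b = pool.get(now=100)  # idle for 90 s > 57 s, so `a` is closed
print(a.closed, b is a)  # True False
```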

@@ -228,7 +245,7 @@ class File(object):
 self.lmod = lmod # type: float
 
 self.abs = os.path.join(top, rel) # type: bytes
-self.name = self.rel.split(b"/")[-1].decode("utf-8", "replace") # type: str
+self.name = self.rel.split(b"/")[-1].decode("utf-8", WTF8) # type: str
 
 # set by get_hashlist
 self.cids = [] # type: list[tuple[str, int, int]] # [ hash, ofs, sz ]
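`WTF8` is defined outside this excerpt. This sketch assumes it names Python's `surrogateescape` error handler on Python 3. Under that assumption, switching away from `"replace"` means filename bytes that are not valid UTF-8 survive a decode/encode round trip instead of collapsing into U+FFFD:

```python
# Assumption: WTF8 is "surrogateescape" on Python 3; it is not defined in this diff.
WTF8 = "surrogateescape"

raw = b"caf\xe9.txt"  # Latin-1 encoded filename; not valid UTF-8

lossy = raw.decode("utf-8", "replace")  # 'caf\ufffd.txt'
kept = raw.decode("utf-8", WTF8)  # 'caf\udce9.txt'

print(lossy.encode("utf-8") == raw)  # False: the original byte is gone
print(kept.encode("utf-8", WTF8) == raw)  # True: exact round trip
```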

@@ -267,10 +284,41 @@ class FileSlice(object):
 raise Exception(9)
 tlen += clen
 
-self.len = tlen
+self.len = self.tlen = tlen
 self.cdr = self.car + self.len
 self.ofs = 0 # type: int
-self.f = open(file.abs, "rb", 512 * 1024)
+
+self.f = None
+self.seek = self._seek0
+self.read = self._read0
+
+def subchunk(self, maxsz, nth):
+if self.tlen <= maxsz:
+return -1
+
+if not nth:
+self.car0 = self.car
+self.cdr0 = self.cdr
+
+self.car = self.car0 + maxsz * nth
+if self.car >= self.cdr0:
+return -2
+
+self.cdr = self.car + min(self.cdr0 - self.car, maxsz)
+self.len = self.cdr - self.car
+self.seek(0)
+return nth
+
+def unsub(self):
+self.car = self.car0
+self.cdr = self.cdr0
+self.len = self.tlen
+
+def _open(self):
+self.seek = self._seek
+self.read = self._read
+
+self.f = open(self.file.abs, "rb", 512 * 1024)
 self.f.seek(self.car)
 
 # https://stackoverflow.com/questions/4359495/what-is-exactly-a-file-like-object-in-python

@@ -282,10 +330,15 @@ class FileSlice(object):
 except:
 pass # py27 probably
 
+def close(self, *a, **ka):
+return # until _open
+
 def tell(self):
 return self.ofs
 
-def seek(self, ofs, wh=0):
+def _seek(self, ofs, wh=0):
+assert self.f # !rm
+
 if wh == 1:
 ofs = self.ofs + ofs
 elif wh == 2:

@@ -299,12 +352,22 @@ class FileSlice(object):
 self.ofs = ofs
 self.f.seek(self.car + ofs)
 
-def read(self, sz):
+def _read(self, sz):
+assert self.f # !rm
+
 sz = min(sz, self.len - self.ofs)
 ret = self.f.read(sz)
 self.ofs += len(ret)
 return ret
 
+def _seek0(self, ofs, wh=0):
+self._open()
+return self.seek(ofs, wh)
+
+def _read0(self, sz):
+self._open()
+return self.read(sz)
+
 
 class MTHash(object):
 def __init__(self, cores):
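These hunks stop `FileSlice` from opening its file in `__init__`. Instead, `seek` and `read` start out bound to `_seek0` and `_read0`, which call `_open()`. `_open()` then rebinds them to the real `_seek` and `_read` before the first access, and `close()` does nothing until then. As a result, a slice that is created but never read never holds a file handle.

Below is a stripped-down sketch of that pattern. `LazySlice` is a hypothetical name. The `wh == 2` branch is not visible in the diff, so here it is assumed to seek relative to the end of the slice:

```python
import os
import tempfile


class LazySlice:
    """File-like view of [start, start+length) that opens the file on first use."""

    def __init__(self, path, start, length):
        self.path = path
        self.car = start  # absolute offset of the slice within the file
        self.len = length
        self.ofs = 0  # current position relative to the slice
        self.f = None
        # instance attributes; rebound to the real methods by _open()
        self.seek = self._seek0
        self.read = self._read0

    def _open(self):
        self.seek = self._seek
        self.read = self._read
        self.f = open(self.path, "rb")
        self.f.seek(self.car)

    def _seek0(self, ofs, wh=0):
        self._open()
        return self.seek(ofs, wh)

    def _read0(self, sz):
        self._open()
        return self.read(sz)

    def _seek(self, ofs, wh=0):
        if wh == 1:
            ofs = self.ofs + ofs
        elif wh == 2:
            ofs = self.len + ofs  # assumption: relative to the end of the slice
        self.ofs = ofs
        self.f.seek(self.car + ofs)

    def _read(self, sz):
        sz = min(sz, self.len - self.ofs)  # never read past the slice
        ret = self.f.read(sz)
        self.ofs += len(ret)
        return ret

    def close(self):
        if self.f:  # nothing to close until the first seek/read
            self.f.close()


fd, path = tempfile.mkstemp()
os.write(fd, b"0123456789")
os.close(fd)

sl = LazySlice(path, 2, 5)
was_unopened = sl.f is None  # True: no file handle yet
first = sl.read(100)  # b'23456' (clamped to the 5-byte slice)
sl.seek(1)
second = sl.read(2)  # b'34'
sl.close()
os.remove(path)
```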

@@ -557,13 +620,17 @@ def walkdir(err, top, excl, seen):
 for ap, inf in sorted(statdir(err, top)):
 if excl.match(ap):
 continue
-yield ap, inf
 if stat.S_ISDIR(inf.st_mode):
+yield ap, inf
 try:
 for x in walkdir(err, ap, excl, seen):
 yield x
 except Exception as ex:
 err.append((ap, str(ex)))
+elif stat.S_ISREG(inf.st_mode):
+yield ap, inf
+else:
+err.append((ap, "irregular filetype 0%o" % (inf.st_mode,)))
 
 
 def walkdirs(err, tops, excl):
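After this change, `walkdir` yields a directory before recursing into it and yields regular files. Every other file type (FIFOs, sockets, device nodes) is reported in `err` with its mode in octal instead of being yielded.

`statdir`, `excl` and the symlink-loop guard `seen` are not shown here. The sketch below (hypothetical name `walk_classify`) therefore substitutes `os.listdir` plus `os.stat`, drops the exclusion filter, and has no loop protection. It illustrates only the classification:

```python
import os
import stat
import tempfile


def walk_classify(err, top):
    """Yield (path, stat_result) for directories and regular files under top.

    Anything else is appended to err as (path, message) instead of being yielded.
    """
    for name in sorted(os.listdir(top)):
        ap = os.path.join(top, name)
        try:
            inf = os.stat(ap)  # follows symlinks
        except OSError as ex:
            err.append((ap, str(ex)))
            continue
        if stat.S_ISDIR(inf.st_mode):
            yield ap, inf  # the directory itself, before its contents
            try:
                for x in walk_classify(err, ap):
                    yield x
            except Exception as ex:
                err.append((ap, str(ex)))
        elif stat.S_ISREG(inf.st_mode):
            yield ap, inf
        else:
            err.append((ap, "irregular filetype 0%o" % (inf.st_mode,)))


with tempfile.TemporaryDirectory() as td:
    os.mkdir(os.path.join(td, "sub"))
    open(os.path.join(td, "sub", "a.txt"), "w").close()
    errs = []
    names = [os.path.relpath(p, td) for p, _ in walk_classify(errs, td)]

print(names)  # ['sub', 'sub/a.txt'] on POSIX
```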

@@ -609,11 +676,12 @@ def walkdirs(err, tops, excl):
 
 # mostly from copyparty/util.py
 def quotep(btxt):
+# type: (bytes) -> bytes
 quot1 = quote(btxt, safe=b"/")
 if not PY2:
 quot1 = quot1.encode("ascii")
 
-return quot1.replace(b" ", b"+") # type: ignore
+return quot1.replace(b" ", b"%20") # type: ignore
 
 
 # from copyparty/util.py
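`quotep` percent-encodes a bytes path while leaving `/` alone. This sketch assumes `quote` is the standard-library `urllib.parse.quote` on Python 3. In that case spaces are already emitted as `%20` and a literal `+` as `%2B`, so the final `.replace()` normally finds nothing to change. If it ever did, `%20` is the form that decodes back to a space in a URL path, whereas a `+` in a path stays a literal plus sign.

A Python-3-only sketch:

```python
from urllib.parse import quote, unquote


def quotep(btxt):
    # type: (bytes) -> bytes
    """Percent-encode a bytes path for a URL, keeping '/' separators."""
    quot1 = quote(btxt, safe=b"/").encode("ascii")  # quote() returns str on py3
    # spaces were already encoded as %20 by quote(); this is only a safeguard
    return quot1.replace(b" ", b"%20")


print(quotep(b"my dir/a+b.txt"))  # b'my%20dir/a%2Bb.txt'
print(quotep("ä/x".encode("utf-8")))  # b'%C3%A4/x'
print(unquote("a+b%20c"))  # a+b c  ('+' is not a space in a path)
```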

@@ -641,7 +709,7 @@ def up2k_chunksize(filesize):
 while True:
 for mul in [1, 2]:
 nchunks = math.ceil(filesize * 1.0 / chunksize)
-if nchunks <= 256 or (chunksize >= 32 * 1024 * 1024 and nchunks < 4096):
+if nchunks <= 256 or (chunksize >= 32 * 1024 * 1024 and nchunks <= 4096):
 return chunksize
 
 chunksize += stepsize
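Only one comparison in `up2k_chunksize` is visible in this hunk: `nchunks < 4096` became `<= 4096`. The function below fills in the surrounding loop from memory of `copyparty/util.py`. It starts at 1 MiB chunks with a 512 KiB step that doubles every second iteration; treat those starting values as an assumption.

The `inclusive` flag is hypothetical and exists only to compare old and new behavior. With these starting values, a file of exactly 4096 x 32 MiB (128 GiB) now gets 32 MiB chunks instead of 48 MiB ones.

```python
import math

MiB = 1024 * 1024


def up2k_chunksize(filesize, inclusive=True):
    """Chunk size giving at most 256 chunks, or at most 4096 once chunks reach 32 MiB.

    Assumed starting point: 1 MiB chunks, grown by a 512 KiB step that doubles
    every second iteration. `inclusive` is hypothetical: True mimics the new
    "<= 4096" comparison, False the old "< 4096".
    """
    chunksize = 1 * MiB
    stepsize = 512 * 1024
    while True:
        for mul in [1, 2]:
            nchunks = math.ceil(filesize * 1.0 / chunksize)
            many_ok = nchunks <= 4096 if inclusive else nchunks < 4096
            if nchunks <= 256 or (chunksize >= 32 * MiB and many_ok):
                return chunksize
            chunksize += stepsize
            stepsize *= mul


print(up2k_chunksize(100 * MiB) // MiB)  # 1
edge = 4096 * 32 * MiB  # exactly 128 GiB
print(up2k_chunksize(edge) // MiB)  # 32
print(up2k_chunksize(edge, inclusive=False) // MiB)  # 48
```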

@@ -720,7 +788,7 @@ def handshake(ar, file, search):
 url = file.url
 else:
 if b"/" in file.rel:
-url = quotep(file.rel.rsplit(b"/", 1)[0]).decode("utf-8", "replace")
+url = quotep(file.rel.rsplit(b"/", 1)[0]).decode("utf-8")
 else:
 url = ""
 url = ar.vtop + url

@@ -728,6 +796,7 @@ def handshake(ar, file, search):
 while True:
 sc = 600
 txt = ""
+t0 = time.time()
 try:
 zs = json.dumps(req, separators=(",\n", ": "))
 sc, txt = web.req("POST", url, {}, zs.encode("utf-8"), MJ)

@@ -752,7 +821,9 @@ def handshake(ar, file, search):
 print("\nERROR: login required, or wrong password:\n%s" % (txt,))
 raise BadAuth()
 
-eprint("handshake failed, retrying: %s\n %s\n\n" % (file.name, em))
+t = "handshake failed, retrying: %s\n t0=%.3f t1=%.3f td=%.3f\n %s\n\n"
+now = time.time()
+eprint(t % (file.name, t0, now, now - t0, em))
 time.sleep(ar.cd)
 
 try:

@@ -763,15 +834,15 @@ def handshake(ar, file, search):
 if search:
 return r["hits"], False
 
-file.url = r["purl"]
+file.url = quotep(r["purl"].encode("utf-8", WTF8)).decode("utf-8")
 file.name = r["name"]
 file.wark = r["wark"]
 
 return r["hash"], r["sprs"]
 
 
-def upload(fsl, stats):
-# type: (FileSlice, str) -> None
+def upload(fsl, stats, maxsz):
+# type: (FileSlice, str, int) -> None
 """upload a range of file data, defined by one or more `cid` (chunk-hash)"""
 
 ctxt = fsl.cids[0]

@@ -789,7 +860,17 @@ def upload(fsl, stats):
 if stats:
 headers["X-Up2k-Stat"] = stats
 
+nsub = 0
 try:
+while nsub != -1:
+nsub = fsl.subchunk(maxsz, nsub)
+if nsub == -2:
+return
+if nsub >= 0:
+headers["X-Up2k-Subc"] = str(maxsz * nsub)
+headers.pop(CLEN, None)
+nsub += 1
+
 sc, txt = web.req("POST", fsl.file.url, headers, fsl, MO)
 
 if sc == 400:

@@ -803,7 +884,10 @@ def upload(fsl, stats):
 if sc >= 400:
 raise Exception("http %s: %s" % (sc, txt))
 finally:
+if fsl.f:
 fsl.f.close()
+if nsub != -1:
+fsl.unsub()
 
 
 class Ctl(object):
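Together, the `FileSlice` and `upload()` changes let a slice larger than `maxsz` be split into several POSTs. `maxsz` is fed from the new `--szm` option; the conversion from MiB to bytes is not shown in this excerpt.

`subchunk()` has three outcomes:

- It returns `-1` when the slice fits in one request.
- It returns `-2` once `nth` is past the end.
- Otherwise it narrows the slice to the `nth` window of at most `maxsz` bytes.

Each partial POST carries `X-Up2k-Subc` with that window's offset inside the slice, and `unsub()` restores the full range afterwards.

The sketch replays only this control flow, with two hypothetical helpers that return what would be sent instead of sending it:

```python
def subchunk_window(car0, cdr0, maxsz, nth):
    """Same arithmetic as FileSlice.subchunk for the slice [car0, cdr0).

    Returns -1 if the slice fits in one POST, -2 if nth is past the end,
    otherwise the (start, end) byte range of the nth sub-chunk.
    """
    if cdr0 - car0 <= maxsz:
        return -1
    car = car0 + maxsz * nth
    if car >= cdr0:
        return -2
    return car, car + min(cdr0 - car, maxsz)


def plan_posts(car0, cdr0, maxsz):
    """List the POSTs upload() would make: (X-Up2k-Subc value or None, nbytes)."""
    posts = []
    nsub = 0
    while nsub != -1:
        win = subchunk_window(car0, cdr0, maxsz, nsub)
        if win == -2:
            break  # all windows sent
        if win == -1:
            posts.append((None, cdr0 - car0))  # small enough: one plain POST
            nsub = -1
        else:
            posts.append((str(maxsz * nsub), win[1] - win[0]))
            nsub += 1
    return posts


# a 10-byte slice with maxsz=4 becomes three POSTs at offsets 0, 4 and 8
print(plan_posts(100, 110, 4))  # [('0', 4), ('4', 4), ('8', 2)]
print(plan_posts(100, 103, 4))  # [(None, 3)]
```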

@@ -869,8 +953,8 @@ class Ctl(object):
 self.hash_b = 0
 self.up_f = 0
 self.up_c = 0
-self.up_b = 0
-self.up_br = 0
+self.up_b = 0 # num bytes handled
+self.up_br = 0 # num bytes actually transferred
 self.uploader_busy = 0
 self.serialized = False
 

@@ -935,7 +1019,7 @@ class Ctl(object):
 print(" %d up %s" % (ncs - nc, cid))
 stats = "%d/0/0/%d" % (nf, self.nfiles - nf)
 fslice = FileSlice(file, [cid])
-upload(fslice, stats)
+upload(fslice, stats, self.ar.szm)
 
 print(" ok!")
 if file.recheck:

@@ -949,8 +1033,8 @@ class Ctl(object):
 handshake(self.ar, file, False)
 
 def _fancy(self):
-if VT100 and not self.ar.ns:
 atexit.register(self.cleanup_vt100)
+if VT100 and not self.ar.ns:
 ss.scroll_region(3)
 
 Daemon(self.hasher)

@@ -958,6 +1042,7 @@ class Ctl(object):
 Daemon(self.handshaker)
 Daemon(self.uploader)
 
+last_sp = -1
 while True:
 with self.exit_cond:
 self.exit_cond.wait(0.07)

@@ -996,6 +1081,12 @@ class Ctl(object):
 else:
 txt = " "
 
+if not VT100: # OSC9;4 (taskbar-progress)
+sp = int(self.up_b * 100 / self.nbytes) or 1
+if last_sp != sp:
+last_sp = sp
+txt += "\033]9;4;1;%d\033\\" % (sp,)
+
 if not self.up_br:
 spd = self.hash_b / ((time.time() - self.t0) or 1)
 eta = (self.nbytes - self.hash_b) / (spd or 1)
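With the status panel off (the `not VT100` branch), each status update may now also write `ESC ] 9 ; 4 ; 1 ; <percent> ESC \`. `VT100` is defined elsewhere and is presumably false on Windows. This is the OSC 9;4 progress sequence that terminals such as ConEmu and Windows Terminal use to show progress on the taskbar. It is only re-sent when the integer percentage changes, and `cleanup_vt100` clears it with state `0`.

A sketch of that logic, using a hypothetical `TaskbarProgress` class that returns the escape strings instead of printing them:

```python
class TaskbarProgress:
    """Build OSC 9;4 taskbar-progress sequences, skipping unchanged percentages."""

    def __init__(self):
        self.last_sp = -1

    def update(self, done, total):
        sp = int(done * 100 / total) or 1  # 0% is reported as 1%, as in the diff
        if sp == self.last_sp:
            return ""
        self.last_sp = sp
        return "\033]9;4;1;%d\033\\" % (sp,)  # state 1: normal progress

    @staticmethod
    def clear():
        return "\033]9;4;0\033\\"  # state 0: remove the progress indicator


tp = TaskbarProgress()
seq = [tp.update(n, 1000) for n in (0, 5, 500, 501)]
print(seq)  # ['\x1b]9;4;1;1\x1b\\', '', '\x1b]9;4;1;50\x1b\\', '']
```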

@@ -1006,18 +1097,25 @@ class Ctl(object):
 
 spd = humansize(spd)
 self.eta = str(datetime.timedelta(seconds=int(eta)))
+if eta > 2591999:
+self.eta = self.eta.split(",")[0] # truncate HH:MM:SS
 sleft = humansize(self.nbytes - self.up_b)
 nleft = self.nfiles - self.up_f
 tail = "\033[K\033[u" if VT100 and not self.ar.ns else "\r"
 
 t = "%s eta @ %s/s, %s, %d# left\033[K" % (self.eta, spd, sleft, nleft)
+if not self.hash_b:
+t = " now hashing..."
 eprint(txt + "\033]0;{0}\033\\\r{0}{1}".format(t, tail))
 
+if self.ar.wlist:
+self.at_hash = time.time() - self.t0
+
 if self.hash_b and self.at_hash:
 spd = humansize(self.hash_b / self.at_hash)
 eprint("\nhasher: %.2f sec, %s/s\n" % (self.at_hash, spd))
-if self.up_b and self.at_up:
-spd = humansize(self.up_b / self.at_up)
+if self.up_br and self.at_up:
+spd = humansize(self.up_br / self.at_up)
 eprint("upload: %.2f sec, %s/s\n" % (self.at_up, spd))
 
 if not self.recheck:
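`str(datetime.timedelta(...))` renders a duration of a day or more as `N days, H:MM:SS`. The new check keeps only the day count once the estimate exceeds 2591999 seconds (one second short of 30 days), where hours, minutes and seconds add little to the status line. The same formatting as a standalone function (`fmt_eta` is a hypothetical name):

```python
import datetime


def fmt_eta(eta):
    """Format an ETA given in seconds the way the status line does."""
    s = str(datetime.timedelta(seconds=int(eta)))
    if eta > 2591999:  # 30 days or more
        s = s.split(",")[0]  # truncate HH:MM:SS, keep "N days"
    return s


print(fmt_eta(3725))  # 1:02:05
print(fmt_eta(2591999))  # 29 days, 23:59:59
print(fmt_eta(3000000))  # 34 days
```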

@@ -1028,7 +1126,10 @@ class Ctl(object):
 handshake(self.ar, file, False)
 
 def cleanup_vt100(self):
+if VT100:
 ss.scroll_region(None)
+else:
+eprint("\033]9;4;0\033\\")
 eprint("\033[J\033]0;\033\\")
 
 def cb_hasher(self, file, ofs):

@@ -1043,7 +1144,9 @@ class Ctl(object):
 isdir = stat.S_ISDIR(inf.st_mode)
 if self.ar.z or self.ar.drd:
 rd = rel if isdir else os.path.dirname(rel)
-srd = rd.decode("utf-8", "replace").replace("\\", "/")
+srd = rd.decode("utf-8", "replace").replace("\\", "/").rstrip("/")
+if srd:
+srd += "/"
 if prd != rd:
 prd = rd
 ls = {}

@@ -1051,7 +1154,7 @@ class Ctl(object):
 print(" ls ~{0}".format(srd))
 zt = (
 self.ar.vtop,
-quotep(rd.replace(b"\\", b"/")).decode("utf-8", "replace"),
+quotep(rd.replace(b"\\", b"/")).decode("utf-8"),
 )
 sc, txt = web.req("GET", "%s%s?ls&lt&dots" % zt, {})
 if sc >= 400:

@@ -1060,13 +1163,16 @@ class Ctl(object):
 j = json.loads(txt)
 for f in j["dirs"] + j["files"]:
 rfn = f["href"].split("?")[0].rstrip("/")
-ls[unquote(rfn.encode("utf-8", "replace"))] = f
+ls[unquote(rfn.encode("utf-8", WTF8))] = f
 except Exception as ex:
 print(" mkdir ~{0} ({1})".format(srd, ex))
 
 if self.ar.drd:
 dp = os.path.join(top, rd)
+try:
 lnodes = set(os.listdir(dp))
+except:
+lnodes = list(ls) # fs eio; don't delete
 if ptn:
 zs = dp.replace(sep, b"/").rstrip(b"/") + b"/"
 zls = [zs + x for x in lnodes]

@@ -1074,12 +1180,12 @@ class Ctl(object):
 lnodes = [x.split(b"/")[-1] for x in zls]
 bnames = [x for x in ls if x not in lnodes and x != b".hist"]
 vpath = self.ar.url.split("://")[-1].split("/", 1)[-1]
-names = [x.decode("utf-8", "replace") for x in bnames]
-locs = [vpath + srd + "/" + x for x in names]
+names = [x.decode("utf-8", WTF8) for x in bnames]
+locs = [vpath + srd + x for x in names]
 while locs:
 req = locs
 while req:
-print("DELETING ~%s/#%s" % (srd, len(req)))
+print("DELETING ~%s#%s" % (srd, len(req)))
 body = json.dumps(req).encode("utf-8")
 sc, txt = web.req(
 "POST", self.ar.url + "?delete", {}, body, MJ
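In these hunks `srd` changes from a bare relative directory into either an empty string (the upload root) or a path ending in exactly one `/`, so names are appended as `vpath + srd + name`. Under the old `vpath + srd + "/" + name`, files directly in the root got a doubled slash, or a leading one when `vpath` was empty.

A sketch comparing both joins. `old_join` and `new_join` are hypothetical helpers. `vpath="foo/"` is an assumed value, of the kind the `self.ar.url.split(...)` line produces for a URL ending in `/foo/`:

```python
def old_join(vpath, rd, name):
    # previous behavior: srd had no trailing slash, and "/" was always inserted
    srd = rd.decode("utf-8", "replace").replace("\\", "/")
    return vpath + srd + "/" + name


def new_join(vpath, rd, name):
    # new behavior: srd is "" for the root, otherwise it ends with exactly one "/"
    srd = rd.decode("utf-8", "replace").replace("\\", "/").rstrip("/")
    if srd:
        srd += "/"
    return vpath + srd + name


for rd in (b"", b"sub", b"sub\\deeper"):
    print(old_join("foo/", rd, "x.txt"), new_join("foo/", rd, "x.txt"))
# foo//x.txt foo/x.txt
# foo/sub/x.txt foo/sub/x.txt
# foo/sub/deeper/x.txt foo/sub/deeper/x.txt
```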

@@ -1136,10 +1242,16 @@ class Ctl(object):
 self.up_b = self.hash_b
 
 if self.ar.wlist:
+vp = file.rel.decode("utf-8")
+if self.ar.chs:
+zsl = [
+"%s %d %d" % (zsii[0], n, zsii[1])
+for n, zsii in enumerate(file.cids)
+]
+print("chs: %s\n%s" % (vp, "\n".join(zsl)))
 zsl = [self.ar.wsalt, str(file.size)] + [x[0] for x in file.kchunks]
 zb = hashlib.sha512("\n".join(zsl).encode("utf-8")).digest()[:33]
 wark = ub64enc(zb).decode("utf-8")
-vp = file.rel.decode("utf-8")
 if self.ar.jw:
 print("%s %s" % (wark, vp))
 else:

@@ -1177,6 +1289,7 @@ class Ctl(object):
 self.q_upload.put(None)
 return
 
+chunksz = up2k_chunksize(file.size)
 upath = file.abs.decode("utf-8", "replace")
 if not VT100:
 upath = upath.lstrip("\\?")

@@ -1236,9 +1349,14 @@ class Ctl(object):
 file.up_c -= len(hs)
 for cid in hs:
 sz = file.kchunks[cid][1]
+self.up_br -= sz
 self.up_b -= sz
 file.up_b -= sz
 
+if hs and not file.up_b:
+# first hs of this file; is this an upload resume?
+file.up_b = chunksz * max(0, len(file.kchunks) - len(hs))
+
 file.ucids = hs
 
 if not hs:
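`hs` lists the chunks the server still needs, so on the first handshake of a file every other chunk is presumably already on the server. The new lines seed the per-file `file.up_b` with `chunksz` times that count, so the progress of a resumed upload starts where the earlier attempt stopped. Because the final chunk is usually shorter than `chunksz`, this is an estimate.

The sketch below shows the arithmetic. `resumed_bytes` is a hypothetical name, and `ceil(filesize / chunksz)` stands in for `len(file.kchunks)`:

```python
import math

MiB = 1024 * 1024


def resumed_bytes(filesize, chunksz, n_missing):
    """Estimate how many bytes of a file the server already has."""
    n_total = math.ceil(filesize / chunksz)  # stand-in for len(file.kchunks)
    return chunksz * max(0, n_total - n_missing)


# 10 MiB file, 1 MiB chunks, server still wants 4 chunks -> 6 MiB already there
print(resumed_bytes(10 * MiB, MiB, 4) // MiB)  # 6
# 9.5 MiB file (last chunk 0.5 MiB) with one full chunk missing: the true
# amount on the server is 8.5 MiB, but the estimate counts 9 full chunks
print(resumed_bytes(int(9.5 * MiB), MiB, 1) / MiB)  # 9.0
```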

@@ -1252,7 +1370,7 @@ class Ctl(object):
 c1 = c2 = ""
 
 spd_h = humansize(file.size / file.t_hash, True)
-if file.up_b:
+if file.up_c:
 t_up = file.t1_up - file.t0_up
 spd_u = humansize(file.size / t_up, True)
 

@@ -1262,14 +1380,13 @@ class Ctl(object):
 t = " found %s %s(%.2fs,%s/s)%s"
 print(t % (upath, c1, file.t_hash, spd_h, c2))
 else:
-kw = "uploaded" if file.up_b else " found"
+kw = "uploaded" if file.up_c else " found"
 print("{0} {1}".format(kw, upath))
 
 self._check_if_done()
 continue
 
-chunksz = up2k_chunksize(file.size)
-njoin = (self.ar.sz * 1024 * 1024) // chunksz
+njoin = self.ar.sz // chunksz
 cs = hs[:]
 while cs:
 fsl = FileSlice(file, cs[:1])

@@ -1321,7 +1438,7 @@ class Ctl(object):
 )
 
 try:
-upload(fsl, stats)
+upload(fsl, stats, self.ar.szm)
 except Exception as ex:
 t = "upload failed, retrying: %s #%s+%d (%s)\n"
 eprint(t % (file.name, cids[0][:8], len(cids) - 1, ex))

@@ -1365,7 +1482,7 @@ def main():
 cores = (os.cpu_count() if hasattr(os, "cpu_count") else 0) or 2
 hcores = min(cores, 3) # 4% faster than 4+ on py3.9 @ r5-4500U
 
-ver = "{0} v{1} https://youtu.be/BIcOO6TLKaY".format(S_BUILD_DT, S_VERSION)
+ver = "{0}, v{1}".format(S_BUILD_DT, S_VERSION)
 if "--version" in sys.argv:
 print(ver)
 return

@@ -1403,14 +1520,17 @@ source file/folder selection uses rsync syntax, meaning that:
 
 ap = app.add_argument_group("file-ID calculator; enable with url '-' to list warks (file identifiers) instead of upload/search")
 ap.add_argument("--wsalt", type=unicode, metavar="S", default="hunter2", help="salt to use when creating warks; must match server config")
+ap.add_argument("--chs", action="store_true", help="verbose (print the hash/offset of each chunk in each file)")
 ap.add_argument("--jw", action="store_true", help="just identifier+filepath, not mtime/size too")
 
 ap = app.add_argument_group("performance tweaks")
 ap.add_argument("-j", type=int, metavar="CONNS", default=2, help="parallel connections")
 ap.add_argument("-J", type=int, metavar="CORES", default=hcores, help="num cpu-cores to use for hashing; set 0 or 1 for single-core hashing")
 ap.add_argument("--sz", type=int, metavar="MiB", default=64, help="try to make each POST this big")
+ap.add_argument("--szm", type=int, metavar="MiB", default=96, help="max size of each POST (default is cloudflare max)")
 ap.add_argument("-nh", action="store_true", help="disable hashing while uploading")
 ap.add_argument("-ns", action="store_true", help="no status panel (for slow consoles and macos)")
+ap.add_argument("--cxp", type=float, metavar="SEC", default=57, help="assume http connections expired after SEConds")
 ap.add_argument("--cd", type=float, metavar="SEC", default=5, help="delay before reattempting a failed handshake/upload")
 ap.add_argument("--safe", action="store_true", help="use simple fallback approach")
 ap.add_argument("-z", action="store_true", help="ZOOMIN' (skip uploading files if they exist at the destination with the ~same last-modified timestamp, so same as yolo / turbo with date-chk but even faster)")
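The following hunk adds a workaround for msys2. According to the code comment, msys2 does not convert absolute POSIX-style paths containing whitespace into Windows paths before handing them to a native program. On non-VT100 systems, an argument such as `/c/Users/me/my files` is therefore rewritten to `c:\Users\me\my files`, and all other arguments only get their `/` turned into `\`.

Below is the same transformation as a standalone function (`uncygpath` is a hypothetical name). Note that the pattern only matches lowercase drive letters:

```python
import re


def uncygpath(fn):
    """Rewrite an msys2-style '/c/...' path to 'c:\\...' and use backslashes."""
    if re.search("^/[a-z]/", fn):
        fn = r"%s:\%s" % (fn[1:2], fn[3:])  # '/c/rest' -> 'c:\rest'
    return fn.replace("/", "\\")


print(uncygpath("/c/Users/me/my files"))  # c:\Users\me\my files
print(uncygpath("rel/dir"))  # rel\dir
print(uncygpath("/C/upper"))  # \C\upper  (uppercase drive letters are not matched)
```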

@@ -1430,12 +1550,47 @@ source file/folder selection uses rsync syntax, meaning that:
 except:
 pass
 
+# msys2 doesn't uncygpath absolute paths with whitespace
+if not VT100:
+zsl = []
+for fn in ar.files:
+if re.search("^/[a-z]/", fn):
+fn = r"%s:\%s" % (fn[1:2], fn[3:])
+zsl.append(fn.replace("/", "\\"))
+ar.files = zsl
+
+fok = []
+fng = []
+for fn in ar.files:
+if os.path.exists(fn):
+fok.append(fn)
+elif VT100:
+fng.append(fn)
+else:
+# windows leaves glob-expansion to the invoked process... okayyy let's get to work
+from glob import glob
+
+fns = glob(fn)
+if fns: