Compare commits

125 Commits

| SHA1 |
|---|
| e4acddc23b |
| 2b2d8e4e02 |
| 5501d49032 |
| fa54b2eec4 |
| cb0160021f |
| 93a723d588 |
| 8ebe1fb5e8 |
| 2acdf685b1 |
| 9f122ccd16 |
| 03be26fafc |
| df5d309d6e |
| c355f9bd91 |
| 9c28ba417e |
| 705b58c741 |
| 510302d667 |
| 025a537413 |
| 60a1ff0fc0 |
| f94a0b1bff |
| 4ccfeeb2cd |
| 2646f6a4f2 |
| b286ab539e |
| 2cca6e0922 |
| db51f1b063 |
| d979c47f50 |
| e64b87b99b |
| b985011a00 |
| c2ed2314c8 |
| cd496658c3 |
| deca082623 |
| 0ea8bb7c83 |
| 1fb251a4c2 |
| 4295923b76 |
| 572aa4b26c |
| b1359f039f |
| 867d8ee49e |
| 04c86e8a89 |
| bc0cb43ef9 |
| 769454fdce |
| 4ee81af8f6 |
| 8b0e66122f |
| 8a98efb929 |
| b6fd555038 |
| 7eb413ad51 |
| 4421d509eb |
| 793ffd7b01 |
| 1e22222c60 |
| 544e0549bc |
| 83178d0836 |
| c44f5f5701 |
| 138f5bc989 |
| e4759f86ef |
| d71416437a |
| a84c583b2c |
| cdacdccdb8 |
| d3ccd3f174 |
| cb6de0387d |
| abff40519d |
| 55c74ad164 |
| 673b4f7e23 |
| d11e02da49 |
| 8790f89e08 |
| 33442026b8 |
| 03193de6d0 |
| 8675ff40f3 |
| d88889d3fc |
| 6f244d4335 |
| cacca663b3 |
| d5109be559 |
| d999f06bb9 |
| a1a8a8c7b5 |
| fdd6f3b4a6 |
| f5191973df |
| ddbaebe779 |
| 42099baeff |
| 2459965ca8 |
| 6acf436573 |
| f217e1ce71 |
| 418000aee3 |
| dbbba9625b |
| 397bc92fbc |
| 6e615dcd03 |
| 9ac5908b33 |
| 50912480b9 |
| 24b9b8319d |
| b0f4f0b653 |
| 05bbd41c4b |
| 8f5f8a3cda |
| c8938fc033 |
| 1550350e05 |
| 5cc190c026 |
| d6a0a738ce |
| f5fe3678ee |
| f2a7925387 |
| fa953ced52 |
| f0000d9861 |
| 4e67516719 |
| 29db7a6270 |
| 852499e296 |
| f1775fd51c |
| 4bb306932a |
| 2a37e81bd8 |
| 6a312ca856 |
| e7f3e475a2 |
| 854ba0ec06 |
| 209b49d771 |
| 949baae539 |
| 5f4ea27586 |
| 099cc97247 |
| 592b7d6315 |
| 0880bf55a1 |
| 4cbffec0ec |
| cc355417d4 |
| e2bc573e61 |
| 41c0376177 |
| c01cad091e |
| eb349f339c |
| 24d8caaf3e |
| 5ac2c20959 |
| bb72e6bf30 |
| d8142e866a |
| 7b7979fd61 |
| 749616d09d |
| 5485c6d7ca |
| b7aea38d77 |
| 0ecd9f99e6 |

`.github/pull_request_template.md` (vendored, 2 changes)

````
@@ -1,2 +1,2 @@
Please include the following text somewhere in this PR description:
To show that your contribution is compatible with the MIT License, please include the following text somewhere in this PR description:
This PR complies with the DCO; https://developercertificate.org/
````

`.gitignore` (vendored, 7 changes)

````
@@ -21,6 +21,9 @@ copyparty.egg-info/
# winmerge
*.bak

# apple pls
.DS_Store

# derived
copyparty/res/COPYING.txt
copyparty/web/deps/
````

````
@@ -34,3 +37,7 @@ up.*.txt
.hist/
scripts/docker/*.out
scripts/docker/*.err
/perf.*

# nix build output link
result
````

`.vscode/launch.py` (vendored, 10 changes)

````
@@ -30,9 +30,17 @@ except:

argv = [os.path.expanduser(x) if x.startswith("~") else x for x in argv]

sfx = ""
if len(sys.argv) > 1 and os.path.isfile(sys.argv[1]):
    sfx = sys.argv[1]
    sys.argv = [sys.argv[0]] + sys.argv[2:]

argv += sys.argv[1:]

if re.search(" -j ?[0-9]", " ".join(argv)):
if sfx:
    argv = [sys.executable, sfx] + argv
    sp.check_call(argv)
elif re.search(" -j ?[0-9]", " ".join(argv)):
    argv = [sys.executable, "-m", "copyparty"] + argv
    sp.check_call(argv)
else:
````
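The branch order in the launcher hunk can be read as a small pure function. The sketch below is only an illustration: `pick_command` and its parameters are hypothetical names, and returning `None` stands in for the in-process `else:` branch, whose body is not part of this hunk.

```python
import re
from typing import List, Optional


def pick_command(argv: List[str], sfx: str, executable: str) -> Optional[List[str]]:
    """Return the command the launcher would spawn, or None to run in-process.

    Hypothetical helper mirroring the if/elif/else order in .vscode/launch.py.
    """
    if sfx:
        # an sfx file was passed as the first CLI argument: run that file instead
        return [executable, sfx] + argv
    # same regex as launch.py: " -j", an optional space, then a digit
    if re.search(" -j ?[0-9]", " ".join(argv)):
        # multiprocessing requested: spawn `python -m copyparty` as a subprocess
        return [executable, "-m", "copyparty"] + argv
    # the else-branch (not shown in the hunk) runs copyparty in-process
    return None
```

Because the pattern starts with a space, it does not match when `-j` is the very first element of `argv` (for example `["-j4"]` on its own).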

`.vscode/settings.json` (vendored, 32 changes)

````
@@ -35,34 +35,18 @@
    "python.linting.flake8Enabled": true,
    "python.linting.banditEnabled": true,
    "python.linting.mypyEnabled": true,
    "python.linting.mypyArgs": [
        "--ignore-missing-imports",
        "--follow-imports=silent",
        "--show-column-numbers",
        "--strict"
    ],
    "python.linting.flake8Args": [
        "--max-line-length=120",
        "--ignore=E722,F405,E203,W503,W293,E402,E501,E128",
        "--ignore=E722,F405,E203,W503,W293,E402,E501,E128,E226",
    ],
    "python.linting.banditArgs": [
        "--ignore=B104"
    ],
    "python.linting.pylintArgs": [
        "--disable=missing-module-docstring",
        "--disable=missing-class-docstring",
        "--disable=missing-function-docstring",
        "--disable=import-outside-toplevel",
        "--disable=wrong-import-position",
        "--disable=raise-missing-from",
        "--disable=bare-except",
        "--disable=broad-except",
        "--disable=invalid-name",
        "--disable=line-too-long",
        "--disable=consider-using-f-string"
        "--ignore=B104,B110,B112"
    ],
    // python3 -m isort --py=27 --profile=black copyparty/
    "python.formatting.provider": "black",
    "python.formatting.provider": "none",
    "[python]": {
        "editor.defaultFormatter": "ms-python.black-formatter"
    },
    "editor.formatOnSave": true,
    "[html]": {
        "editor.formatOnSave": false,
````

````
@@ -74,10 +58,6 @@
    "files.associations": {
        "*.makefile": "makefile"
    },
    "python.formatting.blackArgs": [
        "-t",
        "py27"
    ],
    "python.linting.enabled": true,
    "python.pythonPath": "/usr/bin/python3"
}
````

`README.md` (349 changes)

````
@@ -1,29 +1,16 @@
# 💾🎉 copyparty

* portable file sharing hub (py2/py3) [(on PyPI)](https://pypi.org/project/copyparty/)
* MIT-Licensed, 2019-05-26, ed @ irc.rizon.net
turn almost any device into a file server with resumable uploads/downloads using [*any*](#browser-support) web browser

* server only needs Python (2 or 3), all dependencies optional
* 🔌 protocols: [http](#the-browser) // [ftp](#ftp-server) // [webdav](#webdav-server) // [smb/cifs](#smb-server)
* 📱 [android app](#android-app) // [iPhone shortcuts](#ios-shortcuts)

## summary

turn your phone or raspi into a portable file server with resumable uploads/downloads using *any* web browser

* server only needs Python (`2.7` or `3.3+`), all dependencies optional
* browse/upload with [IE4](#browser-support) / netscape4.0 on win3.11 (heh)
* protocols: [http](#the-browser) // [ftp](#ftp-server) // [webdav](#webdav-server) // [smb/cifs](#smb-server)

**[Get started](#quickstart)!** or visit the **[read-only demo server](https://a.ocv.me/pub/demo/)** 👀 running from a basement in finland
👉 **[Get started](#quickstart)!** or visit the **[read-only demo server](https://a.ocv.me/pub/demo/)** 👀 running from a basement in finland

📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [unpost](#unpost) // [thumbnails](#thumbnails) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [md-viewer](#markdown-viewer)


## get the app

<a href="https://f-droid.org/packages/me.ocv.partyup/"><img src="https://ocv.me/fdroid.png" alt="Get it on F-Droid" height="50" /> '' <img src="https://img.shields.io/f-droid/v/me.ocv.partyup.svg" alt="f-droid version info" /></a> '' <a href="https://github.com/9001/party-up"><img src="https://img.shields.io/github/release/9001/party-up.svg?logo=github" alt="github version info" /></a>

(the app is **NOT** the full copyparty server! just a basic upload client, nothing fancy yet)


## readme toc

* top
````

````
@@ -52,6 +39,9 @@ turn your phone or raspi into a portable file server with resumable uploads/down
* [self-destruct](#self-destruct) - uploads can be given a lifetime
* [file manager](#file-manager) - cut/paste, rename, and delete files/folders (if you have permission)
* [batch rename](#batch-rename) - select some files and press `F2` to bring up the rename UI
* [media player](#media-player) - plays almost every audio format there is
* [audio equalizer](#audio-equalizer) - bass boosted
* [fix unreliable playback on android](#fix-unreliable-playback-on-android) - due to phone / app settings
* [markdown viewer](#markdown-viewer) - and there are *two* editors
* [other tricks](#other-tricks)
* [searching](#searching) - search by size, date, path/name, mp3-tags, ...
````

````
@@ -80,18 +70,26 @@ turn your phone or raspi into a portable file server with resumable uploads/down
* [themes](#themes)
* [complete examples](#complete-examples)
* [reverse-proxy](#reverse-proxy) - running copyparty next to other websites
* [packages](#packages) - the party might be closer than you think
* [arch package](#arch-package) - now [available on aur](https://aur.archlinux.org/packages/copyparty) maintained by [@icxes](https://github.com/icxes)
* [nix package](#nix-package) - `nix profile install github:9001/copyparty`
* [nixos module](#nixos-module)
* [browser support](#browser-support) - TLDR: yes
* [client examples](#client-examples) - interact with copyparty using non-browser clients
* [folder sync](#folder-sync) - sync folders to/from copyparty
* [mount as drive](#mount-as-drive) - a remote copyparty server as a local filesystem
* [android app](#android-app) - upload to copyparty with one tap
* [iOS shortcuts](#iOS-shortcuts) - there is no iPhone app, but
* [performance](#performance) - defaults are usually fine - expect `8 GiB/s` download, `1 GiB/s` upload
* [client-side](#client-side) - when uploading files
* [security](#security) - some notes on hardening
* [gotchas](#gotchas) - behavior that might be unexpected
* [cors](#cors) - cross-site request config
* [https](#https) - both HTTP and HTTPS are accepted
* [recovering from crashes](#recovering-from-crashes)
* [client crashes](#client-crashes)
* [frefox wsod](#frefox-wsod) - firefox 87 can crash during uploads
* [HTTP API](#HTTP-API) - see [devnotes](#./docs/devnotes.md#http-api)
* [HTTP API](#HTTP-API) - see [devnotes](./docs/devnotes.md#http-api)
* [dependencies](#dependencies) - mandatory deps
* [optional dependencies](#optional-dependencies) - install these to enable bonus features
* [optional gpl stuff](#optional-gpl-stuff)
````

````
@@ -106,8 +104,9 @@ turn your phone or raspi into a portable file server with resumable uploads/down

just run **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** -- that's it! 🎉

* or install through pypi (python3 only): `python3 -m pip install --user -U copyparty`
* or install through pypi: `python3 -m pip install --user -U copyparty`
* or if you cannot install python, you can use [copyparty.exe](#copypartyexe) instead
* or install [on arch](#arch-package) ╱ [on NixOS](#nixos-module) ╱ [through nix](#nix-package)
* or if you are on android, [install copyparty in termux](#install-on-android)
* or if you prefer to [use docker](./scripts/docker/) 🐋 you can do that too
* docker has all deps built-in, so skip this step:
````

````
@@ -127,6 +126,8 @@ enable thumbnails (images/audio/video), media indexing, and audio transcoding by

running copyparty without arguments (for example doubleclicking it on Windows) will give everyone read/write access to the current folder; you may want [accounts and volumes](#accounts-and-volumes)

or see [some usage examples](#complete-examples) for inspiration, or the [complete windows example](./docs/examples/windows.md)

some recommended options:
* `-e2dsa` enables general [file indexing](#file-indexing)
* `-e2ts` enables audio metadata indexing (needs either FFprobe or Mutagen)
````

````
@@ -139,10 +140,11 @@ some recommended options:

you may also want these, especially on servers:

* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service
* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service (see guide inside)
* [contrib/systemd/prisonparty.service](contrib/systemd/prisonparty.service) to run it in a chroot (for extra security)
* [contrib/rc/copyparty](contrib/rc/copyparty) to run copyparty on FreeBSD
* [contrib/nginx/copyparty.conf](contrib/nginx/copyparty.conf) to [reverse-proxy](#reverse-proxy) behind nginx (for better https)
* [nixos module](#nixos-module) to run copyparty on NixOS hosts

and remember to open the ports you want; here's a complete example including every feature copyparty has to offer:
```
````

````
@@ -177,7 +179,7 @@ firewall-cmd --reload
* ☑ write-only folders
* ☑ [unpost](#unpost): undo/delete accidental uploads
* ☑ [self-destruct](#self-destruct) (specified server-side or client-side)
* ☑ symlink/discard existing files (content-matching)
* ☑ symlink/discard duplicates (content-matching)
* download
* ☑ single files in browser
* ☑ [folders as zip / tar files](#zip-downloads)
````

````
@@ -199,7 +201,7 @@ firewall-cmd --reload
* ☑ search by name/path/date/size
* ☑ [search by ID3-tags etc.](#searching)
* client support
* ☑ [sync folder to server](https://github.com/9001/copyparty/tree/hovudstraum/bin#up2kpy)
* ☑ [folder sync](#folder-sync)
* ☑ [curl-friendly](https://user-images.githubusercontent.com/241032/215322619-ea5fd606-3654-40ad-94ee-2bc058647bb2.png)
* markdown
* ☑ [viewer](#markdown-viewer)
````

````
@@ -276,9 +278,11 @@ server notes:

* [Firefox issue 1790500](https://bugzilla.mozilla.org/show_bug.cgi?id=1790500) -- entire browser can crash after uploading ~4000 small files

* Android: music playback randomly stops due to [battery usage settings](#fix-unreliable-playback-on-android)

* iPhones: the volume control doesn't work because [apple doesn't want it to](https://developer.apple.com/library/archive/documentation/AudioVideo/Conceptual/Using_HTML5_Audio_Video/Device-SpecificConsiderations/Device-SpecificConsiderations.html#//apple_ref/doc/uid/TP40009523-CH5-SW11)
* *future workaround:* enable the equalizer, make it all-zero, and set a negative boost to reduce the volume
* "future" because `AudioContext` is broken in the current iOS version (15.1), maybe one day...
* "future" because `AudioContext` can't maintain a stable playback speed in the current iOS version (15.7), maybe one day...

* Windows: folders cannot be accessed if the name ends with `.`
* python or windows bug
````

````
@@ -301,7 +305,7 @@ upgrade notes
* http-api: delete/move is now `POST` instead of `GET`
* everything other than `GET` and `HEAD` must pass [cors validation](#cors)
* `1.5.0` (2022-12-03): [new chunksize formula](https://github.com/9001/copyparty/commit/54e1c8d261df) for files larger than 128 GiB
* **users:** upgrade to the latest [cli uploader](https://github.com/9001/copyparty/blob/hovudstraum/bin/up2k.py) if you use that
* **users:** upgrade to the latest [cli uploader](https://github.com/9001/copyparty/blob/hovudstraum/bin/u2c.py) if you use that
* **devs:** update third-party up2k clients (if those even exist)


````

@@ -467,6 +471,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path (

|

||||

## thumbnails

|

||||

|

||||

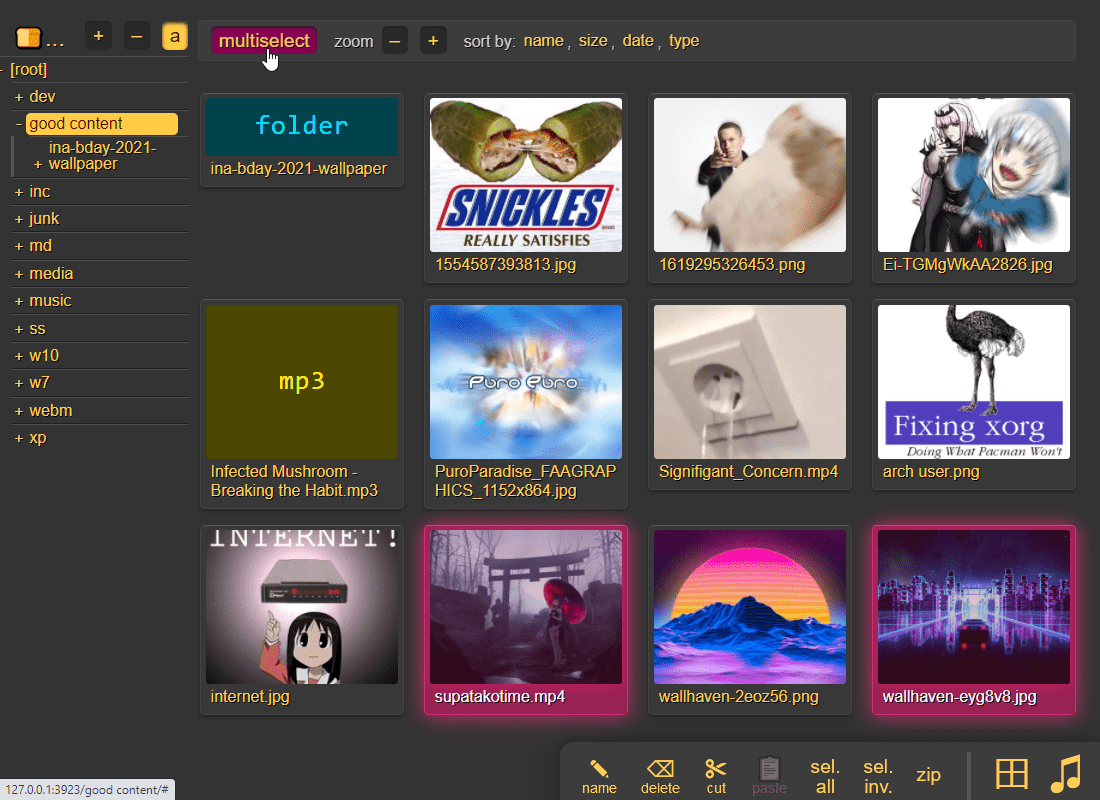

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails

|

||||

* can be made default globally with `--grid` or per-volume with volflag `grid`

|

||||

|

||||

|

||||

|

||||

````
@@ -477,6 +482,7 @@ it does static images with Pillow / pyvips / FFmpeg, and uses FFmpeg for video f
audio files are converted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

images with the following names (see `--th-covers`) become the thumbnail of the folder they're in: `folder.png`, `folder.jpg`, `cover.png`, `cover.jpg`
* and, if you enable [file indexing](#file-indexing), all remaining folders will also get thumbnails (as long as they contain any pics at all)

in the grid/thumbnail view, if the audio player panel is open, songs will start playing when clicked
* indicated by the audio files having the ▶ icon instead of 💾
````
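The folder-cover rule in this hunk amounts to a lookup over a fixed list of names. A minimal sketch, assuming that matching is case-insensitive and that the names are tried in the order the README lists them (neither detail is stated in the diff); `pick_folder_cover` is a hypothetical helper, not copyparty's code.

```python
from typing import Iterable, Optional, Sequence

# names from the README; the priority order used here is an assumption
DEFAULT_COVERS = ("folder.png", "folder.jpg", "cover.png", "cover.jpg")


def pick_folder_cover(
    filenames: Iterable[str], covers: Sequence[str] = DEFAULT_COVERS
) -> Optional[str]:
    """Return the first file in `filenames` matching a cover name, else None."""
    # map lowercase name -> original name (case-insensitive matching is assumed)
    present = {name.lower(): name for name in filenames}
    for candidate in covers:
        hit = present.get(candidate.lower())
        if hit is not None:
            return hit
    return None
```

Here `covers` loosely plays the role of `--th-covers`; the fallback for folders without a cover image (thumbnails from other pictures when file indexing is on) is not modelled.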

````
@@ -508,7 +514,7 @@ you can also zip a selection of files or folders by clicking them in the browser

## uploading

drag files/folders into the web-browser to upload (or use the [command-line uploader](https://github.com/9001/copyparty/tree/hovudstraum/bin#up2kpy))
drag files/folders into the web-browser to upload (or use the [command-line uploader](https://github.com/9001/copyparty/tree/hovudstraum/bin#u2cpy))

this initiates an upload using `up2k`; there are two uploaders available:
* `[🎈] bup`, the basic uploader, supports almost every browser since netscape 4.0
````

````
@@ -658,12 +664,68 @@ or a mix of both:
the metadata keys you can use in the format field are the ones in the file-browser table header (whatever is collected with `-mte` and `-mtp`)


## media player

plays almost every audio format there is (if the server has FFmpeg installed for on-demand transcoding)

the following audio formats are usually always playable, even without FFmpeg: `aac|flac|m4a|mp3|ogg|opus|wav`

some highlights:
* OS integration; control playback from your phone's lockscreen ([windows](https://user-images.githubusercontent.com/241032/233213022-298a98ba-721a-4cf1-a3d4-f62634bc53d5.png) // [iOS](https://user-images.githubusercontent.com/241032/142711926-0700be6c-3e31-47b3-9928-53722221f722.png) // [android](https://user-images.githubusercontent.com/241032/233212311-a7368590-08c7-4f9f-a1af-48ccf3f36fad.png))
* shows the audio waveform in the seekbar
* not perfectly gapless but can get really close (see settings + eq below); good enough to enjoy gapless albums as intended

click the `play` link next to an audio file, or copy the link target to [share it](https://a.ocv.me/pub/demo/music/Ubiktune%20-%20SOUNDSHOCK%202%20-%20FM%20FUNK%20TERRROR!!/#af-1fbfba61&t=18) (optionally with a timestamp to start playing from, like that example does)

open the `[🎺]` media-player-settings tab to configure it,
* switches:
* `[preload]` starts loading the next track when it's about to end, reduces the silence between songs
* `[full]` does a full preload by downloading the entire next file; good for unreliable connections, bad for slow connections
* `[~s]` toggles the seekbar waveform display
* `[/np]` enables buttons to copy the now-playing info as an irc message
* `[os-ctl]` makes it possible to control audio playback from the lockscreen of your device (enables [mediasession](https://developer.mozilla.org/en-US/docs/Web/API/MediaSession))
* `[seek]` allows seeking with lockscreen controls (buggy on some devices)
* `[art]` shows album art on the lockscreen
* `[🎯]` keeps the playing song scrolled into view (good when using the player as a taskbar dock)
* `[⟎]` shrinks the playback controls
* playback mode:
* `[loop]` keeps looping the folder
* `[next]` plays into the next folder
* transcode:
* `[flac]` converts `flac` and `wav` files into opus
* `[aac]` converts `aac` and `m4a` files into opus
* `[oth]` converts all other known formats into opus
* `aac|ac3|aif|aiff|alac|alaw|amr|ape|au|dfpwm|dts|flac|gsm|it|m4a|mo3|mod|mp2|mp3|mpc|mptm|mt2|mulaw|ogg|okt|opus|ra|s3m|tak|tta|ulaw|wav|wma|wv|xm|xpk`
* "tint" reduces the contrast of the playback bar


### audio equalizer

bass boosted

can also boost the volume in general, or increase/decrease stereo width (like [crossfeed](https://www.foobar2000.org/components/view/foo_dsp_meiercf) just worse)

has the convenient side-effect of reducing the pause between songs, so gapless albums play better with the eq enabled (just make it flat)


### fix unreliable playback on android

due to phone / app settings, android phones may randomly stop playing music when the power saver kicks in, especially at the end of an album -- you can fix it by [disabling power saving](https://user-images.githubusercontent.com/241032/235262123-c328cca9-3930-4948-bd18-3949b9fd3fcf.png) in the [app settings](https://user-images.githubusercontent.com/241032/235262121-2ffc51ae-7821-4310-a322-c3b7a507890c.png) of the browser you use for music streaming (preferably a dedicated one)


## markdown viewer

and there are *two* editors




there is a built-in extension for inline clickable thumbnails;
* enable it by adding `<!-- th -->` somewhere in the doc
* add thumbnails with `!th[l](your.jpg)` where `l` means left-align (`r` = right-align)
* a single line with `---` clears the float / inlining
* in the case of README.md being displayed below a file listing, thumbnails will open in the gallery viewer

other notes,
* the document preview has a max-width which is the same as an A4 paper when printed


````
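The inline-thumbnail extension in the markdown-viewer part of this hunk has a small syntax: an opt-in `<!-- th -->` marker plus `!th[l](...)` / `!th[r](...)` tags. The sketch below only illustrates that syntax; the regex and `find_inline_thumbs` are assumptions, not copyparty's actual parser.

```python
import re
from typing import List, Tuple

# `!th[l](path)` = left-aligned, `!th[r](path)` = right-aligned (regex is an assumption)
TH_TAG = re.compile(r"!th\[([lr])\]\(([^)\s]+)\)")


def find_inline_thumbs(doc: str) -> List[Tuple[str, str]]:
    """Return (alignment, path) pairs; empty unless the doc contains `<!-- th -->`."""
    if "<!-- th -->" not in doc:
        return []  # the extension is opt-in per document
    return [("left" if a == "l" else "right", path) for a, path in TH_TAG.findall(doc)]
```

Clearing the float with a `---` line is a rendering concern and is not handled here.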

````
@@ -768,6 +830,13 @@ an FTP server can be started using `--ftp 3921`, and/or `--ftps` for explicit T
* some older software (filezilla on debian-stable) cannot passive-mode with TLS
* login with any username + your password, or put your password in the username field

some recommended FTP / FTPS clients; `wark` = example password:
* https://winscp.net/eng/download.php
* https://filezilla-project.org/ struggles a bit with ftps in active-mode, but is fine otherwise
* https://rclone.org/ does FTPS with `tls=false explicit_tls=true`
* `lftp -u k,wark -p 3921 127.0.0.1 -e ls`
* `lftp -u k,wark -p 3990 127.0.0.1 -e 'set ssl:verify-certificate no; ls'`


## webdav server

````
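The first `lftp` line in this hunk (user `k`, example password `wark`, port 3921) can also be reproduced with Python's standard `ftplib`. This is a sketch under assumptions: `list_root` is a hypothetical helper, and the `tls=True` path assumes the server offers explicit TLS (`AUTH TLS`), which is what the README's `--ftps` describes.

```python
import ftplib
from typing import Callable, List, Optional


def list_root(
    host: str,
    port: int,
    user: str,
    password: str,
    tls: bool = False,
    factory: Optional[Callable[[], ftplib.FTP]] = None,
) -> List[str]:
    """List the top-level directory, roughly like `lftp -u user,pass -p port host -e ls`."""
    if factory is None:
        # FTP_TLS upgrades the control connection with AUTH TLS during login
        factory = ftplib.FTP_TLS if tls else ftplib.FTP
    ftp = factory()
    ftp.connect(host, port, timeout=10)
    ftp.login(user, password)
    try:
        if tls:
            ftp.prot_p()  # also encrypt the data connection used for listings
        return ftp.nlst()  # ftplib uses passive mode by default
    finally:
        ftp.quit()


# e.g. list_root("127.0.0.1", 3921, "k", "wark")
```

The `factory` parameter exists only so the function can be exercised without a live server.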

````
@@ -862,14 +931,13 @@ through arguments:
* `--xlink` enables deduplication across volumes

the same arguments can be set as volflags, in addition to `d2d`, `d2ds`, `d2t`, `d2ts`, `d2v` for disabling:
* `-v ~/music::r:c,e2dsa,e2tsr` does a full reindex of everything on startup
* `-v ~/music::r:c,e2ds,e2tsr` does a full reindex of everything on startup
* `-v ~/music::r:c,d2d` disables **all** indexing, even if any `-e2*` are on
* `-v ~/music::r:c,d2t` disables all `-e2t*` (tags), does not affect `-e2d*`
* `-v ~/music::r:c,d2ds` disables on-boot scans; only index new uploads
* `-v ~/music::r:c,d2ts` same except only affecting tags

note:
* the parser can finally handle `c,e2dsa,e2tsr` so you no longer have to `c,e2dsa:c,e2tsr`
* `e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and `e2ts` would then reindex those, unless there is a new copyparty version with new parsers and the release note says otherwise
* the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher
* deduplication is possible on windows if you run copyparty as administrator (not saying you should!)
````
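The disabling volflags in this hunk interact with the `-e2*` options in a fixed way. The sketch below models only the rules stated here, plus one assumption that is not in this diff: that each longer `e2` flag switches on the shorter one it extends (`e2dsa` → `e2ds` → `e2d`, `e2tsr` → `e2ts` → `e2t`). `effective_flags` is a hypothetical helper, and `d2v` is left out.

```python
from typing import Iterable, Set

# assumed implication chain (not stated in this diff): longer flag -> flag it extends
IMPLIES = {"e2dsa": "e2ds", "e2ds": "e2d", "e2tsr": "e2ts", "e2ts": "e2t"}


def effective_flags(flags: Iterable[str]) -> Set[str]:
    """Return the indexing flags left enabled once the d2* overrides are applied."""
    given = set(flags)
    on = {f for f in given if f.startswith("e2")}
    # follow each implication chain to its end
    for f in list(on):
        while f in IMPLIES:
            f = IMPLIES[f]
            on.add(f)
    if "d2d" in given:
        return set()  # d2d: disables all indexing, even if any -e2* are on
    if "d2t" in given:
        on -= {"e2t", "e2ts", "e2tsr"}  # d2t: no tags; the file index is untouched
    if "d2ds" in given:
        on -= {"e2ds", "e2dsa"}  # d2ds: no on-boot scan; new uploads still indexed
    if "d2ts" in given:
        on -= {"e2ts", "e2tsr"}  # d2ts: same, but only for tags
    return on
```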

````
@@ -891,7 +959,11 @@ avoid traversing into other filesystems using `--xdev` / volflag `:c,xdev`, ski

and/or you can `--xvol` / `:c,xvol` to ignore all symlinks leaving the volume's top directory, but still allow bind-mounts pointing elsewhere

**NB: only affects the indexer** -- users can still access anything inside a volume, unless shadowed by another volume
* symlinks are permitted with `xvol` if they point into another volume where the user has the same level of access

these options will reduce performance; unlikely worst-case estimates are 14% reduction for directory listings, 35% for download-as-tar

as of copyparty v1.7.0 these options also prevent file access at runtime -- in previous versions it was just hints for the indexer

### periodic rescan

````

````
@@ -1087,7 +1159,33 @@ see the top of [./copyparty/web/browser.css](./copyparty/web/browser.css) where

## complete examples

* read-only music server
* see [running on windows](./docs/examples/windows.md) for a fancy windows setup

* or use any of the examples below, just replace `python copyparty-sfx.py` with `copyparty.exe` if you're using the exe edition

* allow anyone to download or upload files into the current folder:
`python copyparty-sfx.py`

* enable searching and music indexing with `-e2dsa -e2ts`

* start an FTP server on port 3921 with `--ftp 3921`

* announce it on your LAN with `-z` so it appears in windows/Linux file managers

* anyone can upload, but nobody can see any files (even the uploader):
`python copyparty-sfx.py -e2dsa -v .::w`

* block uploads if there's less than 4 GiB free disk space with `--df 4`

* show a popup on new uploads with `--xau bin/hooks/notify.py`

* anyone can upload, and receive "secret" links for each upload they do:
`python copyparty-sfx.py -e2dsa -v .::wG:c,fk=8`

* anyone can browse, only `kevin` (password `okgo`) can upload/move/delete files:
`python copyparty-sfx.py -e2dsa -a kevin:okgo -v .::r:rwmd,kevin`

* read-only music server:
`python copyparty-sfx.py -v /mnt/nas/music:/music:r -e2dsa -e2ts --no-robots --force-js --theme 2`

* ...with bpm and key scanning
````
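The `-v` arguments in these examples follow a `src:dst:perms[,users]:c,flag[=value]` pattern. The sketch below splits such a spec to show how the pieces line up. `parse_volume` is hypothetical and is not copyparty's parser; it treats a missing user list as "everyone" and an empty `dst` as the web root (both inferred from the examples), and it would break on Windows drive letters such as `C:\`.

```python
from typing import Dict, List, Tuple, Union

FlagValue = Union[str, bool]


def parse_volume(
    spec: str,
) -> Tuple[str, str, List[Tuple[str, List[str]]], Dict[str, FlagValue]]:
    """Split `src:dst:perms...` into (src, dst, [(perms, users)], volflags)."""
    src, dst, *rest = spec.split(":")
    perms: List[Tuple[str, List[str]]] = []
    volflags: Dict[str, FlagValue] = {}
    for part in rest:
        if part.startswith("c,"):
            # `c,fk=8` or `c,e2dsa,e2tsr`: volflags, optionally with a value
            for item in part[2:].split(","):
                key, sep, value = item.partition("=")
                volflags[key] = value if sep else True
        else:
            # `rwmd,kevin`: permission letters, then the users they apply to
            letters, *users = part.split(",")
            perms.append((letters, users or ["*"]))  # no users = everyone (assumed)
    # empty dst = mounted at the web root (assumed)
    return src, "/" + dst.strip("/"), perms, volflags
```

For instance, `.::r:rwmd,kevin` splits into read access for everyone plus `rwmd` for `kevin`, both on a volume mounted at `/`.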

@@ -1102,19 +1200,135 @@ see the top of [./copyparty/web/browser.css](./copyparty/web/browser.css) where

|

||||

|

||||

## reverse-proxy

|

||||

|

||||

running copyparty next to other websites hosted on an existing webserver such as nginx or apache

|

||||

running copyparty next to other websites hosted on an existing webserver such as nginx, caddy, or apache

|

||||

|

||||

you can either:

|

||||

* give copyparty its own domain or subdomain (recommended)

|

||||

* or do location-based proxying, using `--rp-loc=/stuff` to tell copyparty where it is mounted -- has a slight performance cost and higher chance of bugs

|

||||

* if copyparty says `incorrect --rp-loc or webserver config; expected vpath starting with [...]` it's likely because the webserver is stripping away the proxy location from the request URLs -- see the `ProxyPass` in the apache example below

|

||||

|

||||

some reverse proxies (such as [Caddy](https://caddyserver.com/)) can automatically obtain a valid https/tls certificate for you, and some support HTTP/2 and QUIC which could be a nice speed boost

|

||||

|

||||

example webserver configs:

|

||||

|

||||

* [nginx config](contrib/nginx/copyparty.conf) -- entire domain/subdomain

|

||||

* [apache2 config](contrib/apache/copyparty.conf) -- location-based

|

||||

|

||||

|

||||

# packages

|

||||

|

||||

the party might be closer than you think

|

||||

|

||||

|

||||

## arch package

|

||||

|

||||

now [available on aur](https://aur.archlinux.org/packages/copyparty) maintained by [@icxes](https://github.com/icxes)

|

||||

|

||||

|

||||

## nix package

|

||||

|

||||

`nix profile install github:9001/copyparty`

|

||||

|

||||

requires a [flake-enabled](https://nixos.wiki/wiki/Flakes) installation of nix

|

||||

|

||||

some recommended dependencies are enabled by default; [override the package](https://github.com/9001/copyparty/blob/hovudstraum/contrib/package/nix/copyparty/default.nix#L3-L22) if you want to add/remove some features/deps

|

||||

|

||||

`ffmpeg-full` was chosen over `ffmpeg-headless` mainly because we need `withWebp` (and `withOpenmpt` is also nice) and being able to use a cached build felt more important than optimizing for size at the time -- PRs welcome if you disagree 👍

|

||||

|

||||

|

||||

## nixos module

|

||||

|

||||

for this setup, you will need a [flake-enabled](https://nixos.wiki/wiki/Flakes) installation of NixOS.

|

||||

|

||||

```nix

|

||||

{

|

||||

# add copyparty flake to your inputs

|

||||

inputs.copyparty.url = "github:9001/copyparty";

|

||||

|

||||

# ensure that copyparty is an allowed argument to the outputs function

|

||||

outputs = { self, nixpkgs, copyparty }: {

|

||||

nixosConfigurations.yourHostName = nixpkgs.lib.nixosSystem {

|

||||

modules = [

|

||||

# load the copyparty NixOS module

|

||||

copyparty.nixosModules.default

|

||||

({ pkgs, ... }: {

|

||||

# add the copyparty overlay to expose the package to the module

|

||||

nixpkgs.overlays = [ copyparty.overlays.default ];

|

||||

# (optional) install the package globally

|

||||

environment.systemPackages = [ pkgs.copyparty ];

|

||||

# configure the copyparty module

|

||||

services.copyparty.enable = true;

|

||||

})

|

||||

];

|

||||

};

|

||||

};

|

||||

}

|

||||

```

|

||||

|

||||

copyparty on NixOS is configured via `services.copyparty` options, for example:

```nix
services.copyparty = {
  enable = true;
  # directly maps to values in the [global] section of the copyparty config.
  # see `copyparty --help` for available options
  settings = {
    i = "0.0.0.0";
    # use lists to set multiple values
    p = [ 3210 3211 ];
    # use booleans to set binary flags
    no-reload = true;
    # using 'false' will do nothing and omit the value when generating a config
    ignored-flag = false;
  };

  # create users
  accounts = {
    # specify the account name as the key
    ed = {
      # provide the path to a file containing the password, keeping it out of /nix/store
      # must be readable by the copyparty service user
      passwordFile = "/run/keys/copyparty/ed_password";
    };
    # or do both in one go
    k.passwordFile = "/run/keys/copyparty/k_password";
  };

  # create a volume
  volumes = {
    # create a volume at "/" (the webroot), which will
    "/" = {
      # share the contents of "/srv/copyparty"
      path = "/srv/copyparty";
      # see `copyparty --help-accounts` for available options
      access = {
        # everyone gets read-access, but
        r = "*";
        # users "ed" and "k" get read-write
        rw = [ "ed" "k" ];
      };
      # see `copyparty --help-flags` for available options
      flags = {
        # "fk" enables filekeys (necessary for upget permission) (4 chars long)
        fk = 4;
        # scan for new files every 60sec
        scan = 60;
        # volflag "e2d" enables the uploads database
        e2d = true;
        # "d2t" disables multimedia parsers (in case the uploads are malicious)
        d2t = true;
        # skips hashing file contents if path matches *.iso
        # (backslash doubled because nix turns "\." into a plain ".")
        nohash = "\\.iso$";
      };
    };
  };
  # you may increase the open file limit for the process
  openFilesLimit = 8192;
};
```

the passwordFile at /run/keys/copyparty/ could for example be generated by [agenix](https://github.com/ryantm/agenix), or you could just dump it in the nix store instead if that's acceptable

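The comments in the `settings` block state the mapping rules: the attribute set becomes the `[global]` section, lists give multiple values, `true` becomes a bare flag and `false` is left out. As a rough illustration, here is a Python sketch of that mapping for `settings` plus one volume. It is not the module's actual generator; the output layout (two-space indents, `accs:` and `flags:` sub-blocks, list values joined with `, `) is an assumption modelled on copyparty's config-file style, and accounts/`passwordFile` handling is left out, so compare against the config the module really writes before relying on it.

```python
from typing import Dict, List, Union

# a settings/flags value as written in the nix example
Value = Union[bool, int, str, List[Union[int, str]]]


def kv_lines(items: Dict[str, Value], indent: str) -> List[str]:
    """Render key/value pairs following the rules stated in the nix comments."""
    out: List[str] = []
    for key, val in items.items():
        if val is False:
            continue  # false: omitted from the generated config
        if val is True:
            out.append(indent + key)  # true: bare flag, no value
        elif isinstance(val, list):
            # several values; ASSUMPTION: joined with ", " on a single line
            out.append("%s%s: %s" % (indent, key, ", ".join(str(v) for v in val)))
        else:
            out.append("%s%s: %s" % (indent, key, val))
    return out


def render_config(settings: Dict[str, Value], volumes: Dict[str, dict]) -> str:
    """Build a [global] section plus one section per volume (layout is assumed)."""
    lines = ["[global]"] + kv_lines(settings, "  ")
    for vpath, vol in volumes.items():
        lines += ["", "[%s]" % vpath, "  " + vol["path"]]
        if vol.get("access"):
            lines += ["  accs:"] + kv_lines(vol["access"], "    ")
        if vol.get("flags"):
            lines += ["  flags:"] + kv_lines(vol["flags"], "    ")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_config(
        {"i": "0.0.0.0", "p": [3210, 3211], "no-reload": True, "ignored-flag": False},
        {"/": {
            "path": "/srv/copyparty",
            "access": {"r": "*", "rw": ["ed", "k"]},
            "flags": {"fk": 4, "scan": 60, "e2d": True, "d2t": True, "nohash": r"\.iso$"},
        }},
    ))
```

Running it prints a `[global]` section containing `p: 3210, 3211` and a bare `no-reload` (with no trace of `ignored-flag`), followed by a `[/]` section holding the path, the `accs:` block and the volflags.
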

# browser support

TLDR: yes


@@ -1185,10 +1399,10 @@ interact with copyparty using non-browser clients

  * `(printf 'PUT /junk?pw=wark HTTP/1.1\r\n\r\n'; cat movie.mkv) | nc 127.0.0.1 3923`
  * `(printf 'PUT / HTTP/1.1\r\n\r\n'; cat movie.mkv) >/dev/tcp/127.0.0.1/3923`

* python: [u2c.py](https://github.com/9001/copyparty/blob/hovudstraum/bin/u2c.py) is a command-line up2k client [(webm)](https://ocv.me/stuff/u2cli.webm)
  * file uploads, file-search, [folder sync](#folder-sync), autoresume of aborted/broken uploads
  * can be downloaded from copyparty: controlpanel -> connect -> [u2c.py](http://127.0.0.1:3923/.cpr/a/u2c.py)
  * see [./bin/README.md#u2cpy](bin/README.md#u2cpy)

* FUSE: mount a copyparty server as a local filesystem
  * cross-platform python client available in [./bin/](bin/)


@@ -1207,17 +1421,27 @@ you can provide passwords using header `PW: hunter2`, cookie `cppwd=hunter2`, ur

NOTE: curl will not send the original filename if you use `-T` combined with url-params! Also, make sure to always leave a trailing slash in URLs unless you want to override the filename

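The `nc` and `/dev/tcp` one-liners send a bare `PUT` request. From a Python script, roughly the same request can be made with the standard library's `http.client`. This is a minimal sketch: the `put_file` helper is hypothetical (not part of copyparty), it passes the password through the `PW` header named in the hunk header line above instead of `?pw=` in the URL, and it assumes the server stores the body under the last component of the URL path.

```python
import http.client
from typing import Optional


def put_file(host: str, port: int, url_path: str, data: bytes,
             password: Optional[str] = None, timeout: float = 30.0) -> int:
    """Send `data` as the body of an HTTP PUT to url_path; return the status code."""
    headers = {}
    if password:
        headers["PW"] = password  # header form of the ?pw= url parameter
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        # http.client adds Content-Length automatically for a bytes body
        conn.request("PUT", url_path, body=data, headers=headers)
        resp = conn.getresponse()
        resp.read()  # drain the response body before closing
        return resp.status
    finally:
        conn.close()
```

For example, `put_file("127.0.0.1", 3923, "/junk/movie.mkv", data, password="wark")`. This reads the whole file into memory and has no resume or checksum support, so for anything large the u2c.py client is the better fit.
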

## folder sync

sync folders to/from copyparty

the commandline uploader [u2c.py](https://github.com/9001/copyparty/tree/hovudstraum/bin#u2cpy) with `--dr` is the best way to sync a folder to copyparty; it verifies checksums, uploads files in parallel, and deletes unexpected files on the server after the upload has finished, which makes file renames really cheap (it'll rename server-side and skip uploading)

alternatively there is [rclone](./docs/rclone.md) which allows for bidirectional sync and is *way* more flexible (stream files straight from sftp/s3/gcs to copyparty, ...), although there is no integrity check and it won't work with files over 100 MiB if copyparty is behind cloudflare

* starting from rclone v1.63 (currently [in beta](https://beta.rclone.org/?filter=latest)), rclone will also be faster than u2c.py

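The `--dr` behaviour described above (upload, then delete whatever the server has that the local folder doesn't, so that a rename becomes a server-side move) can be pictured as a planning step over two `{path: content_hash}` maps. The sketch below illustrates that idea under exactly that assumption; it is not u2c.py's actual algorithm, and `plan_sync` and its inputs are hypothetical.

```python
from typing import Dict, List, Tuple


def plan_sync(local: Dict[str, str], remote: Dict[str, str]
              ) -> Tuple[List[str], List[Tuple[str, str]], List[str]]:
    """Plan a one-way sync from two {relative_path: content_hash} maps.

    Returns (uploads, renames, deletes):
      uploads - local paths whose content has to be sent
      renames - (old_remote_path, new_path) moves that reuse identical content
      deletes - server paths that are unexpected and were not reused
    """
    # server files with no counterpart at the same local path, grouped by hash
    orphans: Dict[str, List[str]] = {}
    for path, h in sorted(remote.items()):
        if path not in local:
            orphans.setdefault(h, []).append(path)

    uploads: List[str] = []
    renames: List[Tuple[str, str]] = []
    for path, h in sorted(local.items()):
        if remote.get(path) == h:
            continue  # identical file already in place
        if path not in remote and orphans.get(h):
            # same bytes exist under another name: move instead of re-uploading
            renames.append((orphans[h].pop(0), path))
        else:
            uploads.append(path)  # new content, or a changed file to overwrite

    # leftovers were not claimed by any rename, so they count as unexpected files
    deletes = sorted(p for paths in orphans.values() for p in paths)
    return uploads, renames, deletes
```

Applying the plan in the order renames, uploads, deletes keeps the "delete after upload has finished" property: nothing is removed from the server until the new state is in place.
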

## mount as drive

mount a remote copyparty server as a local filesystem; go to the control-panel and click `connect` to see a list of commands to do that

alternatively, here are some other options, roughly sorted by speed (unreproducible benchmark), best first:

* [rclone-webdav](./docs/rclone.md) (25s), read/WRITE ([v1.63-beta](https://beta.rclone.org/?filter=latest))
* [rclone-http](./docs/rclone.md) (26s), read-only
* [partyfuse.py](./bin/#partyfusepy) (35s), read-only
* [rclone-ftp](./docs/rclone.md) (47s), read/WRITE
* davfs2 (103s), read/WRITE, *very fast* on small files
* [win10-webdav](#webdav-server) (138s), read/WRITE
* [win10-smb2](#smb-server) (387s), read/WRITE


@@ -1225,6 +1449,27 @@ alternatively, some alternatives roughly sorted by speed (unreproducible benchma

most clients will fail to mount the root of a copyparty server unless there is a root volume (so you get the admin-panel instead of a browser when accessing it) -- in that case, mount a specific volume instead


# android app

upload to copyparty with one tap

<a href="https://f-droid.org/packages/me.ocv.partyup/"><img src="https://ocv.me/fdroid.png" alt="Get it on F-Droid" height="50" /> '' <img src="https://img.shields.io/f-droid/v/me.ocv.partyup.svg" alt="f-droid version info" /></a> '' <a href="https://github.com/9001/party-up"><img src="https://img.shields.io/github/release/9001/party-up.svg?logo=github" alt="github version info" /></a>

the app is **NOT** the full copyparty server! just a basic upload client, nothing fancy yet

if you want to run the copyparty server on your android device, see [install on android](#install-on-android)


# iOS shortcuts

there is no iPhone app, but the following shortcuts are almost as good:

* [upload to copyparty](https://www.icloud.com/shortcuts/41e98dd985cb4d3bb433222bc1e9e770) ([offline](https://github.com/9001/copyparty/raw/hovudstraum/contrib/ios/upload-to-copyparty.shortcut)) ([png](https://user-images.githubusercontent.com/241032/226118053-78623554-b0ed-482e-98e4-6d57ada58ea4.png)) based on the [original](https://www.icloud.com/shortcuts/ab415d5b4de3467b9ce6f151b439a5d7) by [Daedren](https://github.com/Daedren) (thx!)
  * can strip exif, upload files, pics, vids, links, clipboard
  * can download links and rehost the target file on copyparty (see first comment inside the shortcut)
  * pics become lowres if you share from gallery to shortcut, so better to launch the shortcut and pick stuff from there


# performance

defaults are usually fine - expect `8 GiB/s` download, `1 GiB/s` upload


@@ -1232,15 +1477,16 @@ defaults are usually fine - expect `8 GiB/s` download, `1 GiB/s` upload

below are some tweaks roughly ordered by usefulness:

* `-q` disables logging and can help a bunch, even when combined with `-lo` to redirect logs to file
* `--http-only` or `--https-only` (unless you want to support both protocols) will reduce the delay before a new connection is established
* `--hist` pointing to a fast location (ssd) will make directory listings and searches faster when `-e2d` or `-e2t` is set
* `--no-hash .` when indexing a network-disk if you don't care about the actual filehashes and only want the names/tags searchable
* `--no-htp --hash-mt=0 --mtag-mt=1 --th-mt=1` minimizes the number of threads; can help in some eccentric environments (like the vscode debugger)
* `-j0` enables multiprocessing (actual multithreading), can reduce latency to `20+80/numCores` percent and generally improve performance in cpu-intensive workloads, for example:
  * lots of connections (many users or heavy clients)
  * simultaneous downloads and uploads saturating a 20gbps connection

  ...however it adds an overhead to internal communication so it might be a net loss, see if it works 4 u
* using [pypy](https://www.pypy.org/) instead of [cpython](https://www.python.org/) *can* be 70% faster for some workloads, but slower for many others
  * and pypy can sometimes crash on startup with `-j0` (TODO make issue)

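Plugging a few core counts into the `20+80/numCores` estimate for `-j0` shows the diminishing returns: the 20 does not shrink with more cores, so latency never drops below about a fifth of the baseline. This only evaluates the formula quoted above (reading it as a percentage of the latency without `-j0`); it is not a benchmark.

```python
def j0_latency_percent(num_cores: int) -> float:
    """Latency with -j0 as a percentage of the single-process latency,
    using the 20+80/numCores rule of thumb."""
    if num_cores < 1:
        raise ValueError("num_cores must be at least 1")
    return 20 + 80 / num_cores


for cores in (1, 2, 4, 8, 16):
    print(f"{cores:2d} cores -> {j0_latency_percent(cores):.1f}%")
```

So a 4-core box would be expected to land around 40% of the original latency and a 16-core box around 25%; past that point, the internal-communication overhead mentioned above may well dominate.
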

## client-side

@@ -1250,7 +1496,7 @@ when uploading files,

* chrome is recommended, at least compared to firefox:
  * up to 90% faster when hashing, especially on SSDs
  * up to 40% faster when uploading over extremely fast internets
  * but [u2c.py](https://github.com/9001/copyparty/blob/hovudstraum/bin/u2c.py) can be 40% faster than chrome again

* if you're cpu-bottlenecked, or the browser is maxing a cpu core:
  * up to 30% faster uploads if you hide the upload status list by switching away from the `[🚀]` up2k ui-tab (or closing it)


@@ -1320,6 +1566,18 @@ by default, except for `GET` and `HEAD` operations, all requests must either:

cors can be configured with `--acao` and `--acam`, or the protections entirely disabled with `--allow-csrf`


## https

both HTTP and HTTPS are accepted by default, but letting a [reverse proxy](#reverse-proxy) handle the https/tls/ssl would be better (probably more secure by default)

copyparty doesn't speak HTTP/2 or QUIC, so using a reverse proxy would solve that as well

if [cfssl](https://github.com/cloudflare/cfssl/releases/latest) is installed, copyparty will automatically create a CA and server-cert on startup
* the certs are written to `--crt-dir` for distribution, see `--help` for the other `--crt` options
* this will be a self-signed certificate so you must install your `ca.pem` into all your browsers/devices
* if you want to avoid the hassle of distributing certs manually, please consider using a reverse proxy


# recovering from crashes

## client crashes

@@ -1342,7 +1600,7 @@ however you can hit `F12` in the up2k tab and use the devtools to see how far yo


# HTTP API

see [devnotes](./docs/devnotes.md#http-api)


# dependencies

@@ -1387,7 +1645,7 @@ these are standalone programs and will never be imported / evaluated by copypart

the self-contained "binary" [copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py) will unpack itself and run copyparty, assuming you have python installed of course

you can reduce the sfx size by repacking it; see [./docs/devnotes.md#sfx-repack](./docs/devnotes.md#sfx-repack)


## copyparty.exe

@@ -1401,6 +1659,7 @@ can be convenient on machines where installing python is problematic, however is

* [copyparty.exe](https://github.com/9001/copyparty/releases/latest/download/copyparty.exe) runs on win8 or newer, was compiled on win10, does thumbnails + media tags, and is *currently* safe to use, but any future python/expat/pillow CVEs can only be remedied by downloading a newer version of the exe

  * on win8 it needs [vc redist 2015](https://www.microsoft.com/en-us/download/details.aspx?id=48145), on win10 it just works
  * some antivirus may freak out (false-positive), possibly [Avast, AVG, and McAfee](https://www.virustotal.com/gui/file/52391a1e9842cf70ad243ef83844d46d29c0044d101ee0138fcdd3c8de2237d6/detection)

* dangerous: [copyparty32.exe](https://github.com/9001/copyparty/releases/latest/download/copyparty32.exe) is compatible with [windows7](https://user-images.githubusercontent.com/241032/221445944-ae85d1f4-d351-4837-b130-82cab57d6cca.png), which means it uses an ancient copy of python (3.7.9) which cannot be upgraded and should never be exposed to the internet (LAN is fine)


@@ -1,4 +1,4 @@

# [`u2c.py`](u2c.py)
* command-line up2k client [(webm)](https://ocv.me/stuff/u2cli.webm)
* file uploads, file-search, autoresume of aborted/broken uploads
* sync local folder to server


@@ -10,6 +10,7 @@ run copyparty with `--help-hooks` for usage details / hook type explanations (xb

# after upload
* [notify.py](notify.py) shows a desktop notification ([example](https://user-images.githubusercontent.com/241032/215335767-9c91ed24-d36e-4b6b-9766-fb95d12d163f.png))
* [notify2.py](notify2.py) uses the json API to show more context
* [image-noexif.py](image-noexif.py) removes image exif by overwriting / directly editing the uploaded file
* [discord-announce.py](discord-announce.py) announces new uploads on discord using webhooks ([example](https://user-images.githubusercontent.com/241032/215304439-1c1cb3c8-ec6f-4c17-9f27-81f969b1811a.png))
* [reject-mimetype.py](reject-mimetype.py) rejects uploads unless the mimetype is acceptable


@@ -13,9 +13,15 @@ example usage as global config:

    --xau f,t5,j,bin/hooks/discord-announce.py

example usage as a volflag (per-volume config):
    -v srv/inc:inc:r:rw,ed:c,xau=f,t5,j,bin/hooks/discord-announce.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

    (share filesystem-path srv/inc as volume /inc,
     readable by everyone, read-write for user 'ed',
     running this plugin on all uploads with the params listed below)

parameters explained,
    xau = execute after upload
    f  = fork; don't wait for it to finish
    t5 = timeout if it's still running after 5 sec
    j  = provide upload information as json; not just the filename


@@ -30,6 +36,7 @@ then use this to design your message: https://discohook.org/

def main():
    WEBHOOK = "https://discord.com/api/webhooks/1234/base64"
    WEBHOOK = "https://discord.com/api/webhooks/1066830390280597718/M1TDD110hQA-meRLMRhdurych8iyG35LDoI1YhzbrjGP--BXNZodZFczNVwK4Ce7Yme5"

    # read info from copyparty
    inf = json.loads(sys.argv[1])

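discord-announce.py reads its input with `json.loads(sys.argv[1])`, which is what the `j` flag provides. A minimal hook of the same shape is sketched below. The key names it looks up (`ap`, `vp`, `user`) are assumptions for illustration only, so print the parsed object once to see which fields your copyparty version actually sends; the file name in the usage line is hypothetical too.

```python
#!/usr/bin/env python3
import json
import sys


def describe_upload(arg: str) -> str:
    """Build a one-line summary from the json that the `j` flag passes as argv[1]."""
    inf = json.loads(arg)
    # ASSUMPTION: "ap", "vp" and "user" are illustrative key names;
    # run print(sorted(inf)) once to see what your version really sends
    path = inf.get("ap") or inf.get("vp") or "?"
    user = inf.get("user") or "(anonymous)"
    return "%s uploaded %s" % (user, path)


if __name__ == "__main__" and len(sys.argv) > 1:
    print(describe_upload(sys.argv[1]))
```

It would be wired up like the other examples, e.g. `--xau j,bin/hooks/describe-upload.py`; missing keys fall back to placeholders instead of crashing the hook.
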

72

bin/hooks/image-noexif.py

Executable file

@@ -0,0 +1,72 @@

#!/usr/bin/env python3

import os
import sys
import subprocess as sp


_ = r"""
remove exif tags from uploaded images; the eventhook edition of
https://github.com/9001/copyparty/blob/hovudstraum/bin/mtag/image-noexif.py

dependencies:
  exiftool / perl-Image-ExifTool

being an upload hook, this will take effect after upload completion
  but before copyparty has hashed/indexed the file, which means that
  copyparty will never index the original file, so deduplication will
  not work as expected... which is mostly OK but ehhh

note: modifies the file in-place, so don't set the `f` (fork) flag

example usages; either as global config (all volumes) or as volflag:
    --xau bin/hooks/image-noexif.py
    -v srv/inc:inc:r:rw,ed:c,xau=bin/hooks/image-noexif.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

explained:
    share fs-path srv/inc at /inc (readable by all, read-write for user ed)
    running this xau (execute-after-upload) plugin for all uploaded files
"""


# filetypes to process; ignores everything else
EXTS = ("jpg", "jpeg", "avif", "heif", "heic")


try:
    from copyparty.util import fsenc
except:

    def fsenc(p):
        return p.encode("utf-8")


def main():
    fp = sys.argv[1]
    ext = fp.lower().split(".")[-1]
    if ext not in EXTS:
        return

    cwd, fn = os.path.split(fp)
    os.chdir(cwd)
    f1 = fsenc(fn)
    cmd = [
        b"exiftool",
        b"-exif:all=",
        b"-iptc:all=",
        b"-xmp:all=",
        b"-P",
        b"-overwrite_original",
        b"--",
        f1,
    ]
    sp.check_output(cmd)
    print("image-noexif: stripped")


if __name__ == "__main__":
    try:
        main()
    except:
        pass


@@ -17,8 +17,12 @@ depdencies:

example usages; either as global config (all volumes) or as volflag:
    --xau f,bin/hooks/notify.py
    -v srv/inc:inc:r:rw,ed:c,xau=f,bin/hooks/notify.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^

    (share filesystem-path srv/inc as volume /inc,
     readable by everyone, read-write for user 'ed',
     running this plugin on all uploads with the params listed below)

parameters explained,
    xau = execute after upload


@@ -15,9 +15,13 @@ and also supports --xm (notify on 📟 message)

example usages; either as global config (all volumes) or as volflag:
    --xm f,j,bin/hooks/notify2.py
    --xau f,j,bin/hooks/notify2.py
    -v srv/inc:inc:r:rw,ed:c,xm=f,j,bin/hooks/notify2.py
    -v srv/inc:inc:r:rw,ed:c,xau=f,j,bin/hooks/notify2.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

    (share filesystem-path srv/inc as volume /inc,
     readable by everyone, read-write for user 'ed',
     running this plugin on all uploads / msgs with the params listed below)

parameters explained,
    xau = execute after upload


@@ -10,7 +10,12 @@ example usage as global config:

    --xbu c,bin/hooks/reject-extension.py

example usage as a volflag (per-volume config):
    -v srv/inc:inc:r:rw,ed:c,xbu=c,bin/hooks/reject-extension.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

    (share filesystem-path srv/inc as volume /inc,
     readable by everyone, read-write for user 'ed',
     running this plugin on all uploads with the params listed below)

parameters explained,
    xbu = execute before upload


@@ -17,7 +17,12 @@ example usage as global config:

    --xau c,bin/hooks/reject-mimetype.py

example usage as a volflag (per-volume config):
    -v srv/inc:inc:r:rw,ed:c,xau=c,bin/hooks/reject-mimetype.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

    (share filesystem-path srv/inc as volume /inc,
     readable by everyone, read-write for user 'ed',
     running this plugin on all uploads with the params listed below)

parameters explained,
    xau = execute after upload


@@ -15,9 +15,15 @@ example usage as global config:

    --xm f,j,t3600,bin/hooks/wget.py

example usage as a volflag (per-volume config):
    -v srv/inc:inc:r:rw,ed:c,xm=f,j,t3600,bin/hooks/wget.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

    (share filesystem-path srv/inc as volume /inc,
     readable by everyone, read-write for user 'ed',
     running this plugin on all messages with the params listed below)

parameters explained,
    xm = execute on message-to-server-log
    f = fork so it doesn't block uploads
    j = provide message information as json; not just the text
    c3 = mute all output


@@ -18,7 +18,12 @@ example usage as global config:

    --xiu i5,j,bin/hooks/xiu-sha.py

example usage as a volflag (per-volume config):
    -v srv/inc:inc:r:rw,ed:c,xiu=i5,j,bin/hooks/xiu-sha.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

    (share filesystem-path srv/inc as volume /inc,
     readable by everyone, read-write for user 'ed',
     running this plugin on batches of uploads with the params listed below)

parameters explained,
    xiu = execute after uploads...


@@ -15,7 +15,12 @@ example usage as global config:

    --xiu i1,j,bin/hooks/xiu.py

example usage as a volflag (per-volume config):
    -v srv/inc:inc:r:rw,ed:c,xiu=i1,j,bin/hooks/xiu.py
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^

    (share filesystem-path srv/inc as volume /inc,
     readable by everyone, read-write for user 'ed',
     running this plugin on batches of uploads with the params listed below)

parameters explained,
    xiu = execute after uploads...

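All of the hook examples above share one pattern: comma-separated single-letter flags, some carrying a number (`t5`, `i5`, `t3600`, `c3`), followed by the command to run. A hypothetical parser for that pattern is sketched below. It is not copyparty's own parser, and it deliberately does not interpret the letters; their meanings are given in each hook's "parameters explained" block.

```python
import re
from typing import Dict, Tuple, Union

# a flag token: one lowercase letter, optionally followed by digits (f, j, t5, i1, c3, t3600)
FLAG_RE = re.compile(r"([a-z])(\d*)")


def parse_hook_spec(spec: str) -> Tuple[Dict[str, Union[bool, int]], str]:
    """Split 'f,t5,j,bin/hooks/discord-announce.py' into
    ({'f': True, 't': 5, 'j': True}, 'bin/hooks/discord-announce.py')."""
    parts = spec.split(",")
    flags: Dict[str, Union[bool, int]] = {}
    # consume leading flag-shaped tokens, but always leave at least the command
    while len(parts) > 1:
        m = FLAG_RE.fullmatch(parts[0])
        if not m:
            break
        letter, digits = m.groups()
        flags[letter] = int(digits) if digits else True
        parts.pop(0)
    # re-join the remainder in case the command itself contained commas
    return flags, ",".join(parts)
```

For the volflag form, take the part after the hook name first, e.g. `"c,xau=f,j,bin/hooks/notify2.py".split("xau=", 1)[1]`, since the leading `c,` there belongs to the `-v` syntax rather than to the hook.
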

@@ -16,6 +16,10 @@ dep: ffmpeg

"""


# save beat timestamps to ".beats/filename.txt"
SAVE = False


def det(tf):
    # fmt: off
    sp.check_call([


@@ -23,12 +27,11 @@ def det(tf):

        b"-nostdin",
        b"-hide_banner",
        b"-v", b"fatal",
        b"-y", b"-i", fsenc(sys.argv[1]),
        b"-map", b"0:a:0",
        b"-ac", b"1",
        b"-ar", b"22050",
        b"-t", b"360",
        b"-f", b"f32le",
        fsenc(tf)
    ])


@@ -47,10 +50,29 @@ def det(tf):

            print(c["list"][0]["label"].split(" ")[0])
            return

        # throws if detection failed:
        beats = [float(x["timestamp"]) for x in cl]
        bds = [b - a for a, b in zip(beats, beats[1:])]
        bds.sort()
        n0 = int(len(bds) * 0.2)
        n1 = int(len(bds) * 0.75) + 1
        bds = bds[n0:n1]
        bpm = sum(bds)
        bpm = round(60 * (len(bds) / bpm), 2)
        print(f"{bpm:.2f}")

        if SAVE:
            fdir, fname = os.path.split(sys.argv[1])
            bdir = os.path.join(fdir, ".beats")
            try:
                os.mkdir(fsenc(bdir))
            except:
                pass

            fp = os.path.join(bdir, fname) + ".txt"
            with open(fsenc(fp), "wb") as f:
                txt = "\n".join([f"{x:.2f}" for x in beats])
                f.write(txt.encode("utf-8"))


def main():

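The updated `det()` estimates tempo from a trimmed set of beat-to-beat gaps instead of a single first-to-last average: sort the gaps, discard the shortest ~20% and the longest ~25%, and convert the mean of the rest into beats per minute. Pulled out into a standalone function (the name `trimmed_bpm` is made up here), the arithmetic looks like this:

```python
from typing import Sequence


def trimmed_bpm(beats: Sequence[float]) -> float:
    """Tempo from beat timestamps (seconds), mirroring the updated det() arithmetic."""
    # gaps between consecutive beats, shortest first
    gaps = sorted(b - a for a, b in zip(beats, beats[1:]))
    # discard the shortest ~20% and the longest ~25% of gaps
    n0 = int(len(gaps) * 0.2)
    n1 = int(len(gaps) * 0.75) + 1
    kept = gaps[n0:n1]
    # mean gap is sum/len seconds per beat, so bpm = 60 * len/sum;
    # raises ZeroDivisionError when nothing usable was detected
    return round(60 * (len(kept) / sum(kept)), 2)


steady = [i * 0.5 for i in range(21)]  # a beat every 0.5 s
one_missed = [0, 0.5, 1.0, 1.5, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0, 5.5]
print(trimmed_bpm(steady), trimmed_bpm(one_missed))  # 120.0 120.0
```

The single missed beat shows up as one 1.0 s gap, which sorts into the discarded top quarter, so the estimate stays at 120.
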

@@ -118,6 +118,15 @@ mkdir -p "$jail/tmp"

chmod 777 "$jail/tmp"


# create a dev
(cd $jail; mkdir -p dev; cd dev
[ -e null ] || mknod -m 666 null c 1 3
[ -e zero ] || mknod -m 666 zero c 1 5
[ -e random ] || mknod -m 444 random c 1 8
[ -e urandom ] || mknod -m 444 urandom c 1 9
)


# run copyparty
export HOME=$(getent passwd $uid | cut -d: -f6)
export USER=$(getent passwd $uid | cut -d: -f1)

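The `mknod` lines give the jail its own `/dev/null`, `/dev/zero`, `/dev/random` and `/dev/urandom` as character devices with Linux's standard numbers (major 1; minors 3, 5, 8 and 9). A small Python check like the one below, a sketch that is not part of the script, can confirm the nodes came out right; the helper names are made up, and the numbers are Linux-specific.

```python
import os
import stat
from typing import Dict, Tuple

# name -> (major, minor), matching the mknod lines in the script (Linux numbering)
EXPECTED: Dict[str, Tuple[int, int]] = {
    "null": (1, 3),
    "zero": (1, 5),
    "random": (1, 8),
    "urandom": (1, 9),
}


def check_dev(path: str, major: int, minor: int) -> bool:
    """True if `path` is a character device with the given major/minor numbers."""
    st = os.stat(path)
    return stat.S_ISCHR(st.st_mode) and (os.major(st.st_rdev), os.minor(st.st_rdev)) == (major, minor)


def check_jail_dev(jail: str) -> Dict[str, bool]:
    """Report, per expected node, whether <jail>/dev/<name> exists and looks right."""
    result = {}
    for name, (major, minor) in EXPECTED.items():
        p = os.path.join(jail, "dev", name)
        result[name] = os.path.exists(p) and check_dev(p, major, minor)
    return result
```

Calling `check_jail_dev("/path/to/jail")` after the script has run should give `True` for all four names; a `False` points at a missing node or one created with the wrong numbers.
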

@@ -1,13 +1,13 @@

#!/usr/bin/env python3
from __future__ import print_function, unicode_literals

S_VERSION = "1.9"
S_BUILD_DT = "2023-05-07"

"""
u2c.py: upload to copyparty
2021, ed <irc.rizon.net>, MIT-Licensed
https://github.com/9001/copyparty/blob/hovudstraum/bin/u2c.py

- dependencies: requests
- supports python 2.6, 2.7, and 3.3 through 3.12


@@ -21,6 +21,7 @@ import math

import time
import atexit
import signal
import socket
import base64
import hashlib
import platform


@@ -58,6 +59,7 @@ PY2 = sys.version_info < (3,)

if PY2:
    from Queue import Queue
    from urllib import quote, unquote
    from urlparse import urlsplit, urlunsplit

    sys.dont_write_bytecode = True
    bytes = str


@@ -65,6 +67,7 @@ else:

    from queue import Queue
    from urllib.parse import unquote_to_bytes as unquote
    from urllib.parse import quote_from_bytes as quote
    from urllib.parse import urlsplit, urlunsplit

    unicode = str


@@ -337,6 +340,32 @@ class CTermsize(object):

ss = CTermsize()


def undns(url):
    usp = urlsplit(url)
    hn = usp.hostname
    gai = None
    eprint("resolving host [{0}] ...".format(hn), end="")
    try:
        gai = socket.getaddrinfo(hn, None)
        hn = gai[0][4][0]
    except KeyboardInterrupt:
        raise
    except:
        t = "\n\033[31mfailed to resolve upload destination host;\033[0m\ngai={0}\n"
        eprint(t.format(repr(gai)))
        raise

    if usp.port:
        hn = "{0}:{1}".format(hn, usp.port)
    if usp.username or usp.password:
        hn = "{0}:{1}@{2}".format(usp.username, usp.password, hn)

    usp = usp._replace(netloc=hn)
    url = urlunsplit(usp)
    eprint(" {0}".format(url))
    return url


def _scd(err, top):
    """non-recursive listing of directory contents, along with stat() info"""
    with os.scandir(top) as dh:

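`undns()` resolves the upload host once and substitutes the resulting address into the URL, keeping the port and any credentials. One detail to be aware of: if `getaddrinfo` returns an IPv6 address first, formatting it as `host:port` without brackets gives an ambiguous netloc, and a username without a password is rendered as `user:None@`. The sketch below (`pin_host` is a hypothetical name, not u2c.py code) performs the same substitution but brackets IPv6 literals and only adds `:password` when one was given; scoped addresses such as `fe80::1%eth0` are not handled.

```python
import socket
from urllib.parse import urlsplit, urlunsplit


def pin_host(url: str) -> str:
    """Replace the URL's hostname with the first address it resolves to."""
    usp = urlsplit(url)
    # resolve once; take the first address getaddrinfo returns, like undns() does
    ip = socket.getaddrinfo(usp.hostname, None)[0][4][0]
    # IPv6 literals must be wrapped in brackets inside a URL netloc
    host = "[%s]" % ip if ":" in ip else ip
    if usp.port:
        host = "%s:%d" % (host, usp.port)
    if usp.username is not None:
        cred = usp.username
        if usp.password is not None:
            cred += ":" + usp.password
        host = "%s@%s" % (cred, host)
    return urlunsplit(usp._replace(netloc=host))
```

Path, query and fragment pass through untouched, so `pin_host("http://ed:pw@127.0.0.1:3923/x?a=1")` returns the same string.
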

@@ -653,6 +682,7 @@ class Ctl(object):

        return nfiles, nbytes

    def __init__(self, ar, stats=None):
        self.ok = False
        self.ar = ar
        self.stats = stats or self._scan()
        if not self.stats:


@@ -700,6 +730,8 @@ class Ctl(object):

            self._fancy()

        self.ok = True

    def _safe(self):
        """minimal basic slow boring fallback codepath"""
