Compare commits

368 Commits

| SHA1 |
|---|
| cadaeeeace |
| 767696185b |
| c1efd227b7 |
| a50d0563c3 |
| e5641ddd16 |
| 700111ffeb |
| b8adeb824a |
| 30cc9defcb |
| 61875bd773 |
| 30905c6f5d |
| 9986136dfb |
| 1c0d978979 |
| 0a0364e9f8 |
| 3376fbde1a |
| ac21fa7782 |
| c1c8dc5e82 |
| 5a38311481 |
| 9f8edb7f32 |
| c5a6ac8417 |
| 50e01d6904 |
| 9b46291a20 |
| 14497b2425 |
| f7ceae5a5f |
| c9492d16ba |
| 9fb9ada3aa |
| db0abbfdda |
| e7f0009e57 |
| 4444f0f6ff |
| 418842d2d3 |
| cafe53c055 |
| 7673beef72 |
| b28bfe64c0 |
| 135ece3fbd |
| bd3640d256 |
| fc0405c8f3 |
| 7df890d964 |
| 8341041857 |
| 1b7634932d |
| 48a3898aa6 |
| 5d13ebb4ac |
| 015b87ee99 |
| 0a48acf6be |
| 2b6a3afd38 |
| 18aa82fb2f |
| f5407b2997 |
| 474d5a155b |
| afcd98b794 |
| 4f80e44ff7 |
| 406e413594 |
| 033b50ae1b |
| bee26e853b |
| 04a1f7040e |
| f9d5bb3b29 |
| ca0cd04085 |
| 999ee2e7bc |
| 1ff7f968e8 |
| 3966266207 |
| d03e96a392 |
| 4c843c6df9 |
| 0896c5295c |
| cc0c9839eb |
| d0aa20e17c |
| 1a658dedb7 |
| 8d376b854c |
| 490c16b01d |
| 2437a4e864 |
| 007d948cb9 |
| 335fcc8535 |
| 9eaa9904e0 |
| 0778da6c4d |
| a1bb10012d |
| 1441ccee4f |
| 491803d8b7 |
| 3dcc386b6f |
| 5aa54d1217 |
| 88b876027c |
| fcc3aa98fd |
| f2f5e266b4 |
| e17bf8f325 |
| d19cb32bf3 |
| 85a637af09 |
| 043e3c7dd6 |
| 8f59afb159 |
| 77f1e51444 |
| 22fc4bb938 |
| 50c7bba6ea |
| 551d99b71b |
| b54b7213a7 |
| a14943c8de |
| a10cad54fc |
| 8568b7702a |
| 5d8cb34885 |
| 8d248333e8 |
| 99e2ef7f33 |
| e767230383 |
| 90601314d6 |
| 9c5eac1274 |
| 50905439e4 |
| a0c1239246 |
| b8e851c332 |
| baaf2eb24d |
| e197895c10 |
| cb75efa05d |
| 8b0cf2c982 |
| fc7d9e1f9c |
| 10caafa34c |
| 22cc22225a |
| 22dff4b0e5 |
| a00ff2b086 |
| e4acddc23b |
| 2b2d8e4e02 |
| 5501d49032 |
| fa54b2eec4 |
| cb0160021f |
| 93a723d588 |
| 8ebe1fb5e8 |
| 2acdf685b1 |
| 9f122ccd16 |
| 03be26fafc |
| df5d309d6e |
| c355f9bd91 |
| 9c28ba417e |
| 705b58c741 |
| 510302d667 |
| 025a537413 |
| 60a1ff0fc0 |
| f94a0b1bff |
| 4ccfeeb2cd |
| 2646f6a4f2 |
| b286ab539e |
| 2cca6e0922 |
| db51f1b063 |
| d979c47f50 |
| e64b87b99b |
| b985011a00 |
| c2ed2314c8 |
| cd496658c3 |
| deca082623 |
| 0ea8bb7c83 |
| 1fb251a4c2 |
| 4295923b76 |
| 572aa4b26c |
| b1359f039f |
| 867d8ee49e |
| 04c86e8a89 |
| bc0cb43ef9 |
| 769454fdce |
| 4ee81af8f6 |
| 8b0e66122f |
| 8a98efb929 |
| b6fd555038 |
| 7eb413ad51 |
| 4421d509eb |
| 793ffd7b01 |
| 1e22222c60 |
| 544e0549bc |
| 83178d0836 |
| c44f5f5701 |
| 138f5bc989 |
| e4759f86ef |
| d71416437a |
| a84c583b2c |
| cdacdccdb8 |
| d3ccd3f174 |
| cb6de0387d |
| abff40519d |
| 55c74ad164 |
| 673b4f7e23 |
| d11e02da49 |
| 8790f89e08 |
| 33442026b8 |
| 03193de6d0 |
| 8675ff40f3 |
| d88889d3fc |
| 6f244d4335 |
| cacca663b3 |
| d5109be559 |
| d999f06bb9 |
| a1a8a8c7b5 |
| fdd6f3b4a6 |
| f5191973df |
| ddbaebe779 |
| 42099baeff |
| 2459965ca8 |
| 6acf436573 |
| f217e1ce71 |
| 418000aee3 |
| dbbba9625b |
| 397bc92fbc |
| 6e615dcd03 |
| 9ac5908b33 |
| 50912480b9 |
| 24b9b8319d |
| b0f4f0b653 |
| 05bbd41c4b |
| 8f5f8a3cda |
| c8938fc033 |
| 1550350e05 |
| 5cc190c026 |
| d6a0a738ce |
| f5fe3678ee |
| f2a7925387 |
| fa953ced52 |
| f0000d9861 |
| 4e67516719 |
| 29db7a6270 |
| 852499e296 |
| f1775fd51c |
| 4bb306932a |
| 2a37e81bd8 |
| 6a312ca856 |
| e7f3e475a2 |
| 854ba0ec06 |
| 209b49d771 |
| 949baae539 |
| 5f4ea27586 |
| 099cc97247 |
| 592b7d6315 |
| 0880bf55a1 |
| 4cbffec0ec |
| cc355417d4 |
| e2bc573e61 |
| 41c0376177 |
| c01cad091e |
| eb349f339c |
| 24d8caaf3e |
| 5ac2c20959 |
| bb72e6bf30 |
| d8142e866a |
| 7b7979fd61 |
| 749616d09d |
| 5485c6d7ca |
| b7aea38d77 |
| 0ecd9f99e6 |
| ca04a00662 |
| 8a09601be8 |
| 1fe0d4693e |
| bba8a3c6bc |
| e3d7f0c7d5 |
| be7bb71bbc |
| e0c4829ec6 |
| 5af1575329 |
| 884f966b86 |
| f6c6fbc223 |
| b0cc396bca |
| ae463518f6 |
| 2be2e9a0d8 |
| e405fddf74 |
| c269b0dd91 |
| 8c3211263a |
| bf04e7c089 |
| c7c6e48b1a |
| 974ca773be |
| 9270c2df19 |
| b39ff92f34 |
| 7454167f78 |
| 5ceb3a962f |
| 52bd5642da |
| c39c93725f |
| d00f0b9fa7 |
| 01cfc70982 |
| e6aec189bd |
| c98fff1647 |
| 0009e31bd3 |
| db95e880b2 |
| e69fea4a59 |
| 4360800a6e |
| b179e2b031 |
| ecdec75b4e |
| 5cb2e33353 |
| 43ff2e531a |
| 1c2c9db8f0 |
| 7ea183baef |
| ab87fac6d8 |
| 1e3b7eee3b |
| 4de028fc3b |
| 604e5dfaaf |
| 05e0c2ec9e |
| 76bd005bdc |
| 5effaed352 |
| cedaf4809f |
| 6deaf5c268 |
| 9dc6a26472 |
| 14ad5916fc |
| 1a46738649 |
| 9e5e3b099a |
| 292ce75cc2 |
| ce7df7afd4 |
| e28e793f81 |
| 3e561976db |
| 273a4eb7d0 |
| 6175f85bb6 |
| a80579f63a |
| 96d6bcf26e |
| 49e8df25ac |
| 6a05850f21 |
| 5e7c3defe3 |
| 6c0987d4d0 |
| 6eba9feffe |
| 8adfcf5950 |
| 36d6fa512a |
| 79b6e9b393 |
| dc2e2cbd4b |
| 5c12dac30f |
| 641929191e |
| 617321631a |
| ddc0c899f8 |
| cdec42c1ae |
| c48f469e39 |
| 44909cc7b8 |
| 8f61e1568c |
| b7be7a0fd8 |
| 1526a4e084 |
| dbdb9574b1 |
| 853ae6386c |
| a4b56c74c7 |
| d7f1951e44 |
| 7e2ff9825e |
| 9b423396ec |
| 781146b2fb |
| 84937d1ce0 |
| 98cce66aa4 |
| 043c2d4858 |
| 99cc434779 |
| 5095d17e81 |
| 87d835ae37 |
| 6939ca768b |
| e3957e8239 |
| 4ad6e45216 |
| 76e5eeea3f |
| eb17f57761 |
| b0db14d8b0 |
| 2b644fa81b |
| 190ccee820 |
| 4e7dd32e78 |
| 5817fb66ae |
| 9cb04eef93 |
| 0019fe7f04 |
| 852c6f2de1 |
| c4191de2e7 |
| 4de61defc9 |
| 0aa88590d0 |
| 405f3ee5fe |
| bc339f774a |
| e67b695b23 |
| 4a7633ab99 |
| c58f2ef61f |
| 3866e6a3f2 |
| 381686fc66 |
| a918c285bf |
| 1e20eafbe0 |
| 39399934ee |
| b47635150a |
| 78d2f69ed5 |
| 7a98dc669e |
| 2f15bb5085 |
| 712a578e6c |
| d8dfc4ccb2 |
| e413007eb0 |
| 6d1d3e48d8 |
| 04966164ce |
| 8b62aa7cc7 |
| 1088e8c6a5 |
| 8c54c2226f |
| f74ac1f18b |
| 25931e62fd |
| 707a940399 |
| 87ef50d384 |

2

.github/pull_request_template.md

vendored

Normal file

@@ -0,0 +1,2 @@

To show that your contribution is compatible with the MIT License, please include the following text somewhere in this PR description:
This PR complies with the DCO; https://developercertificate.org/

14

.gitignore

vendored

@@ -21,11 +21,23 @@ copyparty.egg-info/

# winmerge
*.bak

# apple pls
.DS_Store

# derived
copyparty/res/COPYING.txt
copyparty/web/deps/
srv/
scripts/docker/i/
contrib/package/arch/pkg/
contrib/package/arch/src/

# state/logs
up.*.txt
.hist/
.hist/
scripts/docker/*.out
scripts/docker/*.err
/perf.*

# nix build output link
result

10

.vscode/launch.py

vendored

@@ -30,9 +30,17 @@ except:

argv = [os.path.expanduser(x) if x.startswith("~") else x for x in argv]

sfx = ""
if len(sys.argv) > 1 and os.path.isfile(sys.argv[1]):
    sfx = sys.argv[1]
    sys.argv = [sys.argv[0]] + sys.argv[2:]

argv += sys.argv[1:]

if re.search(" -j ?[0-9]", " ".join(argv)):
if sfx:
    argv = [sys.executable, sfx] + argv
    sp.check_call(argv)
elif re.search(" -j ?[0-9]", " ".join(argv)):
    argv = [sys.executable, "-m", "copyparty"] + argv
    sp.check_call(argv)
else:
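With the +/- markers lost, the new launcher flow in the `launch.py` hunk is easy to misread, so here is a minimal standalone sketch of the same decision. It assumes the same regex and argument order as the hunk; the names `split_sfx`, `build_command` and `JOBS_RE` are hypothetical and do not exist in copyparty, and the sketch returns `None` for the `else:` branch because the diff does not show that branch's body.

```python
import os
import re
import sys
from typing import List, Optional, Tuple

# same pattern as the launcher: a space, "-j", an optional space, then a digit
JOBS_RE = re.compile(" -j ?[0-9]")


def split_sfx(args: List[str]) -> Tuple[str, List[str]]:
    """If the first argument is an existing file, treat it as the sfx path
    and strip it from the remaining arguments."""
    if args and os.path.isfile(args[0]):
        return args[0], args[1:]
    return "", args


def build_command(
    argv: List[str], sfx: str, executable: str = sys.executable
) -> Optional[List[str]]:
    """Return the command the launcher would pass to sp.check_call,
    or None for the `else:` branch (not shown in the diff)."""
    if sfx:
        # run the given copyparty-sfx.py with the collected arguments
        return [executable, sfx] + argv
    if JOBS_RE.search(" ".join(argv)):
        # -j N (multiple processes) requested: run the installed module instead
        return [executable, "-m", "copyparty"] + argv
    return None
```

Because the arguments are joined with single spaces and the pattern requires a leading space, a `-j` that is the very first element of `argv` would not match; in the launcher, `argv` presumably already holds other arguments by the time `sys.argv[1:]` is appended.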

32

.vscode/settings.json

vendored

@@ -35,34 +35,18 @@

    "python.linting.flake8Enabled": true,
    "python.linting.banditEnabled": true,
    "python.linting.mypyEnabled": true,
    "python.linting.mypyArgs": [
        "--ignore-missing-imports",
        "--follow-imports=silent",
        "--show-column-numbers",
        "--strict"
    ],
    "python.linting.flake8Args": [
        "--max-line-length=120",
        "--ignore=E722,F405,E203,W503,W293,E402,E501,E128",
        "--ignore=E722,F405,E203,W503,W293,E402,E501,E128,E226",
    ],
    "python.linting.banditArgs": [
        "--ignore=B104"
    ],
    "python.linting.pylintArgs": [
        "--disable=missing-module-docstring",
        "--disable=missing-class-docstring",
        "--disable=missing-function-docstring",
        "--disable=import-outside-toplevel",
        "--disable=wrong-import-position",
        "--disable=raise-missing-from",
        "--disable=bare-except",
        "--disable=broad-except",
        "--disable=invalid-name",
        "--disable=line-too-long",
        "--disable=consider-using-f-string"
        "--ignore=B104,B110,B112"
    ],
    // python3 -m isort --py=27 --profile=black copyparty/
    "python.formatting.provider": "black",
    "python.formatting.provider": "none",
    "[python]": {
        "editor.defaultFormatter": "ms-python.black-formatter"
    },
    "editor.formatOnSave": true,
    "[html]": {
        "editor.formatOnSave": false,

@@ -74,10 +58,6 @@

    "files.associations": {
        "*.makefile": "makefile"
    },
    "python.formatting.blackArgs": [
        "-t",
        "py27"
    ],
    "python.linting.enabled": true,
    "python.pythonPath": "/usr/bin/python3"
}

576

README.md

@@ -1,35 +1,21 @@

# ⇆🎉 copyparty
# 💾🎉 copyparty

* portable file sharing hub (py2/py3) [(on PyPI)](https://pypi.org/project/copyparty/)
* MIT-Licensed, 2019-05-26, ed @ irc.rizon.net
turn almost any device into a file server with resumable uploads/downloads using [*any*](#browser-support) web browser

* server only needs Python (2 or 3), all dependencies optional
* 🔌 protocols: [http](#the-browser) // [ftp](#ftp-server) // [webdav](#webdav-server) // [smb/cifs](#smb-server)
* 📱 [android app](#android-app) // [iPhone shortcuts](#ios-shortcuts)

## summary

turn your phone or raspi into a portable file server with resumable uploads/downloads using *any* web browser

* server only needs Python (`2.7` or `3.3+`), all dependencies optional
* browse/upload with [IE4](#browser-support) / netscape4.0 on win3.11 (heh)
* protocols: [http](#the-browser) // [ftp](#ftp-server) // [webdav](#webdav-server) // [smb/cifs](#smb-server)

try the **[read-only demo server](https://a.ocv.me/pub/demo/)** 👀 running from a basement in finland
👉 **[Get started](#quickstart)!** or visit the **[read-only demo server](https://a.ocv.me/pub/demo/)** 👀 running from a basement in finland

📷 **screenshots:** [browser](#the-browser) // [upload](#uploading) // [unpost](#unpost) // [thumbnails](#thumbnails) // [search](#searching) // [fsearch](#file-search) // [zip-DL](#zip-downloads) // [md-viewer](#markdown-viewer)


## get the app

<a href="https://f-droid.org/packages/me.ocv.partyup/"><img src="https://ocv.me/fdroid.png" alt="Get it on F-Droid" height="50" /> '' <img src="https://img.shields.io/f-droid/v/me.ocv.partyup.svg" alt="f-droid version info" /></a> '' <a href="https://github.com/9001/party-up"><img src="https://img.shields.io/github/release/9001/party-up.svg?logo=github" alt="github version info" /></a>

(the app is **NOT** the full copyparty server! just a basic upload client, nothing fancy yet)


## readme toc

* top
* [quickstart](#quickstart) - download **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** and you're all set!
* [quickstart](#quickstart) - just run **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** -- that's it! 🎉
* [on servers](#on-servers) - you may also want these, especially on servers
* [on debian](#on-debian) - recommended additional steps on debian
* [features](#features)
* [testimonials](#testimonials) - small collection of user feedback
* [motivations](#motivations) - project goals / philosophy

@@ -53,11 +39,14 @@ try the **[read-only demo server](https://a.ocv.me/pub/demo/)** 👀 running fro

* [self-destruct](#self-destruct) - uploads can be given a lifetime
* [file manager](#file-manager) - cut/paste, rename, and delete files/folders (if you have permission)
* [batch rename](#batch-rename) - select some files and press `F2` to bring up the rename UI
* [media player](#media-player) - plays almost every audio format there is
* [audio equalizer](#audio-equalizer) - bass boosted
* [fix unreliable playback on android](#fix-unreliable-playback-on-android) - due to phone / app settings
* [markdown viewer](#markdown-viewer) - and there are *two* editors
* [other tricks](#other-tricks)
* [searching](#searching) - search by size, date, path/name, mp3-tags, ...
* [server config](#server-config) - using arguments or config files, or a mix of both
* [zeroconf](#zeroconf) - announce enabled services on the LAN
* [zeroconf](#zeroconf) - announce enabled services on the LAN ([pic](https://user-images.githubusercontent.com/241032/215344737-0eae8d98-9496-4256-9aa8-cd2f6971810d.png))
* [mdns](#mdns) - LAN domain-name and feature announcer
* [ssdp](#ssdp) - windows-explorer announcer
* [qr-code](#qr-code) - print a qr-code [(screenshot)](https://user-images.githubusercontent.com/241032/194728533-6f00849b-c6ac-43c6-9359-83e454d11e00.png) for quick access

@@ -77,28 +66,39 @@ try the **[read-only demo server](https://a.ocv.me/pub/demo/)** 👀 running fro

* [file parser plugins](#file-parser-plugins) - provide custom parsers to index additional tags
* [event hooks](#event-hooks) - trigger a program on uploads, renames etc ([examples](./bin/hooks/))
* [upload events](#upload-events) - the older, more powerful approach ([examples](./bin/mtag/))
* [handlers](#handlers) - redefine behavior with plugins ([examples](./bin/handlers/))
* [hiding from google](#hiding-from-google) - tell search engines you dont wanna be indexed
* [themes](#themes)
* [complete examples](#complete-examples)
* [reverse-proxy](#reverse-proxy) - running copyparty next to other websites
* [prometheus](#prometheus) - metrics/stats can be enabled
* [packages](#packages) - the party might be closer than you think
* [arch package](#arch-package) - now [available on aur](https://aur.archlinux.org/packages/copyparty) maintained by [@icxes](https://github.com/icxes)
* [fedora package](#fedora-package) - now [available on copr-pypi](https://copr.fedorainfracloud.org/coprs/g/copr/PyPI/)
* [nix package](#nix-package) - `nix profile install github:9001/copyparty`
* [nixos module](#nixos-module)
* [browser support](#browser-support) - TLDR: yes
* [client examples](#client-examples) - interact with copyparty using non-browser clients
* [folder sync](#folder-sync) - sync folders to/from copyparty
* [mount as drive](#mount-as-drive) - a remote copyparty server as a local filesystem
* [android app](#android-app) - upload to copyparty with one tap
* [iOS shortcuts](#iOS-shortcuts) - there is no iPhone app, but
* [performance](#performance) - defaults are usually fine - expect `8 GiB/s` download, `1 GiB/s` upload
* [client-side](#client-side) - when uploading files
* [security](#security) - some notes on hardening
* [security](#security) - there is a [discord server](https://discord.gg/25J8CdTT6G)
* [gotchas](#gotchas) - behavior that might be unexpected
* [cors](#cors) - cross-site request config
* [password hashing](#password-hashing) - you can hash passwords
* [https](#https) - both HTTP and HTTPS are accepted
* [recovering from crashes](#recovering-from-crashes)
* [client crashes](#client-crashes)
* [frefox wsod](#frefox-wsod) - firefox 87 can crash during uploads
* [HTTP API](#HTTP-API) - see [devnotes](#./docs/devnotes.md#http-api)
* [HTTP API](#HTTP-API) - see [devnotes](./docs/devnotes.md#http-api)
* [dependencies](#dependencies) - mandatory deps
* [optional dependencies](#optional-dependencies) - install these to enable bonus features
* [install recommended deps](#install-recommended-deps)
* [optional gpl stuff](#optional-gpl-stuff)
* [sfx](#sfx) - the self-contained "binary"
* [copyparty.exe](#copypartyexe) - download [copyparty.exe](https://github.com/9001/copyparty/releases/latest/download/copyparty.exe) or [copyparty64.exe](https://github.com/9001/copyparty/releases/latest/download/copyparty64.exe)
* [copyparty.exe](#copypartyexe) - download [copyparty.exe](https://github.com/9001/copyparty/releases/latest/download/copyparty.exe) (win8+) or [copyparty32.exe](https://github.com/9001/copyparty/releases/latest/download/copyparty32.exe) (win7+)
* [install on android](#install-on-android)
* [reporting bugs](#reporting-bugs) - ideas for context to include in bug reports
* [devnotes](#devnotes) - for build instructions etc, see [./docs/devnotes.md](./docs/devnotes.md)

@@ -106,27 +106,49 @@ try the **[read-only demo server](https://a.ocv.me/pub/demo/)** 👀 running fro

## quickstart

download **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** and you're all set!
just run **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py)** -- that's it! 🎉

if you cannot install python, you can use [copyparty.exe](#copypartyexe) instead
* or install through pypi: `python3 -m pip install --user -U copyparty`
* or if you cannot install python, you can use [copyparty.exe](#copypartyexe) instead
* or install [on arch](#arch-package) ╱ [on fedora](#fedora-package) ╱ [on NixOS](#nixos-module) ╱ [through nix](#nix-package)
* or if you are on android, [install copyparty in termux](#install-on-android)
* or if you prefer to [use docker](./scripts/docker/) 🐋 you can do that too
* docker has all deps built-in, so skip this step:

running the sfx without arguments (for example doubleclicking it on Windows) will give everyone read/write access to the current folder; you may want [accounts and volumes](#accounts-and-volumes)
enable thumbnails (images/audio/video), media indexing, and audio transcoding by installing some recommended deps:

* **Alpine:** `apk add py3-pillow ffmpeg`
* **Debian:** `apt install python3-pil ffmpeg`
* **Fedora:** `dnf install python3-pillow ffmpeg`
* **FreeBSD:** `pkg install py39-sqlite3 py39-pillow ffmpeg`
* **MacOS:** `port install py-Pillow ffmpeg`
* **MacOS** (alternative): `brew install pillow ffmpeg`
* **Windows:** `python -m pip install --user -U Pillow`
* install python and ffmpeg manually; do not use `winget` or `Microsoft Store` (it breaks $PATH)
* copyparty.exe comes with `Pillow` and only needs `ffmpeg`
* see [optional dependencies](#optional-dependencies) to enable even more features

running copyparty without arguments (for example doubleclicking it on Windows) will give everyone read/write access to the current folder; you may want [accounts and volumes](#accounts-and-volumes)

or see [some usage examples](#complete-examples) for inspiration, or the [complete windows example](./docs/examples/windows.md)

some recommended options:
* `-e2dsa` enables general [file indexing](#file-indexing)
* `-e2ts` enables audio metadata indexing (needs either FFprobe or Mutagen), see [optional dependencies](#optional-dependencies) to enable thumbnails and more
* `-e2ts` enables audio metadata indexing (needs either FFprobe or Mutagen)
* `-v /mnt/music:/music:r:rw,foo -a foo:bar` shares `/mnt/music` as `/music`, `r`eadable by anyone, and read-write for user `foo`, password `bar`
* replace `:r:rw,foo` with `:r,foo` to only make the folder readable by `foo` and nobody else
* see [accounts and volumes](#accounts-and-volumes) for the syntax and other permissions (`r`ead, `w`rite, `m`ove, `d`elete, `g`et, up`G`et)
* see [accounts and volumes](#accounts-and-volumes) (or `--help-accounts`) for the syntax and other permissions


### on servers

you may also want these, especially on servers:

* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service
* [contrib/systemd/copyparty.service](contrib/systemd/copyparty.service) to run copyparty as a systemd service (see guide inside)
* [contrib/systemd/prisonparty.service](contrib/systemd/prisonparty.service) to run it in a chroot (for extra security)
* [contrib/rc/copyparty](contrib/rc/copyparty) to run copyparty on FreeBSD
* [contrib/nginx/copyparty.conf](contrib/nginx/copyparty.conf) to [reverse-proxy](#reverse-proxy) behind nginx (for better https)
* [nixos module](#nixos-module) to run copyparty on NixOS hosts

and remember to open the ports you want; here's a complete example including every feature copyparty has to offer:
```

@@ -137,18 +159,6 @@ firewall-cmd --reload

```
(1900:ssdp, 3921:ftp, 3923:http/https, 3945:smb, 3990:ftps, 5353:mdns, 12000:passive-ftp)

### on debian

recommended additional steps on debian which enable audio metadata and thumbnails (from images and videos):

* as root, run the following:
`apt install python3 python3-pip python3-dev ffmpeg`

* then, as the user which will be running copyparty (so hopefully not root), run this:
`python3 -m pip install --user -U Pillow pillow-avif-plugin`

(skipped `pyheif-pillow-opener` because apparently debian is too old to build it)


## features

@@ -162,14 +172,18 @@ recommended additional steps on debian which enable audio metadata and thumbnai

* ☑ [smb/cifs server](#smb-server)
* ☑ [qr-code](#qr-code) for quick access
* ☑ [upnp / zeroconf / mdns / ssdp](#zeroconf)
* ☑ [event hooks](#event-hooks) / script runner
* ☑ [reverse-proxy support](https://github.com/9001/copyparty#reverse-proxy)
* upload
* ☑ basic: plain multipart, ie6 support
* ☑ [up2k](#uploading): js, resumable, multithreaded
* unaffected by cloudflare's max-upload-size (100 MiB)
* ☑ stash: simple PUT filedropper
* ☑ filename randomizer
* ☑ write-only folders
* ☑ [unpost](#unpost): undo/delete accidental uploads
* ☑ [self-destruct](#self-destruct) (specified server-side or client-side)
* ☑ symlink/discard existing files (content-matching)
* ☑ symlink/discard duplicates (content-matching)
* download
* ☑ single files in browser
* ☑ [folders as zip / tar files](#zip-downloads)

@@ -190,16 +204,21 @@ recommended additional steps on debian which enable audio metadata and thumbnai

* ☑ [locate files by contents](#file-search)
* ☑ search by name/path/date/size
* ☑ [search by ID3-tags etc.](#searching)
* client support
* ☑ [folder sync](#folder-sync)
* ☑ [curl-friendly](https://user-images.githubusercontent.com/241032/215322619-ea5fd606-3654-40ad-94ee-2bc058647bb2.png)
* markdown
* ☑ [viewer](#markdown-viewer)
* ☑ editor (sure why not)

PS: something missing? post any crazy ideas you've got as a [feature request](https://github.com/9001/copyparty/issues/new?assignees=9001&labels=enhancement&template=feature_request.md) or [discussion](https://github.com/9001/copyparty/discussions/new?category=ideas) 🤙


## testimonials

small collection of user feedback

`good enough`, `surprisingly correct`, `certified good software`, `just works`, `why`
`good enough`, `surprisingly correct`, `certified good software`, `just works`, `why`, `wow this is better than nextcloud`


# motivations

@@ -208,7 +227,7 @@ project goals / philosophy

* inverse linux philosophy -- do all the things, and do an *okay* job
* quick drop-in service to get a lot of features in a pinch
* check [the alternatives](./docs/versus.md)
* some of [the alternatives](./docs/versus.md) might be a better fit for you
* run anywhere, support everything
* as many web-browsers and python versions as possible
* every browser should at least be able to browse, download, upload files

@@ -237,6 +256,9 @@ browser-specific:

server-os-specific:
* RHEL8 / Rocky8: you can run copyparty using `/usr/libexec/platform-python`

server notes:
* pypy is supported but regular cpython is faster if you enable the database


# bugs

@@ -260,9 +282,14 @@ server-os-specific:

* [Firefox issue 1790500](https://bugzilla.mozilla.org/show_bug.cgi?id=1790500) -- entire browser can crash after uploading ~4000 small files

* Android: music playback randomly stops due to [battery usage settings](#fix-unreliable-playback-on-android)

* iPhones: the volume control doesn't work because [apple doesn't want it to](https://developer.apple.com/library/archive/documentation/AudioVideo/Conceptual/Using_HTML5_Audio_Video/Device-SpecificConsiderations/Device-SpecificConsiderations.html#//apple_ref/doc/uid/TP40009523-CH5-SW11)
* *future workaround:* enable the equalizer, make it all-zero, and set a negative boost to reduce the volume
* "future" because `AudioContext` is broken in the current iOS version (15.1), maybe one day...
* `AudioContext` will probably never be a viable workaround as apple introduces new issues faster than they fix current ones

* iPhones: the preload feature (in the media-player-options tab) can cause a tiny audio glitch 20sec before the end of each song, but disabling it may cause worse iOS bugs to appear instead
* just a hunch, but disabling preloading may cause playback to stop entirely, or possibly mess with bluetooth speakers
* tried to add a tooltip regarding this but looks like apple broke my tooltips

* Windows: folders cannot be accessed if the name ends with `.`
* python or windows bug

@@ -272,6 +299,7 @@ server-os-specific:

* VirtualBox: sqlite throws `Disk I/O Error` when running in a VM and the up2k database is in a vboxsf
* use `--hist` or the `hist` volflag (`-v [...]:c,hist=/tmp/foo`) to place the db inside the vm instead
* also happens on mergerfs, so put the db elsewhere

* Ubuntu: dragging files from certain folders into firefox or chrome is impossible
* due to snap security policies -- see `snap connections firefox` for the allowlist, `removable-media` permits all of `/mnt` and `/media` apparently

@@ -285,7 +313,7 @@ upgrade notes

* http-api: delete/move is now `POST` instead of `GET`
* everything other than `GET` and `HEAD` must pass [cors validation](#cors)
* `1.5.0` (2022-12-03): [new chunksize formula](https://github.com/9001/copyparty/commit/54e1c8d261df) for files larger than 128 GiB
* **users:** upgrade to the latest [cli uploader](https://github.com/9001/copyparty/blob/hovudstraum/bin/up2k.py) if you use that
* **users:** upgrade to the latest [cli uploader](https://github.com/9001/copyparty/blob/hovudstraum/bin/u2c.py) if you use that
* **devs:** update third-party up2k clients (if those even exist)

@@ -304,7 +332,8 @@ upgrade notes

# accounts and volumes

per-folder, per-user permissions - if your setup is getting complex, consider making a [config file](./docs/example.conf) instead of using arguments
* much easier to manage, and you can modify the config at runtime with `systemctl reload copyparty` or more conveniently using the `[reload cfg]` button in the control-panel (if logged in as admin)
* much easier to manage, and you can modify the config at runtime with `systemctl reload copyparty` or more conveniently using the `[reload cfg]` button in the control-panel (if the user has `a`/admin in any volume)
* changes to the `[global]` config section requires a restart to take effect

a quick summary can be seen using `--help-accounts`

@@ -322,6 +351,7 @@ permissions:

* `d` (delete): delete files/folders
* `g` (get): only download files, cannot see folder contents or zip/tar
* `G` (upget): same as `g` except uploaders get to see their own filekeys (see `fk` in examples below)
* `a` (admin): can see uploader IPs, config-reload

examples:
* add accounts named u1, u2, u3 with passwords p1, p2, p3: `-a u1:p1 -a u2:p2 -a u3:p3`

@@ -450,6 +480,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path (

|

||||

## thumbnails

|

||||

|

||||

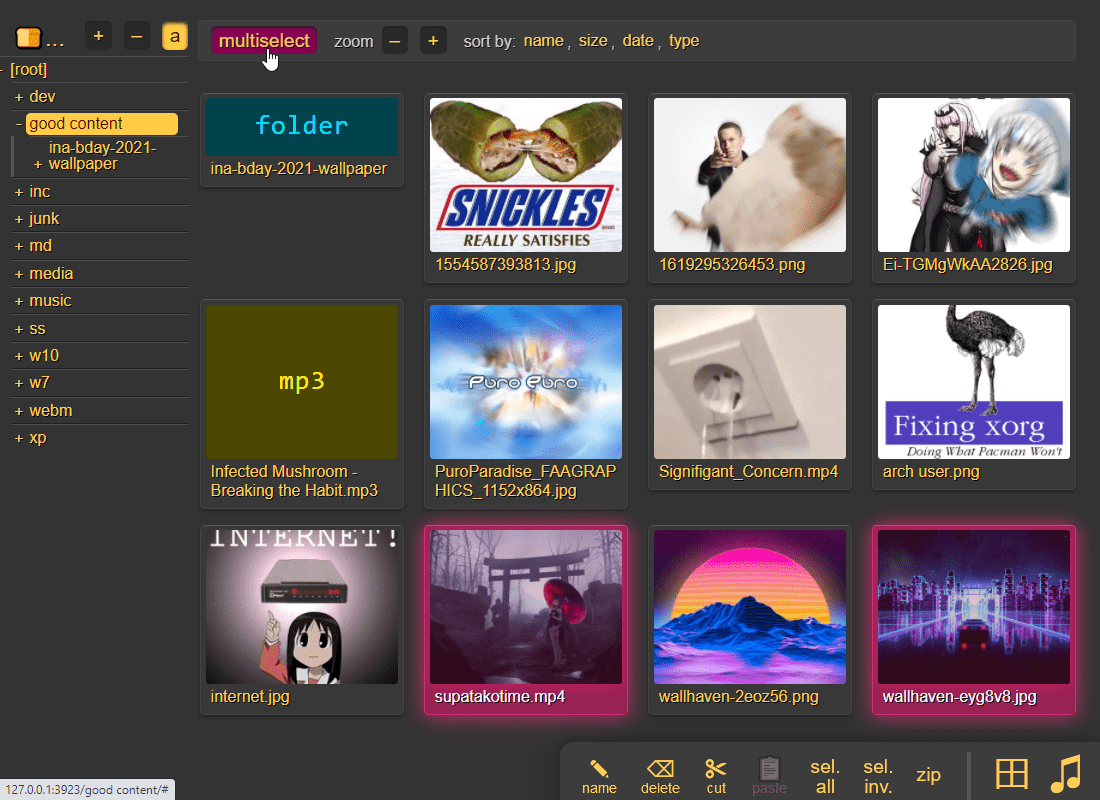

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails

|

||||

* can be made default globally with `--grid` or per-volume with volflag `grid`

|

||||

|

||||

|

||||

|

||||

@@ -460,10 +491,14 @@ it does static images with Pillow / pyvips / FFmpeg, and uses FFmpeg for video f

audio files are converted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

images with the following names (see `--th-covers`) become the thumbnail of the folder they're in: `folder.png`, `folder.jpg`, `cover.png`, `cover.jpg`
* and, if you enable [file indexing](#file-indexing), all remaining folders will also get thumbnails (as long as they contain any pics at all)
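
a minimal sketch of how the cover-name rule could pick a folder thumbnail, assuming names are compared case-insensitively and that the first matching name in the `--th-covers` list wins (both are guesses, not documented behavior); `pick_folder_cover` is a hypothetical helper and not copyparty's code:

```python
from typing import Iterable, Optional

# default cover names as listed above; assumed to be checked in this order
DEFAULT_COVERS = ("folder.png", "folder.jpg", "cover.png", "cover.jpg")


def pick_folder_cover(
    filenames: Iterable[str], covers: Iterable[str] = DEFAULT_COVERS
) -> Optional[str]:
    """Return the file that would become the folder thumbnail, or None.

    Hypothetical helper: matching is assumed to be case-insensitive, and the
    first name in `covers` that exists in the folder wins.
    """
    # lowercased name -> name as it appears on disk
    present = {name.lower(): name for name in filenames}
    for cover in covers:
        hit = present.get(cover.lower())
        if hit is not None:
            return hit
    return None


print(pick_folder_cover(["01.flac", "Cover.jpg", "folder.png"]))  # folder.png
print(pick_folder_cover(["01.flac", "notes.txt"]))  # None
```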

in the grid/thumbnail view, if the audio player panel is open, songs will start playing when clicked
* indicated by the audio files having the ▶ icon instead of 💾

enabling `multiselect` lets you click files to select them, and then shift-click another file for range-select
* `multiselect` is mostly intended for phones/tablets, but the `sel` option in the `[⚙️] settings` tab is better suited for desktop use, allowing selection by CTRL-clicking and range-selection with SHIFT-click, all without affecting regular clicking

## zip downloads

@@ -474,10 +509,16 @@ select which type of archive you want in the `[⚙️] config` tab:

| name | url-suffix | description |
|--|--|--|
| `tar` | `?tar` | plain gnutar, works great with `curl \| tar -xv` |
| `tar.gz` | `?tar=gz` | gzip-compressed tar, for `curl \| tar -xvz` |
| `tar.xz` | `?tar=xz` | gnu-tar with xz / lzma compression (good) |
| `tar.bz2` | `?tar=bz2` | bzip2-compressed tar (mostly useless) |
| `zip` | `?zip=utf8` | works everywhere, glitchy filenames on win7 and older |
| `zip_dos` | `?zip` | traditional cp437 (no unicode) to fix glitchy filenames |
| `zip_crc` | `?zip=crc` | cp437 with crc32 computed early for truly ancient software |

* gzip default level is `3` (0=fast, 9=best), change with `?tar=gz:9`
* xz default level is `1` (0=fast, 9=best), change with `?tar=xz:9`
* bz2 default level is `2` (1=fast, 9=best), change with `?tar=bz2:9`
* hidden files (dotfiles) are excluded unless `-ed`
* `up2k.db` and `dir.txt` are always excluded
* `zip_crc` will take longer to download since the server has to read each file twice

@@ -488,10 +529,14 @@ you can also zip a selection of files or folders by clicking them in the browser

cool trick: download a folder by appending url-params `?tar&opus` to transcode all audio files (except aac|m4a|mp3|ogg|opus|wma) to opus before they're added to the archive
* super useful if you're 5 minutes away from takeoff and realize you don't have any music on your phone but your server only has flac files and downloading those will burn through all your data + there wouldn't be enough time anyways
* and url-params `&j` / `&w` produce jpeg/webm thumbnails/spectrograms instead of the original audio/video/images
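
the url-suffixes and compression levels above can also be scripted; below is a sketch that builds a folder-download url and streams it into python's `tarfile`, roughly what `curl | tar -xv` does. `archive_url` and `download_and_extract` are hypothetical helpers, the suffix table is copied from above, and `http://127.0.0.1:3923/music/` is just an example address:

```python
import tarfile
import urllib.request
from typing import Optional

# url-suffix for each archive type, copied from the table above
SUFFIXES = {
    "tar": "tar",
    "tar.gz": "tar=gz",
    "tar.xz": "tar=xz",
    "tar.bz2": "tar=bz2",
    "zip": "zip=utf8",
    "zip_dos": "zip",
    "zip_crc": "zip=crc",
}

# valid compression levels per the notes above (gz/xz: 0-9, bz2: 1-9)
LEVELS = {
    "tar.gz": range(0, 10),
    "tar.xz": range(0, 10),
    "tar.bz2": range(1, 10),
}


def archive_url(folder_url: str, fmt: str = "tar", level: Optional[int] = None, opus: bool = False) -> str:
    """Build a folder-download url like .../music/?tar=gz:9&opus (hypothetical helper)."""
    query = SUFFIXES[fmt]
    if level is not None:
        if level not in LEVELS.get(fmt, ()):
            raise ValueError(f"{fmt} does not take compression level {level}")
        query += f":{level}"
    if opus:
        query += "&opus"  # ask the server to transcode audio to opus first
    return folder_url.rstrip("/") + "/?" + query


def download_and_extract(url: str, dest: str) -> None:
    """Stream a tar / tar.gz / tar.xz / tar.bz2 download into `dest`, like `curl | tar -x`."""
    with urllib.request.urlopen(url, timeout=60) as resp:
        # "r|*" reads a non-seekable stream and detects the compression by itself
        with tarfile.open(fileobj=resp, mode="r|*") as tar:
            if hasattr(tarfile, "data_filter"):
                tar.extractall(dest, filter="data")  # refuse members escaping `dest`
            else:
                tar.extractall(dest)
```

e.g. `download_and_extract(archive_url("http://127.0.0.1:3923/music/", "tar.gz", 9, opus=True), "music")` would fetch the folder as a level-9 gzip tarball with the audio transcoded to opus; the `filter="data"` branch only runs on python versions that ship tarfile extraction filters.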

## uploading

drag files/folders into the web-browser to upload (or use the [command-line uploader](https://github.com/9001/copyparty/tree/hovudstraum/bin#up2kpy))
drag files/folders into the web-browser to upload (or use the [command-line uploader](https://github.com/9001/copyparty/tree/hovudstraum/bin#u2cpy))

this initiates an upload using `up2k`; there are two uploaders available:
* `[🎈] bup`, the basic uploader, supports almost every browser since netscape 4.0

@@ -509,11 +554,14 @@ up2k has several advantages:

* much higher speeds than ftp/scp/tarpipe on some internet connections (mainly american ones) thanks to parallel connections
* the last-modified timestamp of the file is preserved

> it is perfectly safe to restart / upgrade copyparty while someone is uploading to it!
> all known up2k clients will resume just fine 💪

see [up2k](#up2k) for details on how it works, or watch a [demo video](https://a.ocv.me/pub/demo/pics-vids/#gf-0f6f5c0d)

**protip:** you can avoid scaring away users with [contrib/plugins/minimal-up2k.html](contrib/plugins/minimal-up2k.html) which makes it look [much simpler](https://user-images.githubusercontent.com/241032/118311195-dd6ca380-b4ef-11eb-86f3-75a3ff2e1332.png)
**protip:** you can avoid scaring away users with [contrib/plugins/minimal-up2k.js](contrib/plugins/minimal-up2k.js) which makes it look [much simpler](https://user-images.githubusercontent.com/241032/118311195-dd6ca380-b4ef-11eb-86f3-75a3ff2e1332.png)

**protip:** if you enable `favicon` in the `[⚙️] settings` tab (by typing something into the textbox), the icon in the browser tab will indicate upload progress -- also, the `[🔔]` and/or `[🔊]` switches enable visible and/or audible notifications on upload completion

@@ -583,6 +631,7 @@ file selection: click somewhere on the line (not the link itsef), then:

* `up/down` to move
* `shift-up/down` to move-and-select
* `ctrl-shift-up/down` to also scroll
* shift-click another line for range-select

* cut: select some files and `ctrl-x`
* paste: `ctrl-v` in another folder

@@ -638,12 +687,70 @@ or a mix of both:

the metadata keys you can use in the format field are the ones in the file-browser table header (whatever is collected with `-mte` and `-mtp`)

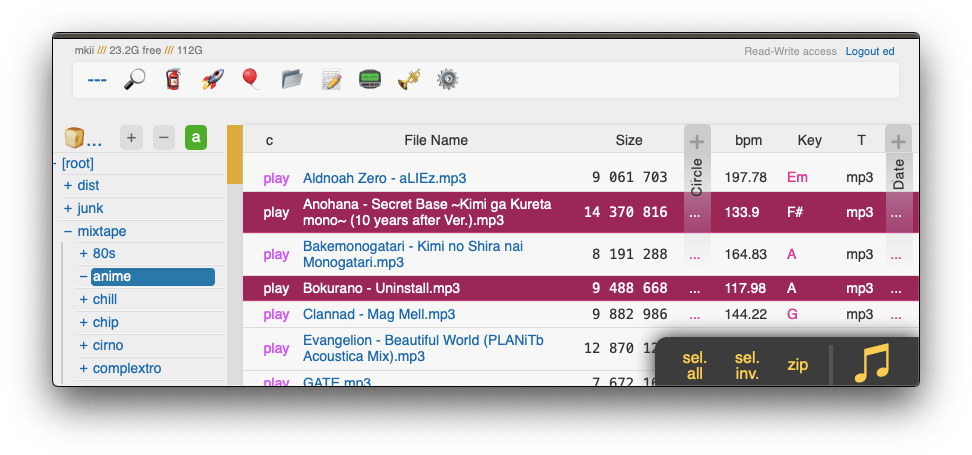

## media player

|

||||

|

||||

plays almost every audio format there is (if the server has FFmpeg installed for on-demand transcoding)

|

||||

|

||||

the following audio formats are usually always playable, even without FFmpeg: `aac|flac|m4a|mp3|ogg|opus|wav`

|

||||

|

||||

some hilights:

|

||||

* OS integration; control playback from your phone's lockscreen ([windows](https://user-images.githubusercontent.com/241032/233213022-298a98ba-721a-4cf1-a3d4-f62634bc53d5.png) // [iOS](https://user-images.githubusercontent.com/241032/142711926-0700be6c-3e31-47b3-9928-53722221f722.png) // [android](https://user-images.githubusercontent.com/241032/233212311-a7368590-08c7-4f9f-a1af-48ccf3f36fad.png))

|

||||

* shows the audio waveform in the seekbar

|

||||

* not perfectly gapless but can get really close (see settings + eq below); good enough to enjoy gapless albums as intended

|

||||

|

||||

click the `play` link next to an audio file, or copy the link target to [share it](https://a.ocv.me/pub/demo/music/Ubiktune%20-%20SOUNDSHOCK%202%20-%20FM%20FUNK%20TERRROR!!/#af-1fbfba61&t=18) (optionally with a timestamp to start playing from, like that example does)

|

||||

|

||||

open the `[🎺]` media-player-settings tab to configure it:
* switches:
  * `[preload]` starts loading the next track when it's about to end, which reduces the silence between songs
  * `[full]` does a full preload by downloading the entire next file; good for unreliable connections, bad for slow connections
  * `[~s]` toggles the seekbar waveform display
  * `[/np]` enables buttons to copy the now-playing info as an irc message
  * `[os-ctl]` makes it possible to control audio playback from the lockscreen of your device (enables [mediasession](https://developer.mozilla.org/en-US/docs/Web/API/MediaSession))
  * `[seek]` allows seeking with lockscreen controls (buggy on some devices)
  * `[art]` shows album art on the lockscreen
  * `[🎯]` keeps the playing song scrolled into view (good when using the player as a taskbar dock)
  * `[⟎]` shrinks the playback controls
* playback mode:
  * `[loop]` keeps looping the folder
  * `[next]` plays into the next folder
* transcode:
  * `[flac]` converts `flac` and `wav` files into opus
  * `[aac]` converts `aac` and `m4a` files into opus
  * `[oth]` converts all other known formats into opus
    * `aac|ac3|aif|aiff|alac|alaw|amr|ape|au|dfpwm|dts|flac|gsm|it|m4a|mo3|mod|mp2|mp3|mpc|mptm|mt2|mulaw|ogg|okt|opus|ra|s3m|tak|tta|ulaw|wav|wma|wv|xm|xpk`
* "tint" reduces the contrast of the playback bar
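
to make the three transcode switches concrete, here is a sketch that decides from the file extension alone which switch (if any) governs a file; `needs_transcode` is hypothetical, it assumes the decision is purely extension-based, and it reads the `[oth]` list literally (so it would also cover `opus` itself):

```python
import os

# every format the [oth] switch knows about, copied from the list above
KNOWN = set(
    "aac|ac3|aif|aiff|alac|alaw|amr|ape|au|dfpwm|dts|flac|gsm|it|m4a|mo3|mod|mp2|mp3"
    "|mpc|mptm|mt2|mulaw|ogg|okt|opus|ra|s3m|tak|tta|ulaw|wav|wma|wv|xm|xpk".split("|")
)
FLAC_SWITCH = {"flac", "wav"}  # governed by [flac]
AAC_SWITCH = {"aac", "m4a"}  # governed by [aac]


def needs_transcode(filename: str, flac: bool, aac: bool, oth: bool) -> bool:
    """Would the player ask for an opus transcode of `filename`? (hypothetical, extension-based)"""
    ext = os.path.splitext(filename)[1].lower().lstrip(".")
    if ext in FLAC_SWITCH:
        return flac
    if ext in AAC_SWITCH:
        return aac
    # everything else in the known list falls under [oth]
    return oth and ext in KNOWN
```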

### audio equalizer

bass boosted

can also boost the volume in general, or increase/decrease stereo width (like [crossfeed](https://www.foobar2000.org/components/view/foo_dsp_meiercf) just worse)

has the convenient side-effect of reducing the pause between songs, so gapless albums play better with the eq enabled (just make it flat)

not available on iPhones / iPads because AudioContext currently breaks background audio playback on iOS (15.7.8)

### fix unreliable playback on android

due to phone / app settings, android phones may randomly stop playing music when the power saver kicks in, especially at the end of an album -- you can fix it by [disabling power saving](https://user-images.githubusercontent.com/241032/235262123-c328cca9-3930-4948-bd18-3949b9fd3fcf.png) in the [app settings](https://user-images.githubusercontent.com/241032/235262121-2ffc51ae-7821-4310-a322-c3b7a507890c.png) of the browser you use for music streaming (preferably a dedicated one)

## markdown viewer

and there are *two* editors

there is a built-in extension for inline clickable thumbnails:
* enable it by adding `<!-- th -->` somewhere in the doc
* add thumbnails with `!th[l](your.jpg)` where `l` means left-align (`r` = right-align)
* a single line with `---` clears the float / inlining
* in the case of README.md being displayed below a file listing, thumbnails will open in the gallery viewer

other notes:
* the document preview has a max-width which is the same as an A4 paper when printed

@@ -688,7 +795,8 @@ for the above example to work, add the commandline argument `-e2ts` to also scan

using arguments or config files, or a mix of both:
* config files (`-c some.conf`) can set additional commandline arguments; see [./docs/example.conf](docs/example.conf) and [./docs/example2.conf](docs/example2.conf)
* `kill -s USR1` (same as `systemctl reload copyparty`) to reload accounts and volumes from config files without restarting
  * or click the `[reload cfg]` button in the control-panel when logged in as admin
  * or click the `[reload cfg]` button in the control-panel if the user has `a`/admin in any volume
  * changes to the `[global]` config section require a restart to take effect

## zeroconf

@@ -747,6 +855,13 @@ an FTP server can be started using `--ftp 3921`, and/or `--ftps` for explicit T

* some older software (filezilla on debian-stable) cannot do passive mode with TLS
* login with any username + your password, or put your password in the username field

some recommended FTP / FTPS clients; `wark` = example password:
* https://winscp.net/eng/download.php
* https://filezilla-project.org/ struggles a bit with ftps in active-mode, but is fine otherwise
* https://rclone.org/ does FTPS with `tls=false explicit_tls=true`
* `lftp -u k,wark -p 3921 127.0.0.1 -e ls`
* `lftp -u k,wark -p 3990 127.0.0.1 -e 'set ssl:verify-certificate no; ls'`
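
for scripting, python's standard `ftplib` can talk to the same server; this sketch mirrors the two `lftp` lines above (username `k`, example password `wark`, ports 3921 and 3990 on localhost). `make_client` and `list_root` are hypothetical names, and certificate verification is switched off to match `ssl:verify-certificate no`, which only makes sense for a self-signed cert on a network you trust:

```python
import ftplib
import ssl


def make_client(tls: bool) -> ftplib.FTP:
    """Create an unconnected client; FTP_TLS does explicit TLS (AUTH TLS), like --ftps."""
    if not tls:
        return ftplib.FTP()
    ctx = ssl.create_default_context()
    # same as lftp's `set ssl:verify-certificate no`: accept a self-signed cert
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    return ftplib.FTP_TLS(context=ctx)


def list_root(host: str, port: int, password: str, tls: bool = False, user: str = "k") -> list:
    """Log in with any username + the account password and list the top folder."""
    ftp = make_client(tls)
    ftp.connect(host, port, timeout=10)
    try:
        ftp.login(user, password)  # FTP_TLS.login() sends AUTH TLS before the password
        if tls:
            ftp.prot_p()  # encrypt the data connection too
        return ftp.nlst()  # ftplib uses passive mode by default
    finally:
        ftp.close()


# list_root("127.0.0.1", 3921, "wark")            # plain FTP
# list_root("127.0.0.1", 3990, "wark", tls=True)  # explicit FTPS
```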

## webdav server

@@ -760,6 +875,8 @@ general usage:

on macos, connect from finder:
* [Go] -> [Connect to Server...] -> http://192.168.123.1:3923/

in order to grant full write-access to webdav clients, the volflag `daw` must be set and the account must also have delete-access (otherwise the client won't be allowed to replace the contents of existing files, which is how webdav works)

### connecting to webdav from windows

@@ -799,7 +916,7 @@ some **BIG WARNINGS** specific to SMB/CIFS, in decreasing importance:

and some minor issues:
* clients only see the first ~400 files in big folders; [impacket#1433](https://github.com/SecureAuthCorp/impacket/issues/1433)
* hot-reload of server config (`/?reload=cfg`) only works for volumes, not account passwords
* hot-reload of server config (`/?reload=cfg`) does not include the `[global]` section (commandline args)
* listens on the first IPv4 `-i` interface only (default = :: = 0.0.0.0 = all)
* login doesn't work on winxp, but anonymous access is ok -- remove all accounts from copyparty config for that to work
  * win10 onwards does not allow connecting anonymously / without accounts

@@ -839,14 +956,13 @@ through arguments:

* `--xlink` enables deduplication across volumes

the same arguments can be set as volflags, in addition to `d2d`, `d2ds`, `d2t`, `d2ts`, `d2v` for disabling:
* `-v ~/music::r:c,e2dsa,e2tsr` does a full reindex of everything on startup
* `-v ~/music::r:c,e2ds,e2tsr` does a full reindex of everything on startup
* `-v ~/music::r:c,d2d` disables **all** indexing, even if any `-e2*` are on
* `-v ~/music::r:c,d2t` disables all `-e2t*` (tags), does not affect `-e2d*`
* `-v ~/music::r:c,d2ds` disables on-boot scans; only index new uploads
* `-v ~/music::r:c,d2ts` same except only affecting tags

note:
* the parser can finally handle `c,e2dsa,e2tsr` so you no longer have to `c,e2dsa:c,e2tsr`
* `e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and `e2ts` would then reindex those, unless there is a new copyparty version with new parsers and the release note says otherwise
* the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher
* deduplication is possible on windows if you run copyparty as administrator (not saying you should!)

@@ -868,7 +984,11 @@ avoid traversing into other filesystems using `--xdev` / volflag `:c,xdev`, ski

and/or you can `--xvol` / `:c,xvol` to ignore all symlinks leaving the volume's top directory, but still allow bind-mounts pointing elsewhere

**NB: only affects the indexer** -- users can still access anything inside a volume, unless shadowed by another volume
* symlinks are permitted with `xvol` if they point into another volume where the user has the same level of access

these options will reduce performance; unlikely worst-case estimates are 14% reduction for directory listings, 35% for download-as-tar

as of copyparty v1.7.0 these options also prevent file access at runtime -- in previous versions they were just hints for the indexer
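
a rough sketch of the `xvol` idea, assuming it amounts to resolving symlinks with `os.path.realpath` and checking that the result stays below the volume root or below another volume where the user has the same access; `allowed_by_xvol` is hypothetical and the real check may differ. a bind-mount is not a symlink, so `realpath` leaves it inside the volume, which lines up with the bind-mount exception described above:

```python
import os
from typing import Iterable


def _inside(path: str, root: str) -> bool:
    """True if `path` equals `root` or lies somewhere below it (component-wise, not string-prefix)."""
    return os.path.commonpath([path, root]) == root


def allowed_by_xvol(path: str, vol_root: str, other_roots: Iterable[str] = ()) -> bool:
    """Hypothetical xvol check.

    `path` is resolved through every symlink; `other_roots` stands in for the
    other volumes where the user has the same level of access.
    """
    real = os.path.realpath(path)
    roots = [os.path.realpath(r) for r in (vol_root, *other_roots)]
    return any(_inside(real, r) for r in roots)
```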

### periodic rescan

@@ -885,6 +1005,8 @@ set upload rules using volflags, some examples:

* `:c,sz=1k-3m` sets allowed filesize between 1 KiB and 3 MiB inclusive (suffixes: `b`, `k`, `m`, `g`)
* `:c,df=4g` blocks uploads if there would be less than 4 GiB free disk space afterwards
* `:c,vmaxb=1g` blocks uploads if total volume size would exceed 1 GiB afterwards
* `:c,vmaxn=4k` blocks uploads if volume would contain more than 4096 files afterwards
* `:c,nosub` disallows uploading into subdirectories; goes well with `rotn` and `rotf`:
  * `:c,rotn=1000,2` moves uploads into subfolders, up to 1000 files in each folder before making a new one, two levels deep (must be at least 1)
  * `:c,rotf=%Y/%m/%d/%H` forces uploads into a structure of subfolders named according to that date format
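
a sketch of what the `sz` and `rotf` rules mean, assuming binary size suffixes (1k = 1024 bytes, consistent with "1 KiB" and "4k = 4096 files" above) and plain `strftime` codes for `rotf`; `parse_size`, `size_allowed` and `rotf_folder` are hypothetical helpers, not copyparty's parser, and which clock / timezone copyparty uses for `rotf` is left to the caller here:

```python
from datetime import datetime

# binary multipliers, matching "1 KiB" / "3 MiB" in the sz example
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_size(text: str) -> int:
    """'1k' -> 1024, '3m' -> 3145728, '500' or '500b' -> 500 (hypothetical parser)."""
    text = text.strip().lower()
    if text and text[-1] in UNITS:
        return int(text[:-1]) * UNITS[text[-1]]
    return int(text)


def size_allowed(nbytes: int, rule: str) -> bool:
    """Check an upload size against an sz-style range like '1k-3m' (both ends inclusive)."""
    lo, hi = (parse_size(part) for part in rule.split("-", 1))
    return lo <= nbytes <= hi


def rotf_folder(fmt: str, when: datetime) -> str:
    """Subfolder an upload would land in for rotf=<fmt>, e.g. '%Y/%m/%d/%H'."""
    return when.strftime(fmt)


print(size_allowed(1023, "1k-3m"))  # False
print(rotf_folder("%Y/%m/%d/%H", datetime(2023, 7, 9, 5, 30)))  # 2023/07/09/05
```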

@@ -898,6 +1020,9 @@ you can also set transaction limits which apply per-IP and per-volume, but these

* `:c,maxn=250,3600` allows 250 files over 1 hour from each IP (tracked per-volume)
* `:c,maxb=1g,300` allows 1 GiB total over 5 minutes from each IP (tracked per-volume)

notes:
* `vmaxb` and `vmaxn` require either the `e2ds` volflag or `-e2dsa` global-option
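
one way to picture the `maxn` / `maxb` transaction limits is a sliding window per IP and per volume; the sketch below is a hypothetical illustration (the class and method names are made up, and copyparty may well use fixed windows or different bookkeeping):

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Tuple


class UploadLimiter:
    """Sliding-window limit keyed by (volume, ip): at most `limit` units per `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        # (volume, ip) -> deque of (timestamp, cost), oldest first
        self.events: Dict[Tuple[str, str], Deque[Tuple[float, int]]] = defaultdict(deque)

    def try_add(self, volume: str, ip: str, cost: int = 1, now: Optional[float] = None) -> bool:
        """Record the upload and return True, or return False if it would exceed the limit."""
        now = time.monotonic() if now is None else now
        q = self.events[(volume, ip)]
        # forget uploads that have slid out of the window
        while q and q[0][0] <= now - self.window:
            q.popleft()
        if sum(c for _, c in q) + cost > self.limit:
            return False
        q.append((now, cost))
        return True
```

`UploadLimiter(250, 3600)` models `maxn=250,3600` with `cost=1` per file, and `UploadLimiter(1024**3, 300)` models `maxb=1g,300` with `cost` set to each upload's size in bytes.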

## compress uploads

@@ -1028,6 +1153,13 @@ note that this is way more complicated than the new [event hooks](#event-hooks)

note that it will occupy the parsing threads, so fork anything expensive (or set `kn` to have copyparty fork it for you) -- otoh if you want to intentionally queue/singlethread you can combine it with `--mtag-mt 1`

## handlers

redefine behavior with plugins ([examples](./bin/handlers/))

replace 404 and 403 errors with something completely different (that's it for now)

## hiding from google

tell search engines you don't wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

@@ -1064,7 +1196,33 @@ see the top of [./copyparty/web/browser.css](./copyparty/web/browser.css) where

## complete examples

* read-only music server
* see [running on windows](./docs/examples/windows.md) for a fancy windows setup

  * or use any of the examples below, just replace `python copyparty-sfx.py` with `copyparty.exe` if you're using the exe edition

* allow anyone to download or upload files into the current folder:
  `python copyparty-sfx.py`

  * enable searching and music indexing with `-e2dsa -e2ts`

  * start an FTP server on port 3921 with `--ftp 3921`

  * announce it on your LAN with `-z` so it appears in windows/Linux file managers

* anyone can upload, but nobody can see any files (even the uploader):
  `python copyparty-sfx.py -e2dsa -v .::w`

  * block uploads if there's less than 4 GiB free disk space with `--df 4`

  * show a popup on new uploads with `--xau bin/hooks/notify.py`

* anyone can upload, and receive "secret" links for each upload they do:
  `python copyparty-sfx.py -e2dsa -v .::wG:c,fk=8`

* anyone can browse, only `kevin` (password `okgo`) can upload/move/delete files:
  `python copyparty-sfx.py -e2dsa -a kevin:okgo -v .::r:rwmd,kevin`

* read-only music server:
  `python copyparty-sfx.py -v /mnt/nas/music:/music:r -e2dsa -e2ts --no-robots --force-js --theme 2`

  * ...with bpm and key scanning
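
the `-v` arguments above pack source folder, url path, permissions and volflags into one colon-separated string; this sketch splits them the way the examples read (`src:dst:perms[,user...]:c,flag[=value],...`). `parse_volume` is hypothetical and simplified: it assumes there are no colons inside the paths (so no windows drive letters) and that a permission group without usernames applies to everyone:

```python
from typing import Dict, Union

Flags = Dict[str, Union[str, bool]]


def parse_volume(arg: str) -> dict:
    """Split a -v argument such as '.::r:rwmd,kevin' into its parts (hypothetical, simplified)."""
    src, dst, *groups = arg.split(":")
    perms: Dict[str, str] = {}  # username ("*" = everyone) -> permission letters
    flags: Flags = {}  # volflag -> value, or True for bare flags like e2ds
    for group in groups:
        head, *rest = group.split(",")
        if head == "c":
            # a volflag group: c,flag1,flag2=value,...
            for item in rest:
                key, sep, value = item.partition("=")
                flags[key] = value if sep else True
        else:
            # a permission group: letters first, then the users they apply to
            for user in rest or ["*"]:
                perms[user] = perms.get(user, "") + head
    return {"src": src, "dst": "/" + dst.strip("/"), "perms": perms, "flags": flags}


print(parse_volume(".::r:rwmd,kevin"))
# {'src': '.', 'dst': '/', 'perms': {'*': 'r', 'kevin': 'rwmd'}, 'flags': {}}
```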

@@ -1079,19 +1237,194 @@ see the top of [./copyparty/web/browser.css](./copyparty/web/browser.css) where

## reverse-proxy

running copyparty next to other websites hosted on an existing webserver such as nginx or apache
running copyparty next to other websites hosted on an existing webserver such as nginx, caddy, or apache

you can either:
* give copyparty its own domain or subdomain (recommended)
* or do location-based proxying, using `--rp-loc=/stuff` to tell copyparty where it is mounted -- has a slight performance cost and higher chance of bugs
  * if copyparty says `incorrect --rp-loc or webserver config; expected vpath starting with [...]` it's likely because the webserver is stripping away the proxy location from the request URLs -- see the `ProxyPass` in the apache example below

some reverse proxies (such as [Caddy](https://caddyserver.com/)) can automatically obtain a valid https/tls certificate for you, and some support HTTP/2 and QUIC which could be a nice speed boost
* **warning:** nginx-QUIC is still experimental and can make uploads much slower, so HTTP/2 is recommended for now

example webserver configs:

* [nginx config](contrib/nginx/copyparty.conf) -- entire domain/subdomain
* [apache2 config](contrib/apache/copyparty.conf) -- location-based

## prometheus

metrics/stats can be enabled at URL `/.cpr/metrics` for grafana / prometheus / etc (openmetrics 1.0.0)

must be enabled with `--stats` (it is off by default since it increases startup time a tiny bit), and you probably want `-e2dsa` too

the endpoint is only accessible by `admin` accounts, meaning the `a` in `rwmda` in the following example commandline: `python3 -m copyparty -a ed:wark -v /mnt/nas::rwmda,ed --stats -e2dsa`

follow a guide for setting up `node_exporter` except have it read from copyparty instead; example `/etc/prometheus/prometheus.yml` below

```yaml
scrape_configs:
  - job_name: copyparty
    metrics_path: /.cpr/metrics
    basic_auth:
      password: wark
    static_configs:
      - targets: ['192.168.123.1:3923']
```

currently the following metrics are available:
* `cpp_uptime_seconds`
* `cpp_bans` number of banned IPs

and these are available per-volume only:
* `cpp_disk_size_bytes` total HDD size
* `cpp_disk_free_bytes` free HDD space

and these are per-volume and `total`:
* `cpp_vol_bytes` size of all files in volume
* `cpp_vol_files` number of files
* `cpp_dupe_bytes` disk space presumably saved by deduplication
* `cpp_dupe_files` number of dupe files
* `cpp_unf_bytes` currently unfinished / incoming uploads

some of the metrics have additional requirements to function correctly:
* `cpp_vol_*` requires either the `e2ds` volflag or `-e2dsa` global-option

the following options are available to disable some of the metrics:
* `--nos-hdd` disables `cpp_disk_*` which can prevent spinning up HDDs
* `--nos-vol` disables `cpp_vol_*` which reduces server startup time
* `--nos-dup` disables `cpp_dupe_*` which reduces the server load caused by prometheus queries
* `--nos-unf` disables `cpp_unf_*` for no particular purpose
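
to poke at the endpoint without a full prometheus setup, a short script can fetch `/.cpr/metrics` with basic-auth and list the `cpp_*` samples. `fetch_metrics` and `parse_samples` are hypothetical names; like the yaml example it only sends a password (empty username), and the parser handles simple `name{labels} value` lines only, so it is not a complete openmetrics parser (no timestamps, exemplars or escaping):

```python
import base64
import urllib.request
from typing import List, Tuple


def fetch_metrics(base_url: str, password: str, user: str = "") -> str:
    """GET /.cpr/metrics with HTTP basic auth; the account needs admin (`a`) access."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        base_url.rstrip("/") + "/.cpr/metrics",
        headers={"Authorization": "Basic " + token},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8")


def parse_samples(text: str, prefix: str = "cpp_") -> List[Tuple[str, str, float]]:
    """Return (name, labels, value) for each sample line starting with `prefix`."""
    out = []
    for line in text.splitlines():
        if not line.startswith(prefix):
            continue  # skips '# HELP' / '# TYPE' / '# EOF' lines
        series, _, value = line.rpartition(" ")  # value is the last field
        name, _, labels = series.partition("{")
        out.append((name, labels.rstrip("}"), float(value)))
    return out
```

e.g. `parse_samples(fetch_metrics("http://192.168.123.1:3923", "wark"))`, using the example address and password from the yaml above.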

# packages

the party might be closer than you think

## arch package

now [available on aur](https://aur.archlinux.org/packages/copyparty) maintained by [@icxes](https://github.com/icxes)

## fedora package

now [available on copr-pypi](https://copr.fedorainfracloud.org/coprs/g/copr/PyPI/), maintained autonomously -- [track record](https://copr.fedorainfracloud.org/coprs/g/copr/PyPI/package/python-copyparty/) seems OK

```bash
dnf copr enable @copr/PyPI
dnf install python3-copyparty # just a minimal install, or...
dnf install python3-{copyparty,pillow,argon2-cffi,pyftpdlib,pyOpenSSL} ffmpeg-free # with recommended deps
```

this *may* also work on RHEL but [I'm not paying IBM to verify that](https://www.jeffgeerling.com/blog/2023/dear-red-hat-are-you-dumb)

## nix package

`nix profile install github:9001/copyparty`

requires a [flake-enabled](https://nixos.wiki/wiki/Flakes) installation of nix

some recommended dependencies are enabled by default; [override the package](https://github.com/9001/copyparty/blob/hovudstraum/contrib/package/nix/copyparty/default.nix#L3-L22) if you want to add/remove some features/deps

`ffmpeg-full` was chosen over `ffmpeg-headless` mainly because we need `withWebp` (and `withOpenmpt` is also nice) and being able to use a cached build felt more important than optimizing for size at the time -- PRs welcome if you disagree 👍

## nixos module

for this setup, you will need a [flake-enabled](https://nixos.wiki/wiki/Flakes) installation of NixOS.

```nix
{
  # add copyparty flake to your inputs
  inputs.copyparty.url = "github:9001/copyparty";

  # ensure that copyparty is an allowed argument to the outputs function
  outputs = { self, nixpkgs, copyparty }: {
    nixosConfigurations.yourHostName = nixpkgs.lib.nixosSystem {
      modules = [
        # load the copyparty NixOS module
        copyparty.nixosModules.default
        ({ pkgs, ... }: {
          # add the copyparty overlay to expose the package to the module
          nixpkgs.overlays = [ copyparty.overlays.default ];
          # (optional) install the package globally
          environment.systemPackages = [ pkgs.copyparty ];
          # configure the copyparty module
          services.copyparty.enable = true;
        })
      ];
    };
  };
}
```

copyparty on NixOS is configured via `services.copyparty` options, for example:
```nix
services.copyparty = {
  enable = true;
  # directly maps to values in the [global] section of the copyparty config.
  # see `copyparty --help` for available options
  settings = {
    i = "0.0.0.0";
    # use lists to set multiple values
    p = [ 3210 3211 ];
    # use booleans to set binary flags
    no-reload = true;
    # using 'false' will do nothing and omit the value when generating a config
    ignored-flag = false;
  };

  # create users
  accounts = {
    # specify the account name as the key
    ed = {
      # provide the path to a file containing the password, keeping it out of /nix/store
      # must be readable by the copyparty service user
      passwordFile = "/run/keys/copyparty/ed_password";
    };
    # or do both in one go
    k.passwordFile = "/run/keys/copyparty/k_password";
  };

  # create a volume
  volumes = {
    # create a volume at "/" (the webroot), which will
    "/" = {
      # share the contents of "/srv/copyparty"
      path = "/srv/copyparty";