Compare commits

127 Commits


147

README.md


@@ -66,12 +66,15 @@ turn almost any device into a file server with resumable uploads/downloads using

* [file parser plugins](#file-parser-plugins) - provide custom parsers to index additional tags
* [event hooks](#event-hooks) - trigger a program on uploads, renames etc ([examples](./bin/hooks/))
  * [upload events](#upload-events) - the older, more powerful approach ([examples](./bin/mtag/))
* [handlers](#handlers) - redefine behavior with plugins ([examples](./bin/handlers/))
* [hiding from google](#hiding-from-google) - tell search engines you dont wanna be indexed
* [themes](#themes)
* [complete examples](#complete-examples)
* [reverse-proxy](#reverse-proxy) - running copyparty next to other websites
* [prometheus](#prometheus) - metrics/stats can be enabled
* [packages](#packages) - the party might be closer than you think
  * [arch package](#arch-package) - now [available on aur](https://aur.archlinux.org/packages/copyparty) maintained by [@icxes](https://github.com/icxes)
  * [fedora package](#fedora-package) - now [available on copr-pypi](https://copr.fedorainfracloud.org/coprs/g/copr/PyPI/)
  * [nix package](#nix-package) - `nix profile install github:9001/copyparty`
  * [nixos module](#nixos-module)
* [browser support](#browser-support) - TLDR: yes

@@ -82,9 +85,10 @@ turn almost any device into a file server with resumable uploads/downloads using

* [iOS shortcuts](#iOS-shortcuts) - there is no iPhone app, but
* [performance](#performance) - defaults are usually fine - expect `8 GiB/s` download, `1 GiB/s` upload
  * [client-side](#client-side) - when uploading files
* [security](#security) - there is a [discord server](https://discord.gg/25J8CdTT6G)
  * [gotchas](#gotchas) - behavior that might be unexpected
  * [cors](#cors) - cross-site request config
  * [password hashing](#password-hashing) - you can hash passwords
  * [https](#https) - both HTTP and HTTPS are accepted
* [recovering from crashes](#recovering-from-crashes)
  * [client crashes](#client-crashes)

@@ -106,7 +110,7 @@ just run **[copyparty-sfx.py](https://github.com/9001/copyparty/releases/latest/

* or install through pypi: `python3 -m pip install --user -U copyparty`
* or if you cannot install python, you can use [copyparty.exe](#copypartyexe) instead
* or install [on arch](#arch-package) ╱ [on fedora](#fedora-package) ╱ [on NixOS](#nixos-module) ╱ [through nix](#nix-package)
* or if you are on android, [install copyparty in termux](#install-on-android)
* or if you prefer to [use docker](./scripts/docker/) 🐋 you can do that too
  * docker has all deps built-in, so skip this step:

@@ -281,8 +285,11 @@ server notes:

* Android: music playback randomly stops due to [battery usage settings](#fix-unreliable-playback-on-android)

* iPhones: the volume control doesn't work because [apple doesn't want it to](https://developer.apple.com/library/archive/documentation/AudioVideo/Conceptual/Using_HTML5_Audio_Video/Device-SpecificConsiderations/Device-SpecificConsiderations.html#//apple_ref/doc/uid/TP40009523-CH5-SW11)
  * *future workaround:* enable the equalizer, make it all-zero, and set a negative boost to reduce the volume
    * "future" because `AudioContext` can't maintain a stable playback speed in the current iOS version (15.7), maybe one day...
    * `AudioContext` will probably never be a viable workaround as apple introduces new issues faster than they fix current ones

* iPhones: the preload feature (in the media-player-options tab) can cause a tiny audio glitch 20sec before the end of each song, but disabling it may cause worse iOS bugs to appear instead
  * just a hunch, but disabling preloading may cause playback to stop entirely, or possibly mess with bluetooth speakers
  * tried to add a tooltip regarding this but looks like apple broke my tooltips

* Windows: folders cannot be accessed if the name ends with `.`
  * python or windows bug

@@ -292,6 +299,7 @@ server notes:

* VirtualBox: sqlite throws `Disk I/O Error` when running in a VM and the up2k database is in a vboxsf
  * use `--hist` or the `hist` volflag (`-v [...]:c,hist=/tmp/foo`) to place the db inside the vm instead
  * also happens on mergerfs, so put the db elsewhere

* Ubuntu: dragging files from certain folders into firefox or chrome is impossible
  * due to snap security policies -- see `snap connections firefox` for the allowlist, `removable-media` permits all of `/mnt` and `/media` apparently

@@ -324,7 +332,7 @@ upgrade notes

# accounts and volumes

per-folder, per-user permissions - if your setup is getting complex, consider making a [config file](./docs/example.conf) instead of using arguments
* much easier to manage, and you can modify the config at runtime with `systemctl reload copyparty` or more conveniently using the `[reload cfg]` button in the control-panel (if the user has `a`/admin in any volume)
  * changes to the `[global]` config section require a restart to take effect

a quick summary can be seen using `--help-accounts`

@@ -343,6 +351,7 @@ permissions:

* `d` (delete): delete files/folders
* `g` (get): only download files, cannot see folder contents or zip/tar
* `G` (upget): same as `g` except uploaders get to see their own filekeys (see `fk` in examples below)
* `a` (admin): can see uploader IPs, config-reload

examples:
* add accounts named u1, u2, u3 with passwords p1, p2, p3: `-a u1:p1 -a u2:p2 -a u3:p3`

@@ -471,6 +480,7 @@ click the `🌲` or pressing the `B` hotkey to toggle between breadcrumbs path (

## thumbnails

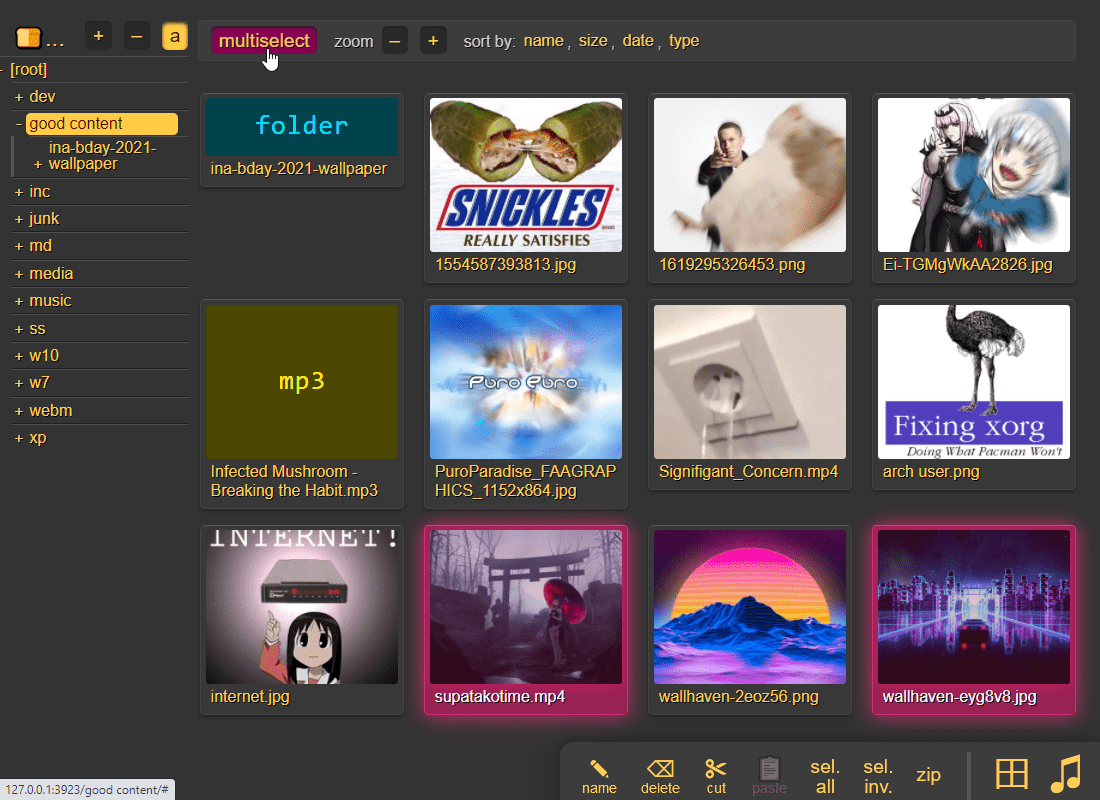

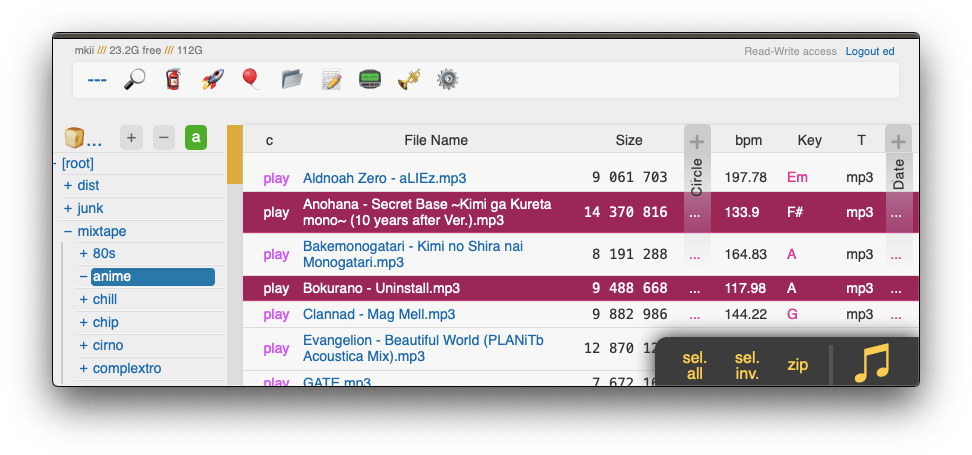

press `g` or `田` to toggle grid-view instead of the file listing and `t` toggles icons / thumbnails
* can be made default globally with `--grid` or per-volume with volflag `grid`

@@ -481,10 +491,14 @@ it does static images with Pillow / pyvips / FFmpeg, and uses FFmpeg for video f

audio files are converted into spectrograms using FFmpeg unless you `--no-athumb` (and some FFmpeg builds may need `--th-ff-swr`)

images with the following names (see `--th-covers`) become the thumbnail of the folder they're in: `folder.png`, `folder.jpg`, `cover.png`, `cover.jpg`
* and, if you enable [file indexing](#file-indexing), all remaining folders will also get thumbnails (as long as they contain any pics at all)
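
As a rough illustration of the folder-cover lookup, here is a minimal Python sketch that picks the thumbnail for a folder from its file listing. It assumes the first name from the list above that is present wins and that names are compared case-insensitively; both are assumptions for illustration, and `pick_cover` is a hypothetical helper, not copyparty's own code.

```python
from typing import Iterable, Optional, Sequence

# the default names listed above (see --th-covers for changing them)
COVER_NAMES = ("folder.png", "folder.jpg", "cover.png", "cover.jpg")


def pick_cover(filenames: Iterable[str], covers: Sequence[str] = COVER_NAMES) -> Optional[str]:
    """Return the file that would act as the folder thumbnail, or None.

    Assumption: matching is case-insensitive and the earliest entry in
    `covers` that exists in the folder wins.
    """
    by_lower = {name.lower(): name for name in filenames}
    for cover in covers:
        if cover in by_lower:
            return by_lower[cover]  # keep the original spelling of the filename
    return None


print(pick_cover(["01.flac", "Cover.JPG", "folder.png"]))  # folder.png
print(pick_cover(["01.flac", "02.flac"]))  # None
```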

in the grid/thumbnail view, if the audio player panel is open, songs will start playing when clicked
* indicated by the audio files having the ▶ icon instead of 💾

enabling `multiselect` lets you click files to select them, and then shift-click another file for range-select
* `multiselect` is mostly intended for phones/tablets, but the `sel` option in the `[⚙️] settings` tab is better suited for desktop use, allowing selection by CTRL-clicking and range-selection with SHIFT-click, all without affecting regular clicking


## zip downloads

@@ -495,10 +509,16 @@ select which type of archive you want in the `[⚙️] config` tab:

| name | url-suffix | description |
|--|--|--|
| `tar` | `?tar` | plain gnutar, works great with `curl \| tar -xv` |
| `tar.gz` | `?tar=gz` | gzip compressed tar, for `curl \| tar -xvz` |
| `tar.xz` | `?tar=xz` | gnu-tar with xz / lzma compression (good) |
| `tar.bz2` | `?tar=bz2` | bzip2-compressed tar (mostly useless) |
| `zip` | `?zip=utf8` | works everywhere, glitchy filenames on win7 and older |
| `zip_dos` | `?zip` | traditional cp437 (no unicode) to fix glitchy filenames |
| `zip_crc` | `?zip=crc` | cp437 with crc32 computed early for truly ancient software |

* gzip default level is `3` (0=fast, 9=best), change with `?tar=gz:9`
* xz default level is `1` (0=fast, 9=best), change with `?tar=xz:9`
* bz2 default level is `2` (1=fast, 9=best), change with `?tar=bz2:9`
* hidden files (dotfiles) are excluded unless `-ed`
* `up2k.db` and `dir.txt` are always excluded
* `zip_crc` will take longer to download since the server has to read each file twice
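
When scripting downloads, the table and compression-level notes above can be turned into a small lookup. A minimal sketch, assuming you want to build the url-suffix from an archive name plus an optional level; `archive_suffix` and the `example.com` URL are hypothetical.

```python
from typing import Optional

# url-suffixes from the archive table above
SUFFIXES = {
    "tar": "?tar",
    "tar.gz": "?tar=gz",
    "tar.xz": "?tar=xz",
    "tar.bz2": "?tar=bz2",
    "zip": "?zip=utf8",
    "zip_dos": "?zip",
    "zip_crc": "?zip=crc",
}

# accepted compression levels, per the notes below the table
LEVELS = {"tar.gz": range(0, 10), "tar.xz": range(0, 10), "tar.bz2": range(1, 10)}


def archive_suffix(kind: str, level: Optional[int] = None) -> str:
    """Return the url-suffix for an archive type, optionally with a compression level."""
    suffix = SUFFIXES[kind]
    if level is None:
        return suffix
    if kind not in LEVELS or level not in LEVELS[kind]:
        raise ValueError(f"{kind} does not accept compression level {level}")
    return f"{suffix}:{level}"


print("https://example.com/music/" + archive_suffix("tar.gz", 9))
# https://example.com/music/?tar=gz:9
```

The resulting URL can then be piped into `tar -xz` with `curl`, as suggested in the table.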

@@ -509,6 +529,10 @@ you can also zip a selection of files or folders by clicking them in the browser

cool trick: download a folder by appending url-params `?tar&opus` to transcode all audio files (except aac|m4a|mp3|ogg|opus|wma) to opus before they're added to the archive
* super useful if you're 5 minutes away from takeoff and realize you don't have any music on your phone but your server only has flac files and downloading those will burn through all your data + there wouldn't be enough time anyways
* and url-params `&j` / `&w` produce jpeg/webm thumbnails/spectrograms instead of the original audio/video/images


## uploading

@@ -607,6 +631,7 @@ file selection: click somewhere on the line (not the link itsef), then:

* `up/down` to move
* `shift-up/down` to move-and-select
* `ctrl-shift-up/down` to also scroll
* shift-click another line for range-select

* cut: select some files and `ctrl-x`
* paste: `ctrl-v` in another folder

@@ -690,7 +715,7 @@ open the `[🎺]` media-player-settings tab to configure it,

* `[loop]` keeps looping the folder
* `[next]` plays into the next folder
* transcode:
  * `[flac]` converts `flac` and `wav` files into opus
  * `[aac]` converts `aac` and `m4a` files into opus
  * `[oth]` converts all other known formats into opus
    * `aac|ac3|aif|aiff|alac|alaw|amr|ape|au|dfpwm|dts|flac|gsm|it|m4a|mo3|mod|mp2|mp3|mpc|mptm|mt2|mulaw|ogg|okt|opus|ra|s3m|tak|tta|ulaw|wav|wma|wv|xm|xpk`
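
The three transcode toggles split the known formats into groups. A sketch of that grouping, assuming classification is by file extension only; `transcode_toggle` is hypothetical, and it only mirrors the grouping described above (it does not model what copyparty does with files that are already opus).

```python
import os
from typing import Optional

# known audio formats, copied from the list above
KNOWN = set(
    "aac|ac3|aif|aiff|alac|alaw|amr|ape|au|dfpwm|dts|flac|gsm|it|m4a|mo3|mod|mp2|mp3|mpc|mptm"
    "|mt2|mulaw|ogg|okt|opus|ra|s3m|tak|tta|ulaw|wav|wma|wv|xm|xpk".split("|")
)


def transcode_toggle(filename: str) -> Optional[str]:
    """Return "flac", "aac" or "oth" (the toggle covering this file), or None if unknown."""
    ext = os.path.splitext(filename)[1].lstrip(".").lower()
    if ext in ("flac", "wav"):
        return "flac"
    if ext in ("aac", "m4a"):
        return "aac"
    if ext in KNOWN:
        return "oth"
    return None
```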

@@ -705,6 +730,8 @@ can also boost the volume in general, or increase/decrease stereo width (like [c

has the convenient side-effect of reducing the pause between songs, so gapless albums play better with the eq enabled (just make it flat)

not available on iPhones / iPads because AudioContext currently breaks background audio playback on iOS (15.7.8)


### fix unreliable playback on android

@@ -768,7 +795,7 @@ for the above example to work, add the commandline argument `-e2ts` to also scan

using arguments or config files, or a mix of both:
* config files (`-c some.conf`) can set additional commandline arguments; see [./docs/example.conf](docs/example.conf) and [./docs/example2.conf](docs/example2.conf)
* `kill -s USR1` (same as `systemctl reload copyparty`) to reload accounts and volumes from config files without restarting
  * or click the `[reload cfg]` button in the control-panel if the user has `a`/admin in any volume
  * changes to the `[global]` config section require a restart to take effect

@@ -929,14 +956,13 @@ through arguments:

* `--xlink` enables deduplication across volumes

the same arguments can be set as volflags, in addition to `d2d`, `d2ds`, `d2t`, `d2ts`, `d2v` for disabling:
* `-v ~/music::r:c,e2ds,e2tsr` does a full reindex of everything on startup
* `-v ~/music::r:c,d2d` disables **all** indexing, even if any `-e2*` are on
* `-v ~/music::r:c,d2t` disables all `-e2t*` (tags), does not affect `-e2d*`
* `-v ~/music::r:c,d2ds` disables on-boot scans; only index new uploads
* `-v ~/music::r:c,d2ts` same except only affecting tags

note:
* the parser can finally handle `c,e2dsa,e2tsr` so you no longer have to `c,e2dsa:c,e2tsr`
* `e2tsr` is probably always overkill, since `e2ds`/`e2dsa` would pick up any file modifications and `e2ts` would then reindex those, unless there is a new copyparty version with new parsers and the release note says otherwise
* the rescan button in the admin panel has no effect unless the volume has `-e2ds` or higher
* deduplication is possible on windows if you run copyparty as administrator (not saying you should!)

@@ -979,6 +1005,8 @@ set upload rules using volflags, some examples:

* `:c,sz=1k-3m` sets allowed filesize between 1 KiB and 3 MiB inclusive (suffixes: `b`, `k`, `m`, `g`)
* `:c,df=4g` block uploads if there would be less than 4 GiB free disk space afterwards
* `:c,vmaxb=1g` block uploads if total volume size would exceed 1 GiB afterwards
* `:c,vmaxn=4k` block uploads if volume would contain more than 4096 files afterwards
* `:c,nosub` disallow uploading into subdirectories; goes well with `rotn` and `rotf`:
* `:c,rotn=1000,2` moves uploads into subfolders, up to 1000 files in each folder before making a new one, two levels deep (must be at least 1)
* `:c,rotf=%Y/%m/%d/%H` enforces files to be uploaded into a structure of subfolders according to that date format

@@ -992,6 +1020,9 @@ you can also set transaction limits which apply per-IP and per-volume, but these

* `:c,maxn=250,3600` allows 250 files over 1 hour from each IP (tracked per-volume)
* `:c,maxb=1g,300` allows 1 GiB total over 5 minutes from each IP (tracked per-volume)
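
`maxn` and `maxb` describe a per-IP, per-volume budget over a time window. The sketch below shows one way such a limit could be tracked, using a sliding window of timestamps per `(volume, ip)` key; it is an illustration only, not copyparty's actual bookkeeping (for example, whether the window slides or resets, or whether rejected uploads count, is not stated above), and `UploadLimiter` is a hypothetical name.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Tuple


class UploadLimiter:
    """Allow at most `limit` units per `window` seconds for each (volume, ip) pair."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        # (volume, ip) -> deque of (timestamp, amount), oldest first
        self.events: Dict[Tuple[str, str], Deque[Tuple[float, int]]] = defaultdict(deque)

    def allow(self, vol: str, ip: str, amount: int = 1, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        q = self.events[(vol, ip)]
        # forget events that have slid out of the window
        while q and q[0][0] <= now - self.window:
            q.popleft()
        used = sum(n for _, n in q)
        if used + amount > self.limit:
            return False  # over budget; nothing is recorded
        q.append((now, amount))
        return True


# maxn=250,3600: 250 files per hour per IP (amount = 1 per file)
files = UploadLimiter(250, 3600)
# maxb=1g,300: 1 GiB per 5 minutes per IP (amount = bytes)
nbytes = UploadLimiter(1024**3, 300)
```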

notes:
* `vmaxb` and `vmaxn` require either the `e2ds` volflag or `-e2dsa` global-option
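
Values such as `sz=1k-3m`, `vmaxb=1g` and `vmaxn=4k` use binary suffixes (the notes above say `1k-3m` means 1 KiB to 3 MiB inclusive, and `4k` files means 4096). A minimal parser for those values, assuming `b`/`k`/`m`/`g` are the only suffixes (as listed for `sz`) and that a bare number is taken as-is; `parse_size`, `parse_range` and `size_allowed` are hypothetical helpers.

```python
from typing import Tuple

# binary multipliers for the suffixes listed for `sz`
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_size(txt: str) -> int:
    """'4k' -> 4096, '1g' -> 1073741824; a bare number is returned as-is."""
    txt = txt.strip().lower()
    if txt and txt[-1] in UNITS:
        return int(float(txt[:-1]) * UNITS[txt[-1]])
    return int(txt)


def parse_range(txt: str) -> Tuple[int, int]:
    """'1k-3m' -> (1024, 3145728)."""
    lo, hi = txt.split("-", 1)
    return parse_size(lo), parse_size(hi)


def size_allowed(nbytes: int, rule: str) -> bool:
    """Check a filesize against an `sz`-style range; both ends are inclusive."""
    lo, hi = parse_range(rule)
    return lo <= nbytes <= hi
```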

## compress uploads

@@ -1122,6 +1153,13 @@ note that this is way more complicated than the new [event hooks](#event-hooks)

note that it will occupy the parsing threads, so fork anything expensive (or set `kn` to have copyparty fork it for you) -- otoh if you want to intentionally queue/singlethread you can combine it with `--mtag-mt 1`


## handlers

redefine behavior with plugins ([examples](./bin/handlers/))

replace 404 and 403 errors with something completely different (that's it for now)


## hiding from google

tell search engines you dont wanna be indexed, either using the good old [robots.txt](https://www.robotstxt.org/robotstxt.html) or through copyparty settings:

@@ -1207,6 +1245,7 @@ you can either:

* if copyparty says `incorrect --rp-loc or webserver config; expected vpath starting with [...]` it's likely because the webserver is stripping away the proxy location from the request URLs -- see the `ProxyPass` in the apache example below

some reverse proxies (such as [Caddy](https://caddyserver.com/)) can automatically obtain a valid https/tls certificate for you, and some support HTTP/2 and QUIC which could be a nice speed boost
* **warning:** nginx-QUIC is still experimental and can make uploads much slower, so HTTP/2 is recommended for now

example webserver configs:

@@ -1214,6 +1253,51 @@ example webserver configs:

* [apache2 config](contrib/apache/copyparty.conf) -- location-based


## prometheus

metrics/stats can be enabled at URL `/.cpr/metrics` for grafana / prometheus / etc (openmetrics 1.0.0)

must be enabled with `--stats` (it is off by default since that keeps startup a tiny bit faster), and you probably want `-e2dsa` too

the endpoint is only accessible by `admin` accounts, meaning the `a` in `rwmda` in the following example commandline: `python3 -m copyparty -a ed:wark -v /mnt/nas::rwmda,ed --stats -e2dsa`

follow a guide for setting up `node_exporter` except have it read from copyparty instead; example `/etc/prometheus/prometheus.yml` below

```yaml
scrape_configs:
  - job_name: copyparty
    metrics_path: /.cpr/metrics
    basic_auth:
      password: wark
    static_configs:
      - targets: ['192.168.123.1:3923']
```

currently the following metrics are available:
* `cpp_uptime_seconds`
* `cpp_bans` number of banned IPs

and these are available per-volume only:
* `cpp_disk_size_bytes` total HDD size
* `cpp_disk_free_bytes` free HDD space

and these are per-volume and `total`:
* `cpp_vol_bytes` size of all files in volume
* `cpp_vol_files` number of files
* `cpp_dupe_bytes` disk space presumably saved by deduplication
* `cpp_dupe_files` number of dupe files
* `cpp_unf_bytes` currently unfinished / incoming uploads

some of the metrics have additional requirements to function correctly:
* `cpp_vol_*` requires either the `e2ds` volflag or `-e2dsa` global-option

the following options are available to disable some of the metrics:
* `--nos-hdd` disables `cpp_disk_*` which can prevent spinning up HDDs
* `--nos-vol` disables `cpp_vol_*` which reduces server startup time
* `--nos-dup` disables `cpp_dupe_*` which reduces the server load caused by prometheus queries
* `--nos-unf` disables `cpp_unf_*` for no particular purpose
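
For a quick check without a full prometheus setup, the endpoint can be read directly. A minimal sketch using only the Python standard library, assuming the address and password from the `prometheus.yml` example above and that copyparty accepts the password in the basic-auth password field with an empty username (which is what that yml sends, since it sets no username). The parser only handles simple `name{labels} value` lines without timestamps, not the full OpenMetrics grammar.

```python
import base64
import urllib.request
from typing import List, Tuple


def fetch_metrics(base_url: str, password: str) -> str:
    """GET /.cpr/metrics with HTTP basic auth (empty username, like the yml above)."""
    token = base64.b64encode(f":{password}".encode()).decode()
    req = urllib.request.Request(
        base_url.rstrip("/") + "/.cpr/metrics",
        headers={"Authorization": "Basic " + token},
    )
    with urllib.request.urlopen(req, timeout=10) as r:
        return r.read().decode("utf-8")


def parse_samples(text: str) -> List[Tuple[str, str, float]]:
    """Return (name, labels, value) for each sample line; '#' lines are skipped."""
    out = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        head, _, value = line.rpartition(" ")  # value is the last field
        name, _, labels = head.partition("{")
        out.append((name, labels.rstrip("}"), float(value)))
    return out
```

Against the example server that would be `parse_samples(fetch_metrics("http://192.168.123.1:3923", "wark"))`, filtering on names such as `cpp_vol_files`.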


# packages

the party might be closer than you think

@@ -1224,6 +1308,19 @@ the party might be closer than you think

now [available on aur](https://aur.archlinux.org/packages/copyparty) maintained by [@icxes](https://github.com/icxes)


## fedora package

now [available on copr-pypi](https://copr.fedorainfracloud.org/coprs/g/copr/PyPI/), maintained autonomously -- [track record](https://copr.fedorainfracloud.org/coprs/g/copr/PyPI/package/python-copyparty/) seems OK

```bash
dnf copr enable @copr/PyPI
dnf install python3-copyparty # just a minimal install, or...
dnf install python3-{copyparty,pillow,argon2-cffi,pyftpdlib,pyOpenSSL} ffmpeg-free # with recommended deps
```

this *may* also work on RHEL but [I'm not paying IBM to verify that](https://www.jeffgeerling.com/blog/2023/dear-red-hat-are-you-dumb)


## nix package

`nix profile install github:9001/copyparty`

@@ -1506,10 +1603,14 @@ when uploading files,

# security

there is a [discord server](https://discord.gg/25J8CdTT6G) with an `@everyone` for all important updates (for lack of better ideas)

some notes on hardening

* set `--rproxy 0` if your copyparty is directly facing the internet (not through a reverse-proxy)
  * cors doesn't work right otherwise
* if you allow anonymous uploads or otherwise don't trust the contents of a volume, you can prevent XSS with volflag `nohtml`
  * this returns html documents as plaintext, and also disables markdown rendering

safety profiles:

@@ -1523,9 +1624,9 @@ safety profiles:

* `--unpost 0`, `--no-del`, `--no-mv` disables all move/delete support
* `--hardlink` creates hardlinks instead of symlinks when deduplicating uploads, which is less maintenance
  * however note if you edit one file it will also affect the other copies
* `--vague-403` returns a "404 not found" instead of "401 unauthorized" which is a common enterprise meme
* `--ban-404=50,60,1440` ban client for 1440min (24h) if they hit 50 404's in 60min
  * **NB:** will ban anyone who enables up2k turbo
  * `--turbo=-1` to force-disable turbo-mode in the uploader which could otherwise hit the 404-ban
* `--nih` removes the server hostname from directory listings

* option `-sss` is a shortcut for the above plus:

@@ -1547,10 +1648,12 @@ other misc notes:

behavior that might be unexpected

* users without read-access to a folder can still see the `.prologue.html` / `.epilogue.html` / `README.md` contents, for the purpose of showing a description on how to use the uploader for example
* users can submit `<script>`s which autorun (in a sandbox) for other visitors in a few ways:
  * uploading a `README.md` -- avoid with `--no-readme`
  * renaming `some.html` to `.epilogue.html` -- avoid with either `--no-logues` or `--no-dot-ren`
  * the directory-listing embed is sandboxed (so any malicious scripts can't do any damage) but the markdown editor is not 100% safe, see below
* markdown documents can contain html and `<script>`s; attempts are made to prevent scripts from executing (unless `-emp` is specified) but this is not 100% bulletproof, so setting the `nohtml` volflag is still the safest choice
  * or eliminate the problem entirely by only giving write-access to trustworthy people :^)


## cors

@@ -1565,12 +1668,28 @@ by default, except for `GET` and `HEAD` operations, all requests must either:

cors can be configured with `--acao` and `--acam`, or the protections entirely disabled with `--allow-csrf`


## password hashing

you can hash passwords before putting them into config files / providing them as arguments; see `--help-pwhash` for all the details

`--ah-alg argon2` enables it, and if you have any plaintext passwords then it'll print the hashed versions on startup so you can replace them

optionally also specify `--ah-cli` to enter an interactive mode where it will hash passwords without ever writing the plaintext ones to disk

the default configs take about 0.4 sec and 256 MiB RAM to process a new password on a decent laptop


## https

both HTTP and HTTPS are accepted by default, but letting a [reverse proxy](#reverse-proxy) handle the https/tls/ssl would be better (probably more secure by default)

copyparty doesn't speak HTTP/2 or QUIC, so using a reverse proxy would solve that as well

if [cfssl](https://github.com/cloudflare/cfssl/releases/latest) is installed, copyparty will automatically create a CA and server-cert on startup
* the certs are written to `--crt-dir` for distribution, see `--help` for the other `--crt` options
* this will be a self-signed certificate so you must install your `ca.pem` into all your browsers/devices
* if you want to avoid the hassle of distributing certs manually, please consider using a reverse proxy


# recovering from crashes

@@ -1607,6 +1726,8 @@ mandatory deps:

install these to enable bonus features

enable hashed passwords in config: `argon2-cffi`

enable ftp-server:
* for just plaintext FTP, `pyftpdlib` (is built into the SFX)
* with TLS encryption, `pyftpdlib pyopenssl`

35

bin/handlers/README.md

Normal file


@@ -0,0 +1,35 @@

replace the standard 404 / 403 responses with plugins


# usage

load plugins either globally with `--on404 ~/dev/copyparty/bin/handlers/sorry.py` or for a specific volume with `:c,on404=~/handlers/sorry.py`


# api

each plugin must define a `main()` which takes 3 arguments:

* `cli` is an instance of [copyparty/httpcli.py](https://github.com/9001/copyparty/blob/hovudstraum/copyparty/httpcli.py) (the monstrosity itself)
* `vn` is the VFS which overlaps with the requested URL, and
* `rem` is the URL remainder below the VFS mountpoint
  * so `vn.vpath + rem` == `cli.vpath` == original request
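
A minimal sketch of a plugin following the `main(cli, vn, rem)` contract above, modeled on the `sorry.py` example in this folder: it answers a missing file with a small JSON body instead of plain text. It only uses `cli.ip`, `cli.vpath`, `vn.vpath` and `cli.reply(...)` the way the examples here do; the JSON fields and the filename `json404.py` are made up for illustration.

```python
# json404.py (hypothetical example): answer a 404 with a small JSON document
import json


def main(cli, vn, rem):
    body = {
        "error": "not found",
        "path": cli.vpath,  # the original request
        "volume": vn.vpath,  # the VFS mountpoint overlapping the request
        "remainder": rem,  # the part of the URL below that mountpoint
        "client": cli.ip,
    }
    data = json.dumps(body).encode("utf-8")
    # same reply call and return convention as sorry.py
    return str(cli.reply(data, 404, "application/json"))
```

It would be loaded like the other examples, e.g. `--on404 ~/handlers/json404.py` (path hypothetical).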


# examples

## on404

* [sorry.py](sorry.py) replies with a custom message instead of the usual 404
* [nooo.py](nooo.py) replies with an endless noooooooooooooo
* [never404.py](never404.py) 100% guarantee that 404 will never be a thing again as it automatically creates dummy files whenever necessary
* [caching-proxy.py](caching-proxy.py) transforms copyparty into a squid/varnish knockoff

## on403

* [ip-ok.py](ip-ok.py) disables security checks if client-ip is 1.2.3.4


# notes

* on403 only works for trivial stuff (basic http access) since I haven't been able to think of any good usecases for it (was just easy to add while doing on404)
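
`ip-ok.py` (listed under on403 above) matches one exact address. A variant that trusts a whole subnet could use the standard-library `ipaddress` module; a sketch, with `192.168.1.0/24` as a placeholder network. Like `ip-ok.py`, it returns `"allow"` for matching clients and otherwise returns `None`, which presumably leaves the normal 403 in place.

```python
# on403 handler (sketch): skip permission checks for clients in a trusted subnet
import ipaddress

# placeholder; replace with your own LAN range(s)
TRUSTED = [ipaddress.ip_network("192.168.1.0/24")]


def main(cli, vn, rem):
    try:
        addr = ipaddress.ip_address(cli.ip)
    except ValueError:
        return None  # not a parseable IP; leave the 403 alone
    # an IPv6 address never matches an IPv4 network (and vice versa),
    # so IPv4-mapped addresses like ::ffff:192.168.1.5 will not match here
    if any(addr in net for net in TRUSTED):
        return "allow"
    return None
```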

36

bin/handlers/caching-proxy.py

Executable file


@@ -0,0 +1,36 @@

# assume each requested file exists on another webserver and
# download + mirror them as they're requested
# (basically pretend we're varnish)

import os
import requests

from typing import TYPE_CHECKING

if TYPE_CHECKING:
    from copyparty.httpcli import HttpCli


def main(cli: "HttpCli", vn, rem):
    url = "https://mirrors.edge.kernel.org/alpine/" + rem
    abspath = os.path.join(vn.realpath, rem)

    # sneaky trick to preserve a requests-session between downloads
    # so it doesn't have to spend ages reopening https connections;
    # luckily we can stash it inside the copyparty client session,
    # name just has to be definitely unused so "hacapo_req_s" it is
    req_s = getattr(cli.conn, "hacapo_req_s", None) or requests.Session()
    setattr(cli.conn, "hacapo_req_s", req_s)

    try:
        os.makedirs(os.path.dirname(abspath), exist_ok=True)
        with req_s.get(url, stream=True, timeout=69) as r:
            r.raise_for_status()
            with open(abspath, "wb", 64 * 1024) as f:
                for buf in r.iter_content(chunk_size=64 * 1024):
                    f.write(buf)
    except Exception:
        # drop the partial download; the file may not exist yet
        # if the request failed before it was opened
        try:
            os.unlink(abspath)
        except OSError:
            pass
        return "false"

    return "retry"

6

bin/handlers/ip-ok.py

Executable file


@@ -0,0 +1,6 @@

# disable permission checks and allow access if client-ip is 1.2.3.4


def main(cli, vn, rem):
    if cli.ip == "1.2.3.4":
        return "allow"

11

bin/handlers/never404.py

Executable file


@@ -0,0 +1,11 @@

# create a dummy file and let copyparty return it


def main(cli, vn, rem):
    print("hello", cli.ip)

    abspath = vn.canonical(rem)
    with open(abspath, "wb") as f:
        f.write(b"404? not on MY watch!")

    return "retry"

16

bin/handlers/nooo.py

Executable file


@@ -0,0 +1,16 @@
# reply with an endless "noooooooooooooooooooooooo"


def say_no():
    yield b"n"
    while True:
        yield b"o" * 4096


def main(cli, vn, rem):
    cli.send_headers(None, 404, "text/plain")

    for chunk in say_no():
        cli.s.sendall(chunk)

    return "false"

7

bin/handlers/sorry.py

Executable file

@@ -0,0 +1,7 @@
# sends a custom response instead of the usual 404


def main(cli, vn, rem):
    msg = f"sorry {cli.ip} but {cli.vpath} doesn't exist"

    return str(cli.reply(msg.encode("utf-8"), 404, "text/plain"))

@@ -37,6 +37,10 @@ def main():
    if "://" not in url:
        url = "https://" + url

    proto = url.split("://")[0].lower()
    if proto not in ("http", "https", "ftp", "ftps"):
        raise Exception("bad proto {}".format(proto))

    os.chdir(inf["ap"])

    name = url.split("?")[0].split("/")[-1]
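
The wget hook now defaults bare hostnames to https, rejects any scheme outside a small whitelist, and takes the filename from the last path segment. The sketch below implements the same checks as a standalone function built on `urllib.parse.urlsplit` in place of manual string splitting. The function name is hypothetical. There is one behavioral difference: `urlsplit` also strips a `#fragment`, which `url.split("?")[0]` keeps. The name is deliberately left percent-encoded, because decoding could turn `%2F` into a path separator.

```python
from urllib.parse import urlsplit

ALLOWED_PROTOS = ("http", "https", "ftp", "ftps")


def parse_download_url(url):
    """Return (url, proto, name), or raise ValueError for a disallowed scheme."""
    # bare hostnames like "example.com/file.iso" default to https
    if "://" not in url:
        url = "https://" + url

    parts = urlsplit(url)
    proto = parts.scheme.lower()  # urlsplit already lowercases; kept for clarity
    if proto not in ALLOWED_PROTOS:
        raise ValueError("bad proto {}".format(proto))

    # last path segment without query string or fragment; "" for ".../"
    name = parts.path.rsplit("/", 1)[-1]
    return url, proto, name
```

Note that an empty `name` (for example, a URL ending in `/`) is possible in both versions, so a caller may want to substitute a fallback filename.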

@@ -24,6 +24,15 @@ these do not have any problematic dependencies at all:
  * also available as an [event hook](../hooks/wget.py)


## dangerous plugins

plugins in this section should only be used with appropriate precautions:

* [very-bad-idea.py](./very-bad-idea.py) combined with [meadup.js](https://github.com/9001/copyparty/blob/hovudstraum/contrib/plugins/meadup.js) converts copyparty into a janky yet extremely flexible chromecast clone
  * also adds a virtual keyboard by @steinuil to the basic-upload tab for comfy couch crowd control
  * anything uploaded through the [android app](https://github.com/9001/party-up) (files or links) is executed on the server, meaning anyone can infect your PC with malware... so protect this with a password and keep it on a LAN!


# dependencies

run [`install-deps.sh`](install-deps.sh) to build/install most dependencies required by these programs (supports windows/linux/macos)

@@ -1,6 +1,11 @@
#!/usr/bin/env python3

"""
WARNING -- DANGEROUS PLUGIN --
if someone is able to upload files to a copyparty which is
running this plugin, they can execute malware on your machine
so please keep this on a LAN and protect it with a password

use copyparty as a chromecast replacement:
* post a URL and it will open in the default browser
* upload a file and it will open in the default application

@@ -10,16 +15,17 @@ use copyparty as a chromecast replacement:

the android app makes it a breeze to post pics and links:
https://github.com/9001/party-up/releases
(iOS devices have to rely on the web-UI)

goes without saying, but this is HELLA DANGEROUS,
GIVES RCE TO ANYONE WHO HAS UPLOAD PERMISSIONS
iOS devices can use the web-UI or the shortcut instead:
https://github.com/9001/copyparty#ios-shortcuts

example copyparty config to use this:
--urlform save,get -v.::w:c,e2d,e2t,mte=+a1:c,mtp=a1=ad,kn,c0,bin/mtag/very-bad-idea.py
example copyparty config to use this;
lets the user "kevin" with password "hunter2" use this plugin:
-a kevin:hunter2 --urlform save,get -v.::w,kevin:c,e2d,e2t,mte=+a1:c,mtp=a1=ad,kn,c0,bin/mtag/very-bad-idea.py

recommended deps:
apt install xdotool libnotify-bin
apt install xdotool libnotify-bin mpv
python3 -m pip install --user -U streamlink yt-dlp
https://github.com/9001/copyparty/blob/hovudstraum/contrib/plugins/meadup.js

and you probably want `twitter-unmute.user.js` from the res folder

@@ -63,8 +69,10 @@ set -e
EOF
chmod 755 /usr/local/bin/chromium-browser

# start the server (note: replace `-v.::rw:` with `-v.::w:` to disallow retrieving uploaded stuff)
cd ~/Downloads; python3 copyparty-sfx.py --urlform save,get -v.::rw:c,e2d,e2t,mte=+a1:c,mtp=a1=ad,kn,very-bad-idea.py
# start the server
# note 1: replace hunter2 with a better password to access the server
# note 2: replace `-v.::rw` with `-v.::w` to disallow retrieving uploaded stuff
cd ~/Downloads; python3 copyparty-sfx.py -a kevin:hunter2 --urlform save,get -v.::rw,kevin:c,e2d,e2t,mte=+a1:c,mtp=a1=ad,kn,very-bad-idea.py

"""

@@ -72,11 +80,23 @@ cd ~/Downloads; python3 copyparty-sfx.py --urlform save,get -v.::rw:c,e2d,e2t,mt
import os
import sys
import time
import shutil
import subprocess as sp
from urllib.parse import unquote_to_bytes as unquote
from urllib.parse import quote

have_mpv = shutil.which("mpv")
have_vlc = shutil.which("vlc")


def main():
    if len(sys.argv) > 2 and sys.argv[1] == "x":
        # invoked on commandline for testing;
        # python3 very-bad-idea.py x msg=https://youtu.be/dQw4w9WgXcQ
        txt = " ".join(sys.argv[2:])
        txt = quote(txt.replace(" ", "+"))
        return open_post(txt.encode("utf-8"))

    fp = os.path.abspath(sys.argv[1])
    with open(fp, "rb") as f:
        txt = f.read(4096)

@@ -92,7 +112,7 @@ def open_post(txt):
    try:
        k, v = txt.split(" ", 1)
    except:
        open_url(txt)
        return open_url(txt)

    if k == "key":
        sp.call(["xdotool", "key"] + v.split(" "))

@@ -128,6 +148,17 @@ def open_url(txt):
    # else:
    #     sp.call(["xdotool", "getactivewindow", "windowminimize"])  # minimizes the focused window

    # mpv is probably smart enough to use streamlink automatically
    if try_mpv(txt):
        print("mpv got it")
        return

    # or maybe streamlink would be a good choice to open this
    if try_streamlink(txt):
        print("streamlink got it")
        return

    # nope,
    # close any error messages:
    sp.call(["xdotool", "search", "--name", "Error", "windowclose"])
    # sp.call(["xdotool", "key", "ctrl+alt+d"])  # doesn't work at all

@@ -136,4 +167,39 @@ def open_url(txt):
    sp.call(["xdg-open", txt])


def try_mpv(url):
    t0 = time.time()
    try:
        print("trying mpv...")
        sp.check_call(["mpv", "--fs", url])
        return True
    except:
        # if it ran for 15 sec it probably succeeded and terminated
        t = time.time()
        return t - t0 > 15


def try_streamlink(url):
    t0 = time.time()
    try:
        import streamlink

        print("trying streamlink...")
        streamlink.Streamlink().resolve_url(url)

        if have_mpv:
            args = "-m streamlink -p mpv -a --fs"
        else:
            args = "-m streamlink"

        cmd = [sys.executable] + args.split() + [url, "best"]
        t0 = time.time()
        sp.check_call(cmd)
        return True
    except:
        # if it ran for 10 sec it probably succeeded and terminated
        t = time.time()
        return t - t0 > 10


main()
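
`try_mpv` and `try_streamlink` share one heuristic: a non-zero exit still counts as success if the player ran long enough, because the viewer probably watched something and then quit. The sketch below factors that pattern into a single helper. It deviates from the plugin in two deliberate ways. It uses `time.monotonic()`, which wall-clock adjustments do not affect. It also catches only `OSError` (such as a missing binary) and `CalledProcessError`, so that Ctrl-C is not swallowed by a bare `except`. The name `run_player` is hypothetical.

```python
import subprocess as sp
import sys
import time


def run_player(cmd, min_ok_secs):
    """Run cmd; return True if it exits 0, or if it failed only after
    running for more than min_ok_secs seconds."""
    t0 = time.monotonic()
    try:
        sp.check_call(cmd)
        return True
    except (OSError, sp.CalledProcessError):
        # a quick failure means the player could not handle the URL;
        # a slow one probably means it played and was then closed
        return time.monotonic() - t0 > min_ok_secs
```

With this helper, `try_mpv(url)` would reduce to `run_player(["mpv", "--fs", url], 15)`. `try_streamlink` would keep its `resolve_url` probe and then call `run_player(cmd, 10)`.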

@@ -65,6 +65,10 @@ def main():
    if "://" not in url:
        url = "https://" + url

    proto = url.split("://")[0].lower()
    if proto not in ("http", "https", "ftp", "ftps"):
        raise Exception("bad proto {}".format(proto))

    os.chdir(fdir)

    name = url.split("?")[0].split("/")[-1]

23

bin/u2c.py

@@ -1,8 +1,8 @@
#!/usr/bin/env python3
from __future__ import print_function, unicode_literals

S_VERSION = "1.9"
S_BUILD_DT = "2023-05-07"
S_VERSION = "1.10"
S_BUILD_DT = "2023-08-15"

"""
u2c.py: upload to copyparty

@@ -14,6 +14,7 @@ https://github.com/9001/copyparty/blob/hovudstraum/bin/u2c.py
- if something breaks just try again and it'll autoresume
"""

import re
import os
import sys
import stat

@@ -39,7 +40,7 @@ except:

try:
    import requests
except ImportError:
except ImportError as ex:
    if EXE:
        raise
    elif sys.version_info > (2, 7):

@@ -50,7 +51,7 @@ except ImportError:
    m = "\n ERROR: need these:\n" + "\n".join(m) + "\n"
    m += "\n for f in *.whl; do unzip $f; done; rm -r *.dist-info\n"

    print(m.format(sys.executable))
    print(m.format(sys.executable), "\nspecifically,", ex)
    sys.exit(1)

@@ -411,10 +412,11 @@ def walkdir(err, top, seen):
                err.append((ap, str(ex)))


def walkdirs(err, tops):
def walkdirs(err, tops, excl):
    """recursive statdir for a list of tops, yields [top, relpath, stat]"""
    sep = "{0}".format(os.sep).encode("ascii")
    if not VT100:
        excl = excl.replace("/", r"\\")
        za = []
        for td in tops:
            try:

@@ -431,6 +433,8 @@ def walkdirs(err, tops):
        za = [x.replace(b"/", b"\\") for x in za]
        tops = za

    ptn = re.compile(excl.encode("utf-8") or b"\n")

    for top in tops:
        isdir = os.path.isdir(top)
        if top[-1:] == sep:

@@ -443,6 +447,8 @@ def walkdirs(err, tops):

        if isdir:
            for ap, inf in walkdir(err, top, []):
                if ptn.match(ap):
                    continue
                yield stop, ap[len(stop) :].lstrip(sep), inf
        else:
            d, n = top.rsplit(sep, 1)

@@ -654,7 +660,7 @@ class Ctl(object):
        nfiles = 0
        nbytes = 0
        err = []
        for _, _, inf in walkdirs(err, ar.files):
        for _, _, inf in walkdirs(err, ar.files, ar.x):
            if stat.S_ISDIR(inf.st_mode):
                continue

@@ -696,7 +702,7 @@ class Ctl(object):
        if ar.te:
            req_ses.verify = ar.te

        self.filegen = walkdirs([], ar.files)
        self.filegen = walkdirs([], ar.files, ar.x)
        self.recheck = [] # type: list[File]

        if ar.safe:

@@ -1097,6 +1103,7 @@ source file/folder selection uses rsync syntax, meaning that:
    ap.add_argument("-v", action="store_true", help="verbose")
    ap.add_argument("-a", metavar="PASSWORD", help="password or $filepath")
    ap.add_argument("-s", action="store_true", help="file-search (disables upload)")
    ap.add_argument("-x", type=unicode, metavar="REGEX", default="", help="skip file if filesystem-abspath matches REGEX, example: '.*/\.hist/.*'")
    ap.add_argument("--ok", action="store_true", help="continue even if some local files are inaccessible")
    ap.add_argument("--version", action="store_true", help="show version and exit")
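
The new `-x` option is compiled once in `walkdirs` and tested against each absolute path with `ptn.match`. Because `re.match` is anchored at the start of the path, the help text's example begins with `.*`. The sketch below reproduces that filtering on its own, including the `or b"\n"` fallback that stops an empty pattern from matching (and thereby excluding) every file. The function names are hypothetical.

```python
import re


def make_excluder(excl):
    # an empty regex would match every path, so fall back to b"\n":
    # re.match() anchors at the start, and paths practically never begin
    # with a newline, so nothing gets excluded by default
    return re.compile(excl.encode("utf-8") or b"\n")


def filter_paths(paths, excl):
    """Keep the bytes-paths whose start does not match the -x REGEX."""
    ptn = make_excluder(excl)
    return [p for p in paths if not ptn.match(p)]
```

A pattern like `\.hist` excludes nothing here because it would have to match at the very first byte. Use `.*/\.hist/.*` (or `re.search` semantics via a leading `.*`) to catch it anywhere in the path.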

@@ -1113,7 +1120,7 @@ source file/folder selection uses rsync syntax, meaning that:
    ap.add_argument("-j", type=int, metavar="THREADS", default=4, help="parallel connections")
    ap.add_argument("-J", type=int, metavar="THREADS", default=hcores, help="num cpu-cores to use for hashing; set 0 or 1 for single-core hashing")
    ap.add_argument("-nh", action="store_true", help="disable hashing while uploading")
    ap.add_argument("-ns", action="store_true", help="no status panel (for slow consoles)")
    ap.add_argument("-ns", action="store_true", help="no status panel (for slow consoles and macos)")
    ap.add_argument("--safe", action="store_true", help="use simple fallback approach")
    ap.add_argument("-z", action="store_true", help="ZOOMIN' (skip uploading files if they exist at the destination with the ~same last-modified timestamp, so same as yolo / turbo with date-chk but even faster)")

@@ -1,14 +1,44 @@
#!/bin/bash
set -e

cat >/dev/null <<'EOF'

NOTE: copyparty is now able to do this automatically;
however you may wish to use this script instead if
you have specific needs (or if copyparty breaks)

this script generates a new self-signed TLS certificate and
replaces the default insecure one that comes with copyparty

as it is trivial to impersonate a copyparty server using the
default certificate, it is highly recommended to do this

this will create a self-signed CA, and a Server certificate
which gets signed by that CA -- you can run it multiple times
with different server-FQDNs / IPs to create additional certs
for all your different servers / (non-)copyparty services

EOF


# ca-name and server-fqdn
ca_name="$1"
srv_fqdn="$2"

[ -z "$srv_fqdn" ] && {
    echo "need arg 1: ca name"
    echo "need arg 2: server fqdn and/or IPs, comma-separated"
    echo "optional arg 3: if set, write cert into copyparty cfg"
[ -z "$srv_fqdn" ] && { cat <<'EOF'
need arg 1: ca name
need arg 2: server fqdn and/or IPs, comma-separated
optional arg 3: if set, write cert into copyparty cfg

example:
./cfssl.sh PartyCo partybox.local y
EOF
    exit 1
}


command -v cfssljson 2>/dev/null || {
    echo please install cfssl and try again
    exit 1
}

@@ -59,12 +89,14 @@ show() {
}
show ca.pem
show "$srv_fqdn.pem"

echo
echo "successfully generated new certificates"

# write cert into copyparty config
[ -z "$3" ] || {
    mkdir -p ~/.config/copyparty
    cat "$srv_fqdn".{key,pem} ca.pem >~/.config/copyparty/cert.pem
    echo "successfully replaced copyparty certificate"
}

@@ -34,6 +34,8 @@ server {
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        # NOTE: with cloudflare you want this instead:
        #proxy_set_header X-Forwarded-For $http_cf_connecting_ip;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Connection "Keep-Alive";
    }

@@ -138,6 +138,7 @@ in {
"d" (delete): permanently delete files and folders
"g" (get): download files, but cannot see folder contents
"G" (upget): "get", but can see filekeys of their own uploads
"a" (admin): can see uploader IPs, config-reload

For example: "rwmd"

@@ -1,6 +1,6 @@
# Maintainer: icxes <dev.null@need.moe>
pkgname=copyparty
pkgver="1.7.1"
pkgver="1.9.3"
pkgrel=1
pkgdesc="Portable file sharing hub"
arch=("any")

@@ -15,11 +15,12 @@ optdepends=("ffmpeg: thumbnails for videos, images (slower) and audio, music tag
            "libkeyfinder-git: detection of musical keys"
            "qm-vamp-plugins: BPM detection"
            "python-pyopenssl: ftps functionality"
            "python-argon2_cffi: hashed passwords in config"
            "python-impacket-git: smb support (bad idea)"
)
source=("https://github.com/9001/${pkgname}/releases/download/v${pkgver}/${pkgname}-${pkgver}.tar.gz")
backup=("etc/${pkgname}.d/init" )
sha256sums=("b369966e65a0c0f622b5dea231e3e62649d3b6ef3074c1b2a88335e48e024604")
sha256sums=("87db55a57adf14b3b875c72d94b5df67560abc6dbfc104104e0c76d7f02848b6")

build() {
    cd "${srcdir}/${pkgname}-${pkgver}"

@@ -1,4 +1,7 @@
{ lib, stdenv, makeWrapper, fetchurl, utillinux, python, jinja2, impacket, pyftpdlib, pyopenssl, pillow, pyvips, ffmpeg, mutagen,
{ lib, stdenv, makeWrapper, fetchurl, utillinux, python, jinja2, impacket, pyftpdlib, pyopenssl, argon2-cffi, pillow, pyvips, ffmpeg, mutagen,

# use argon2id-hashed passwords in config files (sha2 is always available)
withHashedPasswords ? true,

# create thumbnails with Pillow; faster than FFmpeg / MediaProcessing
withThumbnails ? true,
@@ -35,6 +38,7 @@ let
    ++ lib.optional withFastThumbnails pyvips
    ++ lib.optional withMediaProcessing ffmpeg
    ++ lib.optional withBasicAudioMetadata mutagen
    ++ lib.optional withHashedPasswords argon2-cffi
  );
in stdenv.mkDerivation {
  pname = "copyparty";

@@ -1,5 +1,5 @@
{
    "url": "https://github.com/9001/copyparty/releases/download/v1.7.1/copyparty-sfx.py",
    "version": "1.7.1",
    "hash": "sha256-Mg3z5DkOhQc58bbjkEKo/UiLehghIYjR66LcYHqlm1o="
    "url": "https://github.com/9001/copyparty/releases/download/v1.9.3/copyparty-sfx.py",
    "version": "1.9.3",
    "hash": "sha256-ufT7WARaj6nKaLX/r3X/ex/hMLMh1rtG0lkZHCm4Gu4="
}
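
The packaging bumps store checksums in two encodings. The nix JSON `"hash"` field is `sha256-` followed by the base64 of the raw 32-byte digest (the SRI form). The PKGBUILD `sha256sums` entry is the same kind of digest written as lowercase hex. The sketch below produces either form from a downloaded file when preparing a version bump. The function names are hypothetical, and the sketch relies only on `hashlib` and `base64`.

```python
import base64
import hashlib


def sha256_digest(path, bufsize=64 * 1024):
    """Hash a file in chunks so large release archives need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for buf in iter(lambda: f.read(bufsize), b""):
            h.update(buf)
    return h.digest()


def as_hex(digest):
    # format used by PKGBUILD sha256sums=(...)
    return digest.hex()


def as_sri(digest):
    # format used by the nix "hash" field: "sha256-" + base64(raw digest)
    return "sha256-" + base64.b64encode(digest).decode("ascii")
```

For example, `as_sri(sha256_digest("copyparty-sfx.py"))` would give the value for the JSON, and `as_hex(sha256_digest("copyparty-1.9.3.tar.gz"))` the value for the PKGBUILD.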

@@ -1,3 +1,6 @@
# NOTE: this is now a built-in feature in copyparty
# but you may still want this if you have specific needs
#
# systemd service which generates a new TLS certificate on each boot,
# that way the one-year expiry time won't cause any issues --
# just have everyone trust the ca.pem once every 10 years

@@ -22,6 +22,7 @@
# add '-i 127.0.0.1' to only allow local connections
# add '-e2dsa' to enable filesystem scanning + indexing
# add '-e2ts' to enable metadata indexing
# remove '--ansi' to disable colored logs
#
# with `Type=notify`, copyparty will signal systemd when it is ready to
# accept connections; correctly delaying units depending on copyparty.

@@ -59,7 +60,7 @@ ExecStartPre=+nft add rule ip nat prerouting tcp dport 443 redirect to :3923
ExecStartPre=+/bin/bash -c 'mkdir -p /run/tmpfiles.d/ && echo "x /tmp/pe-copyparty*" > /run/tmpfiles.d/copyparty.conf'

# copyparty settings
ExecStart=/usr/bin/python3 /usr/local/bin/copyparty-sfx.py -e2d -v /mnt::rw
ExecStart=/usr/bin/python3 /usr/local/bin/copyparty-sfx.py --ansi -e2d -v /mnt::rw

[Install]
WantedBy=multi-user.target

@@ -6,6 +6,10 @@ import platform
import sys
import time

# fmt: off
_:tuple[int,int]=(0,0) # _____________________________________________________________________ hey there! if you are reading this, your python is too old to run copyparty without some help. Please use https://github.com/9001/copyparty/releases/latest/download/copyparty-sfx.py or the pypi package instead, or see https://github.com/9001/copyparty/blob/hovudstraum/docs/devnotes.md#building if you want to build it yourself :-) ************************************************************************************************************************************************
# fmt: on

try:
    from typing import TYPE_CHECKING
except:

@@ -27,7 +31,12 @@ WINDOWS: Any = (
    else False
)

VT100 = not WINDOWS or WINDOWS >= [10, 0, 14393]
VT100 = "--ansi" in sys.argv or (
    os.environ.get("NO_COLOR", "").lower() in ("", "0", "false")
    and sys.stdout.isatty()
    and "--no-ansi" not in sys.argv
    and (not WINDOWS or WINDOWS >= [10, 0, 14393])
)
# introduced in anniversary update

ANYWIN = WINDOWS or sys.platform in ["msys", "cygwin"]
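
The new `VT100` expression works as follows. `--ansi` always forces colors. Otherwise, colors are used only when all of these hold:

- `NO_COLOR` is unset, empty, `0`, or `false`.
- stdout is a terminal.
- `--no-ansi` was not given.
- Windows, if present, is at least build 10.0.14393 (the anniversary update).

The sketch below expresses that decision as a pure function so each case can be checked in isolation. `winver` stands in for the `WINDOWS` list (`None` meaning "not Windows"), and the function name is hypothetical. Accepting `0`/`false` as "colors allowed" mirrors copyparty's expression. The no-color.org convention, by contrast, treats any non-empty `NO_COLOR` value as "disable".

```python
def want_color(argv, environ, isatty, winver=None):
    """Mirror copyparty's VT100 decision; winver is None or [major, minor, build]."""
    if "--ansi" in argv:
        return True  # explicit opt-in wins over everything else
    return (
        environ.get("NO_COLOR", "").lower() in ("", "0", "false")
        and isatty
        and "--no-ansi" not in argv
        and (winver is None or winver >= [10, 0, 14393])
    )
```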

@@ -10,11 +10,9 @@ __url__ = "https://github.com/9001/copyparty/"

import argparse
import base64
import filecmp
import locale
import os
import re
import shutil
import socket
import sys
import threading

@@ -242,6 +240,37 @@ def get_srvname() -> str:
    return ret


def get_fk_salt(cert_path) -> str:
    fp = os.path.join(E.cfg, "fk-salt.txt")
    try:
        with open(fp, "rb") as f:
            ret = f.read().strip()
    except:
        if os.path.exists(cert_path):
            zi = os.path.getmtime(cert_path)
            ret = "{}".format(zi).encode("utf-8")
        else:
            ret = base64.b64encode(os.urandom(18))

        with open(fp, "wb") as f:
            f.write(ret + b"\n")

    return ret.decode("utf-8")


def get_ah_salt() -> str:
    fp = os.path.join(E.cfg, "ah-salt.txt")
    try:
        with open(fp, "rb") as f:
            ret = f.read().strip()
    except:
        ret = base64.b64encode(os.urandom(18))
        with open(fp, "wb") as f:
            f.write(ret + b"\n")

    return ret.decode("utf-8")


def ensure_locale() -> None:
    safe = "en_US.UTF-8"
    for x in [
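
`get_ah_salt` (and the random branch of `get_fk_salt`) uses a load-or-create pattern. It reads the salt file if it exists. Otherwise it generates 18 random bytes, base64-encodes them into 24 characters, and persists the result so the salt stays stable across restarts. The sketch below is a standalone version that takes the path as a parameter, since `E.cfg` only exists inside copyparty. It narrows the bare `except` to `FileNotFoundError`, so a permission problem surfaces instead of silently producing a new salt. The name `load_or_create_salt` is hypothetical.

```python
import base64
import os


def load_or_create_salt(fp: str) -> str:
    """Return the salt stored in fp, creating it on first use."""
    try:
        with open(fp, "rb") as f:
            ret = f.read().strip()
    except FileNotFoundError:
        # 18 random bytes -> exactly 24 base64 characters, no "=" padding
        ret = base64.b64encode(os.urandom(18))
        with open(fp, "wb") as f:
            f.write(ret + b"\n")
    return ret.decode("utf-8")
```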

@@ -261,45 +290,22 @@ def ensure_locale() -> None:
            warn(t.format(safe))


def ensure_cert(al: argparse.Namespace) -> None:
def ensure_webdeps() -> None:
    ap = os.path.join(E.mod, "web/deps/mini-fa.woff")
    if os.path.exists(ap):
        return

    warn(
        """could not find webdeps;
if you are running the sfx, or exe, or pypi package, or docker image,
then this is a bug! Please let me know so I can fix it, thanks :-)
https://github.com/9001/copyparty/issues/new?labels=bug&template=bug_report.md

however, if you are a dev, or running copyparty from source, and you want
full client functionality, you will need to build or obtain the webdeps:
https://github.com/9001/copyparty/blob/hovudstraum/docs/devnotes.md#building
"""
the default cert (and the entire TLS support) is only here to enable the
crypto.subtle javascript API, which is necessary due to the webkit guys
being massive memers (https://www.chromium.org/blink/webcrypto)

i feel awful about this and so should they
    """
    cert_insec = os.path.join(E.mod, "res/insecure.pem")
    cert_appdata = os.path.join(E.cfg, "cert.pem")
    if not os.path.isfile(al.cert):
        if cert_appdata != al.cert:
            raise Exception("certificate file does not exist: " + al.cert)

        shutil.copy(cert_insec, al.cert)

    with open(al.cert, "rb") as f:
        buf = f.read()
        o1 = buf.find(b" PRIVATE KEY-")
        o2 = buf.find(b" CERTIFICATE-")
        m = "unsupported certificate format: "
        if o1 < 0:
            raise Exception(m + "no private key inside pem")
        if o2 < 0:
            raise Exception(m + "no server certificate inside pem")
        if o1 > o2:
            raise Exception(m + "private key must appear before server certificate")

    try:
        if filecmp.cmp(al.cert, cert_insec):
            lprint(
                "\033[33musing default TLS certificate; https will be insecure."
                + "\033[36m\ncertificate location: {}\033[0m\n".format(al.cert)
            )
    except:
        pass

    # speaking of the default cert,
    # printf 'NO\n.\n.\n.\n.\ncopyparty-insecure\n.\n' | faketime '2000-01-01 00:00:00' openssl req -x509 -sha256 -newkey rsa:2048 -keyout insecure.pem -out insecure.pem -days $((($(printf %d 0x7fffffff)-$(date +%s --date=2000-01-01T00:00:00Z))/(60*60*24))) -nodes && ls -al insecure.pem && openssl x509 -in insecure.pem -text -noout
    )


def configure_ssl_ver(al: argparse.Namespace) -> None:
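
The `ensure_cert()` removed from `__main__.py` in this hunk accepted a PEM only if it contained a private key, contained a certificate, and had the key first. `cfssl.sh` writes `"$srv_fqdn".{key,pem} ca.pem` in exactly that order. The sketch below performs the same check as a standalone function that raises `ValueError` instead of a bare `Exception`, which is useful for validating a hand-assembled `cert.pem` before starting the server. The function name is hypothetical.

```python
def check_pem_order(buf: bytes) -> None:
    """Raise ValueError unless buf holds a private key followed by a certificate."""
    m = "unsupported certificate format: "
    # the leading space matches "BEGIN PRIVATE KEY" as well as "BEGIN RSA PRIVATE KEY"
    o1 = buf.find(b" PRIVATE KEY-")
    o2 = buf.find(b" CERTIFICATE-")
    if o1 < 0:
        raise ValueError(m + "no private key inside pem")
    if o2 < 0:
        raise ValueError(m + "no server certificate inside pem")
    if o1 > o2:
        raise ValueError(m + "private key must appear before server certificate")
```

Usage: `check_pem_order(open(os.path.expanduser("~/.config/copyparty/cert.pem"), "rb").read())`.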

@@ -486,6 +492,7 @@ def get_sects():
"d" (delete): permanently delete files and folders
"g" (get): download files, but cannot see folder contents
"G" (upget): "get", but can see filekeys of their own uploads
"a" (admin): can see uploader IPs, config-reload

too many volflags to list here, see --help-flags

@@ -522,6 +529,50 @@ def get_sects():
            ).rstrip()
            + build_flags_desc(),
        ],
        [
            "handlers",
            "use plugins to handle certain events",
            dedent(
                """
usually copyparty returns a \033[33m404\033[0m if a file does not exist, and
\033[33m403\033[0m if a user tries to access a file they don't have access to

you can load a plugin which will be invoked right before this
happens, and the plugin can choose to override this behavior

load the plugin using --args or volflags; for example \033[36m
--on404 ~/partyhandlers/not404.py
-v .::r:c,on404=~/partyhandlers/not404.py
\033[0m
the file must define the function \033[35mmain(cli,vn,rem)\033[0m:
\033[35mcli\033[0m: the copyparty HttpCli instance
\033[35mvn\033[0m: the VFS which overlaps with the requested URL
\033[35mrem\033[0m: the remainder of the URL below the VFS mountpoint

`main` must return a string; one of the following:

> \033[32m"true"\033[0m: the plugin has responded to the request,
and the TCP connection should be kept open

> \033[32m"false"\033[0m: the plugin has responded to the request,
and the TCP connection should be terminated

> \033[32m"retry"\033[0m: the plugin has done something to resolve the 404
situation, and copyparty should reattempt reading the file.
if it still fails, a regular 404 will be returned

> \033[32m"allow"\033[0m: copyparty should ignore the insufficient permissions
and let the client continue anyway

> \033[32m""\033[0m: the plugin has not handled the request;
try the next plugin or return the usual 404 or 403

\033[1;35mPS!\033[0m the folder that contains the python file should ideally
not contain many other python files, and especially nothing
with filenames that overlap with modules used by copyparty
"""
            ),
        ],
        [
            "hooks",
            "execute commands before/after various events",
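
The handler help text lists the strings `main` may return. The sketch below is a minimal on404 handler that combines two of them. It falls through with `""` for anything it does not want to handle. For missing `.txt` files, it creates the file and returns `"retry"`, in the same way as `never404.py`. It assumes `vn.canonical(rem)` maps the URL remainder to a filesystem path, as `never404.py` uses it. The `.txt` rule and the placeholder contents are made-up examples.

```python
import os


def main(cli, vn, rem):
    # only handle missing .txt files; everything else falls through ("")
    # so the next plugin runs, or copyparty replies with its usual 404
    if not rem.lower().endswith(".txt"):
        return ""

    # assumption: vn.canonical(rem) gives the filesystem path, as in never404.py
    abspath = vn.canonical(rem)
    os.makedirs(os.path.dirname(abspath), exist_ok=True)
    with open(abspath, "wb") as f:
        f.write(b"this file was created on demand\n")

    # ask copyparty to try reading the file again
    return "retry"
```

It would be loaded like the examples above, e.g. `--on404 ~/partyhandlers/txt404.py` (the filename is arbitrary).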

@@ -536,6 +587,7 @@ def get_sects():
\033[36mxbd\033[35m executes CMD before a file delete
\033[36mxad\033[35m executes CMD after a file delete
\033[36mxm\033[35m executes CMD on message